Once upon a time, I was a loser and a college student. Back in high school, our teacher gave us a scare and said... People call that a white lie, but I foolishly believed it. I worked hard for a while in my senior year, but fate likes to play tricks on people, and the college entrance examination landed me in a second-tier university. So I said goodbye to my hometown and arrived at a campus in the middle of nowhere, the kind of place where birds won't lay eggs but will still poop. I spent my freshman year in a haze: I hardly set foot outside and stayed in the dormitory playing games for the better part of half a year. Just like that, the best days of college slipped away from me, and they are hard to look back on. A year passed and I became a sophomore. Not willing to fall behind, I listened hard in class; after class I played ball with my classmates or went to the library to study HTML, CSS, JavaScript, and Java. I lived a fixed daily routine and won two scholarships that year. Now I am a junior. Time keeps passing, and I am still a loser, but a hard-working one, and I like to crawl all kinds of websites to satisfy my little vanity... Okay, the time for nonsense is over; it's time to write code.

Forgive me for my nonsense. Let's start with today's topic, HTML parsing and web crawling.

What are HTML and a web crawler?

I won't go into detail about what HTML is, but what is a web crawler? Are you picturing a bug crawling across the Internet? Haha, that is too weak. As mentioned in the nonsense above, I like to crawl all kinds of websites: I have crawled my school's official website and academic management system, crawled various IT websites, and built a simple news client out of the results. A web crawler is a program or script that automatically fetches information from the World Wide Web; put another way, it is a program that collects a website's data dynamically.

How do we parse HTML?

Here we use jsoup, a powerful Java library for parsing HTML. With jsoup, HTML parsing becomes easy, and your code goes from shabby to classy in an instant.

Why parse HTML?

We all know that there are three commonly used formats for transmitting data over the network: XML, JSON, and HTML. When our client sends a request, the server usually returns data in one of these three formats. If you are an independent developer without a server of your own, your application can still get the data it needs by crawling other people's websites and parsing their HTML. Of course, getting data this way is not recommended and comes with many limitations: the website may restrict access, the pages can be hard to parse, and so on. After reading this article, though, the parsing part should no longer be hard, haha.

Introduction to jsoup:

jsoup is a Java HTML parser that can directly parse a URL or a string of HTML. It provides a very convenient API for extracting and manipulating data using DOM traversal, CSS selectors, and jQuery-like methods.

Main functions:

Parse HTML from a URL, file, or string;

Use DOM traversal or CSS selectors to find and extract data;

Manipulate HTML elements, attributes, and text.
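As a rough illustration of these three functions, here is a minimal sketch that parses an HTML string, finds an element with a CSS selector, and then modifies it. The markup, the base URL http://example.com/, and the class name JsoupBasics are made up for this example; only the jsoup calls themselves (Jsoup.parse, select, first, text, attr, absUrl) come from the library.

```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;

class JsoupBasics {

    // Hypothetical markup, invented for this example only.
    static final String HTML =
            "<html><head><title>Demo</title></head><body>"
            + "<div class=\"news\"><a href=\"/a/1.htm\">First story</a></div>"
            + "</body></html>";

    // 1. Parse: build a Document from an HTML string.
    //    The base URL is an assumption; it lets jsoup resolve relative links.
    static Document parse() {
        return Jsoup.parse(HTML, "http://example.com/");
    }

    // 2. Find: the first link inside the element with class "news".
    static Element findLink(Document doc) {
        return doc.select("div.news a").first();
    }

    public static void main(String[] args) {
        Document doc = parse();
        Element link = findLink(doc);

        System.out.println(doc.title());         // prints "Demo"
        System.out.println(link.text());         // prints "First story"
        System.out.println(link.attr("href"));   // prints "/a/1.htm"
        System.out.println(link.absUrl("href")); // prints "http://example.com/a/1.htm"

        // 3. Manipulate: set an attribute and replace the link text.
        link.attr("target", "_blank").text("Renamed story");
        System.out.println(link.outerHtml());
    }
}
```

Passing a base URL to Jsoup.parse() is optional, but without one absUrl() has nothing to resolve relative links against.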

Jar package download (two methods):

Download the latest version from the official website: http://jsoup.org/download

jsoup-1.8.3.jar (jar, doc and source code)

For more information about jsoup, check the official website: http://jsoup.org

Create a new Android project (with the encoding set to UTF-8), add the downloaded jsoup jar to the project's libs directory, and add it to the build path. Since we don't plan to build a complete application here, the development tool is Eclipse, which we are more familiar with and which keeps things simple. You don't need Android Studio (AS), although it works the same way.

As test data, let’s crawl this website: http://it.ithome.com/

When you visit this website, the latest page looks like the following. Its articles are constantly updated, of course; this is (part of) the page as it looked when this article was written:

Our task is to capture the relevant information of the article, including:

The image URL on the left side of the article

The title of the article (title)

The summary of the article's content (summary)

The keyword tags at the bottom (tags)

The publication time in the upper right corner (postime)

As shown below:

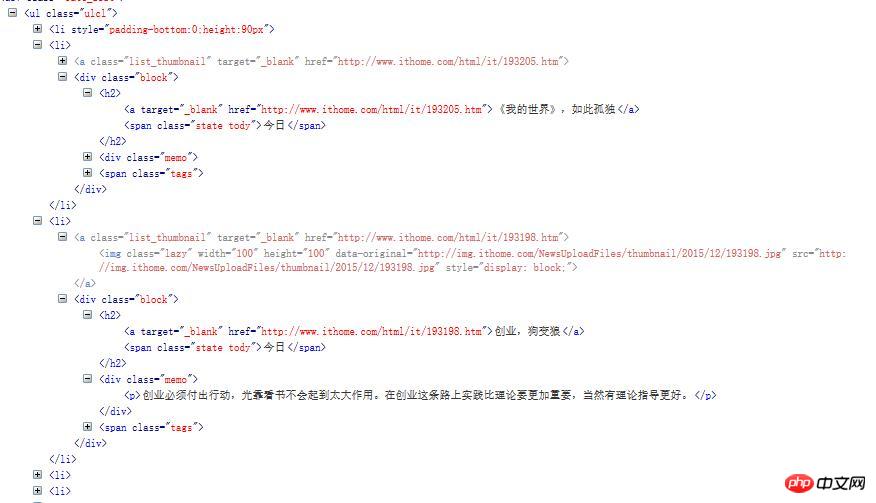

OK, now that we know what information we want to capture, we open the page in the browser debugging tool Firebug and inspect its source to find the part that holds the data we care about:

Except for the first li in this ul, which is not the data we want, every li holds the information for one article. Pick any two of them and take a look.

Now we can write the parsing code.

Step 1: Create a new JavaBean, Article.java

package com.jxust.lt.htmlparse;

/**
 * Article bean
 * @author lt
 */
public class Article {

    private String title;    // title
    private String summary;  // summary of the article's content
    private String imageUrl; // image URL
    private String tags;     // keywords
    private String postime;  // publication time

    // setter...
    // getter...

    @Override
    public String toString() {
        return "Article [title=" + title + ", summary=" + summary
                + ", imageUrl=" + imageUrl + ", tags=" + tags + ", postime="
                + postime + "]";
    }
}

Step 2: Create a new utility class, HtmlParseUtil.java, and write a method that connects to the network and parses the returned HTML page:

// Imports needed: java.util.ArrayList, java.util.List, org.jsoup.Connection, org.jsoup.Jsoup,
// org.jsoup.nodes.Document, org.jsoup.nodes.Element, org.jsoup.select.Elements

/**
 * Requests the page over the network and returns the list of articles parsed from it
 * @param url the website URL
 */
public static List<Article> getArticles(String url){
    List<Article> articles = new ArrayList<Article>();
    Connection conn = Jsoup.connect(url);
    try {
        // 10-second timeout; send a GET request (POST is also possible)
        Document doc = conn.timeout(10000).get();
        // 1. Keep only the data we care about, using a CSS class selector
        Element ul = doc.select(".ulcl").get(0);
        // 2. Get all the li elements; the ones that are not article entries are skipped below
        Elements lis = ul.getElementsByTag("li");
        for(int i=1;i<lis.size();i++){ // Firebug shows that the first li on this page is not the type we want, so start from 1
            Article article = new Article();
            Element li = lis.get(i);
            // Data 1: the image URL, read from the src attribute of the img tag
            Element img = li.getElementsByTag("img").first();
            // Get an attribute value; the argument is the attribute name
            String imageUrl = img.attr("src");
            // Data 2: the article title
            Element h2 = li.getElementsByTag("h2").first();
            // The text of the first a tag under the h2 element is the title
            String title = h2.getElementsByTag("a").first().text();
            // Data 3: the publication time, which is the text of the first span tag under the h2 element
            String postime = h2.getElementsByTag("span").first().text();
            // Data 4: the article summary, which is the text of the first p tag under the li
            String summary = li.getElementsByTag("p").first().text();
            // Data 5: the keywords, which are the texts of all a tags under the first element with class "tags" in the li
            Element tagsSpan = li.getElementsByClass("tags").first();
            Elements tags = tagsSpan.getElementsByTag("a");
            String key = "";
            for(Element tag : tags){
                key+=","+tag.text();
            }
            // Remove the leading "," from key
            key = key.replaceFirst(",", "");
            article.setTitle(title);
            article.setSummary(summary);
            article.setImageUrl(imageUrl);
            article.setPostime(postime);
            article.setTags(key);
            articles.add(article);
        }
    } catch (Exception ex) {
        ex.printStackTrace();
    }
    return articles;
}

Add the network permission to the manifest file:

<uses-permission android:name="android.permission.INTERNET"/>

Note: After requesting the network we get a Document object (be careful not to import the wrong class; it is the one under the jsoup package). The select() method then picks the ul element with class ulcl; there is only one element with class ulcl on this page. The first li under the ul is not what we want, so we skip it and then go through every other li; each li element holds the information for one article. The important methods are:

Document.select(String cssQuery): returns the set of elements (Elements) that match a CSS selector

Element.getElementsByTag(String tagName): returns the elements with the given tag name
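One small detail in the loop above: the keyword string is built by prepending a comma to every tag and then stripping the first comma with replaceFirst(). That works, but the separator can also be added only between items. Below is a minimal sketch of that alternative; TagJoiner and joinTags are hypothetical names, and the tag texts are placeholders rather than real data from the site.

```java
import java.util.Arrays;
import java.util.List;

class TagJoiner {

    // Joins the tag texts with commas. The separator is appended only
    // before the second and later items, so no leading comma is produced.
    static String joinTags(List<String> tagTexts) {
        StringBuilder sb = new StringBuilder();
        boolean first = true;
        for (String text : tagTexts) {
            if (!first) {
                sb.append(',');
            }
            sb.append(text);
            first = false;
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Placeholder tag texts, not taken from the real page.
        System.out.println(joinTags(Arrays.asList("tagA", "tagB", "tagC"))); // prints "tagA,tagB,tagC"
    }
}
```

Inside getArticles() the same idea would apply directly to the loop over tags, appending tag.text() instead of a list item.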

With some experience, select() and getElementsByTag() should be easy to understand; for more methods, see the documentation on the jsoup official website.

Step 3: Test the parsing results:

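We use Android's unit testing framework. Add the following to the manifest file (the instrumentation element goes directly under manifest, and uses-library goes inside application):

<instrumentation android:targetPackage="com.jxust.lt.htmlparse" android:name="android.test.InstrumentationTestRunner"></instrumentation>

<uses-library android:name="android.test.runner"/>

Create a new test class HtmlParseTest.java that extends AndroidTestCase, and write a test method: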

public void testParseHtml(){
    List<Article> articles = HtmlParseUtil.getArticles(url);
    for(int i=0;i<articles.size();i++){
        Log.e("result"+i, articles.get(i).toString());
    }
}

The value of url here is: "http://it.ithome.com/"

Start the emulator and run the test method with Run As > Android JUnit Test.

Log output results:

...

...

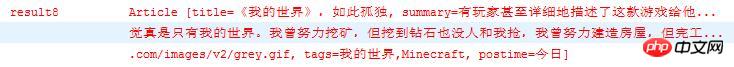

You can see that we got 20 records. Let's take a look at one of them:


You can see that all five pieces of data we care about (the article title, summary, image URL, keywords, and publication time) have been parsed out. That is the end of the HTML parsing. Now that we have the data, we can display it in a ListView. (I won't show that here; it is very simple and takes just one layout and one adapter. If you don't understand, feel free to ask.) In this way I can write my own news client for the website, grab all the data I want, and experience the joy of using other people's data for my own purposes, haha.

To summarize:

The steps for parsing HTML with jsoup:

Get the Document object, in one of two ways:

Send a GET or POST request with jsoup, which returns a Document object

Convert an HTML string into a Document object with the Jsoup.parse() method

Use Document.select() for a first pass at filtering the data

Use the Element methods to pick out the data we want
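The steps above can be sketched against a small inline fragment instead of a live page. The fragment below is hypothetical: it only imitates the structure described earlier (a ul with class ulcl whose first li is not an article), and the real page's markup may differ. The class and method names are also made up. The sketch checks for null at each lookup, since first() returns null when nothing matches.

```java
import java.util.ArrayList;
import java.util.List;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

class ListParseSketch {

    // Hypothetical fragment imitating the described list structure.
    static final String HTML =
            "<ul class=\"ulcl\">"
            + "<li>header row, not an article</li>"
            + "<li><img src=\"a.jpg\"><h2><a href=\"#\">Title A</a><span>10:00</span></h2>"
            + "<p>Summary A</p></li>"
            + "<li><h2><a href=\"#\">Title B</a></h2></li>"
            + "<li><p>broken entry without a title</p></li>"
            + "</ul>";

    static List<String> parseTitles(String html) {
        List<String> titles = new ArrayList<String>();

        // Step 1: get the Document, here from a string via Jsoup.parse().
        Document doc = Jsoup.parse(html);

        // Step 2: first-pass filter with select(); first() is null if no match.
        Element ul = doc.select(".ulcl").first();
        if (ul == null) {
            return titles;
        }

        // Step 3: Element methods pick out the data; index 0 is skipped
        // because the first li is not an article.
        Elements lis = ul.getElementsByTag("li");
        for (int i = 1; i < lis.size(); i++) {
            Element link = lis.get(i).select("h2 a").first();
            if (link != null) { // skip entries that lack a title link
                titles.add(link.text());
            }
        }
        return titles;
    }

    public static void main(String[] args) {
        System.out.println(parseTitles(HTML)); // prints "[Title A, Title B]"
    }
}
```

In getArticles() a missing element would throw a NullPointerException inside the try block, which ends the loop early and returns only the articles parsed so far; guarding each lookup is one way to skip a malformed entry and keep going.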

Note: Analyzing the page source is essential. Before parsing any data, we must first know the structure of the data to be parsed; only by looking at the HTML source can we decide which methods of Document, Element, and the other objects to call so that the parsing stays simple.

jsoup's own GET and POST requests are not used much in real applications. I usually combine jsoup with a well-known Android networking framework such as Volley, XUtils, or OkHttp: the framework handles the network request and jsoup handles the parsing. At least that's what I do.
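A minimal sketch of that split, assuming plain HttpURLConnection as a stand-in for Volley, XUtils, or OkHttp (whose APIs are not shown here): one method fetches the page as a string, and another hands the string to Jsoup.parse(). The class and method names are hypothetical, and the sketch assumes the page is UTF-8 encoded.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;

class FetchThenParse {

    // Networking step: stands in for whatever HTTP framework the app uses.
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(10000); // 10 seconds, as in getArticles()
        conn.setReadTimeout(10000);
        try (InputStream in = conn.getInputStream()) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return out.toString("UTF-8"); // assumption: the page is UTF-8
        } finally {
            conn.disconnect();
        }
    }

    // Parsing step: jsoup only sees a string and a base URL for relative links.
    static Document parse(String html, String baseUrl) {
        return Jsoup.parse(html, baseUrl);
    }

    public static void main(String[] args) throws IOException {
        String url = "http://it.ithome.com/";
        Document doc = parse(fetch(url), url);
        System.out.println(doc.title());
    }
}
```

On Android, fetch() would have to run on a background thread, since network access on the main thread is not allowed. Keeping the parsing in its own method also makes it easy to test against a saved HTML string without touching the network.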
