Detailed explanation of python log printing and writing concurrency implementation code

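Most people use the standard `logging` module for output. `logging` is thread-safe, but writing to a single file from multiple processes is not supported out of the box. Many articles cover the multi-process case and suggest that adding file locks and configuring `logging` accordingly is enough to make it work.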

However, my tests showed that with multiple processes, the log files still easily end up with duplicated or missing entries, even when the console output looks normal.

My logging requirements are relatively simple: each message must go to the right file, and the log files must be written correctly.

The approach is to introduce file locks, encapsulate the log-writing logic in a low-level operation class, `_Logger`, and encapsulate the logger name and log level in a business-facing class, `Logger`.
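The lock, write, flush, unlock sequence at the heart of the low-level class can be written more compactly as a context manager that always releases the lock, even if the write raises. This is a sketch assuming a POSIX system (`fcntl.flock` does not exist on Windows); the helper names `locked_append` and `write_line` are hypothetical, and `try`/`finally` here stands in for the article's bare-`except` retry loops.

```python
import contextlib
import fcntl
import os


@contextlib.contextmanager
def locked_append(path):
    """Hypothetical helper: open `path` for appending under an exclusive flock()."""
    parent = os.path.dirname(path)
    if parent:
        os.makedirs(parent, exist_ok=True)  # create the log directory on first use
    with open(path, "a", encoding="utf-8") as f:
        fcntl.flock(f.fileno(), fcntl.LOCK_EX)  # block until no other process holds it
        try:
            yield f
            f.flush()  # push Python's buffer to the OS before releasing the lock
        finally:
            fcntl.flock(f.fileno(), fcntl.LOCK_UN)


def write_line(path, message):
    """Hypothetical: append one line, turning tabs into commas like _Logger._write."""
    with locked_append(path) as f:
        f.write(message.replace("\t", ",") + "\n")
```

Because the lock is held until after `flush()`, each line reaches the file as one uninterrupted append, which is the property the article relies on.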

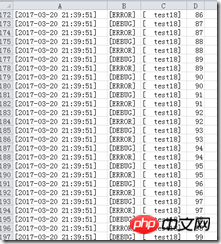

This example targets Python 3. In it, 20 processes write concurrently to 3 files, each file receiving more than 100 lines per second, and the resulting log files contain no duplicated or missing data.
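One way to check the "no duplicated or missing data" claim is to scan a log file and look for repeated or skipped sequence numbers. The sketch below assumes the line layout the code produces once tabs are replaced with commas (for example `[2017-03-05 22:50:00],[DEBUG],[   test1],42`) and that each logger numbers its messages 1, 2, 3, ... as `_print_test` does; the function name `check_log_lines` is hypothetical.

```python
from collections import Counter, defaultdict


def check_log_lines(lines):
    """Return {(logger, level): (duplicated keys, missing keys)} for a log file.

    Assumes every line looks like "[time],[LEVEL],[  name],<integer key>".
    """
    seen = defaultdict(Counter)  # (logger, level) -> how often each key appeared
    for line in lines:
        line = line.strip()
        if not line:
            continue
        _, level, name, key = line.split(",", 3)
        seen[(name.strip("[] "), level.strip("[] "))][int(key)] += 1

    report = {}
    for stream, counts in seen.items():
        duplicates = sorted(k for k, n in counts.items() if n > 1)
        # Keys run from 1 to the largest one seen; any hole is a lost line.
        missing = sorted(set(range(1, max(counts) + 1)) - set(counts))
        report[stream] = (duplicates, missing)
    return report
```

Passing an open log file (for example `Log/test1`) yields one entry per logger and level; a healthy file maps every entry to `([], [])`. The last key of a worker may legitimately lack its ERROR line if the test was stopped between the two writes.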

    # -*- coding: utf-8 -*-
    """
    Author: yinshunyao
    Date: 2017/3/5 10:50 PM
    """
    import os
    import time

    # Lock and unlock files with OS-level locks (pywin32 on Windows, fcntl on POSIX)
    if os.name == 'nt':
        import win32con, win32file, pywintypes

        LOCK_EX = win32con.LOCKFILE_EXCLUSIVE_LOCK
        LOCK_SH = 0  # the default value
        LOCK_NB = win32con.LOCKFILE_FAIL_IMMEDIATELY
        __overlapped = pywintypes.OVERLAPPED()

        def lock(file, flags):
            hfile = win32file._get_osfhandle(file.fileno())
            win32file.LockFileEx(hfile, flags, 0, 0xffff0000, __overlapped)

        def unlock(file):
            hfile = win32file._get_osfhandle(file.fileno())
            win32file.UnlockFileEx(hfile, 0, 0xffff0000, __overlapped)
    elif os.name == 'posix':
        import fcntl  # needed for fcntl.flock and fcntl.LOCK_UN below
        from fcntl import LOCK_EX

        def lock(file, flags):
            fcntl.flock(file.fileno(), flags)

        def unlock(file):
            fcntl.flock(file.fileno(), fcntl.LOCK_UN)
    else:
        raise RuntimeError("File Locker only supports NT and POSIX platforms!")


    class _Logger:
        file_path = ''  # log directory, initialized on first use

        @staticmethod
        def init():
            if not _Logger.file_path:
                _Logger.file_path = os.path.join(
                    os.path.abspath(os.path.dirname(__file__)), 'Log')
                # Without the directory, open() fails and _write() retries forever
                os.makedirs(_Logger.file_path, exist_ok=True)
            return True

        @staticmethod
        def _write(message, file_name):
            if not message:
                return True
            message = message.replace('\t', ',')
            file = os.path.join(_Logger.file_path, file_name)
            # Open the file and take an exclusive lock, retrying until both succeed
            while True:
                try:
                    f = open(file, 'a+')
                except OSError:
                    time.sleep(0.01)
                    continue
                try:
                    lock(f, LOCK_EX)
                    break
                except Exception:
                    f.close()  # don't leak a file handle on each failed attempt
                    time.sleep(0.01)
            # Make sure the buffer contents are written to the file while locked
            while True:
                try:
                    f.write(message + '\n')
                    f.flush()
                    break
                except Exception:
                    time.sleep(0.01)
            # Release the lock, then close the file
            while True:
                try:
                    unlock(f)
                    f.close()
                    return True
                except Exception:
                    time.sleep(0.01)

        @staticmethod
        def write(message, file_name, only_print=False):
            if not _Logger.init():
                return
            print(message)
            if not only_print:
                _Logger._write(message, file_name)


    class Logger:
        def __init__(self, logger_name, file_name=''):
            self.logger_name = logger_name
            self.file_name = file_name

        # Build the message in a custom format according to its level
        def _build_message(self, message, level):
            try:
                return '[%s]\t[%5s]\t[%8s]\t%s' % (
                    time.strftime('%Y-%m-%d %H:%M:%S'), level, self.logger_name, message)
            except Exception as e:
                print('Error while building log message: {}'.format(e))
                return ''

        def warning(self, message):
            _Logger.write(self._build_message(message, 'WARN'), self.file_name)

        def warn(self, message):
            self.warning(message)

        def error(self, message):
            _Logger.write(self._build_message(message, 'ERROR'), self.file_name)

        def info(self, message):
            # INFO messages are only printed to the console, not written to the file
            _Logger.write(self._build_message(message, 'INFO'), self.file_name, True)

        def debug(self, message):
            _Logger.write(self._build_message(message, 'DEBUG'), self.file_name)


    # Test function: logs in an endless loop
    def _print_test(count):
        logger = Logger(logger_name='test{}'.format(count),
                        file_name='test{}'.format(count % 3))
        key = 0
        while True:
            key += 1
            logger.debug('%d' % key)
            logger.error('%d' % key)


    if __name__ == '__main__':
        from multiprocessing import Pool, freeze_support

        freeze_support()
        # Test with a process pool: 20 workers spread over 3 files.
        # The workers never return, so pool.join() blocks until stopped by hand.
        pool = Pool(processes=20)
        for count in range(1, 21):
            pool.apply_async(func=_print_test, args=(count,))
        pool.close()
        pool.join()
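The harness above never finishes: each `_print_test` call loops forever, so `pool.join()` blocks until the test is stopped by hand, and the result has to be inspected afterwards. A bounded variant lets the "no duplicates, no omissions" claim be checked automatically. The sketch below is self-contained rather than importing the article's module; it assumes a POSIX system and the `fork` start method, calls `fcntl.flock` directly in place of the `lock`/`unlock` helpers, and its constants and function names (`append_line`, `worker`, `run_stress_test`) are illustrative.

```python
import fcntl
import multiprocessing
import os
import tempfile

WORKERS = 20  # illustrative: same process count as the article's test
FILES = 3     # illustrative: same number of log files
LINES = 200   # lines each worker writes before exiting


def append_line(path, text):
    # One append under an exclusive flock(), flushed before the unlock.
    with open(path, "a", encoding="utf-8") as f:
        fcntl.flock(f.fileno(), fcntl.LOCK_EX)
        try:
            f.write(text + "\n")
            f.flush()
        finally:
            fcntl.flock(f.fileno(), fcntl.LOCK_UN)


def worker(log_dir, worker_id):
    path = os.path.join(log_dir, "test{}".format(worker_id % FILES))
    for key in range(1, LINES + 1):
        append_line(path, "test{},{}".format(worker_id, key))


def run_stress_test():
    """Run all workers to completion; return {worker_id: sorted keys read back}."""
    log_dir = tempfile.mkdtemp()
    ctx = multiprocessing.get_context("fork")  # POSIX only
    procs = [ctx.Process(target=worker, args=(log_dir, i))
             for i in range(1, WORKERS + 1)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()

    keys = {}
    for name in os.listdir(log_dir):
        with open(os.path.join(log_dir, name), encoding="utf-8") as f:
            for line in f:
                who, key = line.rstrip("\n").split(",")
                keys.setdefault(int(who[len("test"):]), []).append(int(key))
    return {who: sorted(k) for who, k in keys.items()}
```

`run_stress_test()` returns each worker's sequence numbers in sorted order; if every list equals `list(range(1, LINES + 1))`, each line arrived exactly once.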
