I didn’t expect Python to be so powerful and fascinating. I used to copy and save pictures by hand, one at a time, whenever I came across them. With a little Python, a program can save them for you instead. This article walks through using Python 3.6 to crawl images from the Sogou Pictures web pages; readers who need it can use it as a reference.

Preface

Over the past few days I have been studying web crawlers, which I have always been curious about. Here I write down some of what I learned along the way. On to the main text:

We use Sogou as the crawling target here.

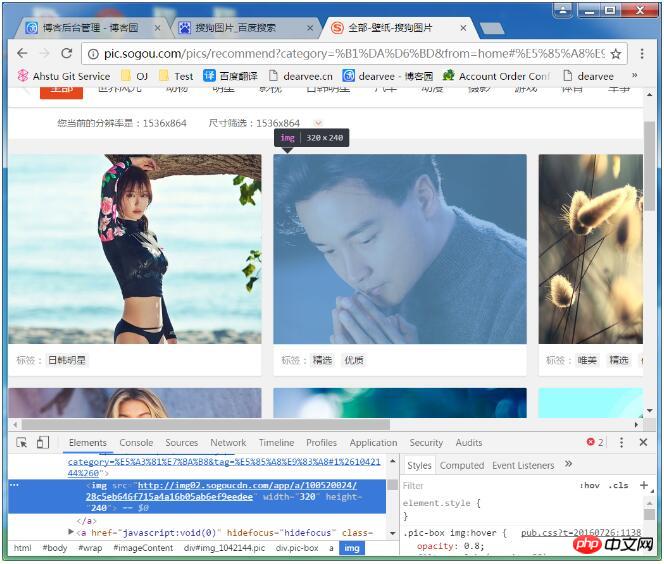

First, open Sogou Pictures and go to the wallpaper category (just as an example, of course Q_Q). Before crawling information from any website, we need a preliminary understanding of how it is put together.

Once the category page is open, press F12 to open the developer tools. The author uses Chrome.

Right-click an image >> Inspect

We found that the src of each image we want sits under an img tag. So the first idea is to fetch the page with Python requests, pull out the img elements, read the src of each one, and then download the pictures one by one with urllib.request.urlretrieve, which gives us batch downloading. The idea sounds good. Next, we tell the program which url to crawl: http://pic.sogou.com/pics/recommend?category=%B1%DA%D6%BD, taken from the address bar after entering the category. Now that we know the url, let’s start the happy coding time:

When writing a crawler like this, it is best to debug step by step and confirm that each operation does what we expect. This is a habit programmers should have, although the author is not sure of being a programmer. Let’s analyze the web page this url points to.

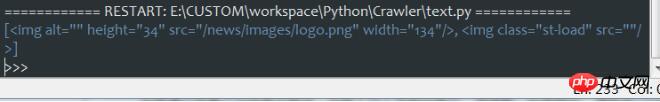

    import requests
    import urllib
    from bs4 import BeautifulSoup

    res = requests.get('http://pic.sogou.com/pics/recommend?category=%B1%DA%D6%BD')
    soup = BeautifulSoup(res.text, 'html.parser')
    print(soup.select('img'))

output:

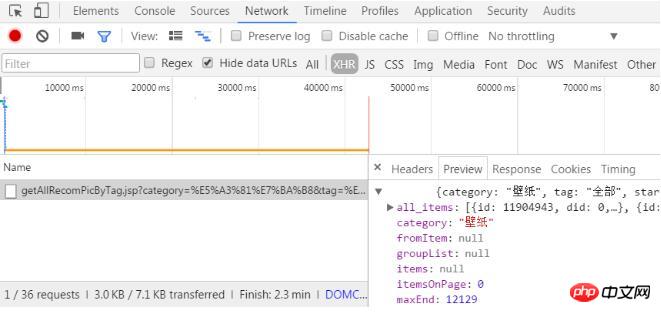

The output does not contain the image elements we want; only the img of the logo was parsed. This is obviously not what we need. In other words, the picture information is not in the HTML returned by http://pic.sogou.com/pics/recommend?category=%B1%DA%D6%BD, so the elements are probably loaded dynamically. Careful readers may have noticed that the images refresh as you scroll the mouse wheel down the page: the page does not load all of its resources at once but loads them dynamically, which also keeps the page from becoming bloated and slow to load.

Now the painful exploration begins: we want to find the real URLs of all the pictures. The author is new to this and not very experienced at finding them. The place eventually found is F12 >> Network >> XHR >> (click the file under XHR) >> Preview.
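The effect can be reproduced offline with a small sketch. The HTML string below is invented for illustration (it is not Sogou's real markup), and the standard library's html.parser stands in for BeautifulSoup so that the snippet runs without third-party packages. A static parse only sees the img tags present in the markup the server returned; anything a script would insert later is invisible to it.

```python
from html.parser import HTMLParser

# Invented page: one static logo image plus an empty container that a
# script would fill with pictures after the page loads in a browser.
SAMPLE_HTML = """
<html><body>
  <img src="/images/logo.png" alt="logo">
  <div id="pic-list"></div>
  <script>/* pictures are requested and inserted here at runtime */</script>
</body></html>
"""


class ImgSrcCollector(HTMLParser):
    """Collect the src attribute of every <img> start tag in the markup."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def static_img_sources(html_text):
    """Return the img src values visible without running any JavaScript."""
    collector = ImgSrcCollector()
    collector.feed(html_text)
    return collector.sources


# Only the logo is found, mirroring what the requests-based attempt saw.
print(static_img_sources(SAMPLE_HTML))
```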

In the Preview pane, the content looks close to the elements we need. Expand all_items and the entries below it are numbered 0, 1, 2, 3, ...; they appear to be picture elements. Try opening one of the urls: it really is the address of a picture. With the target found, click Headers under XHR and read the second line, Request URL:

http://pic.sogou.com/pics/channel/getAllRecomPicByTag.jsp?category=%E5%A3%81%E7%BA%B8&tag=%E5%85%A8%E9%83%A8&start=0&len=15&width=1536&height=864

Try removing the unnecessary parts. The trick is to delete a part that looks optional and check that access is not affected. After the author's screening, the final url is:

http://pic.sogou.com/pics/channel/getAllRecomPicByTag.jsp?category=%E5%A3%81%E7%BA%B8&tag=%E5%85%A8%E9%83%A8&start=0&len=15

Reading the parameters literally, category is presumably the classification, start is the starting index, and len is the length, that is, the number of pictures. Okay, let’s start the happy coding time:
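As a hedged sketch of how this url is put together: urllib.parse.urlencode percent-encodes its parameters as UTF-8 by default, which reproduces the final url above. The helper names build_url and page_urls are hypothetical, and paging by advancing start in steps of len is an assumption based on the parameter names, not something verified against the server. The last lines also show that the %B1%DA%D6%BD in the category page's address is the same word, 壁纸, percent-encoded as GBK rather than UTF-8.

```python
from urllib.parse import quote, urlencode

BASE = "http://pic.sogou.com/pics/channel/getAllRecomPicByTag.jsp"


def build_url(category, start=0, length=15, tag="全部"):
    """Build the request url; urlencode percent-encodes text as UTF-8."""
    query = urlencode({"category": category, "tag": tag,
                       "start": start, "len": length})
    return BASE + "?" + query


def page_urls(category, total, page_size=15):
    """Yield one url per page, assuming start/len act like offset/limit."""
    for start in range(0, total, page_size):
        yield build_url(category, start, min(page_size, total - start))


print(build_url("壁纸"))
for url in page_urls("壁纸", total=40):
    print(url)

# The same word under two encodings: the XHR url uses UTF-8, while the
# url from the category page's address bar uses GBK.
print(quote("壁纸", encoding="utf-8"))
print(quote("壁纸", encoding="gbk"))
```

Requesting pictures in pages like this is gentler on the server than asking for a very large len in a single call, if the endpoint honours start as assumed.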

The development environment is Windows 7 with Python 3.6. The requests package must be installed before the program can run.

To install requests for Python 3.6, type the following in CMD:

pip install requests

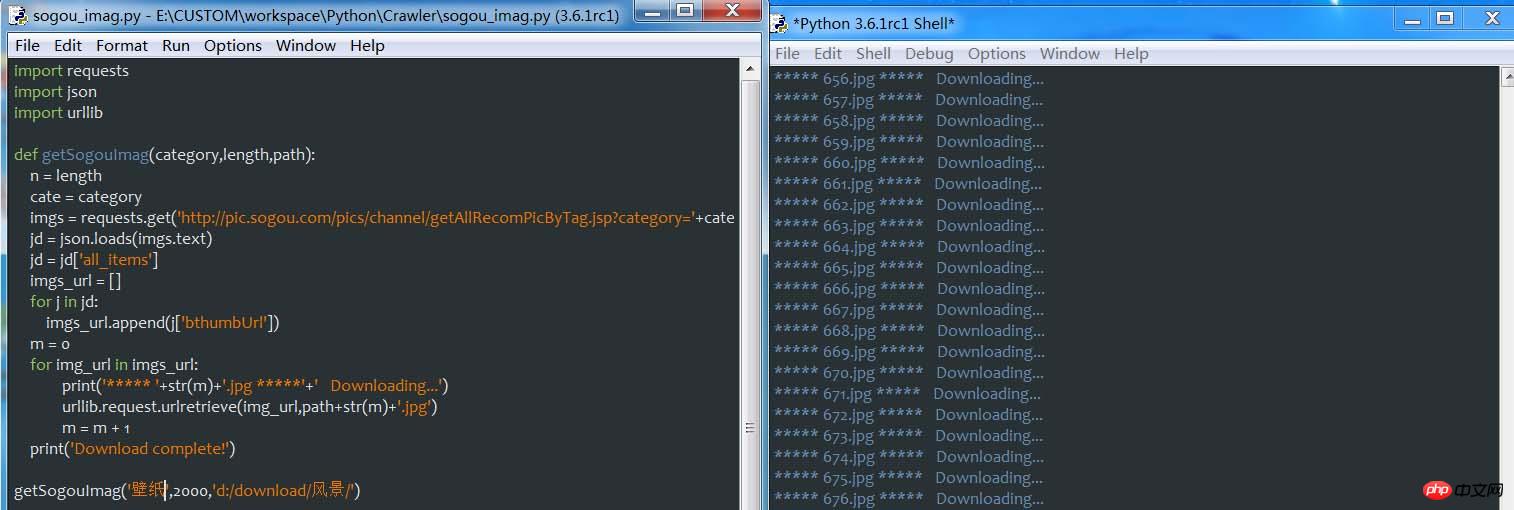

The author debugged while writing; the final code is posted here:

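    import json
    import urllib.request  # import the submodule explicitly; a bare "import urllib" does not guarantee it

    import requests


    def getSogouImag(category, length, path):
        # Ask the JSON endpoint for `length` pictures of the given category.
        n = length
        cate = category
        imgs = requests.get('http://pic.sogou.com/pics/channel/getAllRecomPicByTag.jsp?category=' + cate + '&tag=%E5%85%A8%E9%83%A8&start=0&len=' + str(n))
        jd = json.loads(imgs.text)
        jd = jd['all_items']

        # Collect the picture address of every returned item.
        imgs_url = []
        for j in jd:
            imgs_url.append(j['bthumbUrl'])

        # Download each picture as 0.jpg, 1.jpg, ... under `path`,
        # which must already exist and end with a slash.
        m = 0
        for img_url in imgs_url:
            print('***** ' + str(m) + '.jpg *****' + ' Downloading...')
            urllib.request.urlretrieve(img_url, path + str(m) + '.jpg')
            m = m + 1
        print('Download complete!')


    getSogouImag('壁纸', 2000, 'd:/download/壁纸/')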
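The parsing step can be tried without touching the network. The sketch below is only an illustration: the JSON text is a made-up stand-in shaped like the response described above (an all_items list whose entries carry bthumbUrl), and extract_image_urls and plan_downloads are hypothetical helpers. It skips entries that lack the key instead of raising KeyError, and it builds the target file names with os.path.join.

```python
import json
import os

# Made-up payload with the same shape as the response described above.
SAMPLE_RESPONSE = json.dumps({
    "all_items": [
        {"bthumbUrl": "http://example.com/a.jpg"},
        {"title": "entry without a picture address"},
        {"bthumbUrl": "http://example.com/b.jpg"},
    ]
})


def extract_image_urls(response_text, key="bthumbUrl"):
    """Return the picture addresses, skipping items that lack `key`."""
    items = json.loads(response_text).get("all_items", [])
    return [item[key] for item in items if key in item]


def plan_downloads(urls, folder):
    """Pair each url with a numbered .jpg path inside `folder`."""
    return [(url, os.path.join(folder, str(i) + ".jpg"))
            for i, url in enumerate(urls)]


urls = extract_image_urls(SAMPLE_RESPONSE)
for url, target in plan_downloads(urls, "download"):
    # A real run would call urllib.request.urlretrieve(url, target) here,
    # after creating the folder with os.makedirs("download", exist_ok=True).
    print(url, "->", target)
```

Separating the planning from the downloading makes it easy to check the file names before any picture is fetched.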

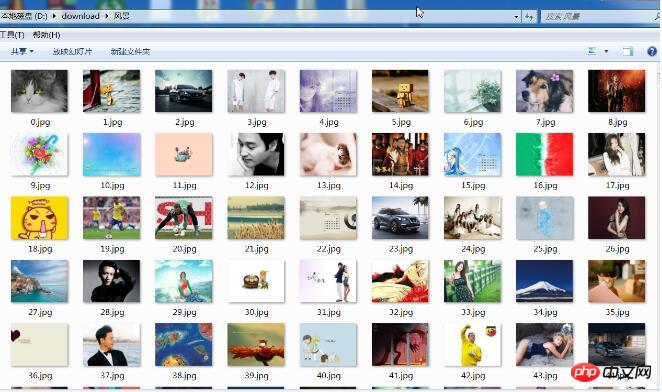

The author was still a little excited when the program first started running. Run it and feel it for yourself.

At this point, the walkthrough of writing this crawler is complete. Overall, finding the url that actually serves the elements you want to crawl is the key to most of the work in a crawler.
