Comparing Python and Bash versions of a small program


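I recently had a small task. A directory holds many files. In each file the first line starts with BEGIN and the last line starts with END; every line in between has several columns, and the number of columns varies. The first column is called "DN" and the second "CV", and the pair DN-CV together acts as a primary key. The goal is to check whether any DN-CV pair appears more than once across the files.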

So I wrote a simple Python program:

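```python
#! /usr/bin/python
from __future__ import print_function  # print() behaves the same on Python 2 and 3

import os

# Concatenate all files and drop the BEGIN/END marker lines
cmd = "cat /home/zhangj/hosts/* | grep -v BEGIN | grep -v END"


def check_dc_line():
    """Print every repeated DN-CV pair; return True if any was found."""
    has_duplicate = False
    dc_set = set()
    for dc_line in os.popen(cmd, 'r').readlines():
        dc_token = dc_line.split()
        if len(dc_token) < 2:
            continue  # skip blank or malformed lines instead of raising IndexError
        dn = dc_token[0]
        cv = dc_token[1]
        dc = dn + "," + cv  # composite key: "DN,CV"
        if dc in dc_set:
            print("duplicate dc found:", dc)
            has_duplicate = True
        else:
            dc_set.add(dc)
    return has_duplicate


if not check_dc_line():
    print("no duplicate dc")
```

For 250 files containing 600,000 lines in total, one pass took about 1.67 seconds.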
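The pipeline above filters with `grep -v BEGIN | grep -v END`, which also throws away any data line that merely contains BEGIN or END somewhere, and it starts a shell plus `cat` and two `grep` processes. As an alternative sketch (not the author's code, and with no timing claimed for it), the same check can be done in pure Python: read each file directly, drop only a leading BEGIN line and a trailing END line, and use a `(DN, CV)` tuple as the key. The function names `iter_data_lines` and `find_duplicate_keys` are hypothetical.

```python
import glob
import os


def iter_data_lines(paths):
    """Yield the data lines of each file, dropping its BEGIN header and END trailer."""
    for path in paths:
        with open(path) as f:
            lines = f.read().splitlines()
        # Only the first and last lines are treated as markers, so a data
        # line that happens to contain "BEGIN" or "END" is kept.
        if lines and lines[0].startswith("BEGIN"):
            lines = lines[1:]
        if lines and lines[-1].startswith("END"):
            lines = lines[:-1]
        for line in lines:
            yield line


def find_duplicate_keys(lines):
    """Return every repeated (DN, CV) pair, once per repeat, in the order found."""
    seen = set()
    duplicates = []
    for line in lines:
        tokens = line.split()
        if len(tokens) < 2:
            continue  # blank or malformed line: no DN-CV pair to check
        key = (tokens[0], tokens[1])  # DN is column 1, CV is column 2
        if key in seen:
            duplicates.append(key)
        else:
            seen.add(key)
    return duplicates


if __name__ == "__main__":
    # Hypothetical pattern matching the directory used in the scripts above
    paths = sorted(p for p in glob.glob("/home/zhangj/hosts/*") if os.path.isfile(p))
    duplicates = find_duplicate_keys(iter_data_lines(paths))
    for dn, cv in duplicates:
        print("duplicate dc found: %s,%s" % (dn, cv))
    if not duplicates:
        print("no duplicate dc")
```

Reporting a pair once per repeat mirrors the two scripts in this article, which print a line every time an already-seen pair shows up.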

Not entirely satisfied with that speed, I wrote a shell script that does the same thing:

#! /bin/bash

cat /home/zhangj/hosts/* | grep -v BEGIN | grep -v END | awk '

BEGIN {

has_duplicate = 0

}

{

dc = $1","$2;

if (dc in dc_set)

{

print "duplicate dc found", dc

has_duplicate = 1

}

else {

dc_set[dc] = 1

}

}

END {

if (has_duplicate ==0)

{

print "no duplicate dc found"

}

}

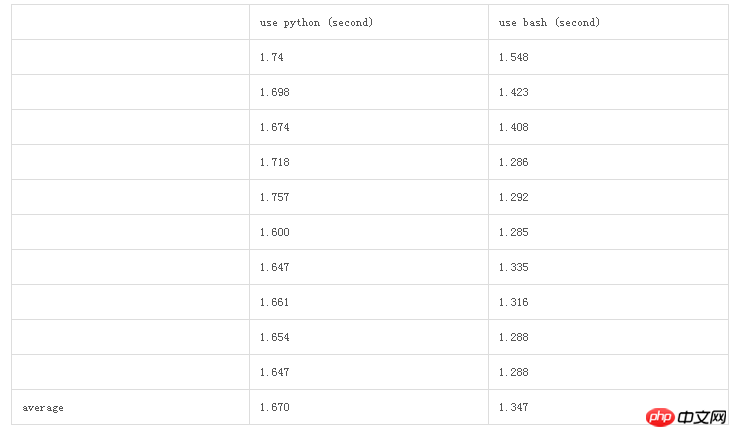

'为了进一步比较,重复了10次实验。
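The text does not say how the ten runs were timed. As a minimal sketch, assuming the two scripts are saved under hypothetical names such as `check_dc.py` and `check_dc.sh`, a small Python harness can launch each one as a subprocess and record the wall-clock time of every run:

```python
import statistics
import subprocess
import sys
import time


def time_command(argv, repeat=10):
    """Run argv `repeat` times; return each run's wall-clock duration in seconds."""
    durations = []
    for _ in range(repeat):
        start = time.perf_counter()
        # Discard the script's output so terminal rendering does not affect the timing
        subprocess.run(argv, stdout=subprocess.DEVNULL, check=True)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return (min, mean, max) of a list of durations."""
    return min(durations), statistics.mean(durations), max(durations)


if __name__ == "__main__":
    # Usage (hypothetical names): python bench.py check_dc.py check_dc.sh
    for script in sys.argv[1:]:
        # Assumption: .py files run under the current interpreter, anything else under bash
        interpreter = sys.executable if script.endswith(".py") else "bash"
        fastest, average, slowest = summarize(time_command([interpreter, script], repeat=10))
        print("%-15s min %.3fs  mean %.3fs  max %.3fs" % (script, fastest, average, slowest))
```

The measured time includes interpreter startup, much like a single `time ./script` run would. Reporting the minimum next to the mean helps separate each script's own cost from occasional interference by other processes on the machine.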
