RSS and crawlers, detailed explanation of how to collect data

Abstract: Before the value of data can be mined, it must first go through processes such as collection, storage, analysis and calculation. Obtaining comprehensive and accurate data is the basis for data value mining. This issue of CSDN Cloud Computing Club's "Big Data Story" will start with the most common data collection methods - RSS and search engine crawlers.

On December 30, the CSDN Cloud Computing Club held an event at 3W Coffee on the theme "RSS and Crawlers: The Story of Big Data - Starting with How to Collect Data." Before the value of data can be mined, the data must first be collected, stored, analyzed, and processed. Comprehensive and accurate data is the basis for mining that value. Data you hold today may not yet bring real value to your enterprise or organization, but a far-sighted decision-maker should recognize that important data needs to be collected and saved as early as possible. Data is wealth. This issue of "Big Data Story" starts with the most common data collection methods: RSS and search engine crawlers.

The event site was packed with people

First, Cui Kejun, general manager of the Library Division of Beijing Wanfang Software Co., Ltd., gave a talk titled "Initial Applications of Large-Scale RSS Aggregation and Website Downloading in Scientific Research." Cui Kejun has worked in the library and information industry for 12 years and has extensive experience in data collection. His talk focused on RSS, an important means of information aggregation, and the technology used to implement it.

RSS (Really Simple Syndication) is a feed format specification used to syndicate content from websites that publish updates frequently, such as blog posts, news, and audio or video excerpts. An RSS file contains full or excerpted text, plus metadata such as the publication date and author.
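To make the format concrete, here is a minimal sketch of reading an RSS 2.0 document with Python's standard library. The sample feed, its URLs, and the `parse_rss` helper are illustrative assumptions, not material from the talk.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hypothetical sample feed; a real feed would be downloaded from a site.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Lab News</title>
    <link>https://example.org/</link>
    <item>
      <title>New detector commissioned</title>
      <link>https://example.org/news/1</link>
      <pubDate>Wed, 01 Jan 2014 08:30:00 GMT</pubDate>
      <description>Short summary of the article.</description>
    </item>
    <item>
      <title>Open positions</title>
      <link>https://example.org/jobs/7</link>
      <description>Item without a publication date.</description>
    </item>
  </channel>
</rss>"""


def parse_rss(xml_text):
    """Return the items of an RSS 2.0 document as a list of dicts."""
    root = ET.fromstring(xml_text)
    channel = root.find("channel")
    items = []
    for item in channel.findall("item"):
        pub = item.findtext("pubDate")
        items.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "summary": item.findtext("description", default=""),
            # pubDate uses the RFC 822 date format; it is optional in RSS.
            "published": parsedate_to_datetime(pub) if pub else None,
        })
    return items


for entry in parse_rss(SAMPLE_FEED):
    print(entry["published"], entry["title"], entry["link"])
```

Real-world feeds are often malformed or use Atom instead of RSS, so a production collector would need more defensive parsing than this sketch shows.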

Aggregating hundreds or even thousands of RSS feeds closely related to an industry gives a quick, comprehensive view of that industry's latest developments. Downloading a website's complete data and mining it reveals how a particular topic in the industry has developed over time.
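Aggregation can be sketched as merging already-parsed items from many feeds, dropping duplicates, and sorting by publication time. The item layout (`link`, `title`, `published`) and the feed names below are assumptions made for illustration.

```python
from datetime import datetime, timezone


def aggregate(feeds):
    """Merge items from several feeds into one list, newest first.

    `feeds` maps a feed name to a list of item dicts. Items that share
    a link are treated as duplicates and only the first one is kept.
    """
    seen = {}
    for source, items in feeds.items():
        for item in items:
            if item["link"] not in seen:
                seen[item["link"]] = {**item, "source": source}
    return sorted(seen.values(), key=lambda it: it["published"], reverse=True)


def utc(year, month, day, hour=0):
    return datetime(year, month, day, hour, tzinfo=timezone.utc)


# Hypothetical parsed items from two feeds that report the same story.
feeds = {
    "lab-news": [
        {"title": "Beam restart", "link": "https://example.org/a", "published": utc(2014, 1, 2)},
        {"title": "Workshop call", "link": "https://example.org/b", "published": utc(2014, 1, 1)},
    ],
    "society-news": [
        {"title": "Beam restart", "link": "https://example.org/a", "published": utc(2014, 1, 2)},
        {"title": "Prize announced", "link": "https://example.org/c", "published": utc(2014, 1, 3)},
    ],
}

merged = aggregate(feeds)
for item in merged:
    print(item["published"].date(), item["source"], item["title"])
```

Deduplicating by link is the simplest choice; feeds that republish a story under a different URL would need a content-based comparison instead.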

Cui Kejun, General Manager of the Library Business Department of Beijing Wanfang Software Co., Ltd.

Taking the Institute of High Energy Physics as an example, Cui Kejun described how RSS is applied in scientific research institutes. High-energy physics information monitoring targets peer institutions around the world: laboratories, industry societies, international associations, government agencies in charge of scientific research in various countries, key comprehensive scientific publications, and high-energy physics experimental projects and facilities. The types of information monitored include news, papers, conference reports, analysis and reviews, preprints, case studies, multimedia, books, and recruitment information.

The high energy physics literature information service combines the open source content management system Drupal, the open source search technology Apache Solr, PubSubHubbub (a technology developed by Google employees for subscribing to news in real time), and Amazon's OpenSearch to build a high energy physics information monitoring system. Unlike traditional RSS subscription and push, it captures information in near real time and actively pushes news that matches any keyword, any category, or a compound condition.
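For readers unfamiliar with PubSubHubbub, the sketch below shows the subscriber side of the protocol: a form-encoded subscription request sent to a hub, and the reply to the hub's verification callback, which echoes `hub.challenge`. The hub, topic, and callback URLs and both function names are hypothetical, and nothing here reflects how the monitoring system described in the talk was actually implemented.

```python
from urllib.parse import parse_qs, urlencode
from urllib.request import Request


def build_subscribe_request(hub_url, topic_url, callback_url, lease_seconds=86400):
    """Build (but do not send) a PubSubHubbub subscription request."""
    form = urlencode({
        "hub.mode": "subscribe",
        "hub.topic": topic_url,        # the feed we want updates for
        "hub.callback": callback_url,  # where the hub will push new entries
        "hub.lease_seconds": str(lease_seconds),
    }).encode("ascii")
    return Request(
        hub_url,
        data=form,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def answer_verification(query_string, expected_topic):
    """Handle the hub's verification GET sent to the callback URL.

    Returns (status_code, body). The subscriber confirms the subscription
    by echoing the hub.challenge value back in the response body.
    """
    params = parse_qs(query_string)
    if params.get("hub.topic", [""])[0] != expected_topic:
        return 404, ""
    return 200, params.get("hub.challenge", [""])[0]


# Hypothetical URLs for illustration only.
request = build_subscribe_request(
    "https://hub.example.net/",
    "https://example.org/feed.xml",
    "https://monitor.example.com/callback",
)
print(request.get_method(), request.full_url)
print(request.data.decode("ascii"))
```

Sending the request would be a call such as `urllib.request.urlopen(request)`. After verification, the hub POSTs new feed entries to the callback as they are published, which is what makes near-real-time capture possible without polling.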

Next, Cui Kejun shared his experience in using technologies such as Drupal, Apache Solr, PubSubHubbub and OpenSearch.

Next, Ye Shunping, an architect in the Search Department of Yisou Technology and head of its crawler team, gave a talk titled "Web Search Crawler Timeliness System," covering the system's main goals, its architecture, and the design of each submodule.

Yisou Technology Search Department Architect and Head of Crawl Team Ye Shunping

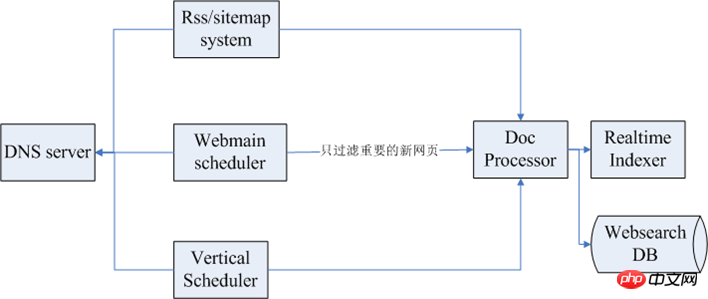

The goals of a web crawler are high coverage, a low dead-link rate, and good timeliness. The goal of the crawler timeliness system is similar: mainly to index new web pages quickly and comprehensively. The following figure shows the overall architecture of the timeliness system:

In the figure, the topmost component is the RSS/sitemap subsystem. Below it is the Webmain scheduler, the scheduling system for web page crawling, followed by the timeliness module, the Vertical Scheduler. On the far left is the DNS service. A crawl usually involves dozens or even hundreds of crawling clusters; if each one resolved hostnames on its own, the load on DNS would be relatively high, so there is usually a DNS service module that serves all of them. After the data is fetched, subsequent data processing is generally performed.
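The idea behind a shared DNS service can be illustrated with a small caching resolver: repeated lookups for the same host are answered from the cache, so only the first one reaches the upstream DNS servers. The `DnsCache` class, its TTL value, and the fake resolver below are assumptions for illustration; the talk did not describe the module's internals.

```python
import time


class DnsCache:
    """Cache hostname lookups so many crawlers share one upstream query."""

    def __init__(self, resolve, ttl_seconds=300.0, clock=time.monotonic):
        self._resolve = resolve      # function: hostname -> IP address string
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}           # hostname -> (ip, expires_at)
        self.upstream_lookups = 0

    def lookup(self, host):
        now = self._clock()
        entry = self._entries.get(host)
        if entry is not None and entry[1] > now:
            return entry[0]          # still fresh: answer from the cache
        ip = self._resolve(host)
        self.upstream_lookups += 1
        self._entries[host] = (ip, now + self._ttl)
        return ip


# A fake resolver keeps the example offline. In real use one could pass
# socket.gethostbyname instead.
FAKE_RECORDS = {"example.org": "192.0.2.10", "example.net": "192.0.2.20"}
cache = DnsCache(FAKE_RECORDS.__getitem__)

for host in ["example.org", "example.net", "example.org", "example.org"]:
    cache.lookup(host)

print("lookups served:", 4, "upstream queries:", cache.upstream_lookups)
```

In a real deployment the cache would sit behind a network service that all crawling clusters query, and it would honor the TTL returned with each DNS record rather than a fixed value.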

The modules related to timeliness include the following:

RSS/sitemap system: The timeliness system uses RSS feeds and sitemaps by mining seeds, crawling them on a regular schedule, and analyzing the publication time of each link, so that newer web pages are crawled and indexed first.

Pan-crawling system: A well-designed general (pan-)crawling system helps improve coverage of time-sensitive pages, but its scheduling cycle needs to be as short as possible.

Seed scheduling system: This is mainly a library of time-sensitive seeds, each stored with some associated information. The scheduling system continuously scans the library and sends seeds to the crawling cluster. After the cluster crawls them, the links are extracted and processed, then sent out by category so that each vertical channel receives timely data.

Seed mining: This involves page parsing or other mining methods. Seeds can be built from site maps and navigation bars, and identified from page structure features and page change patterns.

Seed update mechanism: The system records each seed's crawl history and followed-link information, and regularly recalculates the seed's update cycle based on how often its outbound links change.

Crawling system and JavaScript parsing: Pages are crawled with a browser, and a crawling cluster is built on browser-based crawling. Alternatively, an open source project such as QtWebKit can be adopted.
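The seed scheduling and seed update ideas above can be sketched together. In this simplified model, each seed keeps a history of crawls and how many new links each crawl found; its re-crawl interval is re-estimated as the average time between new links, and the scheduler picks the seeds that are due. The `Seed` class, the formula, and the interval bounds are assumptions for illustration, not the design presented in the talk.

```python
from dataclasses import dataclass, field


@dataclass
class Seed:
    url: str
    interval: float = 3600.0   # current re-crawl interval, in seconds
    last_crawl: float = 0.0    # timestamp of the most recent crawl
    history: list = field(default_factory=list)  # (timestamp, new_link_count)

    def record_crawl(self, timestamp, new_link_count):
        self.history.append((timestamp, new_link_count))
        self.last_crawl = timestamp

    def recalculate_interval(self, min_interval=300.0, max_interval=86400.0):
        """Re-estimate the interval from how often new links appeared."""
        if len(self.history) < 2:
            return self.interval
        span = self.history[-1][0] - self.history[0][0]
        new_links = sum(count for _, count in self.history[1:])
        if new_links == 0:
            estimate = self.interval * 2   # nothing new: back off
        else:
            estimate = span / new_links    # average time between new links
        self.interval = max(min_interval, min(max_interval, estimate))
        return self.interval


def due_seeds(seeds, now):
    """Return seeds whose interval has elapsed, fastest-changing first."""
    due = [s for s in seeds if s.last_crawl + s.interval <= now]
    return sorted(due, key=lambda s: s.interval)


# Hypothetical seeds: a busy news index and a page that rarely changes.
busy = Seed("https://example.org/news/")
quiet = Seed("https://example.org/about/")
for seed, found in ((busy, 6), (quiet, 0)):
    seed.record_crawl(0.0, 0)
    seed.record_crawl(3600.0, found)
    seed.recalculate_interval()

print(busy.interval, quiet.interval)
print([s.url for s in due_seeds([busy, quiet], now=4300.0)])
```

The effect is that frequently updated seeds are revisited more often while static ones back off, which keeps crawl capacity focused on pages likely to yield new links.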
