Collection of commonly used SQL Server specifications

Common field type selection

1. It is recommended to use varchar/nvarchar data type for character type

2. It is recommended to use the money data type for the amount currency

3. Scientific notation recommends using numeric data type

4. It is recommended to use the bigint data type for self-increasing logos (the amount of data is large, and it cannot be loaded with the int type, so it will be troublesome to transform in the future)

5. The time type is recommended to be datetime data type

6. It is forbidden to use the old data types of text, ntext and image

7. It is prohibited to use xml data type, varchar(max), nvarchar(max)

Constraints and Indexes

Each table must have a primary key

Each table must have a primary key to enforce entity integrity

A single table can only have one primary key (empty and duplicate data are not allowed)

Try to use single-field primary keys

Foreign keys are not allowed

Foreign keys increase the complexity of table structure changes and data migration

Foreign keys have an impact on the performance of inserts and updates. You need to check the primary foreign key constraints

Data integrity is controlled by the program

NULL attribute

For the newly added table, NULL is prohibited in all fields

(Why does the new table not allow NULL?

Allowing NULL values will increase the complexity of the application. You must add specific logic code to prevent various unexpected bugs

Three-valued logic, all equal sign ("=") queries must add isnull judgment.

Null=Null, Null!=Null, not(Null=Null), not(Null!=Null) are all unknown, not true)

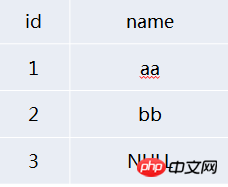

To illustrate with an example:

If the data in the table is as shown in the figure:

You want to find all data except name equal to aa, and then you inadvertently use SELECT * FROM NULLTEST WHERE NAME<>’aa’

The result was different from expected. In fact, it only found the data record with name=bb but not the data record with name=NULL

Then how do we find all the data except name equal to aa? We can only use the ISNULL function

SELECT * FROM NULLTEST WHERE ISNULL(NAME,1)<>’aa’

But you may not know that ISNULL can cause serious performance bottlenecks, so in many cases it is best to limit user input at the application level to ensure that users enter valid data before querying.

New fields added to the old table need to be allowed to be NULL (to avoid data updates in the entire table and blocking caused by long-term lock holding) (this is mainly to consider the transformation of the previous table)

Index design guidelines

Indexes should be created on columns that are frequently used in the WHERE clause

Indexes should be created on columns that are frequently used to join tables

Indexes should be created on columns that are frequently used in ORDER BY clauses

Indexes should not be created on small tables (tables that use only a few pages) because a full table scan operation may be faster than a query using the index

The number of indexes in a single table does not exceed 6

Do not build single-column indexes for fields with low selectivity

Make full use of unique constraints

The index contains no more than 5 fields (including include columns)

Do not create single-column indexes for fields with low selectivity

SQL SERVER has requirements for the selectivity of index fields. If the selectivity is too low, SQL SERVER will give up using

Fields not suitable for indexing: Gender, 0/1, TRUE/FALSE

- ## Fields suitable for creating indexes: ORDERID, UID, etc.

- Indexes speed up queries, but affect write performance

- The index of a table should be created comprehensively by combining all the SQL related to the table, and try to merge

- The principle of combined index is that the fields with better filterability are placed higher

- Too many indexes will not only increase compilation time, but also affect the database's ability to select the best execution plan

- It is prohibited to perform complex operations in the database

Use of SELECT *

# is prohibited Disallowing the use of functions or calculations on indexed columns

Cursor use prohibited

Triggers prohibited

It is forbidden to specify index in the query

The variable/parameter/associated field type must be consistent with the field type

Parameterized query

Limit the number of JOIN

Limit the length of SQL statements and the number of IN clauses

Try to avoid large transaction operations

# The row count information affected by the shutdown returns

Unless necessary, NOLOCK

must be added to the SELECT statement. Replace UNION

with UNION ALL Query large amounts of data using paging or TOP

Recursive query level restrictions

NOT EXISTS replaces NOT IN

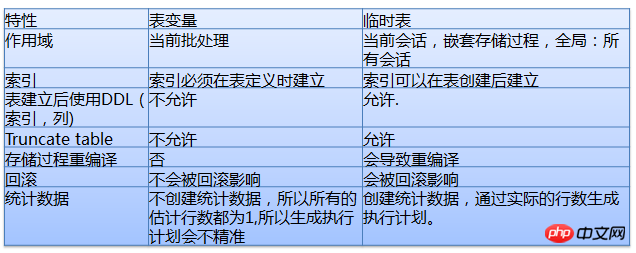

Temporary tables and table variables

Use local variables to select a mean execution plan

Try to avoid using the OR operator

# Add transaction exception handling mechanism

The output columns use the two-part naming format

It is prohibited to perform complex operations in the database

XML parsing

String similarity comparison

String search (Charindex)

Complex operations are completed on the program side

It is forbidden to use SELECT *

Reduce memory consumption and network bandwidth

# Give the query optimizer a chance to read the required columns from the index

When the table structure changes, it is easy to cause query errors

It is forbidden to use functions or calculations on index columns

It is forbidden to use functions or calculations on index columns

In the where clause, if the index is part of the function, the optimizer will no longer use the index and use a full table scan

Assuming that there is an index built on field Col1, the index will not be used in the following scenarios:

ABS[Col1]=1

[Col1]+1>9

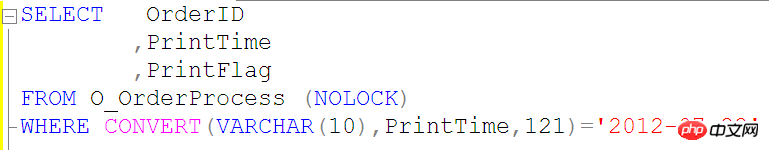

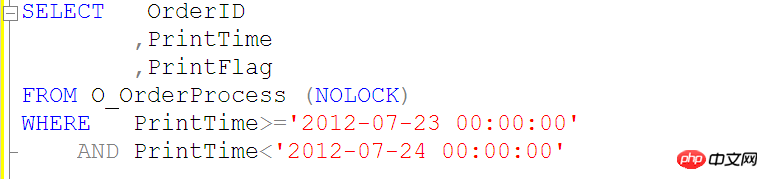

Let’s give another example

A query like the above will not be able to use the PrintTime index on the O_OrderProcess table, so we use the following query SQL

It is forbidden to use functions or calculations on index columns

Assuming that there is an index built on field Col1, the index can be used in the following scenarios:

[Col1]=3.14

[Col1]>100

[Col1] BETWEEN 0 AND 99

[Col1] LIKE ‘abc%’

[Col1] IN(2,3,5,7)

Index problem of LIKE query

1.[Col1] like “abc%” –index seek This uses index query

2.[Col1] like “%abc%” –index scan And this does not use the index query

3.[Col1] like “%abc” –index scan This does not use index query

I think from the above three examples, everyone should understand that it is best not to use fuzzy matching in front of the LIKE condition, otherwise the index query will not be used.

Cursors are prohibited

Relational databases are suitable for set operations, that is, set operations are performed on the result set determined by the WHERE clause and the select column. The cursor is a way to provide non-set operations. Under normal circumstances, the function implemented by a cursor is often equivalent to the function implemented by a loop on the client side.

The cursor places the result set in the server memory and processes the records one by one through a loop, which consumes a lot of database resources (especially memory and lock resources).

(In addition, cursors are really complicated and difficult to use, so use them as little as possible)

The use of triggers is prohibited

The trigger is opaque to the application (the application level does not know when the trigger will be triggered, nor does it know when it will occur. It feels inexplicable...)

It is forbidden to specify index in the query

With(index=XXX) (In queries, we generally use With(index=XXX) to specify the index)

As the data changes, the index performance specified by the query statement may not be optimal

The index should be transparent to the application. If the specified index is deleted, it will cause a query error, which is not conducive to troubleshooting

The newly created index cannot be used immediately by the application and must be published to take effect

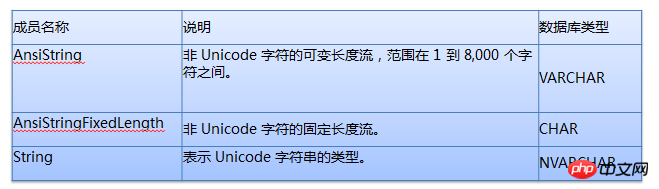

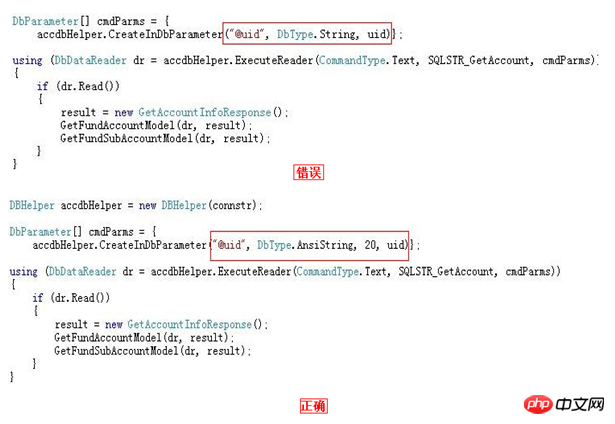

The variable/parameter/associated field type must be consistent with the field type (this is something I didn’t pay much attention to before)

Avoid the extra CPU consumption of type conversion, which is particularly serious for large table scans

After looking at the two pictures above, I don’t think I need to explain, everyone should already know it.

If the database field type is VARCHAR, it is best to specify the type as AnsiString in the application and clearly specify its length

If the database field type is CHAR, it is best to specify the type as AnsiStringFixedLength in the application and clearly specify its length

If the database field type is NVARCHAR, it is best to specify the type as String in the application and clearly specify its length

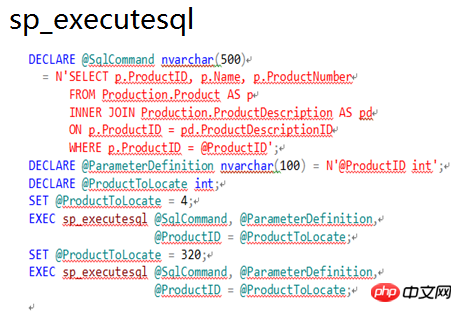

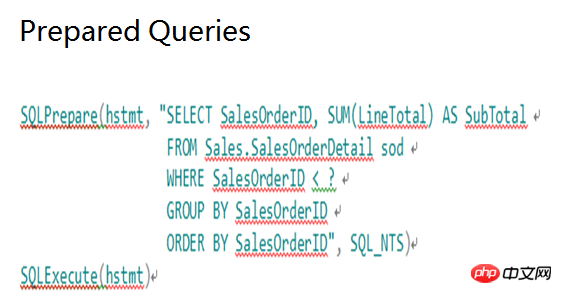

Parameterized query

Query SQL can be parameterized in the following ways:

sp_executesql

Prepared Queries

Stored procedures

Let me explain with a picture, haha.

Limit the number of JOIN

The number of table JOINs in a single SQL statement cannot exceed 5

Too many JOINs will cause the query analyzer to get the wrong execution plan

Too many JOINs consume a lot of money when compiling the execution plan

Limit the number of conditions in the IN clause

Including a very large number of values (thousands) in the IN clause may consume resources and return error 8623 or 8632. The number of conditions in the IN clause is required to be limited to 100.

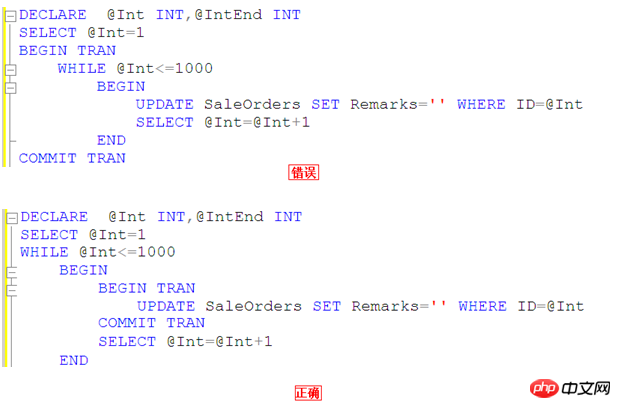

Try to avoid large transaction operations

Only start transactions when the data needs to be updated to reduce resource lock time

Add transaction exception capture preprocessing mechanism

The use of distributed transactions on the database is prohibited

Use pictures to explain

In other words, we should not commit tran after all 1,000 rows of data have been updated. Think about whether you are monopolizing resources when updating these 1,000 rows of data, causing other transactions to be unable to be processed.

Close the affected row count information and return

Display Set Nocount On in the SQL statement, cancel the return of affected row count information, and reduce network traffic

Unless necessary, SELECT statements must be added with NOLOCK

Unless necessary, try to make all select statements add NOLOCK

Specifies that dirty reads are allowed. Shared locks are not issued to prevent other transactions from modifying the data read by the current transaction, and exclusive locks set by other transactions will not prevent the current transaction from reading the locked data. Allowing dirty reads may result in more concurrent operations, but the cost is data modifications that will be rolled back by other transactions after reading. This could cause your transaction to error, show the user data that was never committed, or cause the user to see the record twice (or not see the record at all)

Use UNION ALL to replace UNION

Use UNION ALL to replace UNION

UNION will de-reorder the SQL result set and increase the consumption of CPU, memory, etc.

To query large amounts of data, use paging or TOP

Reasonably limit the number of record returns to avoid bottlenecks in IO and network bandwidth

Recursive query level restrictions

Use MAXRECURSION to prevent unreasonable recursive CTE from entering an infinite loop

Temporary tables and table variables

Use local variables to select a mean execution plan

In a stored procedure or query, accessing a table with very uneven data distribution often causes the stored procedure or query to use a suboptimal or even poor execution plan, causing problems such as High CPU and a large number of IO Reads. Use local variables to prevent wrong execution plans.

Using local variables, SQL does not know the value of this local variable when compiling. At this time, SQL will "guess" a return value based on the general distribution of data in the table. No matter what variable values the user substitutes when calling the stored procedure or statement, the generated plan is the same. Such a plan is generally more moderate, not necessarily the best plan, but generally not the worst plan

If the local variable in the query uses the inequality operator, the query analyzer uses a simple 30% calculation to estimate

Estimated Rows =(Total Rows * 30)/100

If the local variable in the query uses the equality operator, the query analyzer uses: precision * total number of table records to estimate

Estimated Rows = Density * Total Rows

Try to avoid using the OR operator

For the OR operator, a full table scan is usually used. Consider breaking it into multiple queries and implementing UNION/UNION ALL. Here you need to confirm that the query can go to the index and return a smaller result set

Add transaction exception handling mechanism

The application should handle accidents well and perform rollback in time.

Set connection properties “set xact_abort on”

The output column uses the two-part naming format

Two-stage naming format: table name.field name

In TSQL with a JOIN relationship, the field must indicate which table the field belongs to. Otherwise, after the table structure is changed in the future, program compatibility errors with Ambiguous column name may occur

Architecture design

Read and write separation

Schema decoupling

Data life cycle

Read and write separation

The separation of reading and writing is considered from the beginning of the design, even if the same library is read and written, it is conducive to rapid expansion

- ## According to the read characteristics, the reads are divided into real-time reads and deferrable reads, which correspond to the write library and the read library respectively

- Schema decoupling

Cross-database JOIN

is prohibited Data life cycle

Based on the frequency of data use, large tables are regularly archived in separate databases

Physical separation of main library/archive library

Log type tables should be partitioned or divided into tables

For large tables, partitioning is required. The partitioning operation divides the table and index into multiple partitions. Through partition switching, the old and new partitions can be quickly replaced, speeding up data cleaning, and significantly reducing IO resource consumption

Tables that are frequently written need to be partitioned or divided into tables

Self-Growth and Latch Lock

Latches are applied and controlled internally by SQL Server. Users have no way to intervene. They are used to ensure the consistency of the data structure in the memory. The lock level is page-level lock

The above is the detailed content of Collection of commonly used SQL Server specifications. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

What software is microsoft sql server?

Feb 28, 2023 pm 03:00 PM

What software is microsoft sql server?

Feb 28, 2023 pm 03:00 PM

Microsoft SQL Server is a relational database management system launched by Microsoft. It is a comprehensive database platform that uses integrated business intelligence (BI) tools to provide enterprise-level data management. It is easy to use, has good scalability, and has a high degree of integration with related software. High advantages. The SQL Server database engine provides more secure and reliable storage functions for relational data and structured data, allowing users to build and manage highly available and high-performance data applications for business.

SQL Server or MySQL? New research reveals the best database choices.

Sep 08, 2023 pm 04:34 PM

SQL Server or MySQL? New research reveals the best database choices.

Sep 08, 2023 pm 04:34 PM

SQLServer or MySQL? The latest research reveals the best database selection. In recent years, with the rapid development of the Internet and big data, database selection has become an important issue faced by enterprises and developers. Among many databases, SQL Server and MySQL, as the two most common and widely used relational databases, are highly controversial. So, between SQLServer and MySQL, which one should you choose? The latest research sheds light on this problem for us. First, let

PHP and SQL Server database development

Jun 20, 2023 pm 10:38 PM

PHP and SQL Server database development

Jun 20, 2023 pm 10:38 PM

With the popularity of the Internet, website and application development has become the main business of many companies and individuals. PHP and SQLServer database are two very important tools. PHP is a server-side scripting language that can be used to develop dynamic websites; SQL Server is a relational database management system developed by Microsoft and has a wide range of application scenarios. In this article, we will discuss the development of PHP and SQL Server, as well as their advantages, disadvantages and application methods. First, let's

How to connect to a Microsoft SQL Server database using PDO

Jul 29, 2023 pm 01:49 PM

How to connect to a Microsoft SQL Server database using PDO

Jul 29, 2023 pm 01:49 PM

Introduction to how to use PDO to connect to a Microsoft SQL Server database: PDO (PHPDataObjects) is a unified interface for accessing databases provided by PHP. It provides many advantages, such as implementing an abstraction layer of the database and making it easy to switch between different database types without modifying a large amount of code. This article will introduce how to use PDO to connect to a Microsoft SQL Server database and provide some related code examples. step

A brief analysis of five methods of connecting SQL Server with PHP

Mar 21, 2023 pm 04:32 PM

A brief analysis of five methods of connecting SQL Server with PHP

Mar 21, 2023 pm 04:32 PM

In web development, the combination of PHP and MySQL is very common. However, in some cases, we need to connect to other types of databases, such as SQL Server. In this article, we will cover five different ways to connect to SQL Server using PHP.

SQL Server vs. MySQL: Which database is more suitable for high availability architecture?

Sep 10, 2023 pm 01:39 PM

SQL Server vs. MySQL: Which database is more suitable for high availability architecture?

Sep 10, 2023 pm 01:39 PM

SQL Server vs. MySQL: Which database is more suitable for high availability architecture? In today's data-driven world, high availability is one of the necessities for building reliable and stable systems. As the core component of data storage and management, the database's high availability is crucial to the business operation of the enterprise. Among the many databases, SQLServer and MySQL are common choices. So in terms of high availability architecture, which database is more suitable? This article will compare the two and give some suggestions.

SQL Server vs. MySQL comparison: Which one is better for large-scale data processing?

Sep 09, 2023 am 09:36 AM

SQL Server vs. MySQL comparison: Which one is better for large-scale data processing?

Sep 09, 2023 am 09:36 AM

SQLServer and MySQL are currently two very popular relational database management systems (RDBMS). They are both powerful tools for storing and managing large-scale data. However, they have some differences in handling large-scale data. This article will compare SQL Server and MySQL, focusing on their suitability for large-scale data processing. First, let us understand the basic characteristics of SQLServer and MySQL. SQLServer is developed by Microsoft

SQL Server and MySQL compete, how to choose the best database solution?

Sep 10, 2023 am 08:07 AM

SQL Server and MySQL compete, how to choose the best database solution?

Sep 10, 2023 am 08:07 AM

With the continuous development of the Internet, database selection has become increasingly important. Among the many databases, SQLServer and MySQL are two high-profile options. SQLServer is a relational database management system developed by Microsoft, while MySQL is an open source relational database management system. So how to choose the best database solution between SQLServer and MySQL? First, we can compare these two databases in terms of performance. SQLServer is processing