Checking whether a single URL loads correctly is easy. But what if you need to check 2,000 URLs, or even more? This article shows a simple way to use Python to check whether URLs can be accessed normally. It may be a useful reference for anyone facing the same task.

Today the project manager came to me with a task: here are 2,000 URLs, check whether each one opens normally. Honestly, my first instinct was to refuse, because I knew it meant writing code. But I happened to have learned some Python, and Python makes this kind of job easy, so I chose it and started working out a plan:

1. First, put the 2,000 URLs in a txt file, one per line.

2. Use Python to read the URLs from the file one by one into a list.

3. Open each URL with a simulated browser, that is, a request that sends a browser-like User-Agent header.

4. If the access succeeds, print that the URL is fine; if it fails, print an error message.

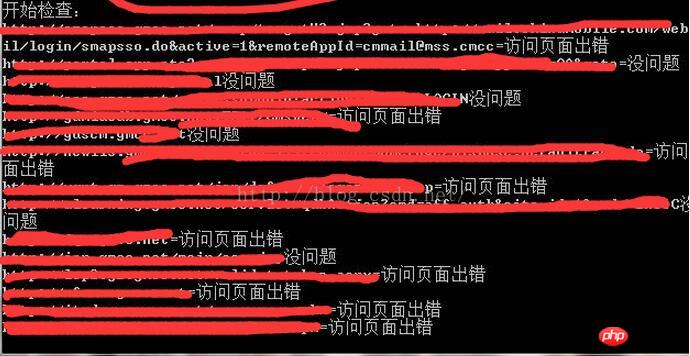

Here is the code, plain and simple. Because the URLs are private, the screenshot has been blurred.

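    import time
    import urllib.error
    import urllib.request

    # Build an opener that sends a browser-like User-Agent header.
    opener = urllib.request.build_opener()
    opener.addheaders = [('User-agent', 'Mozilla/49.0.2')]

    # Name of the file that holds the URLs, one per line; change it to your own.
    with open('test.txt') as file:
        # Strip the trailing newline from each line and skip blank lines.
        urls = [line.strip() for line in file if line.strip()]

    print(urls)
    print('Starting check:')

    for url in urls:
        try:
            opener.open(url)
            print(url + ' is OK')
        except urllib.error.HTTPError:
            # The server responded, but with an error status (e.g. 404 or 500).
            print(url + ' = error accessing page')
            time.sleep(2)
        except urllib.error.URLError:
            # The server could not be reached at all (DNS failure, refused connection).
            print(url + ' = error accessing page')
            time.sleep(2)
        # Short pause between requests.
        time.sleep(0.1)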
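With 2,000 URLs, checking them strictly one after another can take a long time, and because the script above sets no timeout, a server that never answers could stall the whole run. The following is a minimal sketch of one possible variation, not part of the original script: it adds a timeout, reports the HTTP status code, and checks several URLs at once with a thread pool. The names `check_url`, `check_all`, and the `open_func` parameter are hypothetical helpers introduced only for this illustration, and the default values (10 seconds, 20 workers) are arbitrary assumptions.

```python
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Same browser-like header as the script above.
HEADERS = {'User-Agent': 'Mozilla/49.0.2'}


def check_url(url, timeout=10, open_func=urllib.request.urlopen):
    """Return a (url, ok, detail) tuple for a single URL.

    open_func is a hypothetical hook so the function can be tried out
    without network access; by default it is urllib.request.urlopen.
    """
    try:
        request = urllib.request.Request(url, headers=HEADERS)
        with open_func(request, timeout=timeout) as response:
            return url, True, f'HTTP {response.status}'
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status such as 404 or 500.
        return url, False, f'HTTP {exc.code}'
    except (urllib.error.URLError, ValueError, OSError) as exc:
        # DNS failure, refused connection, timeout, or a malformed URL.
        return url, False, str(exc)


def check_all(urls, max_workers=20, **kwargs):
    """Check many URLs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda u: check_url(u, **kwargs), urls))
```

A possible way to use it is to read the URLs from the text file as before, call `check_all(urls)`, and print each `(url, ok, detail)` tuple. Keeping `max_workers` modest avoids hammering any single server with many simultaneous requests.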
