H5 live video broadcast

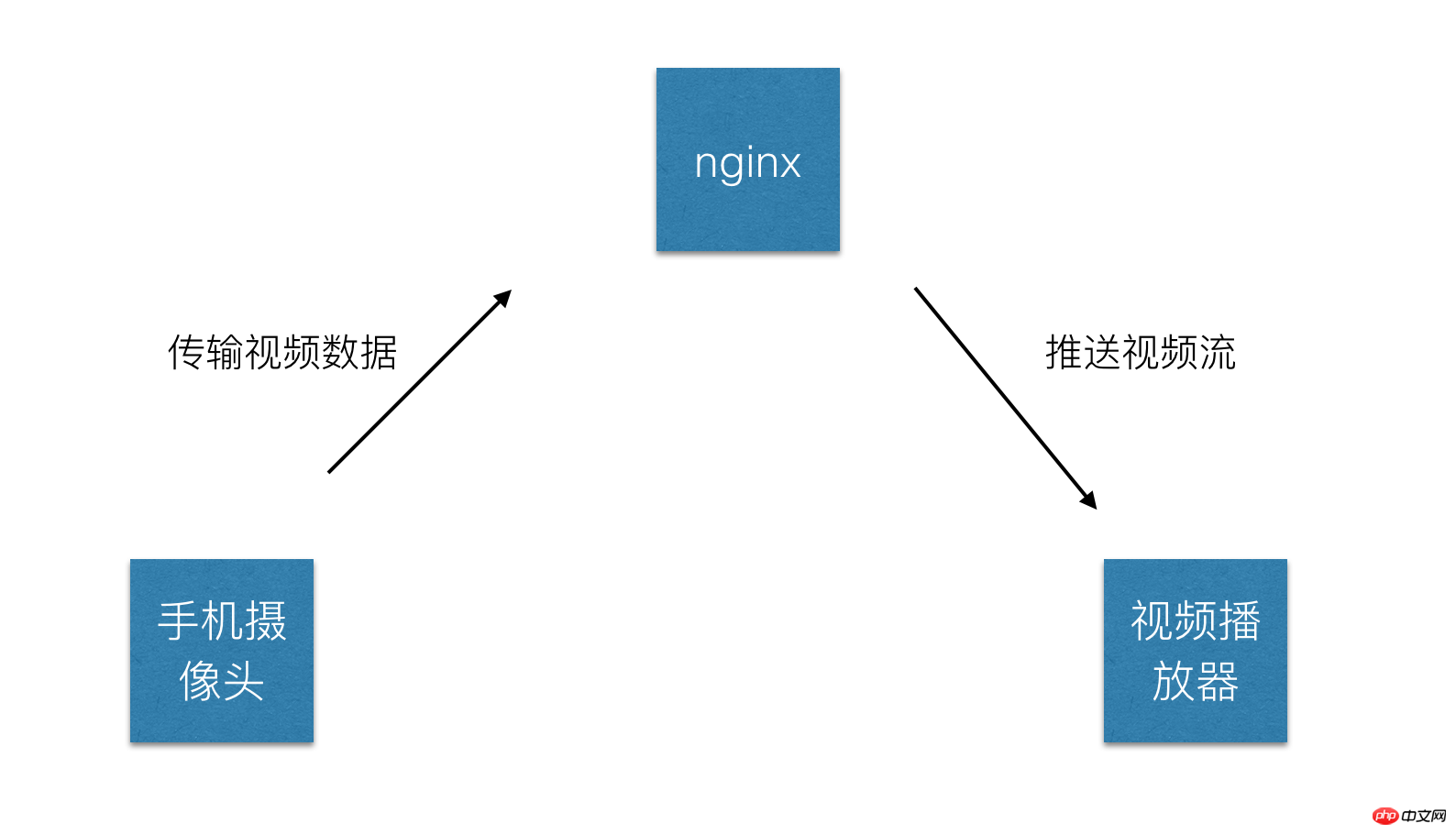

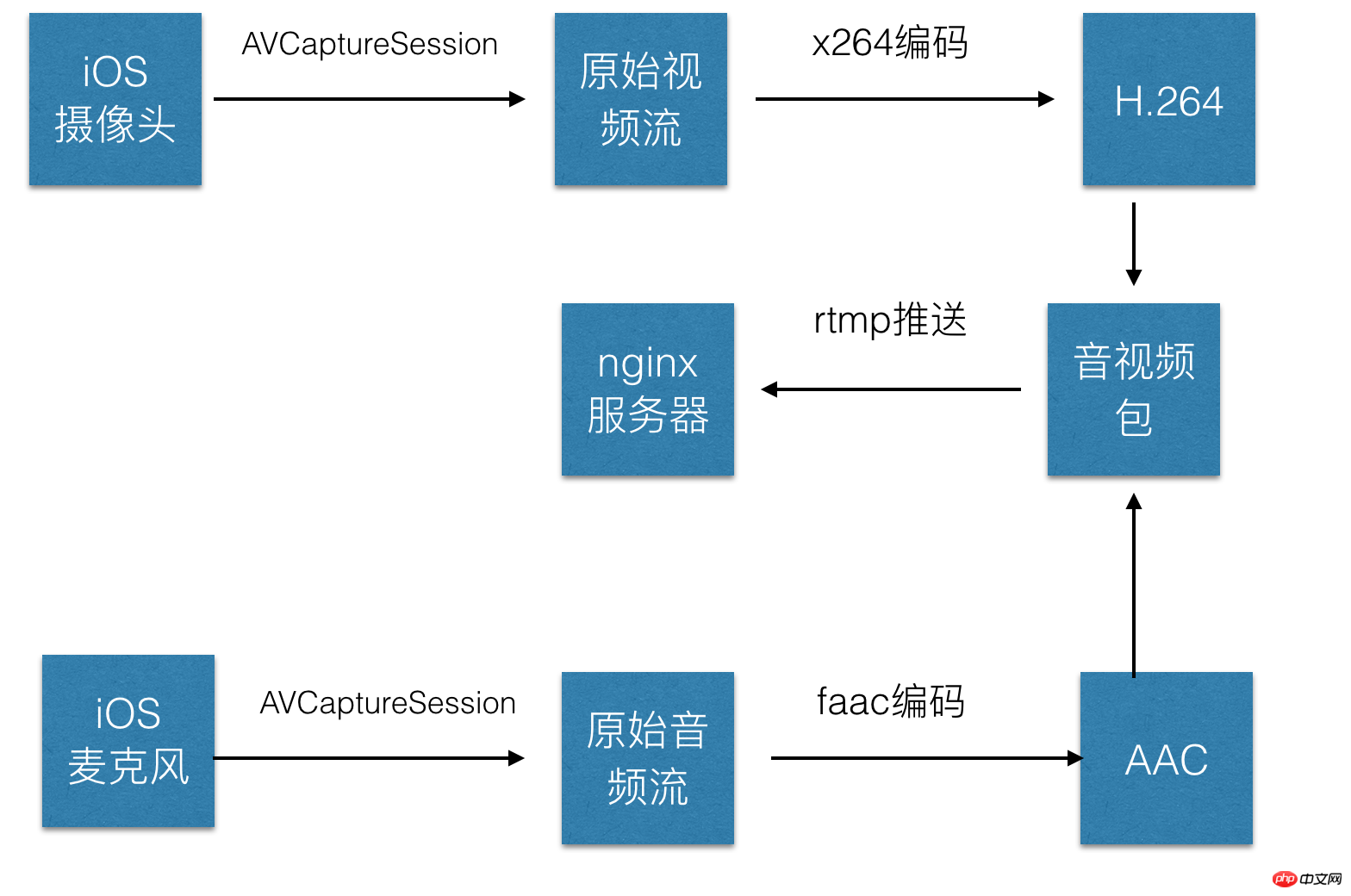

This article introduces the basic workflow and the main technical points of live video streaming, including but not limited to front-end technology.

1 Can H5 do live video streaming?

Yes. H5 (HTML5) has been popular for a long time, and it covers both sides of a live stream: video recording and video playback.

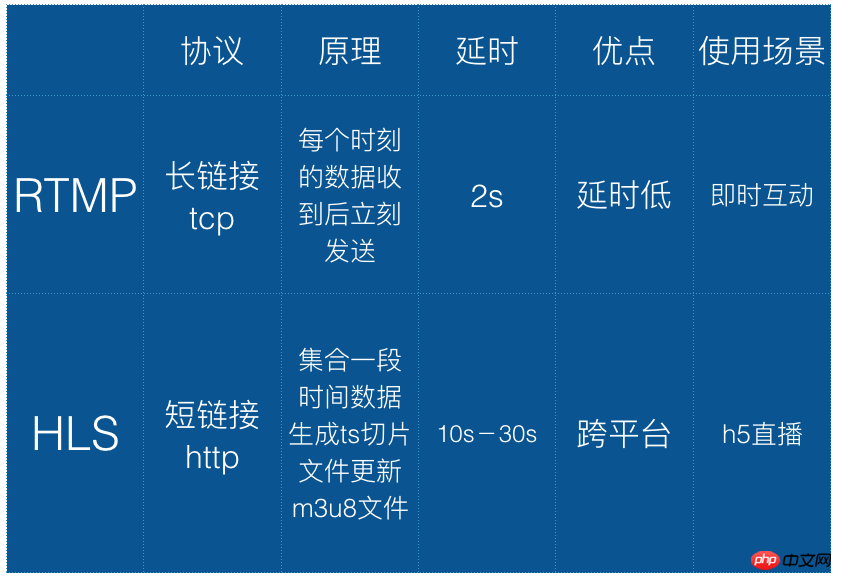

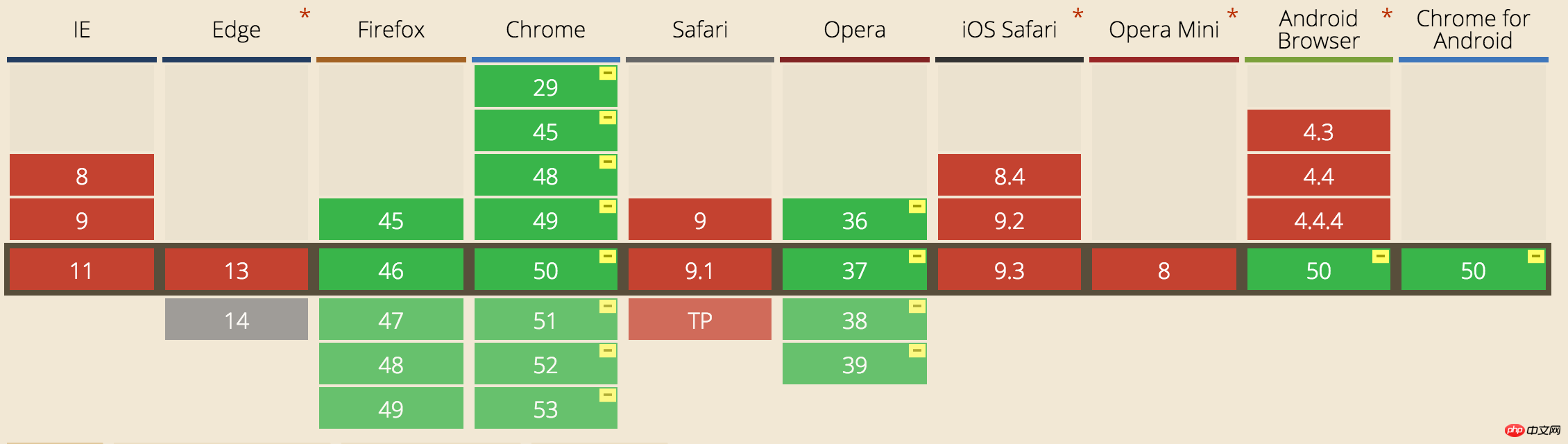

For video recording, you can use WebRTC (Web Real-Time Communication), a technology that lets web browsers carry real-time voice or video conversations. Its disadvantage is that it works well mainly on PCs: support in Chrome is good, but mobile support is not ideal.
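As a rough illustration of the recording side, the sketch below checks for the standard `navigator.mediaDevices.getUserMedia` capture API before requesting the camera and microphone. The names `NavigatorLike`, `supportsCapture`, and `startCapture` are hypothetical helpers introduced here, not part of the original article; the structural interfaces exist only so the logic can also be exercised outside a browser.

```typescript
// Structural subset of the browser's Navigator, so the sketch also runs in tests.
interface MediaDevicesLike<S> {
  getUserMedia(constraints: { video: boolean; audio: boolean }): Promise<S>;
}
interface NavigatorLike<S> {
  mediaDevices?: MediaDevicesLike<S>;
}

// True when the environment exposes the standard capture API.
function supportsCapture<S>(nav: NavigatorLike<S>): boolean {
  const devices = nav.mediaDevices;
  return !!devices && typeof devices.getUserMedia === "function";
}

// Requests camera and microphone access.
// Resolves to the stream, or to null when capture is unsupported or refused.
async function startCapture<S>(nav: NavigatorLike<S>): Promise<S | null> {
  const devices = nav.mediaDevices;
  if (!devices || typeof devices.getUserMedia !== "function") {
    return null;
  }
  try {
    return await devices.getUserMedia({ video: true, audio: true });
  } catch {
    // The user denied permission or no capture device is available.
    return null;
  }
}
```

In a browser, one would typically call `startCapture(navigator)` and, if the result is not null, assign it to a video element's `srcObject` to preview the recording. Returning null instead of throwing keeps the unsupported-browser case easy to handle on mobile.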

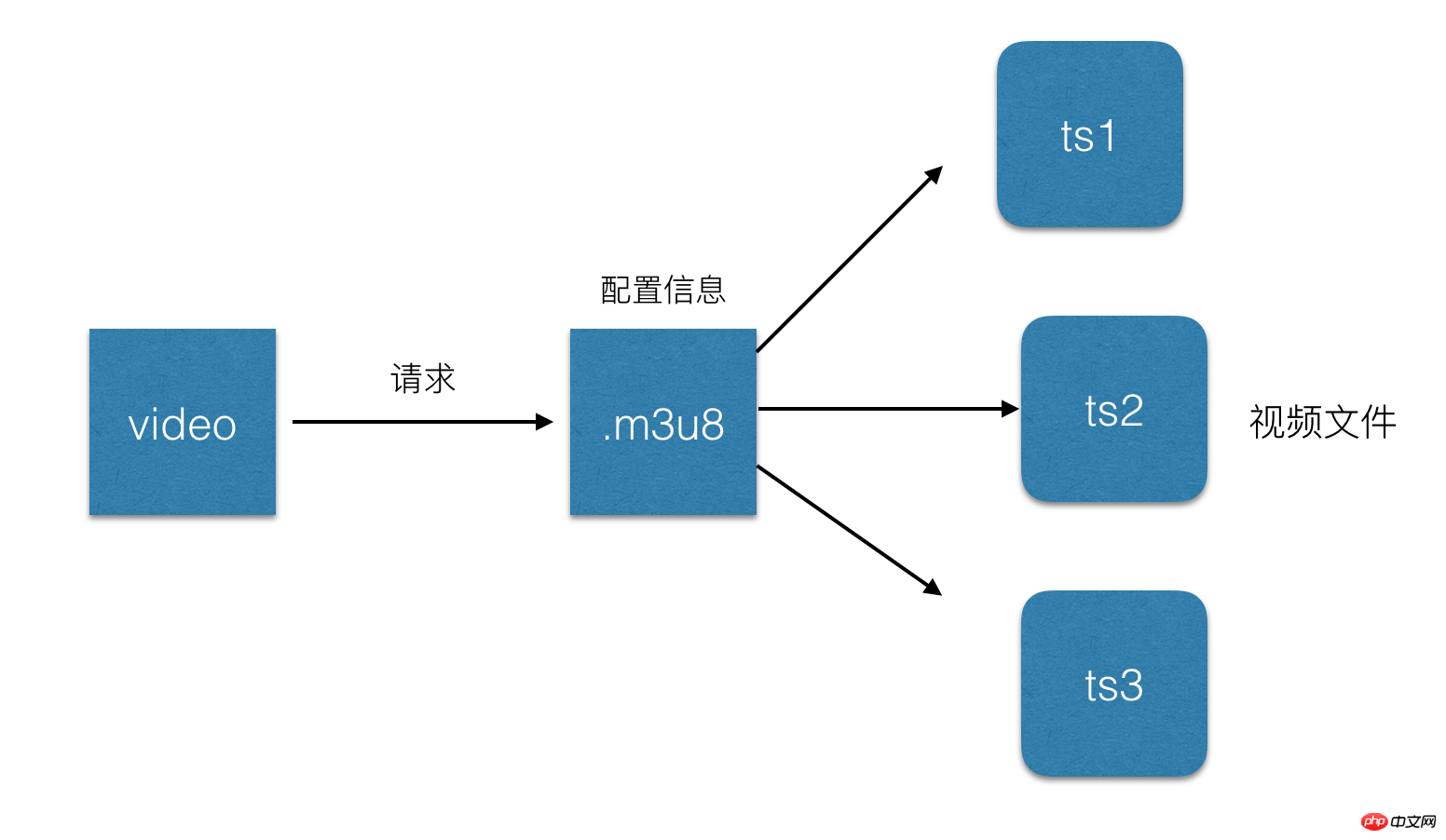

For video playback, you can use the HLS (HTTP Live Streaming) protocol to play the live stream. Both iOS and Android support this protocol natively. It is simple to configure and can be used directly with just the video tag.

WebRTC compatibility:

Playing an HLS video with the video tag:
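A minimal TypeScript sketch of this playback path, assuming the browser can play HLS natively: it asks the video element whether it accepts the HLS MIME type `application/vnd.apple.mpegurl` and, if so, points the element at the playlist. The `VideoLike` interface and the function names are hypothetical, introduced only for illustration.

```typescript
// Structural subset of HTMLVideoElement, so the logic can run outside a browser.
interface VideoLike {
  canPlayType(mimeType: string): string;
  src: string;
}

// MIME type registered for HLS playlists (.m3u8).
const HLS_MIME = "application/vnd.apple.mpegurl";

// canPlayType answers "", "maybe", or "probably";
// any non-empty answer means the browser claims native HLS support.
function canPlayHlsNatively(video: VideoLike): boolean {
  return video.canPlayType(HLS_MIME) !== "";
}

// Points the video element at the playlist when HLS is supported natively.
// Returns false and leaves the element untouched otherwise.
function attachHlsStream(video: VideoLike, playlistUrl: string): boolean {
  if (!canPlayHlsNatively(video)) {
    return false;
  }
  video.src = playlistUrl;
  return true;
}
```

In a page, this could be called as `attachHlsStream(document.querySelector("video")!, "https://example.com/live/stream.m3u8")`, where the URL is a placeholder. A false return value signals that the browser needs another playback path.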

The above is the detailed content of H5 live video broadcast. For more information, please follow other related articles on the PHP Chinese website!
