I recently started learning Python crawlers from this blog. The blogger uses Python 2.7, while I use Python 3.5. There are many incompatibilities between the two, but that doesn't matter: you can adapt the code yourself as you go.

We want to filter a website's content and keep only the parts that interest us. For example, you might want to pick out the pornographic pictures on site XX and pack them up to take away. Here we only do a simple implementation, using the jokes (plain text) on Baisibudejie as the example. We want to implement the following features:

Download several pages of jokes in a batch to a local file

Press a key to read the next joke

First, import the relevant urllib modules. In Python 3, this is written as follows:

    import urllib.request
    import urllib.parse
    import re

The re library provides regular expressions, which we will use later for matching.

The Baisibudejie joke page is url = 'http://www.budejie.com/text/1'; the number 1 at the end means this is the first page. The following code returns the content of the web page.

    req = urllib.request.Request(url)
    # Add headers so the request looks like it comes from a browser
    req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')

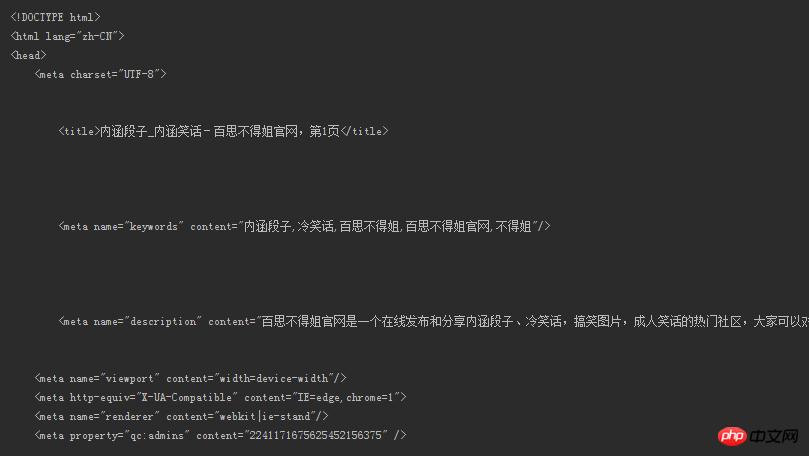

    response = urllib.request.urlopen(req)
    # Get the page content; note that it must be decoded with decode()
    html = response.read().decode('utf-8')
    print(html)

The content is as follows:

See it? But where are the jokes? Where are the jokes we wanted?!

Oh, by the way, this is how to check the headers.

Press F12, and then... look at the picture.
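The header that the script itself will send can also be inspected from Python before any request goes out. The sketch below is only an illustration: `build_request` and `USER_AGENT` are hypothetical names introduced here, while the URL and the User-Agent string are the ones used in this article. Nothing is fetched from the network.

```python
import urllib.request

# The User-Agent string used in this article (the constant name is made up here).
USER_AGENT = ('Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')


def build_request(url):
    """Hypothetical helper: build a Request that carries a browser-like User-Agent."""
    req = urllib.request.Request(url)
    req.add_header('User-Agent', USER_AGENT)
    return req


req = build_request('http://www.budejie.com/text/1')
# urllib stores header names capitalized, so the key to look up is 'User-agent'.
print(req.get_header('User-agent'))
print(req.full_url)
```

Building the request does not open a connection, so this is a cheap way to confirm the header is set before calling urlopen().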

Once the page is filtered down to content an ordinary person can read (who could read it with the HTML tags still in it?), the jokes have been extracted successfully. To do this, we need a fixed pattern to match against the entire page content and return the pieces that match it. We use regular expressions, which are powerful, for the matching. The relevant syntax can be found here.

For the page in this example, let's first look at where the joke we need sits in the page source.

You can see that the joke is wrapped in a tag pair like <div class="j-r-list-c-desc">(the content we want)</div>, so we only need to write a matching rule to extract it! As the picture above shows, there is a lot of whitespace before and after the joke text, and the pattern has to match that too.

    pattern = re.compile(r'<div class="j-r-list-c-desc">\s+(.*)\s+</div>')
    result = re.findall(pattern, html)

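The rule is created with the compile function of the re library.

\s+ matches one or more whitespace characters (spaces, tabs, newlines).

. matches any character except the newline \n.

(.*) captures the joke text, and that captured group is what re.findall() returns.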
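To see how these pieces behave together, here is a small offline sketch that runs the same pattern on a hand-written HTML fragment. The fragment is made up for illustration and only imitates the structure described above; the real page contains much more markup.

```python
import re

# Hand-written sample that imitates the page structure (an assumption, not real site output).
sample_html = '''
<div class="j-r-list-c-desc">
    First joke, line one.<br />First joke, line two.
</div>
<div class="j-r-list-c-desc">
    Second joke.
</div>
'''

pattern = re.compile(r'<div class="j-r-list-c-desc">\s+(.*)\s+</div>')

# findall() returns only the text captured by the (.*) group for each match.
jokes = re.findall(pattern, sample_html)
for joke in jokes:
    print(repr(joke))
```

Because . does not match a newline, each capture stays on a single line of the source, and the two \s+ parts absorb the indentation and line breaks around it.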

Now that we have the match results, let's take a look.

Bingo! They were extracted, right?!

But we find some annoying <br /> tags inside. No matter, a few lines of code take care of them. I won't show the output after removing them here; just use your imagination, haha.

    for each in content:
        # If a joke contains <br />
        if '<br />' in each:
            # Replace it with a newline and print the result
            new_each = re.sub(r'<br />', '\n', each)
            print(new_each)
        # Otherwise print it as it is
        else:
            print(each)

Here content is the list returned by re.findall().
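As a possible simplification (not the approach used in this article), the membership test can be dropped, because replacing a substring that is absent leaves the string unchanged. A minimal sketch, where `clean_joke` is a hypothetical helper name:

```python
def clean_joke(text):
    """Hypothetical helper: turn every <br /> tag into a newline."""
    # str.replace() is a no-op when '<br />' does not occur, so no if/else is needed.
    return text.replace('<br />', '\n')


with_break = clean_joke('Line one<br />line two')
without_break = clean_joke('No tags here')
print(with_break)
print(without_break)
```

For a fixed literal such as <br />, str.replace() is enough; re.sub() becomes useful only if the tag can appear in several spellings.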

So far, we have successfully fetched the jokes we want to read! What if you want to download them to a local file?

You can define a save() function. The num parameter is chosen by the user; even downloading the latest 100 pages is fine! Some variables used here have not been introduced yet; the full source code is given at the end.

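    # num is the number of pages to fetch
    def save(num):
        # Open a text file for writing and store the fetched list of jokes in it
        with open('a.txt', 'w', encoding='utf-8') as f:
            text = get_content(num)
            # Same as above: replace <br /> with a newline
            for each in text:
                if '<br />' in each:
                    new_each = re.sub(r'<br />', '\n', each)
                    # End each joke with a newline so the next one starts on its own line
                    f.write(new_each + '\n')
                else:
                    f.write(str(each) + '\n')

After downloading to the local file, the result looks like the figure below.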
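The saving step can also be tried without touching the network. The sketch below is an assumption-laden variant, not the save() above: `save_jokes` is a hypothetical function that takes an already fetched list and a file path, and the sample jokes are made up.

```python
import os
import tempfile


def save_jokes(jokes, path):
    """Hypothetical variant of save(): write a list of jokes to path, one after another."""
    with open(path, 'w', encoding='utf-8') as f:
        for joke in jokes:
            # Turn <br /> into a newline and end every joke with a newline.
            f.write(joke.replace('<br />', '\n') + '\n')


sample = ['Line one<br />line two', 'A single-line joke']

# Write into a temporary directory so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'a.txt')
    save_jokes(sample, path)
    with open(path, encoding='utf-8') as f:
        saved = f.read()

print(saved)
```

Passing the list in, rather than fetching inside the function, keeps the file-writing logic separate from the download and makes it easy to check by hand.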

That is a lot to look at all at once, and we would rather read the jokes one by one. The program moves to the next joke on a key press (because the code uses input(), the key press has to be followed by Enter) and does not end until the last joke has been read. You can also exit at any time through an exit key, here the q key. The entire code is given here.

    import urllib.request
    import urllib.parse
    import re

    pattern = re.compile(r'<div class="j-r-list-c-desc">\s+(.*)\s+</div>')


    # Return the content of the given web page
    def open_url(url):
        req = urllib.request.Request(url)
        req.add_header('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                       '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36')
        response = urllib.request.urlopen(req)
        html = response.read().decode('utf-8')
        return html


    # num is chosen by the user; returns a list of the jokes from all pages
    def get_content(num):
        # List that holds the jokes
        text_list = []
        for page in range(1, int(num)):
            address = 'http://www.budejie.com/text/' + str(page)
            html = open_url(address)
            result = re.findall(pattern, html)
            # Each page's result is a list; add its items to text_list
            for each in result:
                text_list.append(each)
        return text_list


    # num is the number of pages to fetch
    def save(num):
        # Open a text file for writing and store the fetched list of jokes in it
        with open('a.txt', 'w', encoding='utf-8') as f:
            text = get_content(num)
            # Same as above: replace <br /> with a newline
            for each in text:
                if '<br />' in each:
                    new_each = re.sub(r'<br />', '\n', each)
                    f.write(new_each + '\n')
                else:
                    f.write(str(each) + '\n')


    if __name__ == '__main__':
        print('Press q at any time while reading to quit')
        number = int(input('How many pages do you want to read: '))
        content = get_content(number + 1)
        for each in content:
            if '<br />' in each:
                new_each = re.sub(r'<br />', '\n', each)
                print(new_each)
            else:
                print(each)
            # Wait for user input
            user_input = input()
            # q in either case quits
            if user_input == 'q' or user_input == 'Q':
                break

Here is a demonstration; the result looks like this.
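The reading loop can also be exercised without a keyboard or a network connection. In the sketch below, `read_jokes` and its `read_key` and `show` parameters are hypothetical names introduced for illustration; they default to input() and print(), and the demo replaces them with scripted stand-ins.

```python
def read_jokes(jokes, read_key=input, show=print):
    """Hypothetical sketch: show jokes one at a time, stop on 'q' or 'Q'.

    Returns the number of jokes that were shown.
    """
    shown = 0
    for joke in jokes:
        show(joke.replace('<br />', '\n'))
        shown += 1
        # Any other input continues to the next joke.
        if read_key().strip().lower() == 'q':
            break
    return shown


# Scripted demo: the "user" presses Enter once, then types q.
answers = iter(['', 'q', ''])
printed = []
count = read_jokes(['first', 'second', 'third'],
                   read_key=lambda: next(answers),
                   show=printed.append)
print(count, printed)
```

Calling read_jokes(content) with the defaults behaves like an interactive session, waiting for Enter after each joke.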

Although the program is not very useful, as a beginner I am still quite satisfied with it. If you are interested, you can dig deeper! There is much more to crawlers than this, and you will learn more advanced techniques later on.

by @sunhaiyu

2016.8.15
