Sequence labeling: handwritten lowercase letter OCR dataset, bidirectional RNN

Sequence labeling means predicting a category for every frame of the input sequence. The example task here is OCR (optical character recognition).

The OCR dataset (http://ai.stanford.edu/~btaskar/ocr/) was collected by Rob Kassel of the MIT Spoken Language Systems Group and preprocessed by Ben Taskar of the Stanford University Artificial Intelligence Laboratory. It contains a large number of individual handwritten lowercase letters, and each sample is a 16x8-pixel binary image. The letters are chained into sequences, and each sequence corresponds to a word. There are 6,800 words, none longer than 14 letters. The file is gzip-compressed, tab-delimited text, so Python's csv module can read it directly. Each line of the file describes one normalized letter: its ID number, label, pixel values, the ID of the next letter, and so on.
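
A minimal sketch of reading the file with gzip and csv follows. The column layout (letter ID, label, next-letter ID, word ID, position, fold, then 128 pixel values) is an assumption here and should be verified against the dataset page; parse_rows and read_gzip_rows are hypothetical helper names, not the original code.

```python
import csv
import gzip
import io

# Assumed column layout of each tab-separated row (verify against the dataset page):
#   0: letter ID, 1: letter label, 2: next letter ID, 3: word ID,
#   4: position in word, 5: cross-validation fold, 6..133: 16x8 binary pixels
PIXEL_START = 6
NUM_PIXELS = 16 * 8


def parse_rows(text_file):
    """Parse tab-delimited rows into (letter_id, label, next_id, pixels) tuples."""
    rows = []
    for line in csv.reader(text_file, delimiter="\t"):
        if not line:
            continue  # ignore empty lines
        pixels = [int(v) for v in line[PIXEL_START:PIXEL_START + NUM_PIXELS]]
        rows.append((int(line[0]), line[1], int(line[2]), pixels))
    return rows


def read_gzip_rows(path):
    """Open the gzip-compressed file in text mode and parse every row."""
    with gzip.open(path, "rt", newline="") as text_file:
        return parse_rows(text_file)


# Tiny in-memory example: an all-zero letter 'a' (ID 1) whose next letter has ID 2.
demo_line = "\t".join(["1", "a", "2", "1", "1", "0"] + ["0"] * NUM_PIXELS) + "\n"
demo_rows = parse_rows(io.StringIO(demo_line))
```

Separating parsing from file opening lets the same parser run on an in-memory sample, as in the last two lines, or on the real gzip file via read_gzip_rows.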

Sort the rows by their ID values so that the letters of each word are read in the correct order. Keep collecting letters until the next-ID field is not set, then start reading a new sequence. After reading the target letters and the pixel data, pad each sequence with zero images so that everything fits into two large NumPy arrays: one holding the pixel data of all words and one holding all target letters.
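
The grouping and padding steps can be sketched in plain Python. The sketch assumes rows shaped like (letter_id, label, next_id, pixels) and that an unset next-letter field is encoded as -1; group_words and pad_words are hypothetical names, not the original implementation.

```python
def group_words(rows):
    """Sort rows by letter ID and chain consecutive letters into words.

    rows: iterable of (letter_id, label, next_id, pixels) tuples. A next_id
    of -1 is assumed to mean "no next letter", i.e. the word ends here.
    """
    words_data, words_target = [], []
    start_new_word = True
    for letter_id, label, next_id, pixels in sorted(rows, key=lambda r: r[0]):
        if start_new_word:
            words_data.append([])
            words_target.append([])
        words_data[-1].append(pixels)
        words_target[-1].append(label)
        start_new_word = next_id == -1  # unset next ID closes the current word
    return words_data, words_target


def pad_words(words_data, words_target, num_pixels=128):
    """Pad every word to the longest length with all-zero images and '' labels."""
    max_len = max(len(word) for word in words_target)
    zero_image = [0] * num_pixels  # shared (never mutated) padding frame
    data = [word + [zero_image] * (max_len - len(word)) for word in words_data]
    target = [labels + [""] * (max_len - len(labels)) for labels in words_target]
    return data, target
```

Passing the padded lists to np.array would give the two large arrays described above, with shapes (num_words, max_length, 128) for the pixels and (num_words, max_length) for the labels.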

Share the softmax layer across time steps. The data and target arrays contain sequences with one image frame per target letter. The RNN is extended by adding a softmax classifier to its output for each letter, so the classifier evaluates predictions on every frame of data rather than on the sequence as a whole. The sequence lengths are computed as well. There are two ways to add a softmax layer to all frames: attach a separate classifier to each frame, or let all frames share the same classifier. With a shared classifier, the same weights are trained on every letter of every training word, so they are adjusted more often during training. The weight matrix of a fully connected layer has shape in_size x out_size and is applied to an input of shape batch_size x in_size. Here, however, it must be applied along two input dimensions, batch_size and sequence_steps. The trick is to flatten the input (the RNN output activations) into shape (batch_size * sequence_steps) x in_size, so that the weight matrix sees one larger batch of data, and then to unflatten the result.
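
The flattening trick can be illustrated in NumPy. This is a sketch of the idea rather than the original TensorFlow code (which would use tf.reshape, tf.matmul and tf.nn.softmax); shared_softmax and its argument names are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def shared_softmax(outputs, weight, bias):
    """Apply one softmax classifier to every time step.

    outputs: RNN activations, shape (batch_size, sequence_steps, in_size)
    weight:  shared weights, shape (in_size, out_size)
    bias:    shared bias, shape (out_size,)
    """
    batch_size, steps, in_size = outputs.shape
    flat = outputs.reshape(batch_size * steps, in_size)  # frames become one big batch
    probs = softmax(flat @ weight + bias)                # same weights for every frame
    return probs.reshape(batch_size, steps, -1)          # unflatten back to sequences
```

Because the reshape only merges the batch and time axes, every frame is multiplied by the same weight matrix, which is exactly what sharing the classifier means.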

For the cost function, every frame of the sequence has a prediction-target pair, and the per-frame costs are averaged over the corresponding dimension. tf.reduce_mean cannot be used for this, because it normalizes by the tensor length (the maximum sequence length), which includes the padded frames. Instead, the cost must be normalized by the actual sequence length: call tf.reduce_sum and divide manually to compute the mean.
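
A NumPy sketch of the length-normalized cost follows. It assumes that padded frames have all-zero one-hot targets, so a frame counts as real when the maximum absolute value of its target vector is non-zero. The function names and the eps guard against log(0) are illustrative additions; np.sum along an axis plays the role of tf.reduce_sum.

```python
import numpy as np


def sequence_length(target):
    """Count real frames per word; padded frames have an all-zero target."""
    used = np.sign(np.abs(target).max(axis=2))  # 1 for real frames, 0 for padding
    return used.sum(axis=1)


def masked_cross_entropy(prediction, target, eps=1e-12):
    """Cross-entropy averaged over each word's actual length, then over the batch.

    prediction, target: shape (batch_size, max_length, num_classes)
    """
    frame_cost = -np.sum(target * np.log(prediction + eps), axis=2)  # per-frame cost
    mask = np.sign(np.abs(target).max(axis=2))                        # zero out padding
    frame_cost = frame_cost * mask
    per_word = frame_cost.sum(axis=1) / sequence_length(target)       # sum, then divide
    return per_word.mean()                                            # mean over words
```

Dividing by the real length means a short word is not penalized or rewarded for the padding it carries; a plain mean over max_length would shrink the cost of short words.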

The error function follows the same pattern: tf.argmax is taken along axis 2 rather than axis 1, the padded frames are masked out, and the mean is computed over the actual sequence length. Finally, tf.reduce_mean averages the result over all words in the batch.
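
The error rate can be sketched the same way, again in NumPy and under the same all-zero-padding assumption; masked_error is a hypothetical name.

```python
import numpy as np


def masked_error(prediction, target):
    """Fraction of wrongly classified letters per word, averaged over the batch.

    prediction, target: shape (batch_size, max_length, num_classes)
    """
    # argmax over axis 2 picks the class of each frame
    mistakes = (prediction.argmax(axis=2) != target.argmax(axis=2)).astype(float)
    mask = np.sign(np.abs(target).max(axis=2))  # 1 for real frames, 0 for padding
    mistakes = mistakes * mask                  # ignore padded frames
    per_word = mistakes.sum(axis=1) / mask.sum(axis=1)
    return per_word.mean()
```

Without the mask, padded frames, whose all-zero target has argmax 0, would count as mistakes whenever the prediction there points to another class.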

Thanks to TensorFlow's automatic differentiation, the same optimization operation used for sequence classification can be reused; only the new cost function needs to be substituted. All RNN gradients are clipped to keep training from diverging.
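
The text does not say which clipping method is used. The sketch below assumes element-wise clipping by value (tf.clip_by_value is TensorFlow's op for this) applied to a list of (gradient, variable) pairs, the format returned by a TensorFlow 1.x optimizer's compute_gradients. Plain Python lists stand in for tensors, and limit is a hypothetical hyperparameter.

```python
def clip_gradients(grads_and_vars, limit):
    """Clip every gradient element to [-limit, limit], keeping its variable.

    grads_and_vars: list of (gradient, variable) pairs, where each gradient
    is a list of floats standing in for a tensor.
    """
    clipped = []
    for grad, var in grads_and_vars:
        clipped.append(([max(-limit, min(limit, g)) for g in grad], var))
    return clipped
```

In TensorFlow the clipped pairs would then be handed to the optimizer's apply_gradients, so the rest of the optimization step stays unchanged.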

To train the model, get_dataset downloads the handwriting images, preprocesses them, and encodes the lowercase letters as one-hot vectors. The data is then randomly shuffled and split into a training set and a test set.
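
One-hot encoding, shuffling and splitting can be sketched with the standard library. The helper names, the all-zero encoding of padding labels, and the 0.8 training fraction are assumptions for illustration, not values taken from the original code.

```python
import random
import string


def one_hot_letter(letter):
    """26-dimensional one-hot vector for a lowercase letter; '' (padding) is all zeros."""
    vector = [0] * len(string.ascii_lowercase)
    if letter:
        vector[string.ascii_lowercase.index(letter)] = 1
    return vector


def shuffle_split(data, target, train_fraction=0.8, seed=None):
    """Shuffle (data, target) pairs together, then split into train and test sets."""
    indices = list(range(len(data)))
    random.Random(seed).shuffle(indices)  # one permutation keeps pairs aligned
    split = int(train_fraction * len(indices))
    train_idx, test_idx = indices[:split], indices[split:]
    train = ([data[i] for i in train_idx], [target[i] for i in train_idx])
    test = ([data[i] for i in test_idx], [target[i] for i in test_idx])
    return train, test
```

Shuffling one index list, rather than the two lists separately, keeps every image sequence paired with its labels. Encoding padding as an all-zero vector is what lets the cost and error functions detect padded frames.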

Adjacent letters of a word depend on each other (they share mutual information), and the RNN stores all the input it has seen so far for a word in its hidden activations. When classifying the first few letters, however, the network has seen little input from which to infer this additional information. A bidirectional RNN overcomes this shortcoming.

Two RNNs observe the input sequence: one reads the word from the left end in the usual order, and the other reads it from the right end in reverse order. This yields two output activations for each time step, which are concatenated before being fed to the shared softmax layer. The classifier can thus draw on information from the whole word for every letter. TensorFlow provides an implementation as tf.models.rnn.bidirectional_rnn.

To implement the bidirectional RNN, the prediction property is split into two functions so that each one focuses on less. The _shared_softmax function infers the input size from the tensor passed in as data. Reusing the flattening trick described earlier, it shares the same softmax layer across all time steps. rnn.dynamic_rnn creates the two RNNs.

Reversing the sequence is easier than implementing a new RNN operation that runs backward. The tf.reverse_sequence function reverses the first sequence_lengths frames of each sequence in the frame data and leaves the padding in place. Nodes in the dataflow graph have names. The scope parameter is the variable scope name for rnn_dynamic_cell, and its default value is RNN. Because the two RNNs have different parameters, they need different scopes.
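
The behavior of tf.reverse_sequence can be mimicked in NumPy to show why padding is not a problem: only the first lengths[i] frames of sequence i are reversed, so the zero frames stay at the end. This is an illustrative re-implementation, not TensorFlow's code; in TensorFlow the time axis is passed as seq_axis (seq_dim in older releases).

```python
import numpy as np


def reverse_sequence(frames, lengths):
    """Reverse the first lengths[i] frames of each sequence i; padding stays put.

    frames:  array of shape (batch_size, max_length, ...)
    lengths: actual length of each sequence, shape (batch_size,)
    """
    out = frames.copy()
    for i, n in enumerate(lengths):
        n = int(n)
        out[i, :n] = frames[i, :n][::-1]  # reverse only the real frames
    return out
```

Applying the function twice with the same lengths restores the original input, which is why the backward RNN's output can be reversed again to line it up with the forward output.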

The reversed sequence is fed into the backward RNN, and that network's output is reversed again so that it lines up with the forward output. The two tensors are then concatenated along the dimension of the RNN neuron outputs and returned. The bidirectional RNN model performs better than the unidirectional one.
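
Putting the pieces together, the sketch below builds a bidirectional layer from two independent toy RNNs in NumPy. simple_rnn is a hypothetical tanh RNN standing in for rnn.dynamic_rnn, and each direction has its own weights, which mirrors why the TensorFlow version needs two variable scopes.

```python
import numpy as np


def reverse_sequence(frames, lengths):
    """Reverse the first lengths[i] frames of each sequence i; padding stays put."""
    out = frames.copy()
    for i, n in enumerate(lengths):
        out[i, :int(n)] = frames[i, :int(n)][::-1]
    return out


def simple_rnn(x, w_in, w_rec):
    """Toy tanh RNN (hypothetical stand-in for rnn.dynamic_rnn).

    x: (batch, steps, in_size); returns hidden states (batch, steps, hidden).
    """
    batch, steps, _ = x.shape
    h = np.zeros((batch, w_rec.shape[0]))
    outputs = []
    for t in range(steps):
        h = np.tanh(x[:, t] @ w_in + h @ w_rec)
        outputs.append(h)
    return np.stack(outputs, axis=1)


def bidirectional(x, lengths, fw_params, bw_params):
    """Concatenate forward outputs with re-aligned backward outputs."""
    forward = simple_rnn(x, *fw_params)
    backward = simple_rnn(reverse_sequence(x, lengths), *bw_params)
    backward = reverse_sequence(backward, lengths)      # align with forward steps
    return np.concatenate([forward, backward], axis=2)  # (batch, steps, 2 * hidden)
```

The toy RNN also runs over padded frames. Because the padding sits after the real frames in both directions, it cannot affect the outputs for real letters, and the padded positions are masked out by the cost and error functions in any case.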

Welcome to add me on WeChat to communicate: qingxingfengzi

me My wife Zhang Xingqing’s WeChat public account: qingxingfengzigz

The above is the detailed content of Sequence annotation, handwritten lowercase letters OCR data set, bidirectional RNN. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1393

1393

52

52

1207

1207

24

24

How to delete Xiaohongshu notes

Mar 21, 2024 pm 08:12 PM

How to delete Xiaohongshu notes

Mar 21, 2024 pm 08:12 PM

How to delete Xiaohongshu notes? Notes can be edited in the Xiaohongshu APP. Most users don’t know how to delete Xiaohongshu notes. Next, the editor brings users pictures and texts on how to delete Xiaohongshu notes. Tutorial, interested users come and take a look! Xiaohongshu usage tutorial How to delete Xiaohongshu notes 1. First open the Xiaohongshu APP and enter the main page, select [Me] in the lower right corner to enter the special area; 2. Then in the My area, click on the note page shown in the picture below , select the note you want to delete; 3. Enter the note page, click [three dots] in the upper right corner; 4. Finally, the function bar will expand at the bottom, click [Delete] to complete.

Learn to completely uninstall pip and use Python more efficiently

Jan 16, 2024 am 09:01 AM

Learn to completely uninstall pip and use Python more efficiently

Jan 16, 2024 am 09:01 AM

No more need for pip? Come and learn how to uninstall pip effectively! Introduction: pip is one of Python's package management tools, which can easily install, upgrade and uninstall Python packages. However, sometimes we may need to uninstall pip, perhaps because we wish to use another package management tool, or because we need to completely clear the Python environment. This article will explain how to uninstall pip efficiently and provide specific code examples. 1. How to uninstall pip The following will introduce two common methods of uninstalling pip.

What should I do if the notes I posted on Xiaohongshu are missing? What's the reason why the notes it just sent can't be found?

Mar 21, 2024 pm 09:30 PM

What should I do if the notes I posted on Xiaohongshu are missing? What's the reason why the notes it just sent can't be found?

Mar 21, 2024 pm 09:30 PM

As a Xiaohongshu user, we have all encountered the situation where published notes suddenly disappeared, which is undoubtedly confusing and worrying. In this case, what should we do? This article will focus on the topic of "What to do if the notes published by Xiaohongshu are missing" and give you a detailed answer. 1. What should I do if the notes published by Xiaohongshu are missing? First, don't panic. If you find that your notes are missing, staying calm is key and don't panic. This may be caused by platform system failure or operational errors. Checking release records is easy. Just open the Xiaohongshu App and click "Me" → "Publish" → "All Publications" to view your own publishing records. Here you can easily find previously published notes. 3.Repost. If found

How to add product links in notes in Xiaohongshu Tutorial on adding product links in notes in Xiaohongshu

Mar 12, 2024 am 10:40 AM

How to add product links in notes in Xiaohongshu Tutorial on adding product links in notes in Xiaohongshu

Mar 12, 2024 am 10:40 AM

How to add product links in notes in Xiaohongshu? In the Xiaohongshu app, users can not only browse various contents but also shop, so there is a lot of content about shopping recommendations and good product sharing in this app. If If you are an expert on this app, you can also share some shopping experiences, find merchants for cooperation, add links in notes, etc. Many people are willing to use this app for shopping, because it is not only convenient, but also has many Experts will make some recommendations. You can browse interesting content and see if there are any clothing products that suit you. Let’s take a look at how to add product links to notes! How to add product links to Xiaohongshu Notes Open the app on the desktop of your mobile phone. Click on the app homepage

A deep dive into matplotlib's colormap

Jan 09, 2024 pm 03:51 PM

A deep dive into matplotlib's colormap

Jan 09, 2024 pm 03:51 PM

To learn more about the matplotlib color table, you need specific code examples 1. Introduction matplotlib is a powerful Python drawing library. It provides a rich set of drawing functions and tools that can be used to create various types of charts. The colormap (colormap) is an important concept in matplotlib, which determines the color scheme of the chart. In-depth study of the matplotlib color table will help us better master the drawing functions of matplotlib and make drawings more convenient.

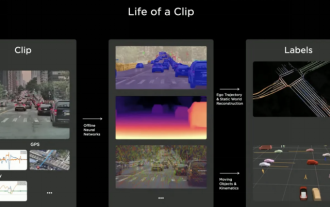

Will the autonomous driving annotation industry be subverted by the world model in 2024?

Mar 01, 2024 pm 10:37 PM

Will the autonomous driving annotation industry be subverted by the world model in 2024?

Mar 01, 2024 pm 10:37 PM

1. Problems faced by data annotation (especially based on BEV tasks) With the rise of BEV transformer-based tasks, the dependence on data has become heavier and heavier, and the annotation based on BEV tasks has also become more and more complex. important. At present, whether it is 2D-3D joint obstacle annotation, lane line annotation based on reconstructed point cloud clips or Occpuancy task annotation, it is still too expensive (compared with 2D annotation tasks, it is much more expensive). Of course, there are also many semi-automatic or automated annotation studies based on large models in the industry. On the other hand, the data collection cycle for autonomous driving is too long and involves a series of data compliance issues. For example, you want to capture the field of a flatbed truck across the camera.

Revealing the appeal of C language: Uncovering the potential of programmers

Feb 24, 2024 pm 11:21 PM

Revealing the appeal of C language: Uncovering the potential of programmers

Feb 24, 2024 pm 11:21 PM

The Charm of Learning C Language: Unlocking the Potential of Programmers With the continuous development of technology, computer programming has become a field that has attracted much attention. Among many programming languages, C language has always been loved by programmers. Its simplicity, efficiency and wide application make learning C language the first step for many people to enter the field of programming. This article will discuss the charm of learning C language and how to unlock the potential of programmers by learning C language. First of all, the charm of learning C language lies in its simplicity. Compared with other programming languages, C language

Let's learn how to input the root number in Word together

Mar 19, 2024 pm 08:52 PM

Let's learn how to input the root number in Word together

Mar 19, 2024 pm 08:52 PM

When editing text content in Word, you sometimes need to enter formula symbols. Some guys don’t know how to input the root number in Word, so Xiaomian asked me to share with my friends a tutorial on how to input the root number in Word. Hope it helps my friends. First, open the Word software on your computer, then open the file you want to edit, and move the cursor to the location where you need to insert the root sign, refer to the picture example below. 2. Select [Insert], and then select [Formula] in the symbol. As shown in the red circle in the picture below: 3. Then select [Insert New Formula] below. As shown in the red circle in the picture below: 4. Select [Radical Formula], and then select the appropriate root sign. As shown in the red circle in the picture below: