Table of contents:

4.1 Components of the file system

4.2 The complete structure of the file system

4.3 Data Block

4.4 Basic knowledge of inode

4.5 In-depth inode

4.6 Principles of file operations in a single file system

4.7 Multiple file system associations

4.8 Journaling in the ext3 file system

4.9 ext4 file system

4.10 Disadvantages of ext-family file systems

4.11 Virtual file system VFS

Partitioning a disk physically divides it into ranges of cylinders. After a partition is created, it must be formatted and then mounted before it can be used (other methods are not considered here). Formatting a partition is, in fact, the process of creating a file system on it.

4.1 Components of the file system

Each file also has attributes (such as permissions, size, and timestamps). Where is this attribute metadata stored? Is it stored in blocks together with the file's data? If a file occupies multiple blocks, does every block of the file need its own copy of the metadata? Yet if the file system does not store metadata in each block, how can it know which blocks belong to the file? Clearly, storing a copy of the metadata in every data block would waste space.

File system designers know that this storage method is not ideal, so it has to be optimized. How? The usual solution to this kind of problem is an index: the index is scanned to find the corresponding data, and the index itself can hold part of the data.

In a file system, this indexing technique takes the form of the index node. An index node stores the file's attribute metadata and a small amount of other information. The space an index occupies is generally much smaller than the file data it indexes, so scanning it is much faster than scanning the data itself; otherwise the index would be pointless. This solves all of the problems raised above.

In file system terminology, the index node is called an inode. Metadata such as the inode number, file type, permissions, owner, size, and timestamps is stored in the inode. Most importantly, the inode also stores pointers to the blocks that belong to the file, so that by reading the inode the file system can find the file's blocks, read them, and obtain the file's data. Because another kind of pointer will be introduced later, the pointers in an inode record that point to the file's data blocks are, for ease of distinction, called block pointers here.

An inode is generally 128 or 256 bytes, much smaller than file data measured in MB or GB. Note, however, that a file can also be smaller than its inode, for example a file of only 1 byte.

When storing data on the disk, the file system needs to know which blocks are free and which are in use. The most naive method is to scan from front to back, store part of the data in each free block it encounters, and keep scanning until all the data is stored.

An index could be used here as well, but a file system of just 1GB already contains 1024*1024 = 1048576 blocks of 1KB each. What about 100GB, 500GB, or more? The number of index entries and the space they occupy would be enormous. A better optimization is therefore used: the block bitmap (abbreviated bmap).

A bitmap uses only 0 and 1 to mark whether the corresponding block is free or in use. The position of each bit corresponds to the position of a block: the first bit marks the first block, the second bit marks the second block, and so on until every block is covered.

Why is a block bitmap more efficient? Each byte of the bitmap has 8 bits and can therefore mark 8 blocks. A 1GB file system with 1KB blocks has 1024*1024 blocks, so its bitmap needs 1024*1024 bits, or 1024*1024/8 = 131072 bytes = 128KB. In other words, 128 blocks of bitmap are enough to map every block of the 1GB file system one-to-one. Scanning these 128 blocks reveals which blocks are free, which is far faster.
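The bitmap idea can be sketched in a few lines of Python. This is a minimal in-memory model, assuming 1KB blocks and a 1GB file system as in the example above; the class `BlockBitmap` and its methods are hypothetical names for illustration, whereas a real ext bmap lives on disk, one per block group.

```python
class BlockBitmap:
    """Hypothetical in-memory block bitmap: bit n is 1 if block n is in use."""

    def __init__(self, nblocks: int) -> None:
        self.nblocks = nblocks
        self.bits = bytearray((nblocks + 7) // 8)   # 8 blocks per byte, rounded up

    def is_used(self, n: int) -> bool:
        return bool(self.bits[n // 8] & (1 << (n % 8)))

    def set_used(self, n: int) -> None:
        self.bits[n // 8] |= 1 << (n % 8)

    def set_free(self, n: int) -> None:
        self.bits[n // 8] &= ~(1 << (n % 8)) & 0xFF

    def find_free(self) -> int:
        """Return the first free block number, or -1 if every block is in use."""
        for i, byte in enumerate(self.bits):
            if byte == 0xFF:            # all 8 blocks covered by this byte are used: skip
                continue
            for bit in range(8):
                n = i * 8 + bit
                if n < self.nblocks and not byte & (1 << bit):
                    return n
        return -1


BLOCK_SIZE = 1024                    # 1KB blocks
nblocks = 1024 * 1024                # a 1GB file system
bmap = BlockBitmap(nblocks)
print(len(bmap.bits))                # 131072 bytes = 128KB
print(len(bmap.bits) // BLOCK_SIZE)  # 128 blocks hold the whole bitmap

bmap.set_used(0)
bmap.set_used(1)
print(bmap.find_free())              # 2
```

Skipping whole bytes equal to 0xFF is what makes the scan cheap: eight fully used blocks are rejected with a single comparison.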

Note, however, that the bmap optimizes writes, because only writes need to find and allocate free blocks. For reads, once a block's location has been found through the inode, the CPU can quickly compute the block's address on the physical disk. This computation is so fast that its cost is negligible, so read speed is essentially determined by the performance of the disk itself rather than by the file system.

Although the bmap greatly speeds up scanning, it still has a bottleneck. What if the file system is 100GB? Its bmap needs 128*100 = 12800 blocks of 1KB, or 12.5MB. Fully scanning 12800 blocks, which are likely not contiguous, takes time; each scan may be fast, but the overhead of repeating it every time a file is stored is too high.

So it needs to be optimized again. How to optimize? In short, the file system is divided into block groups. The introduction of block groups will be given later.

To recap: the inode stores the inode number, the file's attribute metadata, and pointers to the blocks the file occupies; each inode occupies 128 or 256 bytes.

Now another problem arises. A file system can hold countless files, each with its own inode. Should each 128-byte inode occupy a whole block by itself? That would waste a great deal of space.

A better approach is to pack multiple inodes into one block. With 1KB blocks, one block holds 8 inodes of 128 bytes or 4 inodes of 256 bytes, so no block that stores inodes is wasted.

On the ext file system, these blocks that physically store inodes are combined to logically form an inode table to record all inodes.

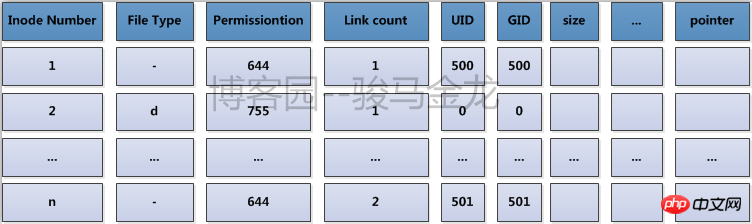

For example, every family must register household registration information with the police station. The household address can be known through the household registration. The police station in each town or street integrates all household registrations in the town or street. When you want to find the address of a certain household, you can quickly find it at the police station. The inode table is the police station here. Its contents are shown below.

In fact, all inode numbers are allocated and recorded in the inode table when the file system is created. The row of a used inode number additionally holds the file's attribute metadata and block location information, while an unused inode number has only the number itself and no other information.

On closer inspection, a large file system still uses a large number of blocks to store inodes, and finding one inode record among them is expensive. Even though these blocks form a logical table, a table that large with that many records is slow to search, so locating inodes quickly also needs optimizing. The optimization is to divide the file system's blocks into groups, each containing its own range of the inode table, its own bmap, and so on.

As mentioned earlier, bmap is a block bitmap, which is used to identify which blocks in the file system are free and which blocks are occupied.

The same is true for inodes. When storing files (everything in Linux is a file), you need to assign an inode number to it. However, after formatting and creating the file system, all inode numbers are preset and stored in the inode table, so a question arises: Which inode number should be assigned to the file? How to know whether a certain inode number has been allocated?

Since this is a question of "occupied or not", a bitmap is the best solution, just as the bmap records block occupancy. The bitmap that marks whether each inode number has been allocated is called the inode bitmap, or imap for short. To allocate an inode number to a file, the file system simply scans the imap to find a free inode number.

imap has the same problems that need to be solved like bmap and inode table: if the file system is relatively large, imap itself will be very large, and every time a file is stored, it must be scanned, which will lead to insufficient efficiency. Similarly, the optimization method is to divide the blocks occupied by the file system into block groups, and each block group has its own imap range.

The optimization mentioned above, dividing the blocks of the file system into block groups, solves the problem of the bmap, inode table, and imap becoming too large.

At the physical level, a disk is divided by cylinders into multiple partitions, that is, multiple file systems; at the logical level, a file system is divided into block groups. Each file system contains multiple block groups, and each block group contains a metadata area and a data area: the metadata area stores the bmap, inode table, imap, and so on, and the data area stores file data. Note that block groups are a logical concept, so they do not correspond to any actual division of cylinders, sectors, or tracks on the disk.

Block groups are laid out when the file system is created; that is, the blocks used by the metadata area (bmap, inode table, imap, and so on) and the blocks used by the data area have already been assigned. So how does the file system decide how many blocks a block group's metadata area contains and how many its data area contains?

It only needs one value, the block size, and then divides the file system into block groups on the rule that a bmap may occupy at most one full block. If the file system is so small that its entire bitmap does not fill one block, the unused remainder of that bmap block is simply left empty.

The size of each block can be specified manually when creating the file system, and there is a default value if not specified.

If the block size is 1KB, a bmap that fully occupies one block can mark 1024*8 = 8192 blocks (these 8192 blocks include both the data area and the metadata area, because blocks allocated to the metadata area must also be tracked by the bmap). With 1KB blocks, each block group is therefore 8192KB, or 8MB. A 1GB file system needs 1024/8 = 128 block groups. What about a 1.1GB file system? 1.1*1024/8 = 140.8, which rounds up to 141 block groups.
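The arithmetic above can be captured in a small function. This is a sketch under the simplified rule stated in the text (one bmap block covers exactly one block group); `block_group_layout` is a hypothetical helper, and a real mke2fs may lay out the last, partial group differently.

```python
def block_group_layout(fs_bytes: int, block_size: int) -> tuple[int, int, int]:
    """Return (blocks_per_group, bytes_per_group, group_count).

    Assumes, as described above, that one bmap block (block_size * 8 bits)
    covers exactly one block group, metadata blocks included.
    """
    blocks_per_group = block_size * 8
    bytes_per_group = blocks_per_group * block_size
    group_count = -(-fs_bytes // bytes_per_group)    # ceiling division
    return blocks_per_group, bytes_per_group, group_count


KB, GB = 1024, 1024 ** 3
print(block_group_layout(1 * GB, 1 * KB))            # (8192, 8388608, 128)
print(block_group_layout(int(1.1 * GB), 1 * KB))     # (8192, 8388608, 141)
print(block_group_layout(1 * GB, 4 * KB))            # (32768, 134217728, 8)
```

With 4KB blocks the same rule gives 32768 blocks per group, which is the "Blocks per group" value that dumpe2fs reports in the example below.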

The number of blocks in each group is now fixed, but how many inode numbers does each group get, and how many blocks does its inode table occupy? This has to be decided by the system, because by default we do not know the ratio describing how much of the data area is assigned one inode number. Of course, this ratio can also be specified manually when creating a file system; see "In-depth inode" below.

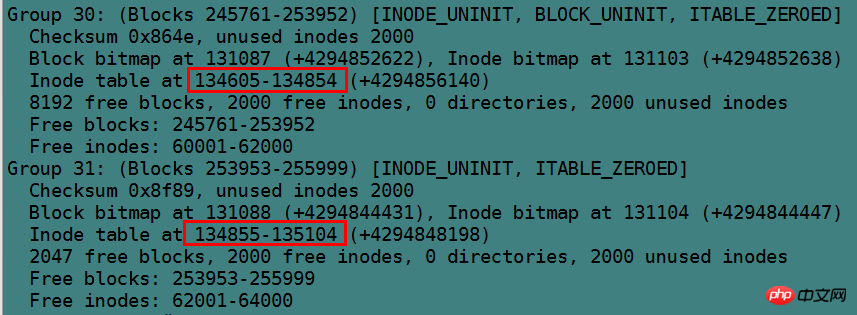

dumpe2fs displays the file system information of ext-family file systems. The size of the bmap is not shown, because it is fixed at one block per block group; the imap is smaller than the bmap, so it also occupies just one block and is not shown either.

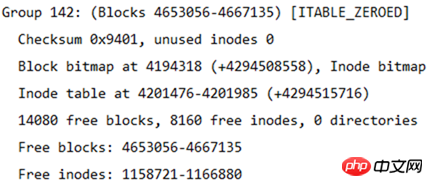

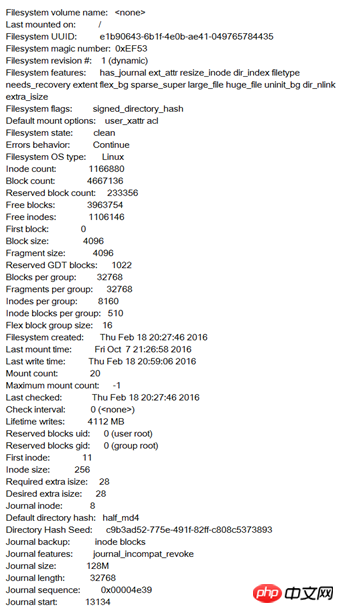

The following figure shows part of the information of a file system. Behind this information is the information of each block group.

The file system's size can be calculated from this table. The file system has 4667136 blocks in total and each block is 4KB, so its size is 4667136*4/1024/1024 ≈ 17.8GB.

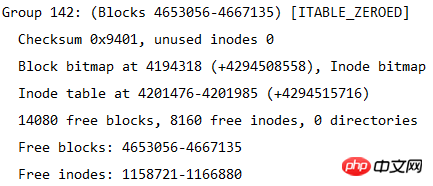

You can also calculate how many block groups there are. Each block group has 32768 blocks, so the number of block groups is 4667136/32768 ≈ 142.4, which rounds up to 143. Since block groups are numbered from 0, the last one is Group 142. The figure below shows the information of this last block group.

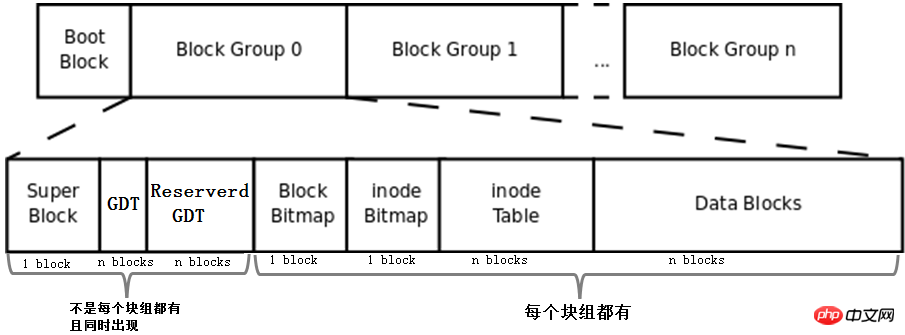
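The two calculations above can be scripted from the header that `dumpe2fs -h` prints, which includes lines labelled "Block count:", "Block size:" and "Blocks per group:". The sketch below parses a hand-written sample reusing the numbers from the figure; `parse_fields` is a hypothetical helper, and on a real system the text would come from running dumpe2fs -h (as root) against the device.

```python
SAMPLE = """\
Block count:              4667136
Block size:               4096
Blocks per group:         32768
"""


def parse_fields(text: str) -> dict[str, int]:
    """Collect 'Name:   <integer>' lines into a dict; other lines are ignored."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            fields[key.strip()] = int(value.strip())
    return fields


f = parse_fields(SAMPLE)
size_gib = f["Block count"] * f["Block size"] / 1024 ** 3
groups = -(-f["Block count"] // f["Blocks per group"])   # ceiling division
print(f"{size_gib:.1f} GiB, {groups} groups, last group = Group {groups - 1}")
# 17.8 GiB, 143 groups, last group = Group 142
```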

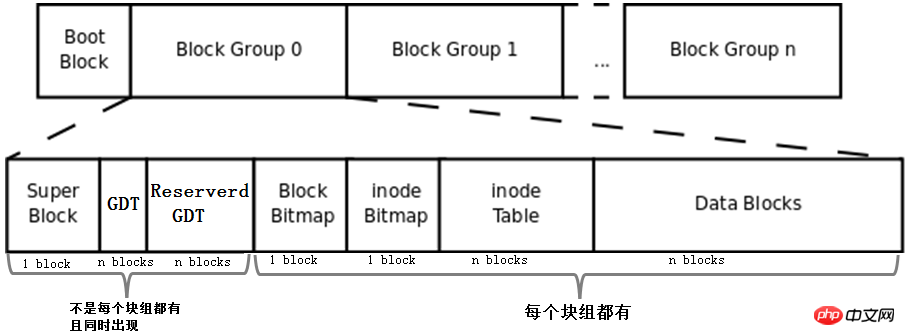

Combining the bmap, inode table, imap, and data area described above with the concepts of blocks and block groups gives a file system. This is still not the complete picture, though; the complete file system is shown below.

First of all, there are several concepts such as Boot Block, Super Block, GDT, and Reserve GDT in this picture. They will be introduced separately below.

Then, the figure indicates the number of blocks occupied by each part in the block group. Except for superblock, bmap, and imap, which can determine how many blocks they occupy, the other parts cannot determine how many blocks they occupy.

Finally, the figure indicates that the superblock, GDT, and reserved GDT always appear together and are not necessarily present in every block group, whereas the bmap, imap, inode table, and data blocks are present in every block group.

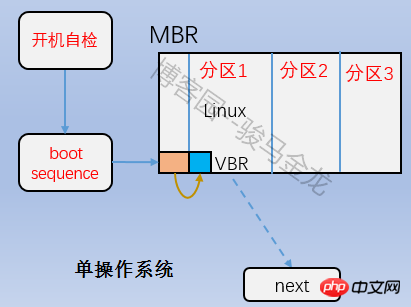

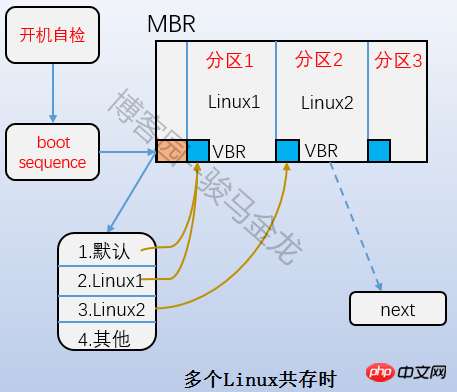

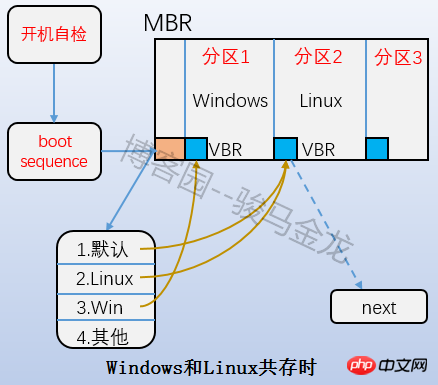

The Boot Block in the figure above, also called the boot sector, occupies the first 1024 bytes of the partition. Not every partition has this boot sector. It stores a boot loader, known as the VBR (volume boot record). This boot loader is chained with the boot loader in the MBR: at boot time, the boot loader in the MBR is loaded first, and it then locates the boot sector of the partition containing the operating system and loads the boot loader there. If there are multiple operating systems, the boot loader in the MBR lists them in a menu, and each menu entry points to the boot sector of the partition where that system resides. The relationship between them is shown in the figure below.

If the block size is 1KB (1024 bytes), the boot block occupies exactly one block, block 0, so the superblock is block 1. If the block size is larger than 1KB, the boot block and the superblock share one block, block 0. Either way, the superblock always occupies the second 1024 bytes of the partition (bytes 1024-2047).
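Because the superblock always starts at byte 1024, it can be decoded straight from the beginning of a partition. The sketch below reads a few fields using the on-disk ext2/3/4 superblock layout (little-endian 32-bit counters at the start, s_blocks_per_group at offset 0x20, the 16-bit magic 0xEF53 at offset 0x38). `parse_superblock` is a hypothetical helper, and the image is synthetic with made-up values so the example runs without root; the same function could be fed the first 2048 bytes of a real partition such as /dev/sda1.

```python
import struct

SB_OFFSET = 1024     # the primary superblock always starts at byte 1024
EXT_MAGIC = 0xEF53   # s_magic of ext2/ext3/ext4


def parse_superblock(image: bytes) -> dict:
    """Decode a few fields of an ext superblock from the start of a raw image."""
    sb = image[SB_OFFSET:SB_OFFSET + 1024]
    # s_inodes_count, s_blocks_count, s_r_blocks_count, s_free_blocks_count,
    # s_free_inodes_count, s_first_data_block, s_log_block_size (little-endian u32)
    (inodes, blocks, _, _, _, first_data_block, log_block_size) = struct.unpack_from("<7I", sb, 0)
    (blocks_per_group,) = struct.unpack_from("<I", sb, 0x20)
    (magic,) = struct.unpack_from("<H", sb, 0x38)
    if magic != EXT_MAGIC:
        raise ValueError("no ext superblock at byte 1024")
    return {
        "inodes_count": inodes,
        "blocks_count": blocks,                   # low 32 bits only
        "block_size": 1024 << log_block_size,
        "first_data_block": first_data_block,
        "blocks_per_group": blocks_per_group,
    }


# Synthetic header of a file system with 1KB blocks (illustrative values).
img = bytearray(2048)
struct.pack_into("<7I", img, SB_OFFSET, 2048, 8192, 0, 0, 0, 1, 0)
struct.pack_into("<I", img, SB_OFFSET + 0x20, 8192)
struct.pack_into("<H", img, SB_OFFSET + 0x38, EXT_MAGIC)

info = parse_superblock(bytes(img))
print(info["block_size"], info["first_data_block"])

# The superblock sits in block SB_OFFSET // block_size: block 1 for 1KB blocks,
# block 0 for anything larger; ext records this as s_first_data_block.
for bs in (1024, 2048, 4096):
    print(bs, SB_OFFSET // bs)
```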

The df command reads the superblock of each file system, which is why its statistics are so fast. By contrast, using du to check the space used by a large directory is slow, because it has to traverse every file in the directory.

```
[root@xuexi ~]# df -hT
Filesystem     Type   Size  Used Avail Use% Mounted on
/dev/sda3      ext4    18G  1.7G   15G  11% /
tmpfs          tmpfs  491M     0  491M   0% /dev/shm
/dev/sda1      ext4   190M   32M  149M  18% /boot
```

The superblock is crucial to the file system: losing or damaging it will certainly damage the file system. Older file systems therefore backed up the superblock in every block group, but that wastes space, so ext2 keeps superblock copies only in block groups 0 and 1 and in groups whose numbers are powers of 3, 5, or 7, such as Group 9 and Group 25. Although so many superblock copies are kept, the file system reads its attributes only from the superblock in the first block group, Group 0. Only when the superblock in Group 0 is damaged or lost is the next backup superblock located and copied to Group 0 to restore the file system.
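The placement rule for backup superblocks (the sparse_super feature) is easy to express in code. This sketch lists which of the 143 block groups from the earlier example carry a superblock copy, and, assuming 4KB blocks with 32768 blocks per group (so group g starts at block g*32768), the block numbers where the backups start; `has_superblock_backup` is a hypothetical name.

```python
def has_superblock_backup(group: int) -> bool:
    """sparse_super rule: groups 0 and 1, plus powers of 3, 5 and 7."""
    if group in (0, 1):
        return True
    for base in (3, 5, 7):
        n = base
        while n < group:
            n *= base
        if n == group:
            return True
    return False


groups = 143
backups = [g for g in range(groups) if has_superblock_backup(g)]
print(backups)                 # [0, 1, 3, 5, 7, 9, 25, 27, 49, 81, 125]

# Starting block of each backup copy (group 0 holds the primary superblock).
print([g * 32768 for g in backups[1:]])
```

The second list matches the "Superblock backups stored on blocks" line that mke2fs typically prints for a file system of this geometry.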

The figure below shows the superblock information of an ext4 file system. For any ext-family file system, this information can be displayed with dumpe2fs -h.

Since the file system is divided into block groups, where are each block group's information and attribute metadata stored?

Each block group information in the ext file system is described using 32 bytes. These 32 bytes are called block group descriptors. The block group descriptors of all block groups form the block group descriptor table GDT (group descriptor table).

Although every block group needs a block group descriptor to record its information and attribute metadata, not every block group stores descriptors. ext file systems combine all descriptors into the GDT and store the GDT only in certain block groups. The block groups that store the GDT are the same ones that store the superblock and its backups; in other words, the GDT and the superblock always appear together in the same block group.

If a file system with 4KB blocks is divided into 143 block groups and each block group descriptor is 32 bytes, the GDT needs 143*32 = 4576 bytes, that is, two blocks. These two GDT blocks record the information of all block groups, and every block group that stores a GDT holds an identical copy.
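The GDT size follows from a single ceiling division. A minimal sketch with a hypothetical helper `gdt_blocks`; the default of 32 bytes per descriptor is the value given in the text, and the 64-byte case is included only as an illustration of ext4 file systems created with the 64bit feature.

```python
def gdt_blocks(group_count: int, block_size: int, desc_size: int = 32) -> int:
    """Blocks needed to hold one copy of the group descriptor table."""
    gdt_bytes = group_count * desc_size
    return -(-gdt_bytes // block_size)        # ceiling division


print(143 * 32, gdt_blocks(143, 4096))        # 4576 bytes -> 2 blocks
print(gdt_blocks(143, 4096, desc_size=64))    # larger descriptors need 3 blocks
```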

The following figure shows the information of a block group descriptor (obtained through dumpe2fs).

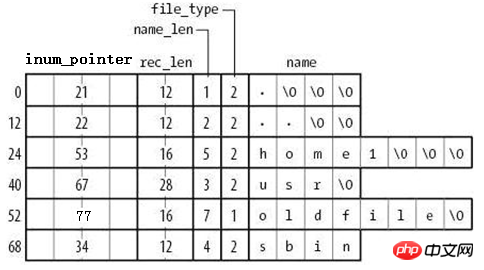

The file name is not stored in the file's own inode, but in the data block of the directory that contains it.

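As the figure shows, the data block of a directory file stores the names of the files and subdirectories under it, the directory's own relative name "." and its parent's relative name "..". It also stores pointers to the inode numbers of these names in the inode table (rather than storing the inode numbers directly), the entry length rec_len, the file name length name_len, and the file type file_type. Note that besides the file's own inode, the data block of its directory also records the file type. Because rec_len must be a multiple of 4, the part of the name that falls short of a multiple of 4 is padded with "\0". As for what rec_len is exactly, it is enough to know that it is a kind of offset.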
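The entry layout can be reproduced with the struct module. In the on-disk ext2/3/4 format (struct ext2_dir_entry_2), each entry starts with a 4-byte inode field, which plays the role of what this article calls the inode pointer, followed by rec_len (2 bytes), name_len (1 byte), file_type (1 byte) and the name padded with "\0". The sketch builds the entries of a hypothetical root directory; the inode numbers 12 and 13 and the helper names are made up, and in a real block the last entry's rec_len is stretched to the end of the block.

```python
import struct

# file_type codes used in ext2/3/4 directory entries
FT_REG_FILE, FT_DIR, FT_SYMLINK = 1, 2, 7


def rec_len_for(name_len: int) -> int:
    """8-byte header plus the name, rounded up to a multiple of 4."""
    return (8 + name_len + 3) & ~3


def pack_dirent(inode: int, name: str, file_type: int) -> bytes:
    raw = name.encode()
    rec_len = rec_len_for(len(raw))
    header = struct.pack("<IHBB", inode, rec_len, len(raw), file_type)
    return header + raw.ljust(rec_len - 8, b"\0")   # pad the name with "\0"


def unpack_dirents(block: bytes):
    """Walk the entries by jumping rec_len bytes at a time."""
    off = 0
    while off < len(block):
        inode, rec_len, name_len, ftype = struct.unpack_from("<IHBB", block, off)
        name = block[off + 8: off + 8 + name_len].decode()
        yield inode, rec_len, name_len, ftype, name
        off += rec_len


block = (pack_dirent(2, ".", FT_DIR) + pack_dirent(2, "..", FT_DIR)
         + pack_dirent(12, "hehe", FT_REG_FILE) + pack_dirent(13, "a.txt", FT_REG_FILE))
for entry in unpack_dirents(block):
    print(entry)
```

Walking the block by rec_len is why the text describes rec_len as a kind of offset: it is the distance from one entry to the next.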

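A directory's data block does not directly store the inode numbers of the files in it; it stores pointers to the corresponding inode numbers in the inode table, which we will call inode pointers for now. (So far, two kinds of pointers have appeared: the block pointers in each inode record of the inode table, which point to the file's data blocks, and the inode pointers here.) A convincing example: when a directory has read but not execute permission, "ls -l" cannot obtain the inode numbers of the files in it, which indicates that inode numbers are not stored directly. In fact, all inode numbers are laid out when the file system is created and stored in each block group's inode table, and the inode table has a specific location within the block group. If you inspect the file system with dumpe2fs, you will find that every block group's inode table occupies exactly the same number of blocks. The figure below shows two block groups on a partition; each of their inode tables occupies 249 blocks.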

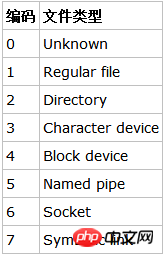

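Besides inode pointers, a directory's data block also records the file type as a number. The mapping between these numbers and file types is shown in the figure below.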

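Note that the first two entries in a directory's data block are the directory's own relative name "." and its parent's relative name "..". They are actually a hard link to the directory itself and a hard link to the parent directory. The nature of hard links is explained later.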

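This also makes the special nature of directory permissions easy to understand. The read (r) and write (w) permissions of a directory apply to the directory file's data blocks themselves. Since a directory file contains only file names, file types, and inode pointers, read permission alone yields only the file names and types and nothing else. Although the directory's data block also records each file's inode pointer, following that pointer requires x permission: all other information is stored in the file's own inode, and reading it requires execute permission on the directory so that the inode pointer can be followed to the file's inode record. The following shows a query without x permission on the directory; apart from the file names and types, everything is shown as "?".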

```
[lisi4@xuexi tmp]$ ll -i d
ls: cannot access d/hehe: Permission denied
ls: cannot access d/haha: Permission denied
total 0
? d????????? ? ? ? ? ? haha
? -????????? ? ? ? ? ? hehe
```

Note that xfs behaves differently from ext here: on xfs, not even the file type can be obtained.

A symbolic link, or soft link, is similar to a shortcut in Windows: it points to an original file or directory.

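A soft link is also considered a special file for these reasons: it usually occupies no data block, because its inode record alone is enough to describe it; its size is the number of characters in the target path it points to, so a symbolic link shown as "rmt -> ../sbin/rmt" is 11 bytes; only when the target path name is long (60 bytes or more) does the file system allocate a data block for it; and its permissions do not matter, because it is merely a "tool" pointing to the original file, and whether reading, writing, or execution is allowed is determined by the original file's permissions, which is why ls -l usually shows a symbolic link's permissions as 777.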
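The size rule can be checked from Python: os.lstat describes the link itself rather than its target, and its st_size equals the length of the stored target string. This sketch creates throwaway links in a temporary directory (the helper name `symlink_info` is hypothetical); the targets need not exist, and they reuse paths from the /etc listing below.

```python
import os
import tempfile


def symlink_info(target: str) -> tuple[int, str]:
    """Create a throwaway symlink to `target`; return (st_size, readlink value)."""
    with tempfile.TemporaryDirectory() as d:
        link = os.path.join(d, "link")
        os.symlink(target, link)      # the target does not have to exist
        st = os.lstat(link)           # lstat: describe the link, not its target
        return st.st_size, os.readlink(link)


for target in ("../sbin/rmt", "rc.d/init.d",
               "/usr/share/icons/hicolor/16x16/apps/system-logo-icon.png"):
    print(symlink_info(target))
```

The sizes printed (11, 11 and 56) should line up with the size column of the corresponding entries in the /etc listing.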

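Note that a soft link's block pointers store the target file name. In other words, everything about a link file depends on its target name. This explains why, after a file system is mounted on /mnt, the soft link /tmp/mnt pointing to /mnt leads into the file system mounted on /mnt: the target name "/mnt" has not changed.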

For example, the following lists the symbolic links under /etc/. Note their permissions and sizes.

```
[root@xuexi ~]# ll /etc/ | grep '^l'
lrwxrwxrwx. 1 root root 56 Feb 18 2016 favicon.png -> /usr/share/icons/hicolor/16x16/apps/system-logo-icon.png
lrwxrwxrwx. 1 root root 22 Feb 18 2016 grub.conf -> ../boot/grub/grub.conf
lrwxrwxrwx. 1 root root 11 Feb 18 2016 init.d -> rc.d/init.d
lrwxrwxrwx. 1 root root 7 Feb 18 2016 rc -> rc.d/rc
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc0.d -> rc.d/rc0.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc1.d -> rc.d/rc1.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc2.d -> rc.d/rc2.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc3.d -> rc.d/rc3.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc4.d -> rc.d/rc4.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc5.d -> rc.d/rc5.d
lrwxrwxrwx. 1 root root 10 Feb 18 2016 rc6.d -> rc.d/rc6.d
lrwxrwxrwx. 1 root root 13 Feb 18 2016 rc.local -> rc.d/rc.local
lrwxrwxrwx. 1 root root 15 Feb 18 2016 rc.sysinit -> rc.d/rc.sysinit
lrwxrwxrwx. 1 root root 14 Feb 18 2016 redhat-release -> centos-release
lrwxrwxrwx. 1 root root 11 Apr 10 2016 rmt -> ../sbin/rmt
lrwxrwxrwx. 1 root root 14 Feb 18 2016 system-release -> centos-release
```

Files of these three types can have all of their information stored in the inode alone and occupy no data blocks, which is why they are special files.

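The major and minor device numbers of a device file are also stored in its inode. The following shows some devices under /dev/. Look at the 5th and 6th columns: they are the major and minor device numbers. The major number identifies the type of device, and the minor number distinguishes individual devices of the same type. Note also that there is no size in this output, because device files occupy no data blocks and therefore have no notion of size.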

```
[root@xuexi ~]# ll /dev | tail
crw-rw---- 1 vcsa tty 7, 129 Oct 7 21:26 vcsa1
crw-rw---- 1 vcsa tty 7, 130 Oct 7 21:27 vcsa2
crw-rw---- 1 vcsa tty 7, 131 Oct 7 21:27 vcsa3
crw-rw---- 1 vcsa tty 7, 132 Oct 7 21:27 vcsa4
crw-rw---- 1 vcsa tty 7, 133 Oct 7 21:27 vcsa5
crw-rw---- 1 vcsa tty 7, 134 Oct 7 21:27 vcsa6
crw-rw---- 1 root root 10, 63 Oct 7 21:26 vga_arbiter
crw------- 1 root root 10, 57 Oct 7 21:26 vmci
crw-rw-rw- 1 root root 10, 56 Oct 7 21:27 vsock
crw-rw-rw- 1 root root 1, 5 Oct 7 21:26 zero
```
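The major and minor numbers that ls shows can be read programmatically: stat() returns them packed into st_rdev, and os.major/os.minor split them apart. A minimal sketch, assuming a Linux system with the usual /dev/zero (which the listing above shows as 1, 5); `device_numbers` is a hypothetical helper.

```python
import os
import stat


def device_numbers(path: str) -> tuple[str, int, int]:
    """Return (kind, major, minor) of a device file, as recorded in its inode."""
    st = os.stat(path)
    if stat.S_ISCHR(st.st_mode):
        kind = "c"
    elif stat.S_ISBLK(st.st_mode):
        kind = "b"
    else:
        raise ValueError(f"{path} is not a device file")
    # st_rdev packs both numbers; os.major/os.minor split them apart
    return kind, os.major(st.st_rdev), os.minor(st.st_rdev)


print(device_numbers("/dev/zero"))
print(os.stat("/dev/zero").st_size)   # device files have no data blocks; size is 0
```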

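Every file has an inode. Once an inode is associated with a file, the system identifies the file by its inode number, not by its name. When a file is accessed, its inode is found first, and the file's data is then located through the block locations recorded in the inode.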

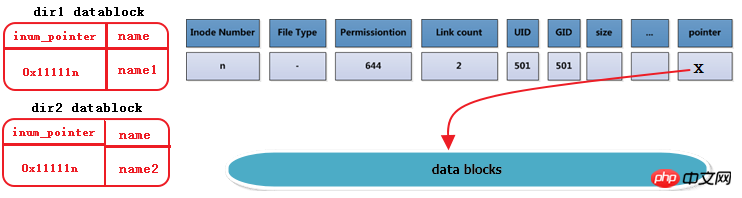

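Although every file has an inode, it is possible for multiple files to have the same inode, that is, the same inode number, metadata, and block locations. What does that mean? Files with the same inode all use the same inode record, so they all represent the same file: the inode pointers in their directories' data blocks all point to the same place, and only the file names attached to those pointers differ. Files that share an inode in this way are called "hard links" in Linux.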

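Hard-linked files share the same inode. Every file has a "hard link count" attribute, shown in the second column of ls -l, which indicates how many hard links the file has.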

```
[root@xuexi ~]# ls -l
total 48
drwxr-xr-x 5 root root 4096 Oct 15 18:07 700
-rw-------. 1 root root 1082 Feb 18 2016 anaconda-ks.cfg
-rw-r--r-- 1 root root 399 Apr 29 2016 Identity.pub
-rw-r--r--. 1 root root 21783 Feb 18 2016 install.log
-rw-r--r--. 1 root root 6240 Feb 18 2016 install.log.syslog
```

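For example, the figure below shows the file name1 in directory dir1 and its hard link dir2/name2, with their inode and data block on the right. It also shows that the only difference between hard-linked files is their entries in their respective directories. Note the Link Count column in the figure, which is the hard link count attribute.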

Creating a hard link to a file essentially adds one more inode pointer to its inode record and increases the hard link count by 1.

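Deleting a file essentially removes the corresponding inode pointer from the data block of its directory, which decreases the hard link count. Since the block pointers are stored in the inode, the data is not actually deleted: if other pointers still point to the inode, the file's block pointers remain usable. Only when the hard link count is 1 does deleting the file really delete it, and at that point the block pointers in the inode record are removed as well.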
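The behaviour described above can be observed with os.link and os.unlink, the system-call counterparts of ln and rm. This sketch works in a temporary directory with hypothetical file names mirroring name1 and name2 from the figure: both names report the same inode number, and removing one name only lowers the link count while the data stays reachable through the other.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as d:
    name1 = os.path.join(d, "name1")
    name2 = os.path.join(d, "name2")
    with open(name1, "w") as f:
        f.write("hello\n")
    os.link(name1, name2)                     # like: ln name1 name2
    same_inode = os.stat(name1).st_ino == os.stat(name2).st_ino
    links_before = os.stat(name1).st_nlink    # two directory entries, one inode
    os.unlink(name1)                          # like: rm name1 (removes one entry)
    links_after = os.stat(name2).st_nlink
    with open(name2) as f:
        data = f.read()                       # the data is still reachable

print(same_inode, links_before, links_after, repr(data))
```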

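Hard links cannot be created across partitions, because different file systems may use the same inode numbers; if cross-partition hard links were allowed, an inode number carried over to another partition could conflict with an inode number already in use there.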

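Hard links can only be created for files, not for directories. The reason is that the file system has already created every directory's hard links: they are "." and ".." in relative paths, the hard link of the current directory and the hard link of the parent directory. Every directory contains these two hard links, which implies two things: (1) a directory with no subdirectories has a hard link count of 2, one being the directory itself and the other "."; (2) a directory with subdirectories has a hard link count of 2 plus the number of subdirectories, because each subdirectory's ".." is a hard link to its parent. Many people reason that an empty directory has a link count of 2 because it contains "." and "..", which is wrong, because ".." is not a hard link to the directory itself. There is also a special directory to consider: "/". It is the entry point of a file system and is self-referencing (explained later), so the "." and ".." in "/" have the same inode number, and its hard link count minus its subdirectories would therefore be expected to be 3, yet it is actually 2. (A likely explanation: "/" has no entry in any parent directory, so its own name contributes no link, and its two links are "." and "..".)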

```
[root@xuexi ~]# ln /tmp /mydata
ln: `/tmp': hard link not allowed for directory
```
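The "2 + number of subdirectories" rule can be compared with what the kernel reports. This is a sketch with a hypothetical helper `expected_dir_nlink` that applies the rule to a small tree built in a temporary directory; ext4 and tmpfs follow the rule, but some file systems (btrfs, for example) report a link count of 1 for every directory, so `actual` may differ there.

```python
import os
import tempfile


def expected_dir_nlink(path: str) -> int:
    """Link count predicted by the rule above: 2 + number of subdirectories."""
    with os.scandir(path) as it:
        subdirs = sum(1 for e in it if e.is_dir(follow_symlinks=False))
    return 2 + subdirs


with tempfile.TemporaryDirectory() as d:
    top = os.path.join(d, "top")
    os.makedirs(os.path.join(top, "a"))
    os.makedirs(os.path.join(top, "b"))
    open(os.path.join(top, "file"), "w").close()   # regular files add no links
    predicted = expected_dir_nlink(top)
    actual = os.stat(top).st_nlink                  # what the file system reports

print(predicted, actual)
```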

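Why does the file system create directory hard links itself but forbid users from creating them? Consider how "." and ".." are used. If the current directory is /usr, we can write "./local" to mean /usr/local. But if we manually created a hard link /tmp/husr to /usr, would we also have to use "/tmp/husr/local" to mean /usr/local? That is really the job of a soft link. Treating it as a hard-link feature would make hard links chaotic to maintain.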

However, the "--bind" option of mount can mount one directory onto another, producing a pseudo "hard link": the two have exactly the same content and inode number.

To create a hard link: ln file_target link_name.

A soft link is a symbolic link; the term "link file" usually refers to a symbolic link, and its file type is shown as "l".

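Functionally, a soft link is equivalent to a Windows shortcut: it points to the original file, and if the original file is damaged or disappears, the soft link breaks. You can think of the pointer in a soft link's inode record as holding the target path string.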

To create one: ln -s file_target softlink_name

To view a soft link's value: readlink softlink_name

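When creating a soft link, the target need not be an absolute path, but an absolute path is recommended. Remember the size of a soft link? It is the number of characters in the path it points to; for example, a symbolic link shown as "rmt -> ../sbin/rmt" is 11 bytes. In other words, once a soft link is created, its target path does not change: it remains "../sbin/rmt". If you then move the soft link itself, its target still does not change and is still the 11-character "../sbin/rmt", but relative to the link's new location, ../sbin/rmt may not exist at all, in which case the soft link is broken.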
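The broken-link scenario is easy to reproduce. This sketch builds a small hypothetical tree in a temporary directory, creates etc/rmt -> ../sbin/rmt, then moves the link two levels deeper: readlink still returns the same relative string, but os.path.exists (which follows the link) now reports False because the relative path resolves somewhere else.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "sbin"))
    os.makedirs(os.path.join(root, "etc"))
    os.makedirs(os.path.join(root, "a", "b"))
    open(os.path.join(root, "sbin", "rmt"), "w").close()

    link = os.path.join(root, "etc", "rmt")
    os.symlink("../sbin/rmt", link)          # relative target
    ok_before = os.path.exists(link)         # root/etc/../sbin/rmt exists

    moved = os.path.join(root, "a", "b", "rmt")
    os.rename(link, moved)                   # move the link itself
    target_after = os.readlink(moved)        # unchanged: "../sbin/rmt"
    ok_after = os.path.exists(moved)         # root/a/sbin/rmt does not exist

print(ok_before, target_after, ok_after)
```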

The inode size is a power of two and at least 128 bytes. It has a default value, specified in /etc/mke2fs.conf, and different file systems may have different defaults.

```
[root@xuexi ~]# cat /etc/mke2fs.conf
[defaults]
    base_features = sparse_super,filetype,resize_inode,dir_index,ext_attr
    enable_periodic_fsck = 1
    blocksize = 4096
    inode_size = 256
    inode_ratio = 16384

[fs_types]
    ext3 = {
        features = has_journal
    }
    ext4 = {
        features = has_journal,extent,huge_file,flex_bg,uninit_bg,dir_nlink,extra_isize
        inode_size = 256
    }
```

This file also records the default blocksize and the inode allocation ratio inode_ratio. inode_ratio=16384 means one inode number is allocated for every 16384 bytes (16KB); since the default blocksize is 4KB, that is one inode number for every 4 blocks. These inode numbers are only pre-allocated and will not necessarily all be used, since each file takes just one inode number. But the allocated inodes themselves occupy blocks, and at 256 bytes each they are not small, so wasted inode numbers mean wasted space.

Knowing the inode allocation ratio, you can calculate how many inode numbers each block group gets, and therefore how many blocks its inode table occupies.

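If the file system mostly stores large files such as movies, many inode numbers are wasted, and so is the space the inodes occupy. But there is no way around it: the file system cannot know in advance what kind of data it will store, how large the files will be, or how many there will be.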

Of course, the inode size, inode allocation ratio, and block size can all be specified manually when the file system is created.
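The calculation mentioned above can be sketched as follows, using the defaults from mke2fs.conf (inode_ratio = 16384, inode_size = 256) and the 4KB, 32768-blocks-per-group geometry from earlier. `inode_table_layout` is a hypothetical helper and the result is an approximation: real mke2fs rounds the inode count so the inode table fills whole blocks, and the 249-block inode tables in the earlier figure come from different settings.

```python
def inode_table_layout(blocks_per_group: int, block_size: int,
                       inode_ratio: int = 16384, inode_size: int = 256) -> tuple[int, int]:
    """Approximate (inodes_per_group, inode_table_blocks) from mke2fs.conf-style values."""
    bytes_per_group = blocks_per_group * block_size
    inodes_per_group = bytes_per_group // inode_ratio   # one inode per inode_ratio bytes
    table_bytes = inodes_per_group * inode_size
    table_blocks = -(-table_bytes // block_size)        # ceiling division
    return inodes_per_group, table_blocks


print(inode_table_layout(32768, 4096))                      # (8192, 512)
print(inode_table_layout(32768, 4096, inode_ratio=65536))   # (2048, 128)
```

The second call shows why a larger ratio suits a file system of mostly large files: it reserves a quarter as many inodes and a quarter as many inode-table blocks. With mke2fs these values correspond to the -b (block size), -i (bytes per inode) and -I (inode size) options.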

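ext reserves some inodes for special purposes, as listed below. Some entries may not always be accurate; to see which file a given inode number corresponds to, use "find / -inum NUM".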

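Special inodes in ext4

| Inode number | Purpose |
|---|---|
| 0 | Does not exist (there is no inode 0) |
| 1 | Bad blocks list |
| 2 | Root directory |
| 3 | ACL index (user quota in ext4) |
| 4 | ACL data (group quota in ext4) |
| 5 | Boot loader |
| 6 | Undelete directory |
| 7 | Reserved group descriptors inode |
| 8 | Journal inode |
| 11 | First non-reserved inode, usually the lost+found directory |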

So in the dumpe2fs output of an ext4 file system, the "First inode" number may be 11 or 12.

Note also that the inode number of "/" is 2; this property is used when accessing files.

Note that each file system allocates its own inode numbers, so files in different file systems may have the same inode number. For example:

```
[root@xuexi ~]# find / -ignore_readdir_race -inum 2 -ls
2 4 dr-xr-xr-x 22 root root 4096 Jun 9 09:56 /
2 2 dr-xr-xr-x 5 root root 1024 Feb 25 11:53 /boot
2 0 c--------- 1 root root Jun 7 02:13 /dev/pts/ptmx
2 0 -rw-r--r-- 1 root root 0 Jun 6 18:13 /proc/sys/fs/binfmt_misc/status
2 0 drwxr-xr-x 3 root root 0 Jun 6 18:13 /sys/fs
```

It can be seen from the results that in addition to the root Inode number being 2, there are also several files with inode numbers also being 2. They all belong to independent file systems, and some are virtual file systems, such as /proc and /sys.

As mentioned before, block pointers are stored in the inode, but an inode record can hold only a limited number of them; otherwise the inode size (128 or 256 bytes) would be exceeded.

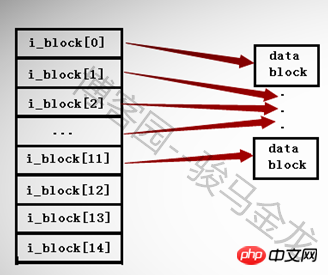

In ext2 and ext3 file systems, there can only be a maximum of 15 pointers in an inode, and each pointer is represented by i_block[n].

The first 12 pointers i_block[0] to i_block[11] are direct addressing pointers, each pointer points to a block in the data area. As shown below.

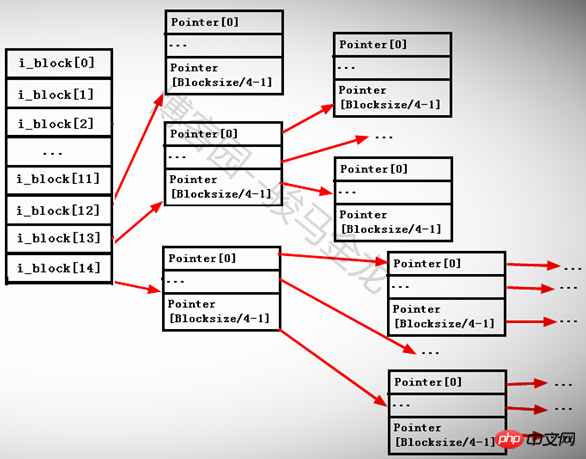

The 13th pointer, i_block[12], is a single indirect pointer. It points to a block that itself stores pointers, that is, i_block[12] --> pointer block --> data block.

The 14th pointer, i_block[13], is a double indirect pointer. It points to a block of pointers, each of which in turn points to another block of pointers, that is, i_block[13] --> pointer block 1 --> pointer block 2 --> data block.

The 15th pointer, i_block[14], is a triple indirect pointer. It points to a block of pointers with two further levels of pointer blocks below it, that is, i_block[14] --> pointer block 1 --> pointer block 2 --> pointer block 3 --> data block.

Since the size of each pointer is 4 bytes, the number of pointers that each pointer block can store is BlockSize/4byte. For example, if the blocksize is 4KB, then a Block can store 4096/4=1024 pointers.

As shown below.

Why have both direct and indirect pointers? If all 15 pointers in an inode were direct and each block were 1KB, the 15 pointers could address only 15 blocks, or 15KB. Since each file has exactly one inode, every file would be limited to 15*1KB = 15KB, which is clearly unreasonable.

A file larger than 15KB but not too large also uses the single indirect pointer i_block[12]. The number of addressable blocks is then 1024/4+12 = 268, so files of up to 268KB can be stored.

A file larger than 268KB but not too large also uses the double indirect pointer i_block[13]. The number of addressable blocks is then (1024/4)^2+1024/4+12 = 65804, so files of about 65804KB ≈ 64MB can be stored.

A file larger than 64MB also uses the triple indirect pointer i_block[14], giving (1024/4)^3+(1024/4)^2+1024/4+12 = 16843020 addressable blocks, so files of about 16843020KB ≈ 16GB can be stored.

What if blocksize = 4KB? Then the maximum file size is ((4096/4)^3+(4096/4)^2+4096/4+12)*4/1024/1024/1024 TB, or about 4TB.

Of course, the value calculated this way is not necessarily the real maximum file size, because other limits also apply; the calculation here only illustrates how a large file is addressed and allocated.
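The addressing scheme can be made concrete by mapping a file's logical block number to the i_block slot and the index inside each pointer block, and by counting the addressable blocks. This is a sketch of the classic ext2/ext3 block map as described above, with hypothetical function names; it ignores the other limits just mentioned.

```python
def pointers_per_block(block_size: int) -> int:
    return block_size // 4                     # each block pointer is 4 bytes


def map_logical_block(n: int, block_size: int = 1024) -> tuple:
    """Which i_block slot, and which indexes inside pointer blocks, reach
    logical block n of a file under the ext2/ext3 block map."""
    p = pointers_per_block(block_size)
    if n < 12:
        return (f"i_block[{n}]",)                               # direct
    n -= 12
    if n < p:
        return ("i_block[12]", n)                                # single indirect
    n -= p
    if n < p ** 2:
        return ("i_block[13]", n // p, n % p)                    # double indirect
    n -= p ** 2
    if n < p ** 3:
        return ("i_block[14]", n // p ** 2, (n // p) % p, n % p)  # triple indirect
    raise ValueError("logical block beyond the triple indirect range")


def max_addressable_blocks(block_size: int) -> int:
    p = pointers_per_block(block_size)
    return 12 + p + p ** 2 + p ** 3


for n in (11, 12, 267, 268, 65803, 65804):
    print(n, map_logical_block(n))
for bs in (1024, 4096):
    blocks = max_addressable_blocks(bs)
    print(bs, blocks, f"{blocks * bs / 1024 ** 3:.2f} GiB")
```

The boundaries 268 and 65804 are exactly the thresholds computed in the text: logical block 268 is the first one that needs the double indirect pointer, and 65804 the first that needs the triple indirect pointer.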

In fact, when you see the calculated values here, you know that the access efficiency of ext2 and ext3 to very large files is low. They need to check too many pointers, especially when the blocksize is 4KB. Ext4 has been optimized for this point. Ext4 uses extent management to replace the block mapping of ext2 and ext3, which greatly improves efficiency and reduces fragmentation.

How are operations such as deleting, copying, renaming, and moving files performed on Linux? And how is a file found when it is accessed? Once you understand the terms introduced above and their roles, the principles of these file operations are easy to see.

Note: What is explained in this section is the behavior under a single file system. For how to use multiple file systems, please see the next section: Multi-file system association.

What happens inside the system when the command "cat /var/log/messages" is executed? Running it successfully involves complex processes such as finding the cat command, checking permissions, and finding the messages file and checking its permissions. Here we explain only how the file /var/log/messages is found, which is the part relevant to this section.

Find the blocks holding the root file system's block group descriptor table and read the GDT (already in memory) to find the block numbers of the inode tables.

Because the GDT is always in the same block group as the superblock, and the superblock always occupies bytes 1024-2047 of the partition, it is easy to determine which block group holds the first copy of the GDT and which blocks the GDT occupies in that group.

In fact, the GDT is already in memory: the root file system is mounted at boot time, and all GDTs are loaded into memory when it is mounted.

Locate the inode of the root "/" in the block of the inode table and find the data block pointed to by "/".

As mentioned earlier, the ext file system reserves some inode numbers, and the inode number of "/" is 2, so the data block of the root directory file can be located directly from this inode number.

The data block of "/" records the directory name var and the pointer to var's inode. Following it leads to var's inode record, which stores the block pointers of var, so the data block of the var directory file is found.

The inode pointer of var leads to the inode record of the var directory. During this lookup, however, the file system also needs to know which block group and which inode table contain the inode record, so the GDT must be read. Again, the GDT is already cached in memory.

The data block of var records the directory name log and its inode pointer. This pointer identifies the block group and inode table containing log's inode, and the inode record then gives the data block of log.

The data block of the log directory file records the file name messages and its inode pointer. This pointer identifies the block group and inode table containing the inode, and the inode record then gives the data block of messages.

Finally, the data blocks of messages are read.

Leaving out the GDT steps makes the process easier to follow: find the GDT --> find the inode of "/" --> find the data block of "/" and read the inode of var --> find the data block of var and read the inode of log --> find the data block of log and read the inode of messages --> find the data blocks of messages and read them.

Note that this is an operation behavior that does not cross the file system.
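The lookup chain above can be modelled with a toy inode table. In this sketch the table, the inode numbers 12 to 14 and the functions `lookup` and `cat` are all hypothetical, the GDT step is omitted, and each directory's dictionary stands in for its data block (name -> inode number); only the starting point, inode 2 for "/", comes from the text.

```python
ROOT_INO = 2   # ext reserves inode 2 for "/"

# Hypothetical toy inode table: inode number -> (type, contents).
# A directory's contents stand in for its data block: name -> inode number.
inode_table = {
    2:  ("dir", {".": 2, "..": 2, "var": 12}),
    12: ("dir", {".": 12, "..": 2, "log": 13}),
    13: ("dir", {".": 13, "..": 12, "messages": 14}),
    14: ("file", b"placeholder log contents\n"),
}


def lookup(path: str) -> int:
    """Resolve an absolute path to an inode number, one component at a time."""
    ino = ROOT_INO
    for name in path.split("/"):
        if not name:                      # skip empty parts from leading or double "/"
            continue
        kind, entries = inode_table[ino]
        if kind != "dir":
            raise NotADirectoryError(path)
        if name not in entries:
            raise FileNotFoundError(path)
        ino = entries[name]               # follow the entry to the next inode
    return ino


def cat(path: str) -> bytes:
    kind, data = inode_table[lookup(path)]
    if kind != "file":
        raise IsADirectoryError(path)
    return data


print(lookup("/var/log/messages"), cat("/var/log/messages"))
```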

Deleting files is divided into ordinary files and directory files. If you know the deletion principles of these two types of files, you will know how to delete other types of special files.

To delete an ordinary file (assuming it has only one hard link): find the inode and data blocks of the file (using the method in the previous section); mark the file's inode number as unused in imap; mark the block numbers of its data blocks as unused in bmap; and delete the record containing the file name from the data block of the directory it lives in. Once that record is deleted, the pointer to the inode is gone.

To delete a directory: find the inodes and data blocks of the directory itself and of all files, subdirectories, and files inside those subdirectories; mark these inode numbers as unused in imap; mark the block numbers occupied by these files as unused in bmap; and delete the record containing the directory name from the data block of the directory's parent. Deleting the record from the parent directory's data block must be the last step: if it were done earlier, a "directory not empty" error would be reported, because the directory still contains files.
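Both deletion procedures can be sketched with imap and bmap as bit lists and a dictionary standing in for each directory's data block. The helper names and all numbers are hypothetical, and every file is assumed to have exactly one hard link (with more links, only the name record would be removed until the last link goes). As in the text, the parent's record is removed only after everything beneath it has been released.

```python
# Toy model of deleting files and directories (hypothetical numbers, one link per file).
imap = [0] * 16          # 1 = inode number in use
bmap = [0] * 16          # 1 = block number in use
inodes = {}              # inode number -> list of its data block numbers
dir_entries = {}         # directory inode -> {name: inode number}


def create(parent, name, ino, blocks, is_dir=False):
    imap[ino] = 1
    for b in blocks:
        bmap[b] = 1
    inodes[ino] = list(blocks)
    if is_dir:
        dir_entries[ino] = {}
    if parent is not None:
        dir_entries[parent][name] = ino


def release(ino):
    """Mark an inode and its blocks unused, handling a directory's contents first."""
    for child in list(dir_entries.get(ino, {}).values()):
        release(child)
    for b in inodes.pop(ino):
        bmap[b] = 0
    imap[ino] = 0
    dir_entries.pop(ino, None)


def delete(parent, name):
    release(dir_entries[parent][name])
    # last step: remove the name's record from the parent directory's data block
    del dir_entries[parent][name]


# usage: build /a.txt and /d/b.txt, then delete the file and the directory
create(None, "/", 2, [1], is_dir=True)
create(2, "a.txt", 3, [2])
create(2, "d", 4, [3], is_dir=True)
create(4, "b.txt", 5, [4, 5])
delete(2, "a.txt")
delete(2, "d")
```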

Renaming comes in two forms: renaming within the same directory and renaming into a different directory. Renaming into a different directory is really a move; see below.

Renaming a file within the same directory only modifies the file name recorded in that directory's data block; it is not a delete-and-recreate.

If the new name conflicts with a file that already exists in the directory, you are asked whether to overwrite it. Overwriting means replacing the conflicting file's record in the directory's data block. For example, if /tmp/ contains a.txt and a.log and you rename a.txt to a.log, you are prompted to overwrite; if you confirm, the a.log record in the data block of /tmp is overwritten, and its inode pointer now points to the inode of a.txt.
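The rename-with-overwrite case can be sketched on a directory's records modeled as `[name, inode]` pairs. `rename_in_dir` and the inode numbers 21 and 22 are hypothetical; the point is that only directory records change and no file data is copied.

```python
# Toy model: the data block of /tmp as an ordered list of [name, inode] records.


def rename_in_dir(records, old, new, overwrite=True):
    """Rename inside one directory by editing records only; file data is untouched."""
    if old == new:
        return records
    names = [name for name, _ in records]
    src = names.index(old)
    if new in names:
        if not overwrite:
            raise FileExistsError(new)
        dst = names.index(new)
        records[dst][1] = records[src][1]  # the a.log record now points to a.txt's inode
        del records[src]                   # and the old name's record disappears
    else:
        records[src][0] = new              # simple case: change the name in place
    return records


tmp = [["a.txt", 21], ["a.log", 22]]
rename_in_dir(tmp, "a.txt", "a.log")       # tmp is now [["a.log", 21]]
```

Inode 22, the old a.log, is no longer referenced by /tmp after the overwrite.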

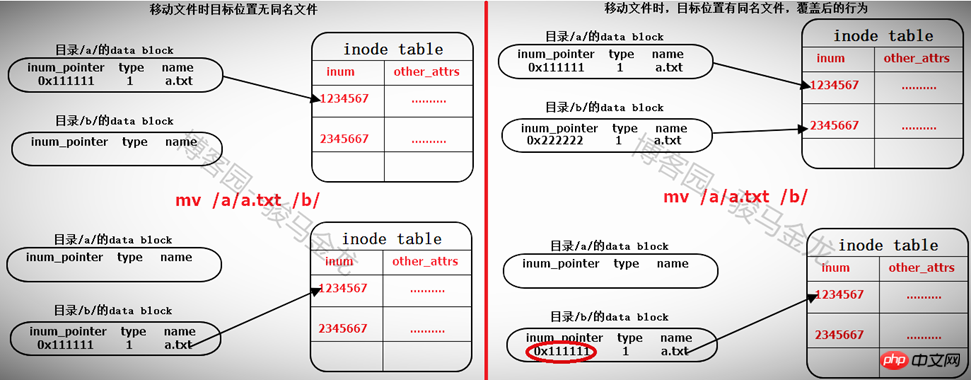

Moving files

Moving a file within the same file system actually modifies the data block of the target directory: a record is added to it containing the file name and a pointer to the file's inode in the inode table (and the record in the source directory is removed). If a file with the same name already exists in the target path, you are asked whether to overwrite it; overwriting replaces the conflicting file's record in the directory data block. Because the inode pointer of the same-named file is overwritten, that file's data blocks can no longer be found, which means the file is effectively deleted (unless it has other hard links).

This is why moving files within the same file system is very fast: it only adds or overwrites a record in a directory's data block. For the same reason, the file's inode number does not change when it is moved.
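A move within one file system can be sketched as pure directory-record editing: pop the record from the source directory, add or overwrite it in the target, and drop one link from any overwritten inode. Paths, inode numbers and the `nlink` table are made up for illustration; note that the moved file keeps its inode number.

```python
# Toy model of mv within one file system: directories map names to inode numbers.
dirs = {
    "/tmp": {"report.txt": 31},
    "/data": {"report.txt": 40},
}
nlink = {31: 1, 40: 1}   # hard-link count of each inode (hypothetical)


def move(src_dir, name, dst_dir):
    """Move by editing directory records only; the inode number never changes."""
    ino = dirs[src_dir].pop(name)       # drop the record from the source directory
    old = dirs[dst_dir].get(name)
    dirs[dst_dir][name] = ino           # add, or overwrite, the record in the target
    if old is not None and old != ino:
        nlink[old] -= 1                 # the overwritten file lost one name
        if nlink[old] == 0:
            del nlink[old]              # no names left: its inode and blocks can be freed
    return ino


move("/tmp", "report.txt", "/data")     # returns 31, the unchanged inode number
```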

Moving a file to a different file system is equivalent to copying it and then deleting the original; see below.

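For storing a file:

(1). Read the GDT, find unused inode numbers in the imap of each (or some) block group, and assign an inode number to the file to be stored;

(2). Fill in the record for that inode number in the inode table;

(3). Add a record for the file to the directory's data block;

(4). Fill the data into data blocks.

Note that filling data blocks calls the block allocator, which allocates one 4KB block at a time: once that 4KB data block is full, the allocator is called again for another 4KB block, and so on until all the data has been written. In other words, storing a 100MB file requires 100*1024/4 = 25600 calls to the block allocator.

Moreover, the block allocator does not know how many blocks will eventually be needed; it simply allocates whenever asked. Before each data block is written, that block is marked as used in bmap, one bit at a time; marking several bmap bits at once is not possible. ext4 optimizes this.

(5). After all the data has been written, update the block pointers in the file's inode record in the inode table so that they point to the data blocks.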
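The per-block allocation described above can be sketched as follows: each call hands out exactly one block and marks exactly one bmap bit, so the number of calls equals the number of blocks. `PerBlockAllocator` and `store` are hypothetical names, a 4KB block size is assumed, and step (5) is reduced to returning the list of block numbers that would become the inode's block pointers.

```python
BLOCK_SIZE = 4 * 1024  # assumed block size


class PerBlockAllocator:
    """One block per call, one bmap bit marked per call (simplified model)."""

    def __init__(self, nblocks):
        self.bmap = bytearray(nblocks)  # 0 = free, 1 = used
        self.calls = 0
        self.next_free = 0

    def alloc_one(self):
        self.calls += 1
        while self.bmap[self.next_free]:   # skip blocks that are already used
            self.next_free += 1
        self.bmap[self.next_free] = 1      # mark exactly one bit
        return self.next_free


def store(alloc, size):
    """Allocate and 'fill' blocks until size bytes are written (step 4)."""
    blocks = []
    remaining = size
    while remaining > 0:
        blocks.append(alloc.alloc_one())   # get one block, then fill up to 4KB of it
        remaining -= BLOCK_SIZE
    return blocks                          # step (5): these become the inode's block pointers


alloc = PerBlockAllocator(30000)
blocks = store(alloc, 100 * 1024 * 1024)
print(alloc.calls)  # 25600
```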

Copying a file is simply another way of storing a file; the steps are the same as for storing a file.

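File operations within a single file system differ from those that involve multiple file systems; the following explains the differences in detail.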

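Note that for any file system to be usable on Linux, it must be mounted on a directory of a file system that is already mounted. For example, /dev/cdrom is mounted on /mnt, and the /mnt directory itself belongs to the "/" file system. Moreover, the first-level mount point of any file system must be a directory in the root file system, because only "/" is self-referencing. The concepts of mount-point level and self-reference are explained below.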

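Suppose /dev/sdb1 is mounted on /mydata and /dev/cdrom is mounted on /mydata/cdrom. Then /mydata is a first-level mount point and is now the entry point of the file system /dev/sdb1. The directory /mydata/cdrom, on which /dev/cdrom is mounted, is a directory inside the file system /dev/sdb1, so /mydata/cdrom is a second-level mount point. Because first-level mount points must lie in the root file system, this can be summarized as: file system 2 is mounted on a directory in file system 1, and file system 1 is mounted on a directory in the root file system.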
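The notion of mount-point levels can be expressed as a small function: a mount point's level is one plus the number of other non-root mount points that enclose it. `mount_levels` is a hypothetical helper that works on path strings only, so it ignores bind mounts, symlinks and mount order.

```python
def mount_levels(mount_points):
    """Hypothetical helper: level 1 = mount point lies in the root file system;
    each enclosing mount point below "/" adds one level."""
    mps = [m.rstrip("/") for m in mount_points if m != "/"]
    levels = {}
    for mp in mps:
        # compare with a trailing "/" so that /mydatax is not inside /mydata
        enclosing = [o for o in mps if o != mp and mp.startswith(o + "/")]
        levels[mp] = len(enclosing) + 1
    return levels


print(mount_levels(["/", "/mydata", "/mydata/cdrom"]))
# {'/mydata': 1, '/mydata/cdrom': 2}
```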

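Next, self-reference. First, only a file system can be self-referencing, and a file system appears as a directory. Self-reference means that in this directory's data block, the inode pointers of the "." and ".." records both point to the same inode record in the inode table, so they have the same inode number, i.e. they are hard links to each other. The root file system is the only file system that can be self-referencing.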

    [root@xuexi /]# ll -ai /
    total 102
    2 dr-xr-xr-x. 22 root root 4096 Jun 6 18:13 .
    2 dr-xr-xr-x. 22 root root 4096 Jun 6 18:13 ..

This also explains why both cd /. and cd /.. leave you in the root directory; it is the most direct manifestation of self-reference.

    [root@xuexi tmp]# cd /.
    [root@xuexi /]#
    [root@xuexi tmp]# cd /..
    [root@xuexi /]#
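Self-reference can also be checked from a program by comparing the device and inode numbers that stat() reports for "/." and "/..". The sketch below uses Python's `os.stat` (the standard library's `os.path.samefile` performs the same comparison); `same_inode` is a hypothetical helper name, and a fresh temporary directory serves as the contrast case, where "." and ".." are different directories.

```python
import os
import tempfile


def same_inode(a, b):
    """True if two paths resolve to the same inode on the same device."""
    sa, sb = os.stat(a), os.stat(b)
    return (sa.st_dev, sa.st_ino) == (sb.st_dev, sb.st_ino)


print(same_inode("/.", "/.."))            # root: "." and ".." are the same directory
d = tempfile.mkdtemp()
print(same_inode(d + "/.", d + "/.."))    # ordinary directory: ".." is its parent
```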

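One question remains, though: "." and ".." under the root directory are both hard links to the "/" directory, so after subtracting the number of subdirectories under the root, the hard-link count should be 3, yet it is actually 2. Why is that?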

    [root@server2 tmp]# a=$(ls -al / | grep "^d" | wc -l)
    [root@server2 tmp]# b=$(ls -l / | grep "^d" | wc -l)
    [root@server2 tmp]# echo $((a - b))
    2

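When you mount a file system on a directory, for example with "mount /dev/cdrom /mnt", all files in /mnt become temporarily invisible once the mount succeeds, and the directory's permissions and owner (if ordinary users are allowed to mount) change too. Do you know why?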

Using "mount /dev/cdrom /mnt" as an example, the following explains in detail what happens during mounting.

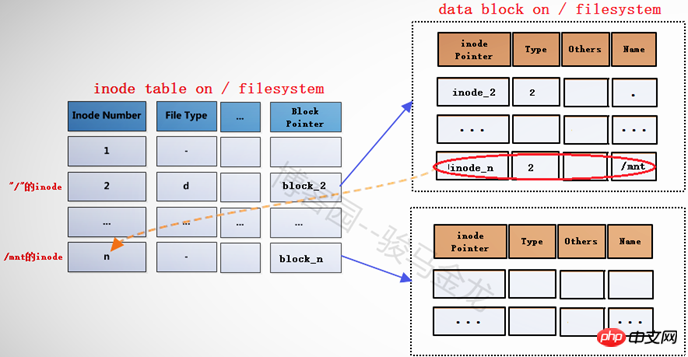

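Before the file system /dev/cdrom (treated here as a file system for simplicity) is mounted on the mount point /mnt, /mnt is a directory in the root file system. The data block of "/" records some information about /mnt, including the inode pointer inode_n, and in the inode table the inode record for /mnt stores the block pointer block_n. At this point, both are ordinary pointers.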

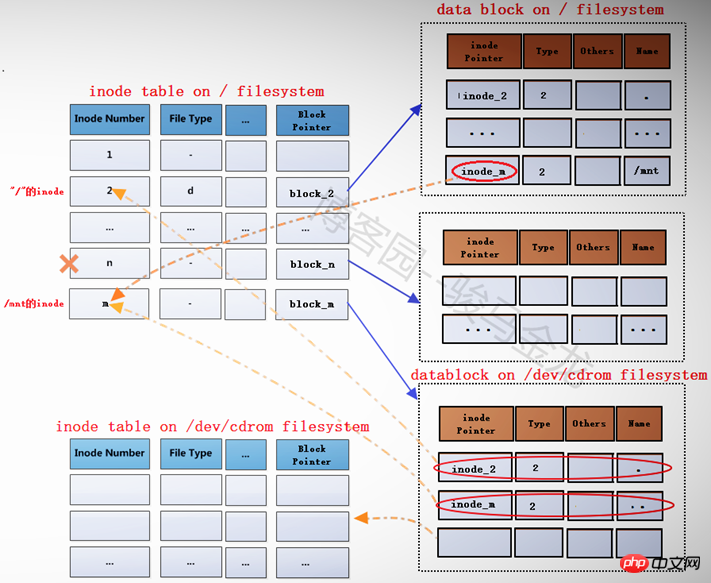

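Once /dev/cdrom is mounted on /mnt, /mnt becomes the entry point of another file system, so it has to connect the inodes and data blocks of the two file systems. How is that done? See the figure.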

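A new inode record m is allocated for /mnt in the root file system's inode table, and its block pointer block_m points to the data blocks of the file system /dev/cdrom. Since /mnt now has the new inode record m, the inode pointer in the data block of "/" must also be changed to inode_m so that it points to record m. Meanwhile, the original inode record n in the inode table is marked as temporarily unavailable.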

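Because block_m points to the data blocks of /dev/cdrom, strictly speaking only the metadata of /mnt, i.e. inode record m, remains on the root file system, while the data blocks of /mnt are now on /dev/cdrom. This is how mounting a new file system makes it possible to cross file systems: the metadata and the data of the mount point are stored on different file systems.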

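After the mount completes, the mount record and related information appear in the three files /proc/self/{mounts,mountstats,mountinfo}, and the information in /proc/self/mounts is synchronized to /etc/mtab. If the -n option is given when mounting, /etc/mtab is not updated.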
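The mount records mentioned above can be read back programmatically. /proc/self/mounts uses an fstab-like format (device, mount point, type, options, dump, pass), with spaces and other special characters in paths escaped as three-digit octal sequences such as `\040`. The parser below is a minimal sketch; `parse_mounts` and `read_mounts` are hypothetical helper names.

```python
import re


def _unescape(field):
    # special characters in paths appear as \ooo octal escapes, e.g. \040 for a space
    return re.sub(r"\\([0-7]{3})", lambda m: chr(int(m.group(1), 8)), field)


def parse_mounts(text):
    """Parse /proc/self/mounts-style lines: device mountpoint fstype options dump pass."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        device, mountpoint, fstype, options = fields[:4]
        entries.append({
            "device": _unescape(device),
            "mountpoint": _unescape(mountpoint),
            "fstype": fstype,
            "options": options.split(","),
        })
    return entries


def read_mounts(path="/proc/self/mounts"):
    with open(path) as f:
        return parse_mounts(f.read())
```

On a Linux machine, after "mount /dev/cdrom /mnt", `read_mounts()` should include an entry whose mount point is /mnt.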

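Unmounting a file system essentially removes the temporarily created inode record (after first checking whether it is in use) together with its pointer, and points the pointer back to the original inode record, so the block pointer in that record takes effect again and the corresponding data blocks are found once more. Because unmounting only removes the inode record, you can unmount using either the mount point or the file system, since the two are linked together.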
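The mount and unmount steps above can be modeled with a few dictionaries: mounting allocates record m, points the mnt record in "/" at it and sets record n aside; unmounting removes m and points back at n. This is a toy model of the article's conceptual description (the class, method names and directory contents are hypothetical); the Linux kernel itself tracks mounts in the VFS layer rather than rewriting on-disk directory records.

```python
class RootFS:
    """Toy model of the mount/umount description (records "n" and "m" as in the text)."""

    def __init__(self):
        # inode table of the root fs: inode record -> data block its block pointer reaches
        self.inode_table = {"n": {"a.txt": 1, "abcdir": 2}}
        self.root_block = {"mnt": "n"}   # record for mnt in the data block of "/"
        self.saved = None                # original record, marked temporarily unavailable

    def list_mnt(self):
        return sorted(self.inode_table[self.root_block["mnt"]])

    def mount(self, other_fs_block):
        self.saved = self.root_block["mnt"]        # record n becomes unreachable
        self.inode_table["m"] = other_fs_block     # new record m: block_m -> other fs
        self.root_block["mnt"] = "m"               # inode_n replaced by inode_m

    def umount(self):
        del self.inode_table["m"]                  # drop the temporary record
        self.root_block["mnt"], self.saved = self.saved, None  # point back at n
```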

Below are some analyses and conclusions.

(1). The inode record of a mount point is newly allocated when mounting.

    # inode number of the mount point /mnt before mounting
    [root@server2 tmp]# ll -id /mnt
    100663447 drwxr-xr-x. 2 root root 6 Aug 12 2015 /mnt
    [root@server2 tmp]# mount /dev/cdrom /mnt

    # inode number of the mount point after mounting
    [root@server2 tmp]# ll -id /mnt
    1856 dr-xr-xr-x 8 root root 2048 Dec 10 2015 mnt

This confirms that the inode number is indeed reassigned.

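(2). After mounting, the original contents of the mount point are temporarily invisible and unusable; after unmounting, the files are visible and usable again.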

    # Before mounting, create a few files in the mount point
    [root@server2 tmp]# touch /mnt/a.txt
    [root@server2 tmp]# mkdir /mnt/abcdir

    # Mount
    [root@server2 tmp]# mount /dev/cdrom /mnt
    # After mounting, the newly created files can no longer be found in the mount point
    [root@server2 tmp]# ll /mnt
    total 636
    -r--r--r-- 1 root root 14 Dec 10 2015 CentOS_BuildTag
    dr-xr-xr-x 3 root root 2048 Dec 10 2015 EFI
    -r--r--r-- 1 root root 215 Dec 10 2015 EULA
    -r--r--r-- 1 root root 18009 Dec 10 2015 GPL
    dr-xr-xr-x 3 root root 2048 Dec 10 2015 images
    dr-xr-xr-x 2 root root 2048 Dec 10 2015 isolinux
    dr-xr-xr-x 2 root root 2048 Dec 10 2015 LiveOS
    dr-xr-xr-x 2 root root 612352 Dec 10 2015 Packages
    dr-xr-xr-x 2 root root 4096 Dec 10 2015 repodata
    -r--r--r-- 1 root root 1690 Dec 10 2015 RPM-GPG-KEY-CentOS-7
    -r--r--r-- 1 root root 1690 Dec 10 2015 RPM-GPG-KEY-CentOS-Testing-7
    -r--r--r-- 1 root root 2883 Dec 10 2015 TRANS.TBL
    # After unmounting, the files in /mnt are visible again
    [root@server2 tmp]# umount /mnt
    [root@server2 tmp]# ll /mnt
    total 0
    drwxr-xr-x 2 root root 6 Jun 9 08:18 abcdir
    -rw-r--r-- 1 root root 0 Jun 9 08:18 a.txt

This happens because, after a file system is mounted, the original inode record of the mount point is temporarily marked as unavailable; crucially, no inode pointer points to that record any more. After the file system is unmounted, the original inode record is re-enabled, and the inode pointer for mnt in the data block of "/" points to it again.

(3). After mounting, the metadata and data block of the mount point are stored on different file systems.

(4). Even with a file system mounted on it, the mount point is still a file of its original file system.

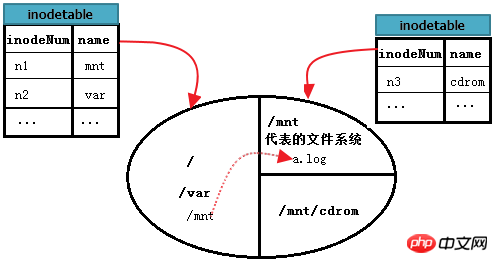

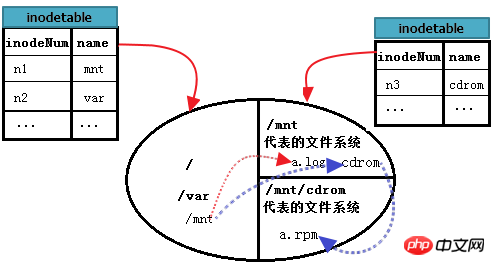

Suppose the circle in the figure represents a hard disk divided into 3 areas, i.e. 3 file systems. root is the root file system; /mnt is the entry point of another file system A, which is mounted on /mnt; and /mnt/cdrom is the entry point of file system B, which is mounted on /mnt/cdrom. Each file system maintains its own inode tables; the inode table shown for each file system is assumed to be the collection of the inode tables of all its block groups.

How is /var/log/messages read? It lives in the same file system as "/", so this is the single-file-system read already explained in detail above.

But how is /mnt/a.log in file system A read? First, find the inode record of /mnt in the root file system; this is a lookup within a single file system. Then use the block pointers of that inode record to locate the data blocks of /mnt, which are data blocks of file system A. Next, read the a.log record from the data block of /mnt and, following a.log's inode pointer, locate its inode record in the inode table of file system A. Finally, use the block pointers of that inode record to find the data blocks of a.log. At this point, the contents of /mnt/a.log can be read.

The following figure can describe the above process more completely.

How, then, is /mnt/cdrom/a.rpm read? The mount point cdrom of file system B lies under /mnt, so there is one more step: first find "/", then find mnt in the root file system and enter file system A, find the data block of cdrom there, and then enter cdrom (file system B) to find a.rpm. In other words, the mnt directory file is stored in the root file system, the cdrom directory file is stored in the mnt file system, and a.rpm is stored in the cdrom file system.
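The cross-file-system lookup can be sketched by giving each file system its own inode table and keeping a small mount table that maps "(file system, mount-point inode)" to the root inode of the file system mounted there; whenever a lookup lands on a mount point, it continues in the mounted file system. The data, inode numbers and the `mounts` table are hypothetical, and contents are stored directly in the inode tables for brevity.

```python
# Per-file-system inode tables: directories map names to inode numbers,
# files hold their contents directly (hypothetical data).
filesystems = {
    "root": {2: {"var": 10, "mnt": 11}, 10: {}, 11: {}},
    "A": {2: {"a.log": 5, "cdrom": 6}, 5: "A: a.log contents", 6: {}},
    "B": {2: {"a.rpm": 7}, 7: "B: a.rpm contents"},
}
# (file system, inode of mount-point directory) -> (mounted file system, its root inode)
mounts = {("root", 11): ("A", 2), ("A", 6): ("B", 2)}


def resolve(path):
    fs, ino = "root", 2
    for name in filter(None, path.split("/")):
        ino = filesystems[fs][ino][name]            # lookup inside the current file system
        fs, ino = mounts.get((fs, ino), (fs, ino))  # step into a mounted file system, if any
    return fs, ino


def read_path(path):
    fs, ino = resolve(path)
    return filesystems[fs][ino]


print(read_path("/mnt/cdrom/a.rpm"))  # B: a.rpm contents
```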

Extending the figure above gives the following.

Compared with ext2, ext3 adds a log (journaling) function.

The ext2 file system has only two areas: the data area and the metadata area. If power is suddenly lost while data is being written into data blocks, the consistency of the file system's data and state is checked at the next boot. This check-and-repair may take a long time, and sometimes the damage cannot be repaired even after checking. The reason is that after a sudden power loss, the file system does not know where the blocks of the file being stored began and ended, so it has to scan the entire file system to rule out inconsistencies (presumably this is how the check works).

When an ext3 file system is created, it is divided into three areas: the data area, the log area, and the metadata area. Each time data is stored, the metadata-area activity that ext2 would perform is first carried out in the log area, and only after the file is marked as committed is the information transferred from the log area to the metadata area. If power is suddenly lost while a file is being stored, the next check-and-repair only needs to examine the records in the log area, marking the corresponding data blocks as unused in bmap and the inode number as unused, so there is no need to spend a long time scanning the entire file system.
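The journaling idea can be sketched as a redo log: metadata changes are recorded in a log area, a commit mark is added, and only then are they transferred to bmap/imap. After a crash, recovery looks only at the log: committed transactions are applied, and uncommitted ones are discarded, so their inode and blocks stay unused. This is a toy model of the description above (`JournaledFS` and its methods are hypothetical); real ext3 journaling works on whole metadata blocks and supports several journaling modes.

```python
class JournaledFS:
    """Toy redo-log model: log area first, commit mark, then metadata area."""

    def __init__(self, nblocks=8, ninodes=8):
        self.bmap = [0] * nblocks   # metadata area: block bitmap
        self.imap = [0] * ninodes   # metadata area: inode bitmap
        self.log = []               # log area

    def log_store(self, ino, blocks):
        """Record the metadata changes of storing a file in the log area first."""
        txn = {"inode": ino, "blocks": list(blocks), "committed": False}
        self.log.append(txn)
        return txn

    def commit(self, txn):
        txn["committed"] = True     # the commit mark

    def checkpoint(self):
        """Transfer committed changes from the log area to the metadata area."""
        for txn in [t for t in self.log if t["committed"]]:
            self.imap[txn["inode"]] = 1
            for b in txn["blocks"]:
                self.bmap[b] = 1
            self.log.remove(txn)

    def recover(self):
        """After a crash, examine only the log instead of scanning the whole file system:
        apply committed transactions and discard uncommitted ones, whose inode and
        blocks therefore remain marked as unused."""
        self.checkpoint()
        self.log.clear()


fs = JournaledFS()
t1 = fs.log_store(3, [1, 2])
fs.commit(t1)
fs.log_store(4, [5])   # power is lost before this transaction commits
fs.recover()
```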

Because in ext3 metadata passes through the extra log area before reaching the metadata area, ext3 performs slightly worse than ext2, especially when writing many small files. However, thanks to optimizations elsewhere in ext3, the performance gap between ext3 and ext2 is almost negligible.

Recall the storage format of ext2 and ext3 described earlier. They use the block as the storage unit, and each block has a bit in bmap marking whether it is free. Although dividing the disk into block groups improves efficiency, bmap is still used within each block group to mark that group's blocks, so for a huge file, scanning the whole bmap is a huge job. In addition, ext2/3 inodes use direct and indirect addressing, and with triple-indirect pointers the number of pointers that may have to be traversed is enormous.
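To see how large the ext2/ext3 addressing tree gets, count the blocks reachable from one inode: 12 direct pointers plus one single-, one double- and one triple-indirect block. Assuming 4-byte block pointers, a 4KB indirect block holds 1024 pointers. This sketch counts pointer-reachable blocks only; the real maximum file size is also limited by other inode fields.

```python
def ext23_addressable_blocks(block_size=4096, ptr_size=4):
    """Blocks reachable from one ext2/ext3 inode: 12 direct pointers plus
    single-, double- and triple-indirect blocks."""
    per_block = block_size // ptr_size   # pointers that fit in one indirect block
    return 12 + per_block + per_block**2 + per_block**3


print(ext23_addressable_blocks())       # 1074791436 (4KB blocks)
print(ext23_addressable_blocks(1024))   # 16843020 (1KB blocks)
```

Almost all of those blocks sit behind the triple-indirect pointer, and reaching any one of them means reading three levels of indirect blocks first.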

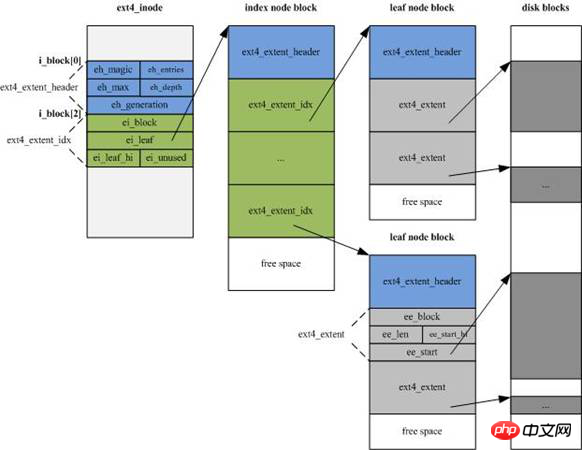

The biggest feature of ext4 is that, building on ext3, it manages space in extents (also called segments). An extent covers as many physically contiguous blocks as possible. Inode addressing has also been improved with an extent tree.

By default, EXT4 no longer uses EXT3's block-mapping allocation and uses extent-based allocation instead.

(1). Structural characteristics of EXT4

EXT4's overall structure is similar to EXT3's: space is divided into block groups of equal size, and each block group holds a fixed number of inodes, possibly a superblock (or a backup of it), and the GDT.

The inode structure of EXT4 has changed significantly. To hold new information, the inode size has grown from EXT3's 128 bytes to a default of 256 bytes. Inode addressing also no longer uses EXT3's scheme of "12 direct block pointers + 1 single-indirect block + 1 double-indirect block + 1 triple-indirect block"; instead it uses 4 extents, each recording the starting block number of a run and the number of contiguous blocks in it (an entry may point directly to the data area, or to index blocks).

The extents occupy the green area of the inode block in the figure. This area is 60 bytes in total, the same space that holds EXT3's 15 four-byte block pointers: a 12-byte extent header followed by four 12-byte extent entries.
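The 60-byte layout can be checked against the ext4 on-disk format used by the Linux kernel: an `ext4_extent_header` (magic 0xF30A, entry count, capacity, tree depth, generation) followed by `ext4_extent` entries (logical block, length, and a 48-bit physical start split into high 16 and low 32 bits), each 12 bytes and little-endian. The sketch packs and unpacks a depth-0 (leaf) root; `pack_i_block` and `unpack_i_block` are hypothetical helper names, and index nodes (depth > 0) and uninitialized extents are not handled.

```python
import struct

HEADER_FMT = "<HHHHI"    # eh_magic, eh_entries, eh_max, eh_depth, eh_generation
EXTENT_FMT = "<IHHI"     # ee_block, ee_len, ee_start_hi, ee_start_lo
EXT4_EXT_MAGIC = 0xF30A


def pack_i_block(extents):
    """Build the 60-byte i_block area of a depth-0 extent tree root.
    extents: list of (logical_block, length, physical_start), at most 4."""
    if len(extents) > 4:
        raise ValueError("an inode holds at most 4 extents")
    data = struct.pack(HEADER_FMT, EXT4_EXT_MAGIC, len(extents), 4, 0, 0)
    for logical, length, phys in extents:
        data += struct.pack(EXTENT_FMT, logical, length, phys >> 32, phys & 0xFFFFFFFF)
    return data.ljust(60, b"\0")     # unused entries stay zero


def unpack_i_block(raw):
    magic, entries, _max, depth, _gen = struct.unpack_from(HEADER_FMT, raw, 0)
    if magic != EXT4_EXT_MAGIC or depth != 0:
        raise ValueError("not a leaf extent root")
    out = []
    for i in range(entries):
        logical, length, hi, lo = struct.unpack_from(EXTENT_FMT, raw, 12 + 12 * i)
        out.append((logical, length, (hi << 32) | lo))
    return out
```

Both formats are 12 bytes (`struct.calcsize`), so header plus four entries fill exactly the 60-byte area.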

(2). Structural changes when EXT4 deletes data

After deleting data, EXT4 releases the file system's bitmap bits, updates the directory structure, and releases the inode bits, in that order.

(3). ext4 uses multi-block allocation.

When storing data, the block allocator in ext3 can only allocate one 4KB block at a time, and bmap is marked once before each block is stored. If a 1GB file is stored with a 4KB block size, the block allocator is called once for every block, i.e. 1024*1024KB / 4KB = 262144 times, and bmap is also marked 262144 times.

In ext4, allocation is extent-based. A single call to the block allocator can allocate a run of contiguous blocks, and the corresponding bmap bits are marked all at once before the run is stored. This greatly improves storage efficiency for large files.
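The difference can be sketched with a bitmap allocator that, given a request for n blocks, looks for a run of free blocks and marks the whole run in one call. This first-fit/longest-run approach is a simplification written for illustration; ext4's real multiblock allocator is considerably more elaborate. `alloc_extent` and `store_extents` are hypothetical names.

```python
def alloc_extent(bmap, want):
    """Find a free run of up to `want` blocks and mark the whole run in one call.
    Returns (start, length); length < want if no long-enough run exists."""
    best_start, best_len = None, 0
    i = 0
    while i < len(bmap):
        if bmap[i]:
            i += 1
            continue
        j = i
        while j < len(bmap) and not bmap[j] and j - i < want:
            j += 1                      # extend the free run
        if j - i > best_len:
            best_start, best_len = i, j - i
            if best_len == want:
                break                   # first run that is long enough wins
        i = j
    if best_start is None:
        raise MemoryError("no free blocks")
    for k in range(best_start, best_start + best_len):
        bmap[k] = 1                     # mark all bits of the run together
    return best_start, best_len


def store_extents(bmap, nblocks):
    """Store nblocks using as few allocator calls (extents) as possible."""
    calls, extents = 0, []
    while nblocks > 0:
        start, length = alloc_extent(bmap, nblocks)
        calls += 1
        extents.append((start, length))
        nblocks -= length
    return calls, extents


# 1GB of 4KB blocks on an empty bitmap: one call instead of 262144
print(store_extents(bytearray(300000), 262144)[0])  # 1
```

On a fragmented bitmap the file simply ends up in several extents, one allocator call each.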

The biggest disadvantage of ext-family file systems is that everything that needs to be divided up is divided when the file system is created, so that space can be allocated directly when used; in other words, they do not support dynamic partitioning and dynamic allocation. For smaller partitions the speed is fine, but for a very large disk, formatting is extremely slow. For example, formatting a disk array of tens of terabytes as ext4 may exhaust all your patience.

Apart from its very slow formatting, ext4 is still a very good choice. Of course, file systems developed by different companies each have their own characteristics; what matters most is choosing the file system type that fits your needs.

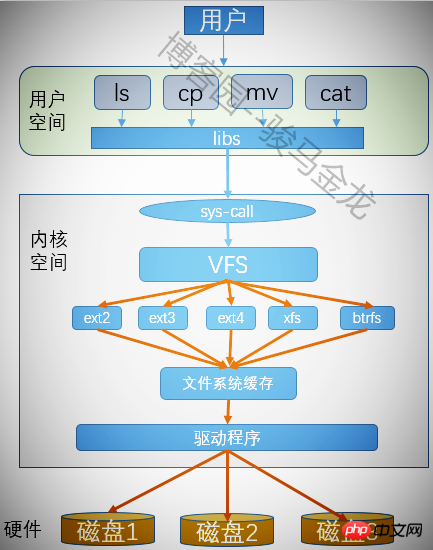

After formatting, each partition can hold a file system. Linux recognizes many types of file systems, so how does it tell them apart? Moreover, when we operate on files in a partition, we never specify which file system they belong to. How can users operate on all these different file systems in the same way? This is what the virtual file system does.

The virtual file system provides users with a common interface for operating on all kinds of file systems, so that when running a program, users need not consider which type of file system a file is on or which system calls and functions that file system requires. With the virtual file system, every program only needs to call the VFS system calls, and VFS takes care of the rest.
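The VFS idea can be sketched as a common interface that every concrete file system implements, plus a dispatcher that picks the file system by the longest matching mount point. The class names are hypothetical stand-ins rather than kernel structures (in Linux, the real interface is made of structures such as `file_operations` and `inode_operations`), and the upper-case lookup in `ISO9660` only illustrates that implementations may differ internally.

```python
from abc import ABC, abstractmethod


class FileSystem(ABC):
    """Common interface every concrete file system implements (hypothetical)."""

    @abstractmethod
    def read(self, relpath: str) -> bytes: ...


class Ext4(FileSystem):
    def __init__(self, files):
        self.files = files          # relpath -> bytes

    def read(self, relpath):
        return self.files[relpath]


class ISO9660(FileSystem):
    def __init__(self, files):
        self.files = files          # stored under upper-case names

    def read(self, relpath):
        # illustrative: a different file system may look names up differently
        return self.files[relpath.upper()]


class VFS:
    def __init__(self):
        self.mounts = {}            # mount point -> FileSystem

    def mount(self, point, fs):
        self.mounts[point.rstrip("/") or "/"] = fs

    def read(self, path):
        """One entry point for programs; VFS picks the file system by the
        longest mount point that contains the path."""
        best = max((p for p in self.mounts
                    if p == "/" or path == p or path.startswith(p + "/")), key=len)
        return self.mounts[best].read(path[len(best):].lstrip("/"))


vfs = VFS()
vfs.mount("/", Ext4({"etc/hostname": b"xuexi\n"}))
vfs.mount("/mnt", ISO9660({"EULA": b"eula text"}))
print(vfs.read("/etc/hostname"), vfs.read("/mnt/eula"))
```

The caller uses the same `vfs.read` for both paths and never names the file system type.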
