What is a process?

A program cannot run on its own. It runs only after it has been loaded into memory and the operating system has allocated resources to it. A program in execution is called a process. The difference between a program and a process is this: a program is a collection of instructions, a static description of what the process will do, whereas a process is an execution of the program and is a dynamic concept.

What is a thread?

A thread is the smallest unit of execution that the operating system can schedule. It is contained within a process and is the actual unit of work in that process. A thread is a single sequential flow of control within a process. A process can run multiple threads concurrently, each performing a different task.

What is the difference between a process and a thread?

- Threads of the same process share that process's memory space, while each process has its own independent memory.
- Threads of the same process can communicate directly through shared data. Two processes must communicate through an intermediary such as a pipe, a queue, or a socket.
- Creating a new thread is cheap. Creating a new process typically requires duplicating (cloning) its parent process.
- A thread can control and operate on other threads in the same process, whereas a process can only operate on its own child processes.
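The first difference can be seen in a small sketch. Several threads append to one list, and the main thread sees every write because all of them run inside the same process. A child process started with multiprocessing would instead work on its own copy of the data. The names `shared`, `pids` and `worker` are hypothetical and used only for illustration.

```python
import os
import threading

# Hypothetical names for illustration: shared, pids, worker.
shared = []   # a single list object, visible to every thread in this process
pids = []     # the process id observed by each thread

def worker(n):
    # Every thread appends to the same list object; nothing is copied.
    shared.append(n)
    pids.append(os.getpid())

threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared))               # [0, 1, 2, 3]: the main thread sees all writes
print(set(pids) == {os.getpid()})   # True: every thread ran in this one process
```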

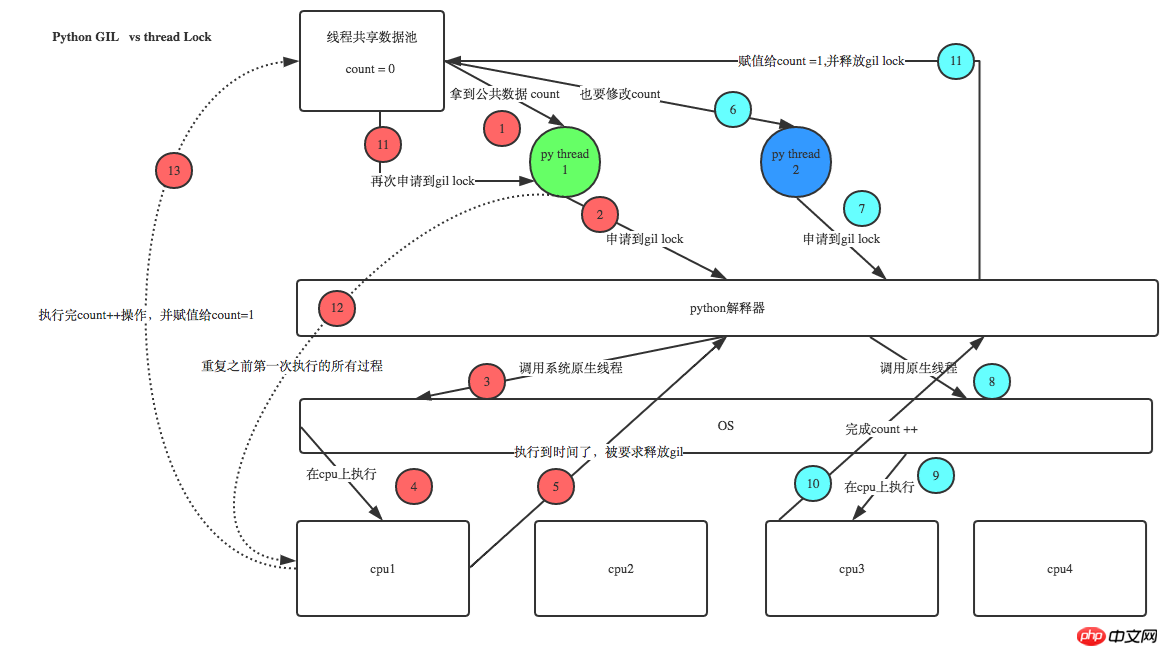

Python GIL (Global Interpreter Lock)

In CPython, no matter how many threads you start or how many CPU cores the machine has, only one thread executes Python bytecode at any given moment. The lock that enforces this is the GIL.
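One rough way to observe the GIL is to time a CPU-bound function twice: once with two calls in a row, and once with the two calls in two threads. This sketch assumes a standard CPython build with the GIL enabled; the experimental free-threaded builds offered since Python 3.13 behave differently. `count_down` and `N` are hypothetical names. Timings depend on the machine, so no output is shown. On a GIL build the threaded run is typically no faster than the sequential one. Threads still help with I/O-bound work, because blocking I/O calls release the GIL.

```python
import threading
import time

# Hypothetical names for illustration: count_down, N.
def count_down(n):
    # Pure-Python CPU-bound loop; it needs the GIL for every step.
    while n > 0:
        n -= 1

N = 2_000_000

start = time.perf_counter()
count_down(N)
count_down(N)
sequential = time.perf_counter() - start

start = time.perf_counter()
threads = [threading.Thread(target=count_down, args=(N,)) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()
threaded = time.perf_counter() - start

# With the GIL, the two threads take turns instead of using two cores at once.
print(f"sequential: {sequential:.2f}s  threaded: {threaded:.2f}s")
```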

Python threading module

Direct call

```python
import threading, time

def run_num(num):
    """
    Define the function to be run by the thread
    :param num: the number to print
    :return: None
    """
    print("running on number:%s" % num)
    time.sleep(3)

if __name__ == '__main__':
    # Generate a thread instance t1
    t1 = threading.Thread(target=run_num, args=(1,))
    # Generate a thread instance t2
    t2 = threading.Thread(target=run_num, args=(2,))
    # Start thread t1
    t1.start()
    # Start thread t2
    t2.start()
    # Get the thread names (the older getName() method is deprecated since Python 3.10)
    print(t1.name)
    print(t2.name)
```

Output:

```
running on number:1
running on number:2
Thread-1
Thread-2
```

On Python 3.10 and later, the default names also include the target function, for example Thread-1 (run_num).
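Thread discards whatever its target returns. A common workaround, shown here as a minimal sketch, is to have the target write its result into a shared container and to read that container after join(). The sketch also uses the name= parameter and the .name attribute in place of getName(). The names `results` and `square` are hypothetical.

```python
import threading

# Hypothetical names for illustration: results, square.
results = {}  # thread argument -> computed value

def square(num):
    # Thread ignores the target's return value, so store the result instead.
    results[num] = num * num

threads = [
    threading.Thread(target=square, args=(i,), name=f"worker-{i}")
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # after join(), every result has been written

print(dict(sorted(results.items())))  # {0: 0, 1: 1, 2: 4}
print([t.name for t in threads])      # ['worker-0', 'worker-1', 'worker-2']
```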

Inherited call (subclassing Thread)

```python
import threading, time

class MyThread(threading.Thread):
    def __init__(self, num):
        super().__init__()  # the base class must be initialized first
        self.num = num

    # Define the function to be run by each thread. The method must be named run
    def run(self):
        print("running on number:%s" % self.num)
        time.sleep(3)

if __name__ == '__main__':
    t1 = MyThread(1)
    t2 = MyThread(2)
    t1.start()
    t2.start()
```

Output:

```
running on number:1
running on number:2
```
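The method has to be named run because start() arranges for run() to execute in the new thread. If you call run() directly, it runs in the calling thread like any ordinary method. The subclass `NameRecorder` below is hypothetical and exists only to make this visible.

```python
import threading

class NameRecorder(threading.Thread):
    """Hypothetical subclass that records which thread executed run()."""

    def __init__(self):
        super().__init__()
        self.ran_in = None

    def run(self):
        # Record the name of whichever thread is executing this method.
        self.ran_in = threading.current_thread().name

a = NameRecorder()
a.start()   # correct: run() executes in the new thread
a.join()

b = NameRecorder()
b.run()     # plain method call: runs in the calling thread, no new thread

print(a.ran_in == a.name)                          # True
print(b.ran_in == threading.current_thread().name) # True
```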

Join and Daemon

join() blocks the calling thread (here, the main thread) until the thread whose join() was called has finished. Code after the join() call does not run until then.

When several threads are each started and then joined in turn (start, join, start, join), they run one after another: the next thread is not started until the previous one has finished.

With no argument, join() waits until the thread ends before the following code runs. With a timeout argument in seconds, join() waits at most that long and then lets the main thread continue, whether or not the thread has finished.

```python
import threading, time

class MyThread(threading.Thread):
    def __init__(self, num):
        super().__init__()
        self.num = num

    # Define the function to be run by each thread. The method must be named run
    def run(self):
        print("running on number:%s" % self.num)
        time.sleep(3)
        print("thread:%s" % self.num)

if __name__ == '__main__':
    t1 = MyThread(1)
    t2 = MyThread(2)
    t1.start()
    t1.join()   # wait for t1 to finish before starting t2
    t2.start()
    t2.join()
```

Output:

```
running on number:1
thread:1
running on number:2
thread:2
```

Passing a timeout to join() has the following effect (using the same MyThread class):

```python
if __name__ == '__main__':
    t1 = MyThread(1)
    t2 = MyThread(2)
    t1.start()
    t1.join(2)  # wait at most 2 seconds; t1 sleeps for 3
    t2.start()
    t2.join()
```

Output:

```
running on number:1
running on number:2
thread:1
thread:2
```
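join(timeout) returns in both cases: when the thread has finished, and when the timeout expires. To tell which happened, check is_alive() afterwards. The sketch below uses a hypothetical `slow_task` with a short sleep standing in for real work.

```python
import threading
import time

# Hypothetical name for illustration: slow_task.
def slow_task():
    time.sleep(0.5)  # stands in for real work

t = threading.Thread(target=slow_task)
t.start()

t.join(0.1)                # wait at most 0.1 s
timed_out = t.is_alive()   # True: join() returned, but the thread is still running

t.join()                   # no timeout: wait until the thread really ends
finished = not t.is_alive()

print(timed_out, finished)  # True True
```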

Daemon

```python
import time, threading

def run(n):
    print("%s".center(20, "*") % n)
    time.sleep(2)
    print("done".center(20, "*"))

def main():
    for i in range(5):
        t = threading.Thread(target=run, args=(i,))
        t.start()
        t.join(1)
        print("starting thread", t.name)

m = threading.Thread(target=main, args=())
# Make m a daemon thread. When the main thread of the program exits, m exits too,
# and so do the threads started by m (they inherit m's daemon flag),
# whether or not they have finished. (setDaemon() is deprecated since Python 3.10.)
m.daemon = True
m.start()
m.join(3)
print("main thread done".center(20, "*"))
```

Output:

```
*********0*********
starting thread Thread-2
*********1*********
********done********
starting thread Thread-3
*********2*********
**main thread done**
```

The exact interleaving can vary between runs. On Python 3.10 and later, thread names also include the target function, for example Thread-2 (run).
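The comment in the example depends on one rule: a new thread that does not set daemon explicitly copies the daemon flag of the thread that creates it. The sketch below checks this directly. `parent`, `child` and `inherited` are hypothetical names.

```python
import threading
import time

# Hypothetical names for illustration: parent, child, inherited.
inherited = []

def child():
    time.sleep(0.1)

def parent():
    c = threading.Thread(target=child)  # daemon not given explicitly...
    inherited.append(c.daemon)          # ...so it copies the creating thread's flag
    c.start()
    c.join()

m = threading.Thread(target=parent, daemon=True)  # same as setting m.daemon = True before start()
m.start()
m.join()

from_main = threading.Thread(target=child).daemon  # created by the (non-daemon) main thread

print(m.daemon, inherited, from_main)  # True [True] False
```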

Thread lock (mutex)

A process can start multiple threads, and they all share that process's memory space, so every thread can access the same data. If two threads modify the same data at the same time, updates can be lost. An operation such as num -= 1 reads the value, changes it, and writes it back in separate steps, and the interpreter can switch threads between those steps. A thread lock prevents this. Whether updates are actually lost in a given run depends on the interpreter version and timing.

Unlocked version:

```python
import time, threading

def addNum():
    # Get this global variable in each thread
    global num
    print("--get num:", num)
    time.sleep(1)
    # Perform the -1 operation on this shared variable
    num -= 1

# Set a shared variable
num = 100
thread_list = []
for i in range(100):
    t = threading.Thread(target=addNum)
    t.start()
    thread_list.append(t)

# Wait for all threads to finish executing
for t in thread_list:
    t.join()

print("final num:", num)
```

Locked version

While one thread holds a Lock, other threads cannot access the shared resource it protects. A Lock can be acquired only once, even by the thread that already holds it. If the same thread calls acquire() a second time, it deadlocks and the program cannot continue.

```python
import time, threading

def addNum():
    # Get this global variable in each thread
    global num
    print("--get num:", num)
    time.sleep(1)
    # Lock before modifying data
    lock.acquire()
    # Perform the -1 operation on this shared variable
    num -= 1
    # Release after modification
    lock.release()

# Set a shared variable
num = 100
thread_list = []
lock = threading.Lock()
for i in range(100):
    t = threading.Thread(target=addNum)
    t.start()
    thread_list.append(t)

# Wait for all threads to finish executing
for t in thread_list:
    t.join()

print("final num:", num)
```

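A more idiomatic way to write the acquire()/release() pair is the with statement. It acquires the lock on entry and releases it on exit, even if the protected code raises an exception. This is a minimal sketch of the same decrement; `sub_num` is a hypothetical name.

```python
import threading

# Hypothetical name for illustration: sub_num.
num = 100
lock = threading.Lock()

def sub_num():
    global num
    # "with lock" calls lock.acquire() on entry and lock.release() on exit.
    with lock:
        num -= 1

threads = [threading.Thread(target=sub_num) for _ in range(100)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(num)  # 0
```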
GIL vs Lock

The GIL guarantees that only one thread executes Python bytecode at a time. However, it can switch threads between the individual steps of an operation such as num -= 1, so it does not protect your data. Lock is a user-level lock that your own code acquires and releases, and it is independent of the GIL.

RLock (recursive lock)

```python
import threading

def run1():
    global num
    print("grab the first part data")
    lock.acquire()
    num += 1
    lock.release()
    return num

def run2():
    global num2
    print("grab the second part data")
    lock.acquire()
    num2 += 1
    lock.release()
    return num2

def run3():
    lock.acquire()
    # run1() and run2() acquire the same lock again while run3() still holds it;
    # this works only because lock is an RLock
    res = run1()
    print("between run1 and run2".center(50, "*"))
    res2 = run2()
    lock.release()
    print(res, res2)

if __name__ == '__main__':
    num, num2 = 0, 0
    lock = threading.RLock()
    threads = []
    for i in range(10):
        t = threading.Thread(target=run3)
        t.start()
        threads.append(t)

    # Wait for all threads to finish
    for t in threads:
        t.join()
    print("all threads done".center(50, "*"))
    print(num, num2)
```

The main difference between these two locks is that an RLock can be acquired multiple times by the same thread, while a Lock cannot. With an RLock, acquire() and release() must be called in pairs: if acquire() is called n times, release() must also be called n times before the lock is actually released.
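The difference can be checked without risking a real deadlock. Calling acquire(blocking=False) tries to take the lock and returns False instead of waiting. In the sketch below, `plain` and `reentrant` are hypothetical names.

```python
import threading

# Hypothetical names for illustration: plain, reentrant.
plain = threading.Lock()
reentrant = threading.RLock()

plain.acquire()
# A blocking second acquire() on a Lock would deadlock, so probe it without blocking:
# it fails even though this same thread already holds the lock.
lock_again = plain.acquire(blocking=False)
plain.release()

reentrant.acquire()
rlock_again = reentrant.acquire(blocking=False)  # succeeds: same owner thread
# Acquired twice, so it must be released twice.
reentrant.release()
reentrant.release()

print(lock_again, rlock_again)  # False True
```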

Semaphore

A mutex lets only one thread modify data at a time, whereas a Semaphore lets up to a fixed number of threads in at once. For example, if a ticket office has 3 windows, at most 3 people can buy tickets at the same time. Everyone else has to wait until a person at one of the windows leaves.

```python
import threading, time

def run(n):
    semaphore.acquire()
    time.sleep(1)
    print("run the thread:%s" % n)
    semaphore.release()

if __name__ == '__main__':
    # Allow up to 5 threads to run at the same time
    semaphore = threading.BoundedSemaphore(5)
    threads = []
    for i in range(20):
        t = threading.Thread(target=run, args=(i,))
        t.start()
        threads.append(t)

    # Wait for all threads to finish
    for t in threads:
        t.join()
    print("all threads done".center(50, "*"))
```

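The ticket-office analogy can be made measurable. In this sketch, each thread records how many threads are inside the semaphore at once, and the peak never exceeds the limit. It also shows what BoundedSemaphore adds over a plain Semaphore: a release() without a matching acquire() raises ValueError. `buy_ticket`, `active` and `peak` are hypothetical names.

```python
import threading
import time

# Hypothetical names for illustration: buy_ticket, windows, active, peak.
MAX_WINDOWS = 3
windows = threading.BoundedSemaphore(MAX_WINDOWS)
counter_lock = threading.Lock()
active = 0   # customers currently at a window
peak = 0     # highest value that active ever reached

def buy_ticket(n):
    global active, peak
    with windows:                  # acquire() on entry, release() on exit
        with counter_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.05)           # simulate serving the customer
        with counter_lock:
            active -= 1

threads = [threading.Thread(target=buy_ticket, args=(i,)) for i in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(peak)  # never more than 3

extra_release_rejected = False
try:
    windows.release()              # one more release() than acquire()
except ValueError:
    extra_release_rejected = True
print(extra_release_rejected)      # True
```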
Timer

A Timer calls a function once, after a given delay. To call a function repeatedly at a fixed interval, start a new Timer from inside the function that the Timer calls. Timer is a subclass of Thread.

```python
import threading

def hello():
    print("hello, world!")

# Execute the hello function after a 5-second delay
t = threading.Timer(5, hello)
t.start()
```
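The re-arming pattern described above can be sketched as follows. The callback schedules the next Timer itself and signals an Event on the last tick. The sketch also shows cancel(), which stops a Timer that has not fired yet. `tick`, `ticks` and `done` are hypothetical names, and the short intervals only keep the example fast.

```python
import threading

# Hypothetical names for illustration: tick, ticks, done.
ticks = []
done = threading.Event()

def tick(remaining):
    ticks.append(remaining)
    if remaining > 1:
        # Re-arm: schedule the next call from inside the callback.
        threading.Timer(0.05, tick, args=(remaining - 1,)).start()
    else:
        done.set()

threading.Timer(0.05, tick, args=(3,)).start()
done.wait(timeout=5)
print(ticks)  # [3, 2, 1]

# A Timer that has not fired yet can be cancelled.
never = threading.Timer(10, print, args=("never printed",))
never.start()
never.cancel()
never.join()
```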

Event

Set signal

The set() method of an Event sets its internal signal flag to true. The is_set() method reports the state of the flag; the older alias isSet() is deprecated since Python 3.10. After set() has been called, is_set() returns True.

Clear the signal

The clear() method of an Event clears its internal signal flag, that is, sets it to false. After clear() has been called, is_set() returns False.

Waiting

The wait() method of an Event returns immediately if the internal flag is true. If the flag is false, wait() blocks until another thread sets it to true, or until the optional timeout expires, and then returns.
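The three operations fit in a short sketch. wait() returns the flag's value, so a timeout shows up as False. The helper `open_gate` and the variable names are hypothetical.

```python
import threading
import time

# Hypothetical names for illustration: open_gate, opener.
event = threading.Event()

initially = event.is_set()           # False: a new Event starts with the flag cleared
timed_out = event.wait(timeout=0.1)  # False: nobody set the flag within 0.1 s

def open_gate():
    time.sleep(0.1)
    event.set()                      # wakes every thread blocked in wait()

opener = threading.Thread(target=open_gate)
opener.start()
woke = event.wait(timeout=5)         # True: returns as soon as set() is called
opener.join()

event.clear()
after_clear = event.is_set()         # False again

print(initially, timed_out, woke, after_clear)  # False False True False
```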

```python
import threading, time, random

def light():
    # Start with the flag set, i.e. a green light
    if not event.is_set():
        event.set()
    count = 0
    while True:
        if count < 5:
            print("\033[42;1m--green light on--\033[0m".center(50, "*"))
        elif count < 8:
            print("\033[43;1m--yellow light on--\033[0m".center(50, "*"))
        elif count < 13:
            if event.is_set():
                event.clear()  # red light: clear the flag
            print("\033[41;1m--red light on--\033[0m".center(50, "*"))
        else:
            count = 0
            event.set()        # back to green: set the flag again
        time.sleep(1)
        count += 1

def car(n):
    while True:
        time.sleep(random.randrange(10))
        if event.is_set():
            print("car %s is running..." % n)
        else:
            print("car %s is waiting for the red light..." % n)

if __name__ == "__main__":
    event = threading.Event()
    light_thread = threading.Thread(target=light)
    light_thread.start()
    for i in range(3):
        t = threading.Thread(target=car, args=(i,))
        t.start()
```

Queue

In Python, a queue is the most common way to exchange data between threads. The queue module (named Queue in Python 2) provides thread-safe queue classes.

Create a queue object

```python
import queue

q = queue.Queue(maxsize=10)
```

The queue.Queue class is a synchronized (thread-safe) queue implementation. Its length can be unlimited or limited, which you control with the optional maxsize argument of the constructor. If maxsize is less than or equal to 0, the queue length is unlimited.

Put a value into the queue

```python
q.put("a")
```

Call the put() method of the queue object to insert an item at the tail of the queue. put(item, block=True, timeout=None) takes the item, which is required, plus two optional arguments. If the queue is full and block is True, put() blocks the calling thread until a free slot becomes available; with a timeout, it waits at most that many seconds. If the queue is full and block is False, put() raises queue.Full immediately.
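A small bounded queue shows both failure modes of put(). The maxsize of 2 is arbitrary and chosen only for illustration.

```python
import queue

q = queue.Queue(maxsize=2)
q.put("a")
q.put("b")
print(q.full())                  # True: both slots are in use

full_now = False
try:
    q.put("c", block=False)      # not allowed to wait, so it fails at once
except queue.Full:
    full_now = True

full_after_wait = False
try:
    q.put("c", timeout=0.1)      # waits up to 0.1 s for a free slot
except queue.Full:
    full_after_wait = True

print(full_now, full_after_wait)  # True True
```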

Remove a value from the queue

```python
q.get()
```

Call the get() method of the queue object to remove and return an item from the head of the queue. The optional block argument defaults to True. If the queue is empty and block is True, get() blocks the calling thread until an item becomes available. If the queue is empty and block is False, get() raises queue.Empty.
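A matching sketch for get() shows FIFO order, then a get() with a timeout on an empty queue.

```python
import queue

q = queue.Queue()
q.put("a")
q.put("b")
first = q.get()           # "a": a Queue hands items out in FIFO order
second = q.get()          # "b"

empty_raised = False
try:
    q.get(timeout=0.1)    # nothing left: wait at most 0.1 s, then raise
except queue.Empty:
    empty_raised = True

print(first, second, empty_raised)  # a b True
```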

The queue module provides three queue classes:

- queue.Queue(maxsize=0): first in, first out (FIFO)
- queue.LifoQueue(maxsize=0): last in, first out (LIFO), like a stack
- queue.PriorityQueue(maxsize=0): priority queue; the entry with the lowest value is retrieved first
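The retrieval order of the other two classes is easiest to see side by side. Priority queue entries are typically (priority_number, data) tuples, so the tuple with the smallest first element comes out first.

```python
import queue

lifo = queue.LifoQueue()
for item in (1, 2, 3):
    lifo.put(item)
lifo_order = [lifo.get() for _ in range(3)]
print(lifo_order)  # [3, 2, 1]: the last item put in comes out first

pq = queue.PriorityQueue()
pq.put((3, "low"))
pq.put((1, "high"))
pq.put((2, "medium"))
pq_order = [pq.get()[1] for _ in range(3)]
print(pq_order)    # ['high', 'medium', 'low']: lowest priority value first
```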

Common methods, for a queue created with q = queue.Queue():

- q.qsize(): returns the approximate size of the queue
- q.empty(): returns True if the queue is empty, otherwise False
- q.full(): returns True if the queue is full, otherwise False
- q.get([block[, timeout]]): removes and returns an item; timeout is how long to wait, in seconds
- q.get_nowait(): equivalent to q.get(False)
- q.join(): blocks until every item put into the queue has been retrieved and marked as processed with q.task_done()
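q.join() only returns once task_done() has been called for every item, so a consumer must call task_done() after handling each item it gets. Here is a minimal sketch of that pairing. `worker` and `processed` are hypothetical names, and doubling each number stands in for real work.

```python
import queue
import threading

# Hypothetical names for illustration: worker, processed.
q = queue.Queue()
processed = []

def worker():
    while True:
        item = q.get()
        processed.append(item * 2)  # stand-in for real work
        q.task_done()               # mark this item as finished

# daemon=True so this endless worker does not keep the program alive
threading.Thread(target=worker, daemon=True).start()

for i in range(5):
    q.put(i)

q.join()                  # returns once task_done() was called for all 5 items
print(sorted(processed))  # [0, 2, 4, 6, 8]
```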

Producer-Consumer Model

The producer-consumer pattern solves many concurrency problems in practice. It raises the program's overall data-processing throughput by balancing the work capacity of producer threads and consumer threads.

Why use the producer-consumer model

In threaded programs, the producer is the thread that produces data and the consumer is the thread that consumes it. If the producer is fast and the consumer is slow, the producer has to wait for the consumer to finish before it can produce more data. Likewise, if the consumer is faster than the producer, the consumer has to wait for the producer. The producer-consumer model was introduced to solve this problem.

What is the producer-consumer model

The producer-consumer model uses a container to remove the tight coupling between producers and consumers. Producers and consumers do not communicate with each other directly; they communicate through a blocking queue. After producing data, the producer does not wait for the consumer to process it but puts it straight into the blocking queue. The consumer does not ask the producer for data but takes it directly from the queue. The blocking queue acts as a buffer that balances the processing capacity of producers and consumers.

A minimal example of the producer-consumer model:

```python
import queue, threading, time

q = queue.Queue(maxsize=10)

def Producer():
    count = 1
    while True:
        q.put("bone%s" % count)  # blocks while the queue already holds 10 bones
        print("produced bones", count)
        count += 1

def Consumer(name):
    while True:
        # get() blocks until a bone is available
        print("[%s] got [%s] and ate it..." % (name, q.get()))
        time.sleep(1)

p = threading.Thread(target=Producer)
c1 = threading.Thread(target=Consumer, args=("王财",))
c2 = threading.Thread(target=Consumer, args=("来福",))

p.start()
c1.start()
c2.start()
```
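The example above runs forever. A common way to let it finish cleanly is to make production finite and have the producer put one "no more items" marker (a sentinel) per consumer into the queue. The sketch below uses None as the sentinel. That choice, along with the names `producer`, `consumer` and `eaten`, is an assumption made for illustration and not part of the original example.

```python
import queue
import threading

# Hypothetical names for illustration: SENTINEL, producer, consumer, eaten.
q = queue.Queue(maxsize=3)   # small buffer: the producer blocks when it is full
SENTINEL = None              # assumed "no more items" marker
eaten = []
eaten_lock = threading.Lock()

def producer(count, n_consumers):
    for i in range(1, count + 1):
        q.put("bone%s" % i)  # blocks while the buffer is full
    for _ in range(n_consumers):
        q.put(SENTINEL)      # one stop signal per consumer

def consumer(name):
    while True:
        item = q.get()       # blocks while the buffer is empty
        if item is SENTINEL:
            break
        with eaten_lock:
            eaten.append((name, item))

consumers = [threading.Thread(target=consumer, args=(n,)) for n in ("c1", "c2")]
for c in consumers:
    c.start()
p = threading.Thread(target=producer, args=(10, len(consumers)))
p.start()

p.join()
for c in consumers:
    c.join()

print(len(eaten))  # 10
```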
