Examples of input and output, redirection, and pipelines

The above is the detailed content of Examples of input and output, redirection, and pipelines. For more information, see other related articles on the PHP Chinese website.

How to install Classic Shell on Windows 11?

Apr 21, 2023 pm 09:13 PM

How to install Classic Shell on Windows 11?

Apr 21, 2023 pm 09:13 PM

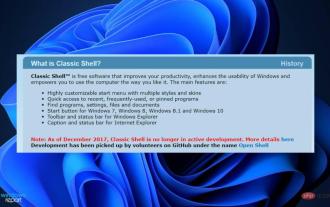

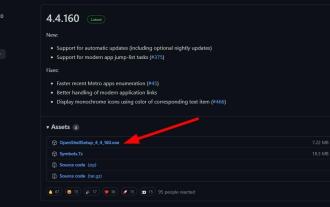

<p>Customizing your operating system is a great way to make your daily life more enjoyable. You can change the user interface, apply custom themes, add widgets, and more. So today we will show you how to install ClassicShell on Windows 11. </p><p>This program has been around for a long time and allows you to modify the operating system. Volunteers have now started running the organization, which disbanded in 2017. The new project is called OpenShell and is currently available on Github for those interested. </p>&a

PowerShell deployment fails with HRESULT 0x80073D02 issue fixed

May 10, 2023 am 11:02 AM

PowerShell deployment fails with HRESULT 0x80073D02 issue fixed

May 10, 2023 am 11:02 AM

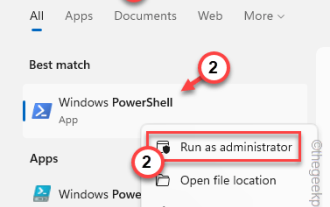

Do you see this error message "Add-AppxPackage: Deployment failed with HRESULT: 0x80073D02, The package cannot be installed because the resource it modifies is currently in use. Error 0x80073D02..." in PowerShell when you run the script? As the error message states, this does occur when the user attempts to re-register one or all WindowsShellExperienceHost applications while the previous process is running. We've got some simple solutions to fix this problem quickly. Fix 1 – Terminate the experience host process You must terminate before executing the powershell command
