Introduction and usage instructions of JavaCC

一、JavaCC

JavaCC是java的compiler compiler。JavaCC是LL解析器生成器,可处理的语法范围比较狭窄,但支持无限长的token超前扫描。

安装过程:

我是从github上down下来的zip压缩包,然后安装了下ant, 然后通过ant安装的javacc

1. 首先下载下来ant的源码,然后tar -zvxf apache-ant....tag.gz 解压缩,然后可以在解压出来的bin目录中看到ant的可执行文件

2. 从github下载javacc, 进入解压缩的目录执行xxxxxx/ant。 然后会在target 目录中看到javacc.jar 包

3. 这个时候可以通过如下方法将jar包做成一个可执行文件:

首先创建一个shell脚本:

#!/bin/shMYSELF=`which "$0" 2>/dev/null` [ $? -gt 0 -a -f "$0" ] && MYSELF="./$0"java=javaif test -n "$JAVA_HOME"; thenjava="$JAVA_HOME/bin/java"fiexec "$java" $java_args -cp $MYSELF "$@"exit 1

命名为stub.sh, 然后在jar包的所在目录执行: cat stub.sh javacc.jar > javacc && chmod +x javacc。 这样一个可执行文件就有了,不过在解析.jj文件时需要带一个javacc的参数,像这样: javacc javacc Adder.jj

二、语法描述文件

1、简介

JavaCC的语法描述文件是扩展名为.jj的文件,一般情况下,语法描述文件的内容采用如下形式

options {

JavaCC的选项

}

PARSER_BEGIN(解析器类名)package 包名;import 库名;public class 解析器类名 {

任意的Java代码

}

PARSER_END(解析器类名)

扫描器的描述

解析器的描述JavaCC和java一样将解析器的内容定义在单个类中,因此会在PARSER_BEGIN和PARSER_END之间描述这个类的相关内容。

2、Example

如下代码是一个解析正整数加法运算并进行计算的解析器的语法描述文件。

options {STATIC = false;

}

PARSER_BEGIN(Adder)import java.io.*class Adder {public static void main(String[] args) {for (String arg : args) {try {

System.out.println(evaluate(arg));

} catch (ParseException ex) {

System.err.println(ex.getMessage());

}

}

} public static long evaluate(String src) throws ParseException {

Reader reader = new StringReader(src);return new Adder(reader).expr();

}

}

PARSER_END(Adder)

SKIP: { <[" ", "\t", "\r", "\n"]> }

TOKEN: {

<INTEGER: (["0"-"9"])+>

}long expr():

{

Token x, y

}

{

x=<INTEGER> "+" y=<INTEGER> <EOF>

{return Long.parseLong(x.image) + Long.parseLong(y.image);

}

} options块中将STATIC选项设置为false, 将该选项设置为true的话JavaCC生成的所有成员及方法都将被定义为static,若将STATIC设置为true则所生成的解析器无法在多线程环境下使用,因此该选项总是被设置为false。(STATIC的默认值为true)

从PARSER_BEING(Adder)到PARSER_END(Adder)是解析器类的定义。解析器类中需要定义的成员和方法也写在这里。为了实现即使只有Adder类也能够运行,这里定义了main函数。

之后的SKIP和TOKEN部分定义了扫描器。SKIP表示要跳过空格、制表符(tab)和换行符。TOKEN表示扫描整数字符并生成token。

long expr...开始到最后的部分定义了狭义的解析器。这部分解析token序列并执行某些操作。

3、运行JavaCC

要用JavaCC来处理Adder.jj(图中是demo1.jj),需要使用如下javacc命令

运行如上命令会生成Adder.java和其他辅助类。

要编译生成的Adder.java,只需要javac命令即可:

这样就生成了Adder.class文件。Adder类是从命令行参数获取计算式并进行计算的,因此可以如下这样从命令行输入计算式并执行

三、启动JavaCC生成的解析器

现在解析一下main函数的代码。 main函数将所有命令行参数的字符串作为计算对象的算式,依次用evaluate方法进行计算。

evaluate方法中生成了Adder类的对象实例 。并让Adder对象来计算(解析)参数字符串src。

要运行JavaCC生成的解析器类,需要下面2个步骤:

生成解析器类的对象实例

用生成的对象调用和需要解析的语句同名的方法

第1点: JavaCC4.0生成的解析器中默认定义有如下四种类型的构造函数。

Parser(InputStream s)

Parser(InputStream s, String encoding)

Parser(Reader r)

Parser(x x x x TokenManager tm)

第1种的构造函数是通过传入InputStream对象来构造解析的。这个构造函数无法设定输入字符串的编码,因此无法处理中文字符等。

而地2种的构造函数除了InputStream对象外,还可以设置输入字符串的编码来生成解析器。但如果要解析中文字符串或注释的话,就必须使用第2种/3种构造函数。

第3种的构造函数用于解析Reader对象所读入的内容。

第4种是将扫描器作为参数传入。

解析器生成后,用这个实例调用和需要解析的语法同名的方法。这里调用Adder对象的expr方法,接回开始解析,解析正常结束后会返回语义值。

四、中文的处理

要使JavaCC能够处理中文首先需要将语法描述文件的options快的UNICODE_INPUT选项设置为true:

options {STATUS = false;DEBUG_PARSER = true;UNICODE_PARSER = true;JDK_VERSION = "1.5";

} 这样就会先将输入的字符转换成UNICODE后再进行处理。UNICODE_INPUT选项为false时只能处理ASCII范围的字符。

另外还需要使用第2/3种构造方法为输入的字符串设置适当的编码。

The above is the detailed content of Introduction and usage instructions of JavaCC. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

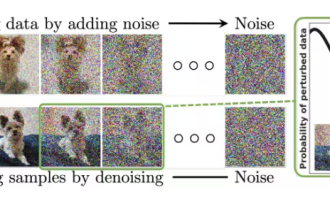

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

A Diffusion Model Tutorial Worth Your Time, from Purdue University

Apr 07, 2024 am 09:01 AM

Diffusion can not only imitate better, but also "create". The diffusion model (DiffusionModel) is an image generation model. Compared with the well-known algorithms such as GAN and VAE in the field of AI, the diffusion model takes a different approach. Its main idea is a process of first adding noise to the image and then gradually denoising it. How to denoise and restore the original image is the core part of the algorithm. The final algorithm is able to generate an image from a random noisy image. In recent years, the phenomenal growth of generative AI has enabled many exciting applications in text-to-image generation, video generation, and more. The basic principle behind these generative tools is the concept of diffusion, a special sampling mechanism that overcomes the limitations of previous methods.

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Generate PPT with one click! Kimi: Let the 'PPT migrant workers' become popular first

Aug 01, 2024 pm 03:28 PM

Kimi: In just one sentence, in just ten seconds, a PPT will be ready. PPT is so annoying! To hold a meeting, you need to have a PPT; to write a weekly report, you need to have a PPT; to make an investment, you need to show a PPT; even when you accuse someone of cheating, you have to send a PPT. College is more like studying a PPT major. You watch PPT in class and do PPT after class. Perhaps, when Dennis Austin invented PPT 37 years ago, he did not expect that one day PPT would become so widespread. Talking about our hard experience of making PPT brings tears to our eyes. "It took three months to make a PPT of more than 20 pages, and I revised it dozens of times. I felt like vomiting when I saw the PPT." "At my peak, I did five PPTs a day, and even my breathing was PPT." If you have an impromptu meeting, you should do it

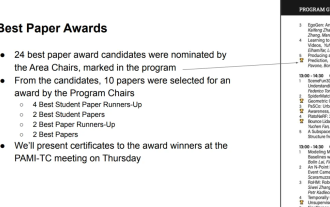

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

All CVPR 2024 awards announced! Nearly 10,000 people attended the conference offline, and a Chinese researcher from Google won the best paper award

Jun 20, 2024 pm 05:43 PM

In the early morning of June 20th, Beijing time, CVPR2024, the top international computer vision conference held in Seattle, officially announced the best paper and other awards. This year, a total of 10 papers won awards, including 2 best papers and 2 best student papers. In addition, there were 2 best paper nominations and 4 best student paper nominations. The top conference in the field of computer vision (CV) is CVPR, which attracts a large number of research institutions and universities every year. According to statistics, a total of 11,532 papers were submitted this year, and 2,719 were accepted, with an acceptance rate of 23.6%. According to Georgia Institute of Technology’s statistical analysis of CVPR2024 data, from the perspective of research topics, the largest number of papers is image and video synthesis and generation (Imageandvideosyn

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

Five programming software for getting started with learning C language

Feb 19, 2024 pm 04:51 PM

As a widely used programming language, C language is one of the basic languages that must be learned for those who want to engage in computer programming. However, for beginners, learning a new programming language can be difficult, especially due to the lack of relevant learning tools and teaching materials. In this article, I will introduce five programming software to help beginners get started with C language and help you get started quickly. The first programming software was Code::Blocks. Code::Blocks is a free, open source integrated development environment (IDE) for

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

PyCharm Community Edition Installation Guide: Quickly master all the steps

Jan 27, 2024 am 09:10 AM

Quick Start with PyCharm Community Edition: Detailed Installation Tutorial Full Analysis Introduction: PyCharm is a powerful Python integrated development environment (IDE) that provides a comprehensive set of tools to help developers write Python code more efficiently. This article will introduce in detail how to install PyCharm Community Edition and provide specific code examples to help beginners get started quickly. Step 1: Download and install PyCharm Community Edition To use PyCharm, you first need to download it from its official website

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

A must-read for technical beginners: Analysis of the difficulty levels of C language and Python

Mar 22, 2024 am 10:21 AM

Title: A must-read for technical beginners: Difficulty analysis of C language and Python, requiring specific code examples In today's digital age, programming technology has become an increasingly important ability. Whether you want to work in fields such as software development, data analysis, artificial intelligence, or just learn programming out of interest, choosing a suitable programming language is the first step. Among many programming languages, C language and Python are two widely used programming languages, each with its own characteristics. This article will analyze the difficulty levels of C language and Python

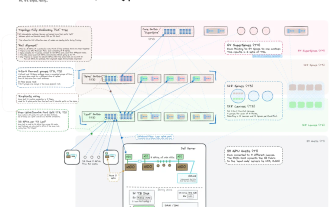

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

From bare metal to a large model with 70 billion parameters, here is a tutorial and ready-to-use scripts

Jul 24, 2024 pm 08:13 PM

We know that LLM is trained on large-scale computer clusters using massive data. This site has introduced many methods and technologies used to assist and improve the LLM training process. Today, what we want to share is an article that goes deep into the underlying technology and introduces how to turn a bunch of "bare metals" without even an operating system into a computer cluster for training LLM. This article comes from Imbue, an AI startup that strives to achieve general intelligence by understanding how machines think. Of course, turning a bunch of "bare metal" without an operating system into a computer cluster for training LLM is not an easy process, full of exploration and trial and error, but Imbue finally successfully trained an LLM with 70 billion parameters. and in the process accumulate

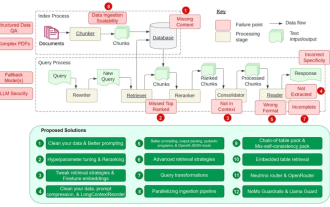

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Counting down the 12 pain points of RAG, NVIDIA senior architect teaches solutions

Jul 11, 2024 pm 01:53 PM

Retrieval-augmented generation (RAG) is a technique that uses retrieval to boost language models. Specifically, before a language model generates an answer, it retrieves relevant information from an extensive document database and then uses this information to guide the generation process. This technology can greatly improve the accuracy and relevance of content, effectively alleviate the problem of hallucinations, increase the speed of knowledge update, and enhance the traceability of content generation. RAG is undoubtedly one of the most exciting areas of artificial intelligence research. For more details about RAG, please refer to the column article on this site "What are the new developments in RAG, which specializes in making up for the shortcomings of large models?" This review explains it clearly." But RAG is not perfect, and users often encounter some "pain points" when using it. Recently, NVIDIA’s advanced generative AI solution