Python collection--data storage

Python Network Data Collection 3-Save data to CSV and MySql

Warm up first and download all the images on a page.

import requestsfrom bs4 import BeautifulSoup

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)' ' Chrome/52.0.2743.116 Safari/537.36 Edge/15.16193'}

start_url = 'https://www.pythonscraping.com'r = requests.get(start_url, headers=headers)

soup = BeautifulSoup(r.text, 'lxml')# 获取所有img标签img_tags = soup.find_all('img')for tag in img_tags:print(tag['src'])http://pythonscraping.com/img/lrg%20(1).jpg

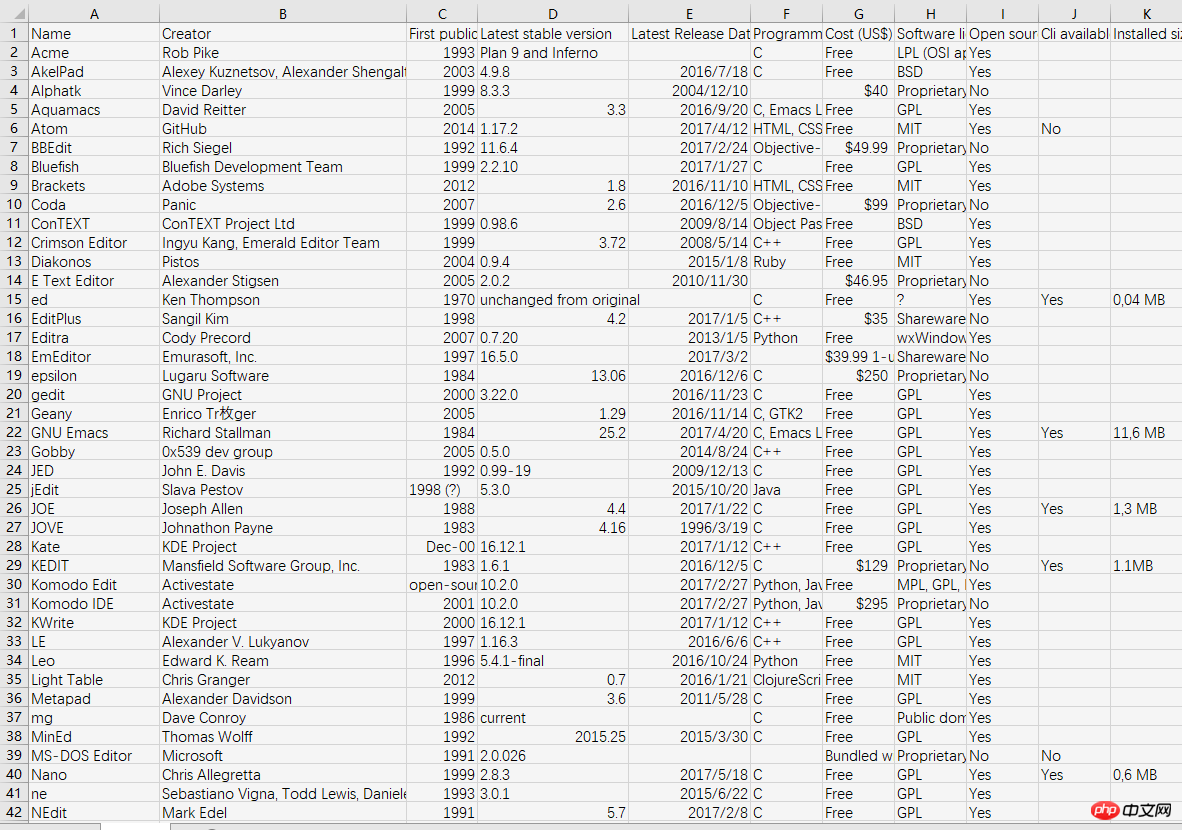

Storing web tables into CSV files

Take this URL as an example. There are several tables. We crawl the first table. Wiki-Comparison of various editors

import csvimport requestsfrom bs4 import BeautifulSoup

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)' ' Chrome/52.0.2743.116 Safari/537.36 Edge/15.16193'}

url = 'https://en.wikipedia.org/wiki/Comparison_of_text_editors'r = requests.get(url, headers=headers)

soup = BeautifulSoup(r.text, 'lxml')# 只要第一个表格rows = soup.find('table', class_='wikitable').find_all('tr')# csv写入时候每写一行会有一空行被写入,所以设置newline为空with open('editors.csv', 'w', newline='', encoding='utf-8') as f:

writer = csv.writer(f)for row in rows:

csv_row = []for cell in row.find_all(['th', 'td']):

csv_row.append(cell.text)

writer.writerow(csv_row)One thing to note is that you need to specify newline='' when opening the file, because when writing a csv file , every time a line is written, an empty line will be written.

Reading CSV files from the network

The above describes saving web page content into CSV files. What if the CSV file was obtained from the Internet? We don't want to download and then read from local. But for network requests, string is returned instead of file object. csv.reader()Needs to pass in a file object. Therefore, the obtained string needs to be converted into a file object. Python's built-in libraries, StringIO and BytesIO, can handle strings/bytes as files. For the csv module, the reader iterator is required to return a string type, so StringIO is used. If binary data is processed, BytesIO is used. Converted to a file object, it can be processed using the CSV module.

The most critical thing about the following code is data_file = StringIO(csv_data.text)Convert the string into a file-like object.

from io import StringIOimport csvimport requests csv_data = requests.get('http://pythonscraping.com/files/MontyPythonAlbums.csv') data_file = StringIO(csv_data.text) reader = csv.reader(data_file)for row in reader:print(row)

['Name', 'Year'] ["Monty Python's Flying Circus", '1970'] ['Another Monty Python Record', '1971'] ["Monty Python's Previous Record", '1972'] ['The Monty Python Matching Tie and Handkerchief', '1973'] ['Monty Python Live at Drury Lane', '1974'] ['An Album of the Soundtrack of the Trailer of the Film of Monty Python and the Holy Grail', '1975'] ['Monty Python Live at City Center', '1977'] ['The Monty Python Instant Record Collection', '1977'] ["Monty Python's Life of Brian", '1979'] ["Monty Python's Cotractual Obligation Album", '1980'] ["Monty Python's The Meaning of Life", '1983'] ['The Final Rip Off', '1987'] ['Monty Python Sings', '1989'] ['The Ultimate Monty Python Rip Off', '1994'] ['Monty Python Sings Again', '2014']

DictReader can obtain data like a dictionary, using the first row of the table (usually the header) as the key. You can access the data corresponding to a certain key in each row.

Each row of data is OrderDict, which can be accessed using Key. Looking at the first line of the printed information above, it shows that there are two keys: Name and Year. You can also use reader.fieldnames to view.

from io import StringIOimport csvimport requests csv_data = requests.get('http://pythonscraping.com/files/MontyPythonAlbums.csv') data_file = StringIO(csv_data.text) reader = csv.DictReader(data_file)# 查看Keyprint(reader.fieldnames)for row in reader:print(row['Year'], row['Name'], sep=': ')

['Name', 'Year'] 1970: Monty Python's Flying Circus 1971: Another Monty Python Record 1972: Monty Python's Previous Record 1973: The Monty Python Matching Tie and Handkerchief 1974: Monty Python Live at Drury Lane 1975: An Album of the Soundtrack of the Trailer of the Film of Monty Python and the Holy Grail 1977: Monty Python Live at City Center 1977: The Monty Python Instant Record Collection 1979: Monty Python's Life of Brian 1980: Monty Python's Cotractual Obligation Album 1983: Monty Python's The Meaning of Life 1987: The Final Rip Off 1989: Monty Python Sings 1994: The Ultimate Monty Python Rip Off 2014: Monty Python Sings Again

存储数据

大数据存储与数据交互能力, 在新式的程序开发中已经是重中之重了.

存储媒体文件的2种主要方式: 只获取url链接, 或直接将源文件下载下来

直接引用url链接的优点:

爬虫运行得更快,耗费的流量更少,因为只要链接,不需要下载文件。

可以节省很多存储空间,因为只需要存储 URL 链接就可以。

存储 URL 的代码更容易写,也不需要实现文件下载代码。

不下载文件能够降低目标主机服务器的负载。

直接引用url链接的缺点:

这些内嵌在网站或应用中的外站 URL 链接被称为盗链(hotlinking), 每个网站都会实施防盗链措施。

因为链接文件在别人的服务器上,所以应用就要跟着别人的节奏运行了。

盗链是很容易改变的。如果盗链图片放在博客上,要是被对方服务器发现,很可能被恶搞。如果 URL 链接存起来准备以后再用,可能用的时候链接已经失效了,或者是变成了完全无关的内容。

python3的urllib.request.urlretrieve可以根据文件的url下载文件:

from urllib.request import urlretrievefrom urllib.request import urlopenfrom bs4 import BeautifulSouphtml = urlopen("http://www.pythonscraping.com")bsObj = BeautifulSoup(html)imageLocation = bsObj.find("a", {"id": "logo"}).find("img")["src"]urlretrieve (imageLocation, "logo.jpg")

csv(comma-separated values, 逗号分隔值)是存储表格数据的常用文件格式

网络数据采集的一个常用功能就是获取html表格并写入csv

除了用户定义的变量名,mysql是不区分大小写的, 习惯上mysql关键字用大写表示

连接与游标(connection/cursor)是数据库编程的2种模式:

连接模式除了要连接数据库之外, 还要发送数据库信息, 处理回滚操作, 创建游标对象等

一个连接可以创建多个游标, 一个游标跟踪一种状态信息, 比如数据库的使用状态. 游标还会包含最后一次查询执行的结果. 通过调用游标函数, 如fetchall获取查询结果

游标与连接使用完毕之后,务必要关闭, 否则会导致连接泄漏, 会一直消耗数据库资源

使用try ... finally语句保证数据库连接与游标的关闭

让数据库更高效的几种方法:

给每张表都增加id字段. 通常数据库很难智能地选择主键

用智能索引, CREATE INDEX definition ON dictionary (id, definition(16));

选择合适的范式

发送Email, 通过爬虫或api获取信息, 设置条件自动发送Email! 那些订阅邮件, 肯定就是这么来的!

保存链接之间的联系

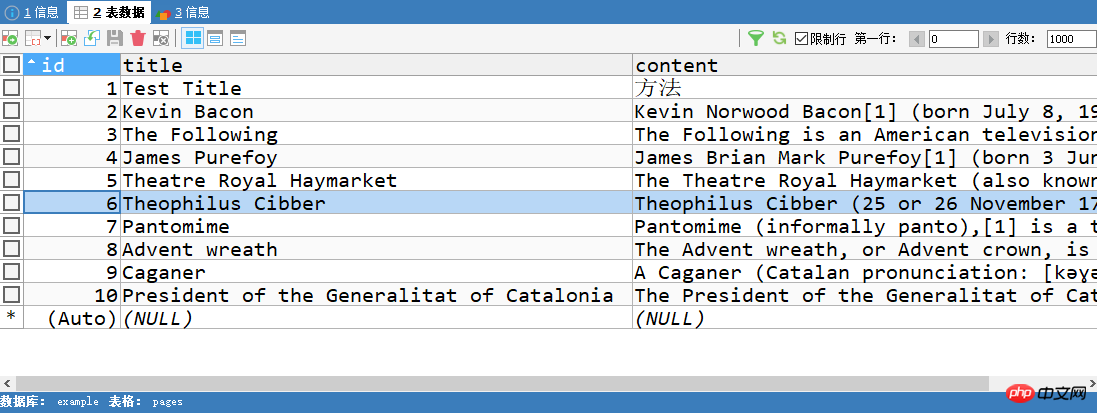

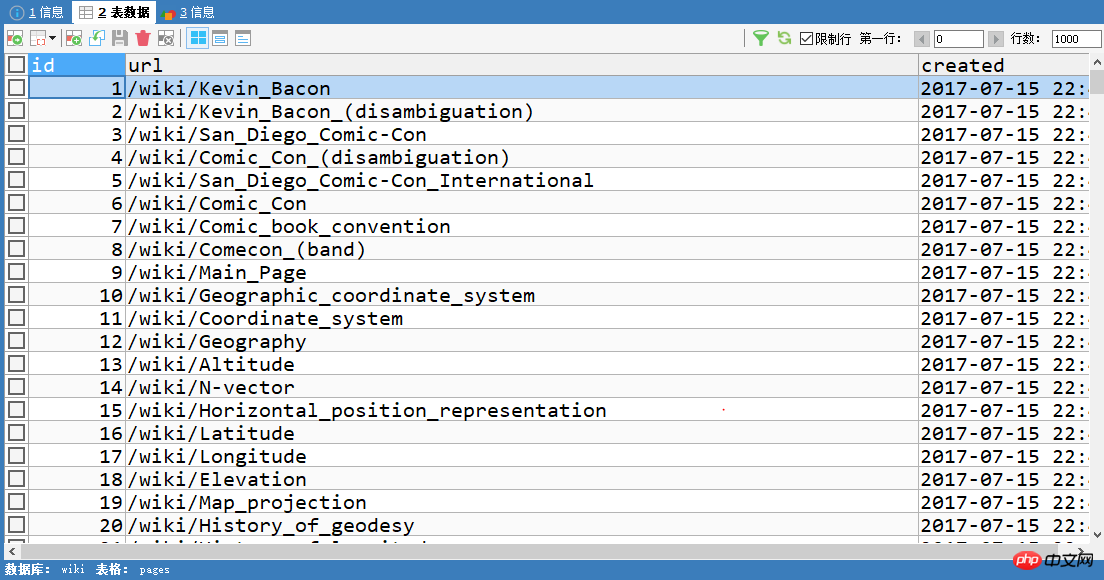

比如链接A,能够在这个页面里找到链接B。则可以表示为A -> B。我们就是要保存这种联系到数据库。先建表:

pages表只保存链接url。

CREATE TABLE `pages` ( `id` int(11) NOT NULL AUTO_INCREMENT, `url` varchar(255) DEFAULT NULL, `created` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP, PRIMARY KEY (`id`) )

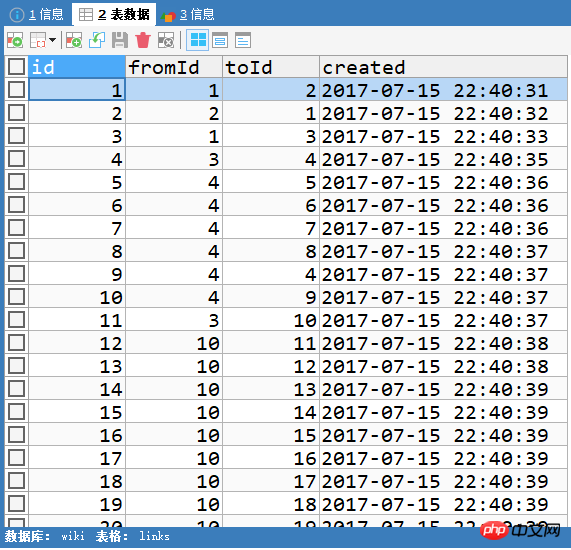

links表保存链接的fromId和toId,这两个id和pages里面的id是一致的。如1 -> 2就是pages里id为1的url页面里可以访问到id为2的url的意思。

CREATE TABLE `links` ( `id` int(11) NOT NULL AUTO_INCREMENT, `fromId` int(11) DEFAULT NULL, `toId` int(11) DEFAULT NULL, `created` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP, PRIMARY KEY (`id`)

上面的建表语句看起来有点臃肿,我是先用可视化工具建表后,再用show create table pages这样的语句查看的。

import reimport pymysqlimport requestsfrom bs4 import BeautifulSoup

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)' ' Chrome/52.0.2743.116 Safari/537.36 Edge/15.16193'}

conn = pymysql.connect(host='localhost', user='root', password='admin', db='wiki', charset='utf8')

cur = conn.cursor()def insert_page_if_not_exists(url):

cur.execute(f"SELECT * FROM pages WHERE url='{url}';")# 这条url没有插入的话if cur.rowcount == 0:# 那就插入cur.execute(f"INSERT INTO pages(url) VALUES('{url}');")

conn.commit()# 刚插入数据的idreturn cur.lastrowid# 否则已经存在这条数据,因为url一般是唯一的,所以获取一个就行,取脚标0是获得idelse:return cur.fetchone()[0]def insert_link(from_page, to_page):print(from_page, ' -> ', to_page)

cur.execute(f"SELECT * FROM links WHERE fromId={from_page} AND toId={to_page};")# 如果查询不到数据,则插入,插入需要两个pages的id,即两个urlif cur.rowcount == 0:

cur.execute(f"INSERT INTO links(fromId, toId) VALUES({from_page}, {to_page});")

conn.commit()# 链接去重pages = set()# 得到所有链接def get_links(page_url, recursion_level):global pagesif recursion_level == 0:return# 这是刚插入的链接page_id = insert_page_if_not_exists(page_url)

r = requests.get('https://en.wikipedia.org' + page_url, headers=headers)

soup = BeautifulSoup(r.text, 'lxml')

link_tags = soup.find_all('a', href=re.compile('^/wiki/[^:/]*$'))for link_tag in link_tags:# page_id是刚插入的url,参数里再次调用了insert_page...方法,获得了刚插入的url里能去往的url列表# 由此形成联系,比如刚插入的id为1,id为1的url里能去往的id有2、3、4...,则形成1 -> 2, 1 -> 3这样的联系insert_link(page_id, insert_page_if_not_exists(link_tag['href']))if link_tag['href'] not in pages:

new_page = link_tag['href']

pages.add(new_page)# 递归查找, 只能递归recursion_level次get_links(new_page, recursion_level - 1)if __name__ == '__main__':try:

get_links('/wiki/Kevin_Bacon', 5)except Exception as e:print(e)finally:

cur.close()

conn.close()1 -> 2 2 -> 1 1 -> 2 1 -> 3 3 -> 4 4 -> 5 4 -> 6 4 -> 7 4 -> 8 4 -> 4 4 -> 4 4 -> 9 4 -> 9 3 -> 10 10 -> 11 10 -> 12 10 -> 13 10 -> 14 10 -> 15 10 -> 16 10 -> 17 10 -> 18 10 -> 19 10 -> 20 10 -> 21 ...

看打印的信息,一目了然。看前两行打印,pages表里id为1的url可以访问id为2的url,同时pages表里id为2的url可以访问id为1的url...依次类推。

首先需要使用insert_page_if_not_exists(page_url)获得链接的id,然后使用insert_link(fromId, toId)形成联系。fromId是当前页面的url,toId则是从当前页面能够去往的url的id,这些能去往的url用bs4找到以列表形式返回。当前所处的url即page_id,所以需要在insert_link的第二个参数中,再次调用insert_page_if_not_exists(link)以获得列表中每个url的id。由此形成了联系。比如刚插入的id为1,id为1的url里能去往的id有2、3、4...,则形成1 -> 2, 1 -> 3这样的联系。

看下数据库。下面是pages表,每一个id都对应一个url。

然后下面是links表,fromId和toId就是pages中的id。当然和打印的数据是一样的咯,不过打印了看看就过去了,存下来的话哪天需要分析这些数据就大有用处了。

The above is the detailed content of Python collection--data storage. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

An application that converts XML directly to PDF cannot be found because they are two fundamentally different formats. XML is used to store data, while PDF is used to display documents. To complete the transformation, you can use programming languages and libraries such as Python and ReportLab to parse XML data and generate PDF documents.

Is the conversion speed fast when converting XML to PDF on mobile phone?

Apr 02, 2025 pm 10:09 PM

Is the conversion speed fast when converting XML to PDF on mobile phone?

Apr 02, 2025 pm 10:09 PM

The speed of mobile XML to PDF depends on the following factors: the complexity of XML structure. Mobile hardware configuration conversion method (library, algorithm) code quality optimization methods (select efficient libraries, optimize algorithms, cache data, and utilize multi-threading). Overall, there is no absolute answer and it needs to be optimized according to the specific situation.

How to convert XML files to PDF on your phone?

Apr 02, 2025 pm 10:12 PM

How to convert XML files to PDF on your phone?

Apr 02, 2025 pm 10:12 PM

It is impossible to complete XML to PDF conversion directly on your phone with a single application. It is necessary to use cloud services, which can be achieved through two steps: 1. Convert XML to PDF in the cloud, 2. Access or download the converted PDF file on the mobile phone.

How to control the size of XML converted to images?

Apr 02, 2025 pm 07:24 PM

How to control the size of XML converted to images?

Apr 02, 2025 pm 07:24 PM

To generate images through XML, you need to use graph libraries (such as Pillow and JFreeChart) as bridges to generate images based on metadata (size, color) in XML. The key to controlling the size of the image is to adjust the values of the <width> and <height> tags in XML. However, in practical applications, the complexity of XML structure, the fineness of graph drawing, the speed of image generation and memory consumption, and the selection of image formats all have an impact on the generated image size. Therefore, it is necessary to have a deep understanding of XML structure, proficient in the graphics library, and consider factors such as optimization algorithms and image format selection.

How to open xml format

Apr 02, 2025 pm 09:00 PM

How to open xml format

Apr 02, 2025 pm 09:00 PM

Use most text editors to open XML files; if you need a more intuitive tree display, you can use an XML editor, such as Oxygen XML Editor or XMLSpy; if you process XML data in a program, you need to use a programming language (such as Python) and XML libraries (such as xml.etree.ElementTree) to parse.

Recommended XML formatting tool

Apr 02, 2025 pm 09:03 PM

Recommended XML formatting tool

Apr 02, 2025 pm 09:03 PM

XML formatting tools can type code according to rules to improve readability and understanding. When selecting a tool, pay attention to customization capabilities, handling of special circumstances, performance and ease of use. Commonly used tool types include online tools, IDE plug-ins, and command-line tools.

Is there a mobile app that can convert XML into PDF?

Apr 02, 2025 pm 09:45 PM

Is there a mobile app that can convert XML into PDF?

Apr 02, 2025 pm 09:45 PM

There is no APP that can convert all XML files into PDFs because the XML structure is flexible and diverse. The core of XML to PDF is to convert the data structure into a page layout, which requires parsing XML and generating PDF. Common methods include parsing XML using Python libraries such as ElementTree and generating PDFs using ReportLab library. For complex XML, it may be necessary to use XSLT transformation structures. When optimizing performance, consider using multithreaded or multiprocesses and select the appropriate library.

What is the function of C language sum?

Apr 03, 2025 pm 02:21 PM

What is the function of C language sum?

Apr 03, 2025 pm 02:21 PM

There is no built-in sum function in C language, so it needs to be written by yourself. Sum can be achieved by traversing the array and accumulating elements: Loop version: Sum is calculated using for loop and array length. Pointer version: Use pointers to point to array elements, and efficient summing is achieved through self-increment pointers. Dynamically allocate array version: Dynamically allocate arrays and manage memory yourself, ensuring that allocated memory is freed to prevent memory leaks.