Recommended resources for Mahout video tutorials

Mahout provides scalable implementations of classic machine learning algorithms, aiming to help developers build intelligent applications more conveniently and quickly. It includes implementations of clustering, classification, recommendation (collaborative filtering), and frequent itemset mining. In addition, by using the Apache Hadoop library, Mahout can scale efficiently to the cloud.

The teacher’s teaching style:

The teacher's lectures are simple and easy to understand and clearly structured. Each topic is analyzed layer by layer, each point builds on the last, and the arguments are rigorous. The teacher holds students' attention through the logical force of the reasoning and uses that reasoning to steer the lesson. By listening to these lectures, students not only acquire knowledge but also receive training in thinking, and they are influenced by the teacher's rigorous academic attitude.

The more difficult part of this video covers the logistic regression classifier and the Bayes classifier (part 1):

1. Background

First, at the start of this article, let me pose a few questions. If you can already answer them, you don't need to read this article, unless your motivation is purely to find faults in it. That is welcome too: please email your findings, with the subject "Finding faults with Naive Bayes", to 297314262@qq.com, and I will read your letter carefully.

Conversely, if you still can't answer the following questions after reading this article, please email me and I will do my best to clear up your doubts.

(1) What characteristic of the naive Bayes classifier does the word "naive" specifically refer to?

(2) What is the relationship between the naive Bayes classifier and maximum likelihood estimation (MLE) and maximum a posteriori estimation (MAP)?

(3) What is the relationship among naive Bayes classification, logistic regression classification, generative models, and discriminative models?

(4) What is the relationship between supervised learning and Bayesian estimation?

2. Conventions

With that, let's begin. First, here are the notational conventions used throughout this article:

Capital letters, such as X, denote random variables; if X is multi-dimensional, the subscript i denotes its i-th dimension, that is, Xi.

Lowercase letters, such as xij, denote a value of a variable (the j-th value of Xi).

3. Bayesian estimation and supervised learning

First, let's answer the fourth question: how can Bayesian estimation be used to solve a supervised learning problem?

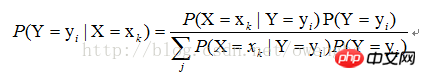

For supervised learning, our goal is to estimate a target function f: X -> Y, or equivalently the target distribution P(Y|X), where X is the sample's feature variable and Y is the sample's actual class label. Suppose a sample takes the value X = xk. By Bayes' rule,

P(Y=yi|X=xk) = P(X=xk|Y=yi) P(Y=yi) / Σj P(X=xk|Y=yj) P(Y=yj)

so to obtain P(Y=yi|X=xk) we only need to estimate, from the training samples, all the values P(X=xk|Y=yi) and all the values P(Y=yi). Classification then consists of choosing the yi that maximizes P(Y=yi|X=xk). This shows that Bayesian estimation can be used to solve supervised learning problems.
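To make this procedure concrete, here is a minimal Python sketch, assuming discrete features and estimating P(X=xk|Y=yi) directly by counting whole feature vectors (no naive assumption yet). The function names `fit_joint_bayes`, `posterior`, and `classify_joint` and the weather-style toy data are hypothetical, chosen for illustration only; this is not Mahout's API.

```python
from collections import Counter


def fit_joint_bayes(samples, labels):
    """Estimate P(Y=y) and P(X=x|Y=y) by relative frequency, treating each
    full feature vector x as one discrete value (no naive assumption)."""
    n = len(labels)
    class_counts = Counter(labels)
    joint_counts = Counter(zip(map(tuple, samples), labels))
    prior = {y: c / n for y, c in class_counts.items()}
    likelihood = {(x, y): c / class_counts[y] for (x, y), c in joint_counts.items()}
    return prior, likelihood


def posterior(prior, likelihood, x):
    """Return {y: P(Y=y|X=x)} via Bayes' rule, or None if x never occurred."""
    x = tuple(x)
    # Numerator of Bayes' rule: P(X=x|Y=y) * P(Y=y)
    unnormalized = {y: likelihood.get((x, y), 0.0) * p for y, p in prior.items()}
    # Denominator: P(X=x) = sum over y of P(X=x|Y=y) * P(Y=y)
    evidence = sum(unnormalized.values())
    if evidence == 0.0:
        return None  # an unseen feature vector has zero likelihood under every class
    return {y: s / evidence for y, s in unnormalized.items()}


def classify_joint(prior, likelihood, x):
    """Pick the class y that maximizes P(Y=y|X=x)."""
    post = posterior(prior, likelihood, x)
    return None if post is None else max(post, key=post.get)


# Hypothetical toy data: (outlook, temperature) -> play?
X = [("sunny", "hot"), ("sunny", "hot"), ("sunny", "hot"), ("rainy", "cold"), ("sunny", "cold")]
y = ["no", "no", "yes", "yes", "yes"]
prior, likelihood = fit_joint_bayes(X, y)
print(posterior(prior, likelihood, ("sunny", "hot")))  # approximately {'no': 0.667, 'yes': 0.333}
print(classify_joint(prior, likelihood, ("rainy", "hot")))  # None: never seen in training
```

The last line shows the weakness of estimating the full joint table: a feature vector that never appeared in training gets no estimate at all, and the number of possible vectors grows exponentially with the number of dimensions.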

4. The "naive" characteristic of the classifier

Under the naive assumption, the feature dimensions are treated as conditionally independent given the class, so P(X=xk|Y=yi) can be decomposed into the product P(X1=x1j1|Y=yi) P(X2=x2j2|Y=yi) ... P(Xn=xnjn|Y=yi). How, then, can maximum likelihood estimation be used to find these values?
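As an illustration, the following minimal Python sketch, assuming categorical features, estimates each P(Xi=v|Y=y) by maximum likelihood, which for counts is simply count(Xi=v, Y=y) / count(Y=y), and classifies with the product above. The names `fit_naive_bayes` and `classify_naive` and the toy data are hypothetical; this is a sketch of the technique, not Mahout's implementation.

```python
from collections import Counter, defaultdict


def fit_naive_bayes(samples, labels):
    """Maximum likelihood estimates for a categorical naive Bayes model.

    prior[y]      = count(Y=y) / N
    cond[y][i][v] = count(X_i=v, Y=y) / count(Y=y)
    """
    n = len(labels)
    class_counts = Counter(labels)
    prior = {y: c / n for y, c in class_counts.items()}
    # value_counts[y][i] counts the values seen in dimension i among class-y samples
    value_counts = defaultdict(lambda: defaultdict(Counter))
    for x, y in zip(samples, labels):
        for i, v in enumerate(x):
            value_counts[y][i][v] += 1
    cond = {
        y: {i: {v: c / class_counts[y] for v, c in counter.items()} for i, counter in dims.items()}
        for y, dims in value_counts.items()
    }
    return prior, cond


def classify_naive(prior, cond, x):
    """Return (best class, unnormalized scores P(Y=y) * prod_i P(X_i=x_i|Y=y))."""
    scores = {}
    for y, p in prior.items():
        score = p
        for i, v in enumerate(x):
            # Naive assumption: dimensions are conditionally independent given Y
            score *= cond[y].get(i, {}).get(v, 0.0)
        scores[y] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: (outlook, temperature) -> play?
X = [("sunny", "hot"), ("sunny", "hot"), ("sunny", "hot"), ("rainy", "cold"), ("sunny", "cold")]
y = ["no", "no", "yes", "yes", "yes"]
prior, cond = fit_naive_bayes(X, y)
print(classify_naive(prior, cond, ("rainy", "hot"))[0])  # yes
```

Here ("rainy", "hot") never occurs as a whole vector, yet it still receives a score because each dimension is estimated separately. With pure MLE, a single value never seen within a class still drives that class's score to zero; implementations commonly add Laplace (add-one) smoothing to avoid this.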

First, we need to understand what maximum likelihood estimation is. In probability theory textbooks, maximum likelihood estimation is usually explained in the setting of unsupervised learning problems. After reading this section, you should see that, under the naive assumption, using maximum likelihood estimation to solve a supervised learning problem amounts to solving an unsupervised maximum likelihood problem separately for each class.
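This claim can be checked with a standard derivation, added here for illustration. Fix a class y_k and a dimension X_i, and introduce (only for this derivation) N for the number of training samples, N_k for the number with Y = y_k, and N_{ijk} for the number of those class-y_k samples with X_i = x_{ij}. Under the naive assumption the log-likelihood separates, and the part that depends on the parameters θ_{ijk} = P(X_i = x_{ij} | Y = y_k) is maximized with a Lagrange multiplier for the sum-to-one constraint:

```latex
\begin{aligned}
&\theta_{ijk} = P(X_i = x_{ij} \mid Y = y_k), \qquad \sum_j \theta_{ijk} = 1 \\
&\ell(\theta) = \sum_j N_{ijk} \log \theta_{ijk} \\
&\mathcal{L} = \sum_j N_{ijk} \log \theta_{ijk} + \lambda \Bigl(1 - \sum_j \theta_{ijk}\Bigr) \\
&\frac{\partial \mathcal{L}}{\partial \theta_{ijk}} = \frac{N_{ijk}}{\theta_{ijk}} - \lambda = 0
  \;\Rightarrow\; \theta_{ijk} = \frac{N_{ijk}}{\lambda} \\
&\sum_j \theta_{ijk} = 1 \;\Rightarrow\; \lambda = \sum_j N_{ijk} = N_k
  \;\Rightarrow\; \hat{\theta}_{ijk} = \frac{N_{ijk}}{N_k}
\end{aligned}
```

Only the N_k samples of class y_k enter this estimate, so it is exactly the unsupervised maximum likelihood estimate of a categorical distribution fitted to that class's samples alone. By the same argument, the class prior is estimated as N_k / N.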

The above is the detailed content of Recommended resources for Mahout video tutorials. For more information, please follow other related articles on the PHP Chinese website!
