Detailed example of python3 using the requests module to crawl page content


This article walks through a practical example of crawling page content with Python 3 and the requests module. It may serve as a useful reference; if you are interested, read on.

1. Install pip

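My desktop runs Linux Mint, which does not include pip by default. Since pip is needed later to install the requests module, installing pip is the first step. (On Debian/Ubuntu-based systems such as Linux Mint, the python-pip package provides pip for Python 2; the Python 3 equivalent is python3-pip, invoked as pip3.)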

$ sudo apt install python-pip

Once the installation succeeds, check the pip version:

$ pip -V

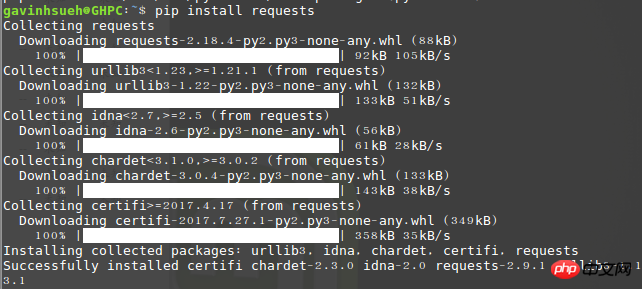

2. Install the requests module

I installed it with pip:

$ pip install requests

Run import requests in the Python interpreter. If no error is reported, the installation succeeded.

(Screenshot: verifying that the installation succeeded)

3. Install beautifulsoup4

Beautiful Soup is a Python library for extracting data from HTML and XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the parse tree, and it can save you hours or even days of work.

$ sudo apt-get install python3-bs4

Note: the command above installs the Python 3 package from the system repositories. If you are using Python 2, you can install it with the following command instead:

$ sudo pip install beautifulsoup4
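Once Beautiful Soup is installed, a quick offline check is to parse a small HTML string and navigate it. The sketch below is illustrative only: `SAMPLE_HTML` and the `summarize` helper are hypothetical, and it uses Python's built-in `"html.parser"` so no extra parser package is required.

```python
from bs4 import BeautifulSoup

# Small hypothetical document used only to demonstrate navigating and searching
SAMPLE_HTML = """
<html><head><title>Demo page</title></head>
<body>
  <a href="/a.html">A</a>
  <a href="/b.html">B</a>
</body></html>
"""


def summarize(html):
    """Return the page title and the href of every <a> tag."""
    # "html.parser" ships with Python, so nothing else needs to be installed
    soup = BeautifulSoup(html, "html.parser")
    # soup.title is the first <title> tag, or None if the page has none
    title = soup.title.get_text() if soup.title else None
    # find_all("a") returns every <a> tag; .get("href") reads one attribute
    links = [a.get("href") for a in soup.find_all("a")]
    return title, links


if __name__ == "__main__":
    print(summarize(SAMPLE_HTML))
```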

4. A brief analysis of the requests module

1) Send a request

First, import the requests module:

>>> import requests

Next, fetch the target web page. Here I use the following page as an example:

>>> r = requests.get('http://www.jb51.net/article/124421.htm')

This returns a Response object named r, from which we can get all the information we need. get corresponds to the HTTP GET method; by analogy, requests also provides put, delete, post, and head for the other HTTP methods.
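To see what each of these methods produces without contacting a server, the sketch below builds requests with `requests.Request(...).prepare()` and inspects the result. The URL is a placeholder, nothing is sent over the network, and the `build` helper is hypothetical.

```python
import requests

# Placeholder URL for illustration only: the requests below are prepared, never sent.
URL = "https://httpbin.org/anything"


def build(method, **kwargs):
    """Prepare (but do not send) a request with the given HTTP method."""
    # prepare() upper-cases the method and encodes any data into the body
    return requests.Request(method, URL, **kwargs).prepare()


if __name__ == "__main__":
    for method in ("GET", "POST", "PUT", "DELETE", "HEAD"):
        # Only POST and PUT carry a form-encoded body in this sketch
        body = {"key": "val"} if method in ("POST", "PUT") else None
        prepared = build(method, data=body)
        print(prepared.method, prepared.url, prepared.body)
```

In real code you would normally call the shortcut directly, for example requests.post(url, data={"key": "val"}), which prepares and sends the request in one step.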

2) Pass URL parameters

Sometimes we want to pass data in the URL's query string. If you build the URL by hand, the data goes after a question mark as key/value pairs, for example cnblogs.com/get?key=val. Requests lets you supply these parameters as a dictionary of strings through the params keyword argument.
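The sketch below compares a hand-built query string (via the standard library's `urlencode`) with the URL that requests generates from `params`. The base URL is the illustrative one from the text; the request is only prepared locally, never sent, and both helper functions are hypothetical. Non-ASCII values such as "python爬虫" are percent-encoded as UTF-8.

```python
from urllib.parse import urlencode

import requests

# Illustrative base URL taken from the text; the request is only prepared, not sent.
BASE = "http://cnblogs.com/get"


def hand_built_url(params):
    """Build the query string by hand with the standard library."""
    return BASE + "?" + urlencode(params)


def url_with_params(params):
    """Return the final URL requests would request for the given params dict."""
    return requests.Request("GET", BASE, params=params).prepare().url


if __name__ == "__main__":
    print(hand_built_url({"key": "val"}))
    print(url_with_params({"key": "val"}))
```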

For example, when searching Google for "python爬虫" ("python crawler"), instead of assembling parameters such as newwindow (open results in a new window), q and oq (the search keywords) into the URL by hand, you can use the following code:

>>> payload = {'newwindow': '1', 'q': 'python爬虫', 'oq': 'python爬虫'}

>>> r = requests.get("https://www.google.com/search", params=payload)

3) Response content

Get the page response content through r.text or r.content.

>>> import requests

>>> r = requests.get('https://github.com/timeline.json')

>>> r.text

Requests automatically decodes content from the server, and most Unicode character sets are decoded seamlessly. A brief note on the difference between r.text and r.content:

r.text returns Unicode text (a str);

r.content returns the raw response body as bytes, i.e. binary data.

So if you want text, use r.text; if you want images or other files, use r.content.
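The split between the two attributes can be sketched as two small helpers: one saves a binary resource from `r.content` (written in `"wb"` mode because it is bytes), the other returns decoded text from `r.text`. The function names and the 10-second timeout are illustrative assumptions, not part of the original article.

```python
import requests


def write_bytes(path, data):
    """Write raw bytes to disk; binary mode ("wb") because r.content is bytes."""
    with open(path, "wb") as f:
        return f.write(data)


def download(url, path, timeout=10):
    """Save a binary resource such as an image, using r.content."""
    r = requests.get(url, timeout=timeout)
    r.raise_for_status()  # stop on 4xx/5xx instead of saving an error page
    return write_bytes(path, r.content)


def page_text(url, timeout=10):
    """Return the page as decoded text, using r.text."""
    r = requests.get(url, timeout=timeout)
    r.raise_for_status()
    return r.text
```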

4) Get the web page encoding

>>> r = requests.get('http://www.cnblogs.com/')
>>> r.encoding
'utf-8'
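r.encoding is normally taken from the Content-Type response header. When a server sends a text/* type without a charset, requests falls back to ISO-8859-1, so r.text can come out garbled for pages that are really UTF-8. The offline sketch below shows this using `requests.utils.get_encoding_from_headers`; the helper names and the sample header values are assumptions for illustration.

```python
from requests.utils import get_encoding_from_headers


def header_encoding(content_type):
    """Return the encoding requests infers from a Content-Type header value."""
    return get_encoding_from_headers({"content-type": content_type})


def decode_body(body, encoding):
    """Decode raw bytes the way r.text does once r.encoding is known."""
    # errors="replace" substitutes U+FFFD for bytes that cannot be decoded
    return body.decode(encoding, errors="replace")
```

A common fix is to set r.encoding = 'utf-8' (or r.encoding = r.apparent_encoding, which guesses from the body) before reading r.text, or to decode r.content yourself.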

5) Get the response status code

We can check the response status code:

>>> r = requests.get('http://www.cnblogs.com/')
>>> r.status_code
200
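Rather than comparing against the literal 200, requests offers the named constant `requests.codes.ok` and `Response.raise_for_status()`, which raises `requests.HTTPError` for 4xx/5xx responses. The sketch below uses both; the helper names and the timeout value are hypothetical.

```python
import requests


def is_ok(status_code):
    """True when the code is exactly 200 (requests.codes.ok)."""
    return status_code == requests.codes.ok


def fetch_checked(url, timeout=10):
    """GET url, raising requests.HTTPError for 4xx/5xx responses."""
    r = requests.get(url, timeout=timeout)
    r.raise_for_status()  # does nothing for successful responses
    return r
```

If any non-error status is acceptable, r.ok (True for codes below 400) is a looser alternative to an exact comparison with 200.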

5. Case Demonstration

My company recently adopted an OA system. Here I use a page from its official documentation as an example and capture only the useful information on the page, such as the article title and content.

Demo environment

Operating system: linuxmint

Python version: python 3.5.2

Using modules: requests, beautifulsoup4 (plus lxml, which the code below uses as the parser)

The code is as follows:

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
__author__ = 'GavinHsueh'

import requests
import bs4

# Address of the target page to crawl
url = 'http://www.ranzhi.org/book/ranzhi/about-ranzhi-4.html'

# Fetch the page content; returns a Response object
response = requests.get(url)

# Check the response status code
status_code = response.status_code

# Parse the page with BeautifulSoup (the "lxml" parser must be installed)
# and locate the content of the elements whose id is "book"
content = bs4.BeautifulSoup(response.content.decode("utf-8"), "lxml")
element = content.find_all(id='book')

print(status_code)
print(element)

The program runs and returns the crawl result:

(Screenshot: the crawl succeeded)
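find_all(id='book') returns a list of every matching tag, so print(element) prints raw HTML. To keep only the title and body text mentioned above, one option is to take the single element with find() and pull out plain text with get_text(). The markup in `SAMPLE` is hypothetical (the real page's structure may differ), and the sketch uses the built-in "html.parser" instead of lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the real page; its actual structure may differ.
SAMPLE = """
<div id="book">
  <h1>Article title</h1>
  <p>First paragraph.</p>
  <p>Second paragraph.</p>
</div>
"""


def extract_book(html):
    """Return (title, paragraphs) from the element with id="book", or None."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no lxml needed
    book = soup.find(id="book")  # first match only, unlike find_all() which returns a list
    if book is None:
        return None
    heading = book.find("h1")
    title = heading.get_text(strip=True) if heading else ""
    paragraphs = [p.get_text(strip=True) for p in book.find_all("p")]
    return title, paragraphs
```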

About the problem of garbled crawling results

In fact, I initially used the Python 2 that ships with the system by default, but I struggled for a long time with garbled encoding in the crawled content, and none of the solutions I found through Google worked. After being driven "crazy" by Python 2, I had no choice but to switch to Python 3. If experienced readers know how to fix garbled content when crawling pages with Python 2, please share your experience so that newcomers like me can avoid detours.

Postscript

Python has many crawler-related modules; besides requests, there are urllib, pycurl, tornado, and others. By comparison, I personally find requests simple and easy to use. This article should help you quickly learn to crawl page content with Python's requests module. My knowledge is limited, so if there are any mistakes in the article, please let me know. And if you have any questions about crawling page content with Python, you are welcome to discuss them.

The above is the detailed content of Detailed example of python3 using the requests module to crawl page content. For more information, please follow other related articles on the PHP Chinese website!
