In this article, we will share with you a detailed explanation of Mysql transactions and data consistency processing. At work, we often encounter such problems. We need to update inventory. When we query the available inventory and prepare to modify it, other users may have modified the inventory data. As a result, the inventory data we query There will be problems with the data. Let’s look at the solutions below.

In MySQL's InnoDB, the default Tansaction isolation level is REPEATABLE READ (rereadable)

If you want to UPDATE the same form after SELECT, it is best to use SELECT ... UPDATE.

For example:

Assume that there is a quantity in the product form products to store the quantity of goods. Before the order is established, it must be determined whether the quantity of goods is sufficient (quantity>0), and then the quantity The quantity is updated to 1. The code is as follows:

SELECT quantity FROM products WHERE id=3; UPDATE products SET quantity = 1 WHERE id=3;

There may not be a problem in a small amount of cases, but a large amount of data access will "definitely" cause problems. If we need to deduct inventory when quantity>0, assume that the quantity read by the program in the first SELECT line is 2. It seems that the number is correct, but when MySQL is preparing to UPDATE, someone may have already deducted the inventory. It became 0, but the program didn't know it and continued the UPDATE that was wrong. Therefore, a transaction mechanism must be used to ensure that the data read and submitted are correct.

So we can test this in MySQL. The code is as follows:

SET AUTOCOMMIT=0; BEGIN WORK; SELECT quantity FROM products WHERE id=3 FOR UPDATE;

At this time, the data with id=3 in the products data is locked (Note 3), and other transactions must wait for this transaction It can only be executed after submission

SELECT * FROM products WHERE id=3 FOR UPDATE

This ensures that the numbers read by quantity in other transactions are correct.

UPDATE products SET quantity = '1' WHERE id=3 ; COMMIT WORK;

Commit is written to the database and products are unlocked.

Note 1: BEGIN/COMMIT is the starting and ending point of the transaction. You can use more than two MySQL Command windows to interactively observe the locking status.

Note 2: During the transaction, only SELECT ... FOR UPDATE or LOCK IN SHARE MODE with the same data will wait for the completion of other transactions before executing. Generally, SELECT ... is not affected. this impact.

Note 3: Since InnoDB defaults to Row-level Lock, please refer to this article for locking of data columns.

Note 4: Try not to use the LOCK TABLES command in InnoDB forms. If you have to use it, please read the official instructions for using LOCK TABLES in InnoDB first to avoid frequent deadlocks in the system.

If we need to query first and then update the data, it is best to use the statement like this:

UPDATE products SET quantity = '1' WHERE id=3 AND quantity > 0;

In this way, it can be processed without adding things.

I saw a very good article, so I transferred it here to learn.

Today Mr. Wang taught us another lesson. In fact, Mr. Wang had already mentioned the problem of MySQL handling high concurrency and preventing oversold inventory last year; but it is a pity that even if everyone listened at the time I understand, but in actual development, I still have no awareness of this aspect. Today I will sort out some of my understanding on this issue, and hope that there will be more courses like this in the future.

Let’s first describe the problem of oversold inventory: Generally, e-commerce websites will encounter activities such as group buying, flash sales, and special offers. A common feature of such activities is a surge in visits and online sales. Thousands or even tens of thousands of people rush to buy a product. However, as an active commodity, the inventory is definitely very limited. How to control the inventory to prevent overbought and unnecessary losses is a headache for many e-commerce website programmers. This is also the most basic problem.

From a technical perspective, many people will definitely think of transactions, but transactions are a necessary condition for controlling oversold inventory, but it is not a necessary and sufficient condition.

Example:

Total inventory: 4 products

Requester: a, 1 product b, 2 products c, 3 products

The program is as follows:

beginTranse(开启事务)

try{

$result = $dbca->query('select amount from s_store where postID = 12345');

if(result->amount > 0){

//quantity为请求减掉的库存数量

$dbca->query('update s_store set amount = amount - quantity where postID = 12345');

}

}catch($e Exception){

rollBack(回滚)

}

commit(提交事务)The above code is the code we usually write in the control library. Most people will write it like this. It seems that there is no big problem, but in fact it hides huge loopholes. Database access is actually access to disk files. The tables in the database are actually files saved on the disk, and even one file contains multiple tables. For example, due to high concurrency, currently three users a, b, and c have entered this transaction. At this time, a shared lock will be generated, so when selecting, the inventory quantities found by these three users are all 4. , and also note that the results found by mysql innodb are version controlled. Before other users update and commit (that is, before a new version is generated), the results found by the current user are still the previous version;

Then update, if these three users arrive at update at the same time, the update statement will serialize the concurrency at this time, that is, sort the three users who arrive at the same time, execute them one by one, and generate exclusive Lock, before the current update statement is committed, other users are waiting for execution. After the commit, a new version is generated; after execution, the inventory must be negative. But according to the above description, if we modify the code, the overbought phenomenon will not occur. The code is as follows:

beginTranse(开启事务)

try{

//quantity为请求减掉的库存数量

$dbca->query('update s_store set amount = amount - quantity where postID = 12345');

$result = $dbca->query('select amount from s_store where postID = 12345');

if(result->amount < 0){

throw new Exception('库存不足');

}

}catch($e Exception){

rollBack(回滚)

}

commit(提交事务)In addition, a more concise method:

beginTranse(开启事务)

try{

//quantity为请求减掉的库存数量

$dbca->query('update s_store set amount = amount - quantity where amount>=quantity and postID = 12345');

}catch($e Exception){

rollBack(回滚)

}

commit(提交事务)=====================================================================================

1、在秒杀的情况下,肯定不能如此高频率的去读写数据库,会严重造成性能问题的

必须使用缓存,将需要秒杀的商品放入缓存中,并使用锁来处理其并发情况。当接到用户秒杀提交订单的情况下,先将商品数量递减(加锁/解锁)后再进行其他方面的处理,处理失败在将数据递增1(加锁/解锁),否则表示交易成功。

当商品数量递减到0时,表示商品秒杀完毕,拒绝其他用户的请求。

2、这个肯定不能直接操作数据库的,会挂的。直接读库写库对数据库压力太大,要用缓存。

把你要卖出的商品比如10个商品放到缓存中;然后在memcache里设置一个计数器来记录请求数,这个请求书你可以以你要秒杀卖出的商品数为基数,比如你想卖出10个商品,只允许100个请求进来。那当计数器达到100的时候,后面进来的就显示秒杀结束,这样可以减轻你的服务器的压力。然后根据这100个请求,先付款的先得后付款的提示商品以秒杀完。

3、首先,多用户并发修改同一条记录时,肯定是后提交的用户将覆盖掉前者提交的结果了。

这个直接可以使用加锁机制去解决,乐观锁或者悲观锁。乐观锁:,就是在数据库设计一个版本号的字段,每次修改都使其+1,这样在提交时比对提交前的版本号就知道是不是并发提交了,但是有个缺点就是只能是应用中控制,如果有跨应用修改同一条数据乐观锁就没办法了,这个时候可以考虑悲观锁。

悲观锁:,就是直接在数据库层面将数据锁死,类似于oralce中使用select xxxxx from xxxx where xx=xx for update,这样其他线程将无法提交数据。

除了加锁的方式也可以使用接收锁定的方式,思路是在数据库中设计一个状态标识位,用户在对数据进行修改前,将状态标识位标识为正在编辑的状态,这样其他用户要编辑此条记录时系统将发现有其他用户正在编辑,则拒绝其编辑的请求,类似于你在操作系统中某文件正在执行,然后你要修改该文件时,系统会提醒你该文件不可编辑或删除。

4、不建议在数据库层面加锁,建议通过服务端的内存锁(锁主键)。当某个用户要修改某个id的数据时,把要修改的id存入memcache,若其他用户触发修改此id的数据时,读到memcache有这个id的值时,就阻止那个用户修改。

5、实际应用中,并不是让mysql去直面大并发读写,会借助“外力”,比如缓存、利用主从库实现读写分离、分表、使用队列写入等方法来降低并发读写。

首先,多用户并发修改同一条记录时,肯定是后提交的用户将覆盖掉前者提交的结果了。这个直接可以使用加锁机制去解决,乐观锁或者悲观锁。

悲观锁(Pessimistic Lock), 顾名思义,就是很悲观,每次去拿数据的时候都认为别人会修改,所以每次在拿数据的时候都会上锁,这样别人想拿这个数据就会block直到它拿到锁。传统的关系型数据库里边就用到了很多这种锁机制,比如行锁,表锁等,读锁,写锁等,都是在做操作之前先上锁。

乐观锁(Optimistic Lock), 顾名思义,就是很乐观,每次去拿数据的时候都认为别人不会修改,所以不会上锁,但是在更新的时候会判断一下在此期间别人有没有去更新这个数据,可以使用版本号等机制。乐观锁适用于多读的应用类型,这样可以提高吞吐量,像数据库如果提供类似于write_condition机制的其实都是提供的乐观锁。

两种锁各有优缺点,不能单纯的定义哪个好于哪个。乐观锁比较适合数据修改比较少,读取比较频繁的场景,即使出现了少量的冲突,这样也省去了大量的锁的开销,故而提高了系统的吞吐量。但是如果经常发生冲突(写数据比较多的情况下),上层应用不不断的retry,这样反而降低了性能,对于这种情况使用悲观锁就更合适。

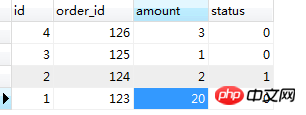

对这个表的 amount 进行修改,开两个命令行窗口

第一个窗口A;

SET AUTOCOMMIT=0; BEGIN WORK; SELECT * FROM order_tbl WHERE order_id='124' FOR UPDATE;

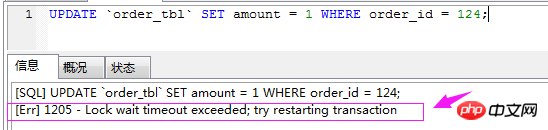

第二个窗口B:

# 更新订单ID 124 的库存数量 UPDATE `order_tbl` SET amount = 1 WHERE order_id = 124;

我们可以看到窗口A加了事物,锁住了这条数据,窗口B执行时会出现这样的问题:

第一个窗口完整的提交事物:

SET AUTOCOMMIT=0; BEGIN WORK; SELECT * FROM order_tbl WHERE order_id='124' FOR UPDATE; UPDATE `order_tbl` SET amount = 10 WHERE order_id = 124; COMMIT WORK;

相关推荐:

The above is the detailed content of Detailed explanation of Mysql transactions and data consistency processing. For more information, please follow other related articles on the PHP Chinese website!