A brief introduction to Python NLP

This article is an introductory tutorial on natural language processing (NLP) in Python using NLTK, the Natural Language Toolkit, one of the most commonly used Python libraries in the field of NLP.

What is NLP?

Simply put, natural language processing (NLP) is the development of applications or services that can understand human language.

Practical applications of NLP include speech recognition, speech translation, understanding complete sentences, matching synonyms of words, and generating grammatically correct sentences and paragraphs.

This is not all NLP can do.

NLP implementation

Search engines: such as Google and Yahoo. For example, if the Google search engine knows you are a techie, it displays tech-related results.

Social feeds: such as the Facebook News Feed. If the News Feed algorithm knows that you are interested in natural language processing, it shows related ads and posts.

Voice engines: such as Apple's Siri.

Spam filtering: such as Google's spam filter. Unlike ordinary spam filtering, it decides whether an email is spam by understanding the deeper meaning of its content.

NLP libraries

The following are some open source natural language processing (NLP) libraries:

Natural Language Toolkit (NLTK);

Apache OpenNLP;

Stanford NLP suite;

GATE NLP library.

Among them, the Natural Language Toolkit (NLTK) is one of the most popular NLP libraries. It is written in Python and has strong community support behind it.

NLTK is also easy to get started with; it is arguably the simplest of these libraries to learn.

In this NLP tutorial, we will use the Python NLTK library.

Install NLTK

If you are using Windows/Linux/Mac, you can use pip to install NLTK:

pip install nltk

Open a Python interpreter and import NLTK to check that it is installed correctly:

import nltk

If everything goes well, it means you have successfully installed it NLTK library. When you install NLTK for the first time, you need to install the NLTK extension package by running the following code:

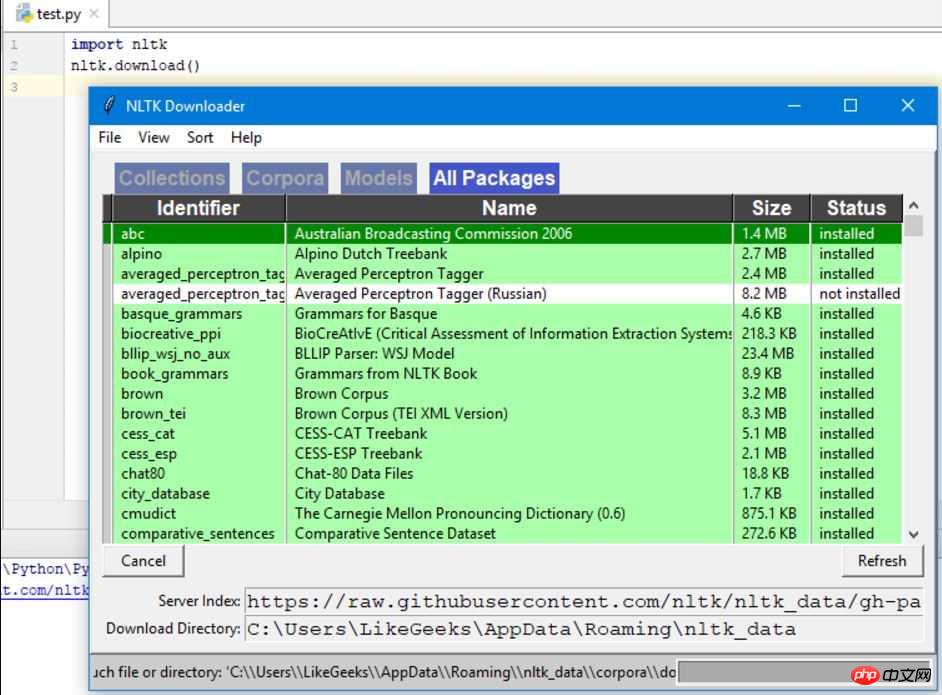

import nltk nltk.download()

This will pop up the NLTK download window to select which packages need to be installed:

You can install all packages without any problems as they are small in size.
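In a script, the same installation check can be done without triggering an ImportError. The sketch below uses the standard library's importlib.util.find_spec; the helper name is_installed is made up for this illustration and is not part of NLTK.

```python
import importlib.util


def is_installed(package_name):
    """Return True if a top-level package can be found on the import path."""
    return importlib.util.find_spec(package_name) is not None


if is_installed("nltk"):
    print("NLTK is available")
else:
    print("NLTK is missing; install it with: pip install nltk")
```

The check only confirms that the package can be located; it does not verify that any NLTK data packages have been downloaded.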

Tokenizing text with Python

First, we will crawl the content of a web page, and then analyze the text to understand the content of the page.

We will use the urllib module to crawl web pages:

    import urllib.request

    response = urllib.request.urlopen('http://php.net/')
    html = response.read()
    print(html)

As the printed result shows, the page contains many HTML tags that need to be cleaned out.

The BeautifulSoup module can clean the text like this:

    from bs4 import BeautifulSoup
    import urllib.request

    response = urllib.request.urlopen('http://php.net/')
    html = response.read()
    soup = BeautifulSoup(html, "html5lib")  # requires the html5lib module to be installed
    text = soup.get_text(strip=True)
    print(text)

Now we have clean text from the crawled web page.

Next, convert the text into tokens, like this:

    from bs4 import BeautifulSoup
    import urllib.request

    response = urllib.request.urlopen('http://php.net/')
    html = response.read()
    soup = BeautifulSoup(html, "html5lib")
    text = soup.get_text(strip=True)
    tokens = text.split()  # split on whitespace
    print(tokens)
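To see what the cleaning and splitting steps do without fetching a live page, here is a small sketch that uses only the standard library's html.parser on an inline HTML string. It is a simplified stand-in for BeautifulSoup, not a replacement; the class name TextExtractor, the function html_to_tokens, and the sample markup are all invented for this example.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside script/style elements.
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_tokens(html):
    """Strip the tags from an HTML string and split the text on whitespace."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts).split()


sample = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>PHP News</h1><p>PHP is a <b>popular</b> language.</p></body></html>"
)
print(html_to_tokens(sample))
```

Note that punctuation stays attached to the neighboring word (language.), which is one limitation of splitting on whitespace alone.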

Count word frequency

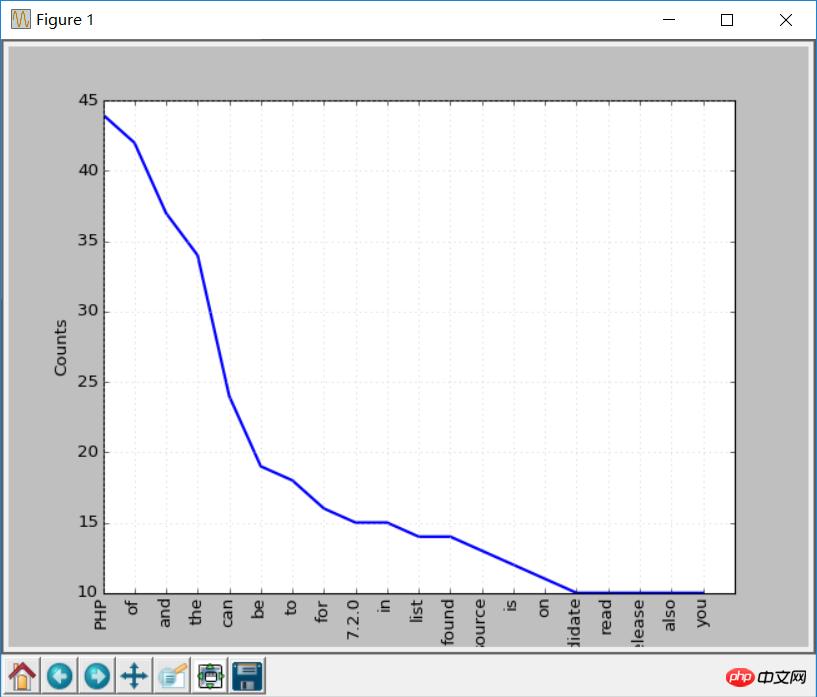

The text has been processed. Now use Python NLTK to count the frequency distribution of tokens.

This can be done with NLTK's FreqDist class:

    from bs4 import BeautifulSoup
    import urllib.request
    import nltk

    response = urllib.request.urlopen('http://php.net/')
    html = response.read()
    soup = BeautifulSoup(html, "html5lib")
    text = soup.get_text(strip=True)
    tokens = text.split()
    freq = nltk.FreqDist(tokens)
    for key, val in freq.items():
        print(str(key) + ':' + str(val))

    # Plot the 20 most frequent tokens; requires the matplotlib library.
    freq.plot(20, cumulative=False)

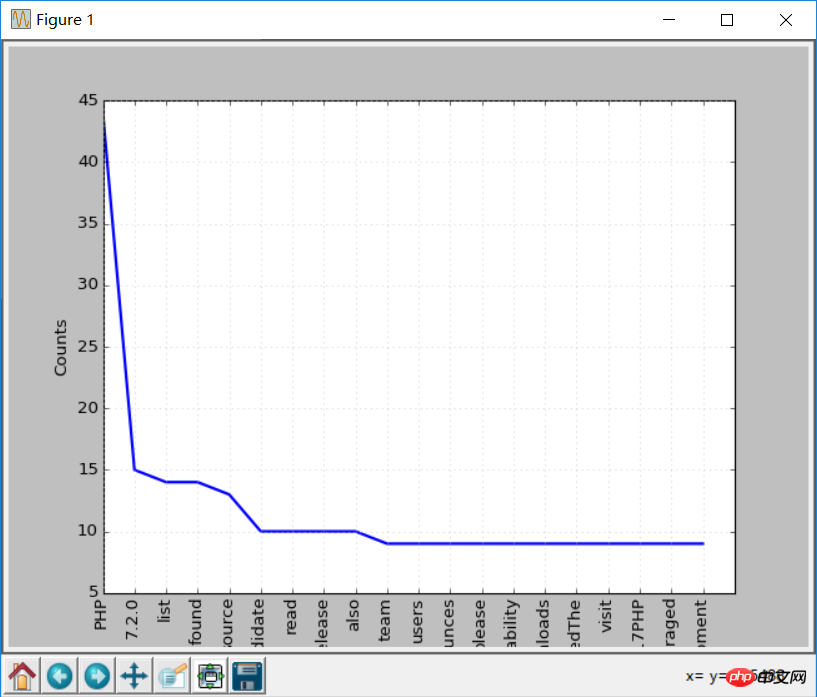
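The loop above prints tokens in no particular frequency order. Since FreqDist is built on Python's collections.Counter, the counting idea can be tried with the standard library alone. The token list below is made-up sample data, not output from the crawled page.

```python
from collections import Counter

# Made-up sample tokens standing in for the crawled page.
tokens = "PHP is a popular language and PHP powers many sites".split()

freq = Counter(tokens)

# most_common(n) returns the n highest counts, largest first.
for token, count in freq.most_common(3):
    print(token + ':' + str(count))
```

Because FreqDist inherits from Counter, the same most_common() call is available on the freq object in the NLTK example.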

Handling stop words

NLTK comes with stop word lists for many languages. To get the English stop words:

    from nltk.corpus import stopwords
    stopwords.words('english')

Use the list to filter the stop words out of the tokens:

    clean_tokens = list()
    sr = stopwords.words('english')
    for token in tokens:
        if token not in sr:
            clean_tokens.append(token)

The complete code is:

    from bs4 import BeautifulSoup
    import urllib.request
    import nltk
    from nltk.corpus import stopwords

    response = urllib.request.urlopen('http://php.net/')
    html = response.read()
    soup = BeautifulSoup(html, "html5lib")
    text = soup.get_text(strip=True)
    tokens = text.split()

    # Keep only the tokens that are not English stop words.
    clean_tokens = list()
    sr = stopwords.words('english')
    for token in tokens:
        if token not in sr:
            clean_tokens.append(token)

    freq = nltk.FreqDist(clean_tokens)
    for key, val in freq.items():
        print(str(key) + ':' + str(val))
    freq.plot(20, cumulative=False)
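One detail to watch: the entries in NLTK's English stop word list are lowercase, so a capitalized token such as The passes through the filter above. A minimal sketch of a case-insensitive filter follows. It uses a small hand-written stop word list as a stand-in so that it runs without the NLTK data; in practice the list would come from stopwords.words('english'). The helper name remove_stop_words is hypothetical.

```python
def remove_stop_words(tokens, stop_words):
    """Drop tokens whose lowercase form appears in stop_words."""
    stop_set = set(stop_words)  # set membership tests are fast
    return [token for token in tokens if token.lower() not in stop_set]


# Stand-in list for illustration; use stopwords.words('english') in practice.
sample_stop_words = ["the", "is", "a", "of", "and"]
tokens = "The history of PHP is a long story".split()
print(remove_stop_words(tokens, sample_stop_words))
```

Converting the list to a set also avoids scanning the whole stop word list for every token, which matters on larger pages.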

Tokenizing text with NLTK

Previously we used the split() method to split the text into tokens. Now we will use NLTK to tokenize the text. Text cannot be processed without tokenization, so tokenizing it is an essential step. Tokenization means splitting a larger piece of text into smaller parts. You can tokenize paragraphs into sentences and sentences into individual words; NLTK provides a sentence tokenizer and a word tokenizer for these two tasks.

Suppose we have the following text:

Hello Adam, how are you? I hope everything is going well. Today is a good day, see you dude.

Use the sentence tokenizer to split the text into sentences:

    from nltk.tokenize import sent_tokenize

    mytext = "Hello Adam, how are you? I hope everything is going well. Today is a good day, see you dude."
    print(sent_tokenize(mytext))

The output is:

['Hello Adam, how are you?', 'I hope everything is going well.', 'Today is a good day, see you dude.']

You might think this is too simple to need NLTK's tokenizer, and that a regular expression could split the sentences, since every sentence ends with punctuation followed by a space.

Now look at the following text:

Hello Mr. Adam, how are you? I hope everything is going well. Today is a good day, see you dude.

If you split on punctuation here, Hello Mr. would be treated as a sentence. With NLTK:

    from nltk.tokenize import sent_tokenize

    mytext = "Hello Mr. Adam, how are you? I hope everything is going well. Today is a good day, see you dude."
    print(sent_tokenize(mytext))

The output is:

['Hello Mr. Adam, how are you?', 'I hope everything is going well.', 'Today is a good day, see you dude.']

This is the correct split.
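For comparison, here is what a naive punctuation-based splitter does with the same text. This is a sketch using only the standard library's re module; the function name naive_sentence_split and the pattern are chosen for this illustration.

```python
import re

mytext = "Hello Mr. Adam, how are you? I hope everything is going well. Today is a good day, see you dude."


def naive_sentence_split(text):
    """Split after '.', '?' or '!' whenever whitespace follows."""
    return re.split(r"(?<=[.?!])\s+", text)


for sentence in naive_sentence_split(mytext):
    print(sentence)
```

The first piece printed is Hello Mr., because the pattern cannot tell the period of an abbreviation from the end of a sentence.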

Next, try the word tokenizer:

    from nltk.tokenize import word_tokenize

    mytext = "Hello Mr. Adam, how are you? I hope everything is going well. Today is a good day, see you dude."
    print(word_tokenize(mytext))

The output is:

['Hello', 'Mr.', 'Adam', ',', 'how', 'are', 'you', '?', 'I', 'hope', 'everything', 'is', 'going', 'well', '.', 'Today', 'is', 'a', 'good', 'day', ',', 'see', 'you', 'dude', '.']

The word Mr. is again kept in one piece. NLTK uses the PunktSentenceTokenizer from the punkt module, which is part of nltk.tokenize. This tokenizer has been trained and works with many languages.
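A simple regular expression that separates words from punctuation shows why keeping Mr. together takes more than pattern matching. The sketch below uses only the standard library; naive_word_split is a name invented for this example.

```python
import re

mytext = "Hello Mr. Adam, how are you?"


def naive_word_split(text):
    """Return runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


print(naive_word_split(mytext))
```

Here Mr and the period come out as two separate tokens, whereas word_tokenize keeps the abbreviation intact.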

Tokenizing non-English text

You can specify the language when tokenizing:

    from nltk.tokenize import sent_tokenize

    mytext = "Bonjour M. Adam, comment allez-vous? J'espère que tout va bien. Aujourd'hui est un bon jour."
    print(sent_tokenize(mytext, "french"))

The output is:

['Bonjour M. Adam, comment allez-vous?', "J'espère que tout va bien.", "Aujourd'hui est un bon jour."]

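Working with synonyms

One of the packages available through the nltk.download() installer is WordNet.

WordNet is a database built for natural language processing. It includes groups of synonyms and brief definitions.

You can get the definition and examples for a given word like this:

    from nltk.corpus import wordnet

    syn = wordnet.synsets("pain")
    print(syn[0].definition())
    print(syn[0].examples())

The output is: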

a symptom of some physical hurt or disorder

['the patient developed severe pain and distension']

WordNet contains many definitions:

    from nltk.corpus import wordnet

    syn = wordnet.synsets("NLP")
    print(syn[0].definition())
    syn = wordnet.synsets("Python")
    print(syn[0].definition())

The result is:

the branch of information science that deals with natural language information

large Old World boas

You can use WordNet to get synonyms like this:

    from nltk.corpus import wordnet

    synonyms = []
    for syn in wordnet.synsets('Computer'):
        for lemma in syn.lemmas():
            synonyms.append(lemma.name())
    print(synonyms)

Output:

['computer', 'computing_machine', 'computing_device', 'data_processor', 'electronic_computer', 'information_processing_system', 'calculator', 'reckoner', 'figurer', 'estimator', 'computer']
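The list above contains computer twice, and multi-word entries use underscores. A small post-processing sketch, using only built-in Python, can tidy it up. The input is the list printed above; the helper name tidy_lemma_names is hypothetical.

```python
synonyms = ['computer', 'computing_machine', 'computing_device', 'data_processor',
            'electronic_computer', 'information_processing_system', 'calculator',
            'reckoner', 'figurer', 'estimator', 'computer']


def tidy_lemma_names(names):
    """Remove duplicates (keeping first-seen order) and replace underscores with spaces."""
    unique = dict.fromkeys(names)  # dicts preserve insertion order
    return [name.replace("_", " ") for name in unique]


print(tidy_lemma_names(synonyms))
```

Using dict.fromkeys rather than set keeps the synonyms in the order WordNet returned them.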

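Working with antonyms

You can get antonyms in the same way:

    from nltk.corpus import wordnet

    antonyms = []
    for syn in wordnet.synsets("small"):
        for l in syn.lemmas():
            # Only some lemmas have antonyms; take the first one when present.
            if l.antonyms():
                antonyms.append(l.antonyms()[0].name())
    print(antonyms)

Output: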

['large', 'big', 'big']

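Stemming

In linguistic morphology and information retrieval, stemming is the process of removing affixes to obtain the root of a word. For example, the stem of working is work.

Search engines use this technique when indexing pages, so that the different forms of the same word that people write are all reduced to the same stem.

There are many stemming algorithms; the most common is the Porter stemming algorithm. NLTK has a class called PorterStemmer that implements it:

    from nltk.stem import PorterStemmer

    stemmer = PorterStemmer()
    print(stemmer.stem('working'))
    print(stemmer.stem('worked'))

The output is: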

work

work

There are other stemming algorithms as well, such as the Lancaster stemming algorithm.
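To make the idea of affix removal concrete, here is a deliberately simplified suffix stripper. It is a toy written for this illustration and is not the Porter or Lancaster algorithm, both of which apply many more rules; the function name toy_stem, the suffix list, and the minimum stem length are all assumptions.

```python
def toy_stem(word, suffixes=("ing", "ed", "es", "s"), min_stem=3):
    """Strip the first matching suffix if at least min_stem letters remain."""
    word = word.lower()
    for suffix in suffixes:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


for word in ["working", "worked", "works", "sing"]:
    print(word, "->", toy_stem(word))
```

The minimum length check keeps short words such as sing from being cut down to a single letter, which hints at why real stemmers need more elaborate rules.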

Stemming non-English words

In the version shown here, SnowballStemmer supports 13 languages besides English.

Supported languages:

    from nltk.stem import SnowballStemmer

    print(SnowballStemmer.languages)

Output:

'danish', 'dutch', 'english', 'finnish', 'french', 'german', 'hungarian', 'italian', 'norwegian', 'porter', 'portuguese', 'romanian', 'russian', 'spanish', 'swedish'

You can use the stem function of the SnowballStemmer class to stem non-English words like this:

    from nltk.stem import SnowballStemmer

    french_stemmer = SnowballStemmer('french')
    print(french_stemmer.stem("French word"))  # replace "French word" with an actual French word

Lemmatizing words

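Lemmatization is similar to stemming, but the result of lemmatization is a real word. A stemmer, by contrast, can produce something that is not a word when applied to certain inputs:

    from nltk.stem import PorterStemmer

    stemmer = PorterStemmer()
    print(stemmer.stem('increases'))

Result:

increas

Now, lemmatizing the same word with NLTK's WordNet lemmatizer gives the correct result:

    from nltk.stem import WordNetLemmatizer

    lemmatizer = WordNetLemmatizer()
    print(lemmatizer.lemmatize('increases'))

Result: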

increase

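The result may be a synonym or a different word with the same meaning.

Sometimes, when you lemmatize a word such as playing, you get the same word back.

This is because the default part of speech is noun. To get the verb, specify it like this:

    from nltk.stem import WordNetLemmatizer

    lemmatizer = WordNetLemmatizer()
    print(lemmatizer.lemmatize('playing', pos="v"))

Result: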

play

In fact, this is also a good form of text compression: the resulting text is only about 50% to 60% of the original.

The part of speech can be verb (v), noun (n), adjective (a), or adverb (r):

    from nltk.stem import WordNetLemmatizer

    lemmatizer = WordNetLemmatizer()
    print(lemmatizer.lemmatize('playing', pos="v"))
    print(lemmatizer.lemmatize('playing', pos="n"))
    print(lemmatizer.lemmatize('playing', pos="a"))
    print(lemmatizer.lemmatize('playing', pos="r"))

Output:

play

playing

playing

playing
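In practice the part of speech usually comes from a tagger rather than being typed by hand. NLTK's pos_tag function returns Penn Treebank tags such as VBG or JJ, while lemmatize() expects the single letters listed above. A common way to bridge the two is a small mapping helper. The function name penn_to_wordnet below is an assumption made for this sketch, not an NLTK API.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet POS letter, defaulting to noun."""
    if tag.startswith("J"):
        return "a"  # adjective
    if tag.startswith("V"):
        return "v"  # verb
    if tag.startswith("RB"):
        return "r"  # adverb
    return "n"      # noun, which is also the lemmatizer's default


for tag in ["VBG", "NN", "JJ", "RB"]:
    print(tag, "->", penn_to_wordnet(tag))
```

The returned letter can be passed as the pos argument of lemmatize(), so that a word tagged as a verb is lemmatized as a verb.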

The difference between stemming and lemmatization

Consider the following example:

    from nltk.stem import WordNetLemmatizer
    from nltk.stem import PorterStemmer

    stemmer = PorterStemmer()
    lemmatizer = WordNetLemmatizer()

    print(stemmer.stem('stones'))
    print(stemmer.stem('speaking'))
    print(stemmer.stem('bedroom'))
    print(stemmer.stem('jokes'))
    print(stemmer.stem('lisa'))
    print(stemmer.stem('purple'))
    print('----------------------')
    print(lemmatizer.lemmatize('stones'))
    print(lemmatizer.lemmatize('speaking'))
    print(lemmatizer.lemmatize('bedroom'))
    print(lemmatizer.lemmatize('jokes'))
    print(lemmatizer.lemmatize('lisa'))
    print(lemmatizer.lemmatize('purple'))

Output:

stone

speak

bedroom

joke

lisa

purpl

---------------------

stone

speaking

bedroom

joke

lisa

purple

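Stemming does not take context into account, which is why it is faster but less accurate than lemmatization.

In my opinion, lemmatization is better than stemming. Lemmatization returns a real word; even if it is not the same word, it may be a synonym, and at least it is a word that actually exists.

If you only care about speed and not about accuracy, you can choose stemming.

All the steps discussed in this NLP tutorial are only text preprocessing. Future articles will use Python NLTK for text analysis.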
