Developing a Kinect Motion-Sensing Game with HTML5: A Worked Example

This article walks through an example of developing a Kinect motion-sensing game with HTML5. I hope it helps anyone who needs it.


1. Introduction

What kind of game are we building?

At the TGC2016 exhibition in Chengdu not long ago, we built a motion-sensing game for "Naruto Mobile" that recreates the mobile game's "Nine-Tails Attack" chapter: the player becomes the Fourth Hokage and duels the Nine-Tails. The duel drew a large number of players. On the surface it looks like any other motion-sensing experience, but it actually runs entirely in the Chrome browser. In other words, front-end skills are all you need to build a web-based motion-sensing game on top of Kinect.

2. Implementation Principle

How does it work?

Developing a Kinect-based motion-sensing game with HTML5 is simple in principle: Kinect captures data about the player and the environment, such as the human skeleton, and a bridge of some kind makes that data accessible to the browser.

1. Collect data

Kinect has three lenses. The middle one works like an ordinary camera and captures color images; the left and right ones obtain depth data using infrared light. With the SDK provided by Microsoft, we can read the following types of data:

Color data: the color image;

Depth data: distance (depth) information;

Human skeleton data: computed from the data above.

2. Make the Kinect data accessible to the browser

The frameworks I have tried and looked into basically all use a socket, so that the browser process can communicate with a server process that transmits the data:

Kinect-HTML5 builds the server in C# and provides color data, depth data, and skeleton data;

ZigFu supports HTML5, Unity3D, and Flash development. Its API is fairly complete, but it appears to be a paid product;

DepthJS provides data access in the form of a browser plug-in;

Node-Kinect2 builds the server with Node.js and provides fairly complete data and many examples.

I finally chose Node-Kinect2. Although it has no documentation, it comes with many examples, it uses Node.js, which front-end engineers already know, and its author responds fairly quickly.

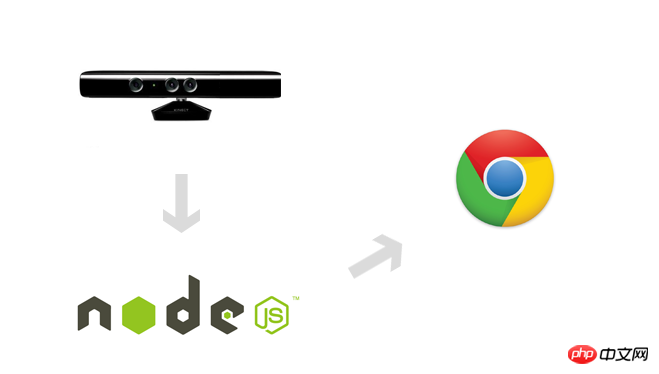

Kinect: captures player data, such as depth images and color images;

Node-Kinect2: reads the data from Kinect and post-processes it;

Browser: listens on the interface exposed by the Node application, receives the player data, and implements the game.

3. Preparation

First of all, you need to buy a Kinect.

1. System requirements:

These are hard requirements. I wasted far too much time in an environment that did not meet them.

USB 3.0

A graphics card that supports DirectX 11

Windows 8 or later

A browser that supports WebSockets

And, of course, a Kinect v2 sensor

2. Setting up the environment:

Connect the Kinect v2

Install the Kinect for Windows SDK 2.0 (KinectSDK-v2.0)

Install Node.js

Install Node-Kinect2 (`npm install kinect2`)


4. Example demonstration

Nothing beats a working example!

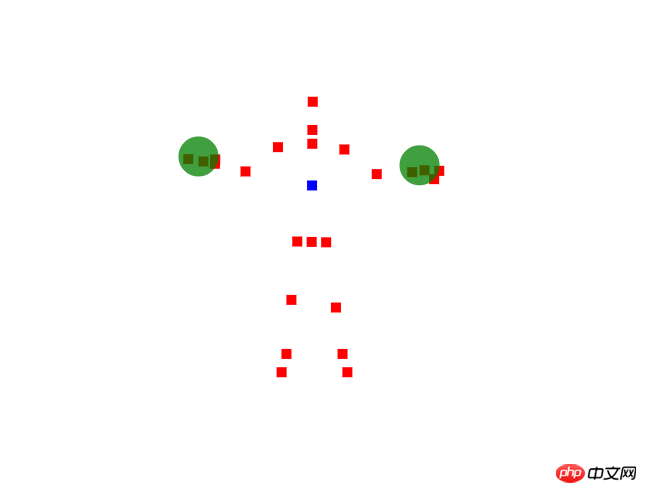

As shown in the figure above, we demonstrate how to obtain the human skeleton and identify the middle of the spine (spineMid) and hand gestures:

1. Server side

Create a web server that pushes the skeleton data to the browser.
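A minimal sketch of this forwarding step might look like the following. It relies only on the `on`, `open` and `openBodyReader` methods listed in the Kinect object table in section 5, plus the assumption that `open()` returns `false` when no sensor is available. The helper name `startBodyForwarding` is hypothetical, and the Kinect instance and broadcast function are passed in so the logic can be exercised without hardware.

```javascript
// Hypothetical helper: forwards every skeleton frame from Kinect to the browsers.
// `kinect` is assumed to be a node-kinect2 instance (open / on / openBodyReader);
// `broadcast(eventName, payload)` is any function that pushes data to clients.
function startBodyForwarding(kinect, broadcast) {
  // Assumption: open() returns false when no sensor can be opened
  if (!kinect.open()) {
    return false;
  }
  // Relay each skeleton frame unchanged, under the same event name
  kinect.on("bodyFrame", (bodyFrame) => {
    broadcast("bodyFrame", bodyFrame);
  });
  // Start the skeleton reader; frames then arrive via the "bodyFrame" event
  kinect.openBodyReader();
  return true;
}
```

In a real server, `kinect` would come from `new Kinect2()` after `const Kinect2 = require("kinect2")`, and `broadcast` could wrap socket.io's `io.emit`, e.g. `startBodyForwarding(kinect, (name, data) => io.emit(name, data))`.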


2. Browser side

The browser receives the skeleton data and draws it on a canvas.
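A sketch of the drawing step follows. It assumes each body has a `joints` array indexed by JointType, that each joint carries `depthX`/`depthY` normalized to the 0..1 range, and that the right hand's state is in `rightHandState`; these field names are assumptions to check against the frames your server actually sends. It draws every joint and highlights spineMid, turning it red when the right hand is closed (JointType and HandState values are from section 5). `toCanvasPoint` and `drawBody` are hypothetical names.

```javascript
// JointType / HandState values taken from the tables in section 5
const SPINE_MID = 1;
const HAND_CLOSED = 3;

// Scale a normalized joint position (assumed 0..1) to canvas pixels
function toCanvasPoint(joint, width, height) {
  return { x: joint.depthX * width, y: joint.depthY * height };
}

// Draw each joint of one body as a dot; spineMid is larger and colored
// red when the right hand is closed, yellow otherwise.
function drawBody(ctx, body, width, height) {
  body.joints.forEach((joint, index) => {
    const p = toCanvasPoint(joint, width, height);
    const isSpineMid = index === SPINE_MID;
    ctx.fillStyle = isSpineMid
      ? (body.rightHandState === HAND_CLOSED ? "red" : "yellow")
      : "white";
    ctx.beginPath();
    ctx.arc(p.x, p.y, isSpineMid ? 10 : 4, 0, Math.PI * 2);
    ctx.fill();
  });
}

// Browser wiring (assumes a socket.io client `socket` and a canvas `canvas`):
// socket.on("bodyFrame", (frame) => {
//   const ctx = canvas.getContext("2d");
//   ctx.clearRect(0, 0, canvas.width, canvas.height);
//   frame.bodies.filter((b) => b.tracked)
//     .forEach((b) => drawBody(ctx, b, canvas.width, canvas.height));
// });
```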


With just a few lines of code, we have captured the player's skeleton. Readers with some JavaScript experience should follow it easily; what they may not know is what data is available, how to get it, and what the skeleton joints are called. Node-Kinect2 has no documentation that tells us this.

5. Development Documentation

Node-Kinect2 does not provide documentation, so I compiled the following from my own testing:

1. Data types the server can provide:

| Data type | Description |
| --- | --- |
| bodyFrame | Skeleton data |
| infraredFrame | Infrared data |
| longExposureInfraredFrame | Similar to infraredFrame; appears to be higher-precision, optimized data |
| rawDepthFrame | Unprocessed depth data |
| depthFrame | Depth data |
| colorFrame | Color image |
| multiSourceFrame | All of the data |
| audio | Audio data (untested) |

2. Skeleton joint types

| Value | JointType | Joint |
| --- | --- | --- |
| 0 | spineBase | Base of the spine |
| 1 | spineMid | Middle of the spine |
| 2 | neck | Neck |
| 3 | head | Head |
| 4 | shoulderLeft | Left shoulder |
| 5 | elbowLeft | Left elbow |
| 6 | wristLeft | Left wrist |
| 7 | handLeft | Left palm |
| 8 | shoulderRight | Right shoulder |
| 9 | elbowRight | Right elbow |
| 10 | wristRight | Right wrist |
| 11 | handRight | Right palm |
| 12 | hipLeft | Left hip |
| 13 | kneeLeft | Left knee |
| 14 | ankleLeft | Left ankle |
| 15 | footLeft | Left foot |
| 16 | hipRight | Right hip |
| 17 | kneeRight | Right knee |
| 18 | ankleRight | Right ankle |
| 19 | footRight | Right foot |
| 20 | spineShoulder | Spine below the neck |
| 21 | handTipLeft | Left fingertips (index, middle, ring and little fingers) |
| 22 | thumbLeft | Left thumb |
| 23 | handTipRight | Right fingertips |
| 24 | thumbRight | Right thumb |
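Rather than hard-coding numbers such as `1` for spineMid, a small lookup built from the table above keeps game code readable. A sketch, assuming `body.joints` is indexed by the JointType value; `JOINT_TYPES` and `getJoint` are hypothetical names.

```javascript
// JointType names in index order, copied from the table above
const JOINT_TYPES = [
  "spineBase", "spineMid", "neck", "head",
  "shoulderLeft", "elbowLeft", "wristLeft", "handLeft",
  "shoulderRight", "elbowRight", "wristRight", "handRight",
  "hipLeft", "kneeLeft", "ankleLeft", "footLeft",
  "hipRight", "kneeRight", "ankleRight", "footRight",
  "spineShoulder", "handTipLeft", "thumbLeft", "handTipRight", "thumbRight",
];

// Look a joint up by name; fails loudly on typos instead of returning undefined
function getJoint(body, name) {
  const index = JOINT_TYPES.indexOf(name);
  if (index === -1) {
    throw new Error(`Unknown joint type: ${name}`);
  }
  return body.joints[index];
}
```

Usage: `getJoint(body, "spineMid")` instead of `body.joints[1]`.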

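3. Hand states (gestures). In my tests recognition was not very accurate, so use them only where precision requirements are low.

| Value | HandState | Meaning |
| --- | --- | --- |
| 0 | unknown | Cannot be recognized |
| 1 | notTracked | Not detected |
| 2 | open | Open palm |
| 3 | closed | Fist |
| 4 | lasso | "Scissors" hand with index and middle fingers held together |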
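Because hand-state recognition is noisy, one common remedy (not taken from the original project) is to accept a state only after it repeats for several consecutive frames. A sketch with the hypothetical names `HandState` and `createHandStateFilter`:

```javascript
// HandState values from the table above
const HandState = { unknown: 0, notTracked: 1, open: 2, closed: 3, lasso: 4 };

// Accept a hand state only after it has been seen in `requiredFrames`
// consecutive informative frames; until then, keep the last stable state.
function createHandStateFilter(requiredFrames) {
  let candidate = HandState.unknown;
  let count = 0;
  let stable = HandState.unknown;
  return function update(rawState) {
    // unknown / notTracked frames carry no information: skip them
    if (rawState === HandState.unknown || rawState === HandState.notTracked) {
      return stable;
    }
    if (rawState === candidate) {
      count += 1;
    } else {
      candidate = rawState;
      count = 1;
    }
    if (count >= requiredFrames) {
      stable = candidate;
    }
    return stable;
  };
}
```

Frames reported as unknown or notTracked are skipped rather than resetting the counter; whether that is the right choice depends on how often tracking drops out at your venue.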

4. Skeleton data
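A skeleton frame can contain several bodies, and only some of them are tracked. Assuming each frame has a `bodies` array whose entries carry a `tracked` flag, and joints with a `cameraZ` distance in meters (field names are assumptions to verify against real frames), a sketch for choosing the player nearest the sensor might be:

```javascript
// Hypothetical helper: return the tracked body closest to the sensor,
// measured at the spineMid joint (index 1), or null if nobody is tracked.
function pickActivePlayer(frame, spineIndex = 1) {
  let best = null;
  for (const body of frame.bodies) {
    if (!body.tracked) continue; // skip empty body slots
    const z = body.joints[spineIndex].cameraZ;
    if (best === null || z < best.joints[spineIndex].cameraZ) {
      best = body;
    }
  }
  return best;
}
```

Picking the nearest tracked body is one way to ignore onlookers who wander behind the player; `pickActivePlayer` is a hypothetical name.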


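5. The kinect object

| Method | Description |
| --- | --- |
| on | Listen for data |
| open | Open the Kinect |
| close | Close the Kinect |
| openBodyReader | Read skeleton data |
| open**Reader | Similar to the method above; reads other data types |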

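6. Lessons from Practice

Lessons from the Naruto motion-sensing game

Next, I will summarize some of the problems we ran into while developing the motion-sensing game for "Naruto Mobile" at TGC2016.

1. Before getting into them, let's go over the game flow.

1.1 The game starts when the player makes a gesture.

1.2 The player becomes the Fourth Hokage and runs left and right to dodge the Nine-Tails' attacks.

1.3 Striking the "ultimate technique" pose triggers the Fourth Hokage's ultimate move.

1.4 Players scan a QR code to get their photo from the event.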
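The flow above maps naturally onto a small state machine. The following sketch is hypothetical: the state and event names are illustrative, and in the real game the events would be raised by gesture detection.

```javascript
// Hypothetical game states and the events that move between them
const TRANSITIONS = {
  idle: { startGesture: "playing" },        // 1.1 gesture starts the game
  playing: { ultimateGesture: "ultimate" }, // 1.3 pose triggers the ultimate move
  ultimate: { ultimateDone: "qrCode" },     // 1.4 show the QR code for the photo
  qrCode: { reset: "idle" },                // back to attract mode
};

// Return the next state; events that do not apply are ignored
function nextState(state, event) {
  const row = TRANSITIONS[state];
  if (row && Object.prototype.hasOwnProperty.call(row, event)) {
    return row[event];
  }
  return state;
}
```

Keeping transitions in a table means a stray gesture detected in the wrong phase (for example the ultimate pose while idle) simply does nothing.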

2. Server side

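The game needs the player's skeleton data (movement and gestures) and color image data (a photo is taken when the player makes a certain gesture), so the server sends both to the client. Note that color image data is very large and has to be compressed.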
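One way to shrink the color data, sketched under assumptions: the color frame arrives as a raw RGBA `Buffer` of `width × height × 4` bytes, and we downscale it by an integer factor (nearest neighbour) before compressing with Node's built-in `zlib`. `downscaleRGBA` and `compressColorFrame` are hypothetical names, and the browser would need an inflate implementation to decode the payload.

```javascript
const zlib = require("zlib");

// Nearest-neighbour downscale of a raw RGBA buffer by an integer factor
function downscaleRGBA(src, width, height, factor) {
  const outW = Math.floor(width / factor);
  const outH = Math.floor(height / factor);
  const out = Buffer.alloc(outW * outH * 4);
  for (let y = 0; y < outH; y++) {
    for (let x = 0; x < outW; x++) {
      const s = (y * factor * width + x * factor) * 4; // source pixel offset
      const d = (y * outW + x) * 4;                    // destination offset
      src.copy(out, d, s, s + 4);                      // copy one RGBA pixel
    }
  }
  return { data: out, width: outW, height: outH };
}

// Shrink first, then deflate the remaining bytes
function compressColorFrame(rgba, width, height, factor = 4) {
  const small = downscaleRGBA(rgba, width, height, factor);
  return { width: small.width, height: small.height, payload: zlib.deflateSync(small.data) };
}
```

For a 1920×1080 Kinect v2 color frame, a factor of 4 yields 480×270, one sixteenth of the raw bytes before deflate even starts.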


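3. Client side

The client's business logic is fairly complex, so I will pick out the key steps.

3.1 Taking the player's photo involves processing a large amount of data, so to keep the page from stuttering we use a Web Worker.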
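A sketch of offloading per-pixel work to a worker. The processing done in the original project is not shown, so horizontal mirroring stands in as a hypothetical example job; `mirrorRGBA` and the file name `photo-worker.js` are illustrative.

```javascript
// Hypothetical per-pixel job: mirror an RGBA image horizontally
function mirrorRGBA(pixels, width, height) {
  const out = new Uint8ClampedArray(pixels.length);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const s = (y * width + x) * 4;
      const d = (y * width + (width - 1 - x)) * 4;
      out[d] = pixels[s];
      out[d + 1] = pixels[s + 1];
      out[d + 2] = pixels[s + 2];
      out[d + 3] = pixels[s + 3];
    }
  }
  return out;
}

// Worker entry point (photo-worker.js); only registers inside a Web Worker
if (typeof self !== "undefined" && typeof self.postMessage === "function") {
  self.onmessage = (event) => {
    const { pixels, width, height } = event.data;
    const result = mirrorRGBA(pixels, width, height);
    // Transfer the buffer back instead of copying it
    self.postMessage({ pixels: result, width, height }, [result.buffer]);
  };
}

// Main thread (browser):
// const worker = new Worker("photo-worker.js");
// worker.onmessage = (e) =>
//   ctx.putImageData(new ImageData(e.data.pixels, e.data.width, e.data.height), 0, 0);
// worker.postMessage({ pixels: imageData.data, width, height });
```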


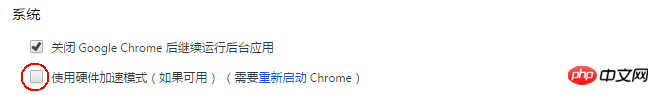

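3.2 With a projector connected, rendering a large area can produce a white screen; turning off the browser's hardware acceleration fixes it.

3.3 The venue was dimly lit and other players could interfere, so the tracked player's movement could jitter. We need to filter out bad data: when a sudden large displacement appears, that sample is discarded.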
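A sketch of this filtering rule: a sample that jumps more than `maxJump` from the last accepted value is dropped. The `maxRejects` escape hatch is an assumption of mine, not from the original project; without it, a player who genuinely moves fast could be frozen in place indefinitely. Names and thresholds are hypothetical.

```javascript
// Hypothetical outlier filter for one coordinate stream (e.g. spineMid x).
// Sudden jumps larger than maxJump are dropped, but after maxRejects
// consecutive rejections the new position is accepted as real movement.
function createJumpFilter(maxJump, maxRejects = 5) {
  let last = null;
  let rejected = 0;
  return function accept(value) {
    if (last === null || Math.abs(value - last) <= maxJump || rejected >= maxRejects) {
      last = value;
      rejected = 0;
      return value;
    }
    rejected += 1;
    return last; // treat as noise: keep the previous position
  };
}
```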


3.4 When the player is standing and only sways slightly from side to side, we treat the player as standing still.
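One way to express "standing still" is to keep the last few horizontal positions and check that their spread stays within a tolerance. A sketch with hypothetical names and thresholds:

```javascript
// Hypothetical detector: the player counts as standing when the horizontal
// position stayed within `tolerance` over the last `windowSize` frames.
function createStandingDetector(windowSize, tolerance) {
  const history = [];
  return function update(x) {
    history.push(x);
    if (history.length > windowSize) history.shift(); // sliding window
    if (history.length < windowSize) return false;    // not enough data yet
    return Math.max(...history) - Math.min(...history) <= tolerance;
  };
}
```

Using the spread (max minus min) rather than frame-to-frame deltas means slow drift across the window still counts as movement.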


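7. Outlook

1. Developing Kinect motion-sensing games with HTML5 lowers the technical barrier: front-end engineers can build motion-sensing games with ease;

2. A wide range of frameworks can be used, such as jQuery, CreateJS, and Three.js (three different rendering approaches);

3. The possibilities are endless. Imagine motion-sensing games combined with WebAR, with Web Audio, or with mobile devices. There is so much left to explore. Isn't that exciting?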
