'Unsupervised' machine translation? Can a model translate without any parallel data?

Deep learning is widely used in many everyday tasks, especially those that involve a degree of "human" perception, such as image recognition. Unlike many other machine learning algorithms, deep networks have one standout property: their performance keeps improving as more data becomes available. So the more data we have, the better we can expect them to perform.

One of the tasks that deep networks are best at is machine translation. They are currently the most advanced technology for this task, and they work well enough that even Google Translate uses them. Training a machine translation model requires sentence-level parallel data: every sentence in the source language must be paired with its translation in the target language. It is easy to see why this is a problem. For many language pairs, large amounts of such data are hard to obtain, and without that data deep learning cannot be used effectively.

How this article is structured

This article is based on a paper recently published by Facebook, "Unsupervised Machine Translation Using Monolingual Corpora Only". It does not follow the structure of the paper exactly. I have added some of my own interpretations to make it easier to understand.

Reading this article requires some basic knowledge about neural networks, such as loss functions, autoencoders, etc.

Problems with Machine Translation

As mentioned above, the biggest problem with using neural networks for machine translation is that they require a dataset of sentence pairs in the two languages. Such data exists for widely spoken language pairs such as English and French, but not for many other language pairs. When paired data is available, the problem is a supervised learning task.

Solution

The authors of this paper found a way to turn this into an unsupervised task. All it requires is one corpus in each of the two languages, for example any English novel and any Spanish novel. Note that the two novels do not have to be the same book; they do not need to be translations of each other.

Intuitively, the authors found a way to learn a latent space shared by the two languages.

Autoencoders Overview

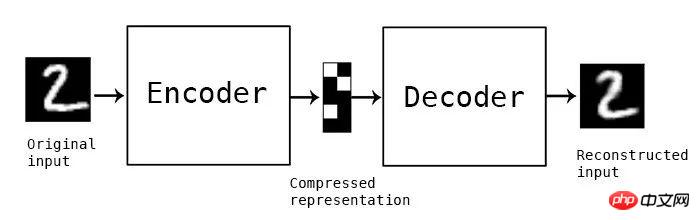

Autoencoders are a broad class of neural networks used for unsupervised tasks. They work by learning to recreate their own input at the output. The key to accomplishing this is a layer in the middle of the network called the bottleneck layer. Because this layer is smaller than the input, it has to capture the useful information about the input and discard the useless information.

A conceptual autoencoder: the middle block is the bottleneck layer, which stores the compressed representation.

In short, the space in which the bottleneck layer represents the input (that is, the space the encoder transforms the input into) is called the latent space.
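To make the bottleneck idea concrete, here is a minimal Python sketch (not from the paper): a linear autoencoder with tied weights whose bottleneck is a single number, trained by plain gradient descent. The names `encode`, `decode` and `train`, the learning rate and the toy data are illustrative assumptions. All training points lie on one line, so a one-number code is enough to reconstruct them.

```python
def encode(w, x):
    # Bottleneck: compress the 2-D input into a single number (the latent code).
    return w[0] * x[0] + w[1] * x[1]

def decode(w, z):
    # Expand the 1-D code back to 2-D using the same (tied) weights.
    return (z * w[0], z * w[1])

def reconstruction_loss(w, data):
    # Sum of squared differences between each input and its reconstruction.
    total = 0.0
    for x in data:
        xh = decode(w, encode(w, x))
        total += (x[0] - xh[0]) ** 2 + (x[1] - xh[1]) ** 2
    return total

def train(data, lr=0.01, steps=2000):
    w = [1.0, 0.0]  # arbitrary starting weights
    for _ in range(steps):
        g = [0.0, 0.0]
        for x in data:
            s = encode(w, x)
            r = (x[0] - s * w[0], x[1] - s * w[1])  # residual: x minus its reconstruction
            wr = w[0] * r[0] + w[1] * r[1]
            # Gradient of ||x - (w.x) w||^2 with respect to w.
            g[0] += -2.0 * (x[0] * wr + s * r[0])
            g[1] += -2.0 * (x[1] * wr + s * r[1])
        w = [w[0] - lr * g[0], w[1] - lr * g[1]]
    return w

# Hypothetical toy data: every point lies on the line through the origin along (0.6, 0.8).
data = [(0.6 * t, 0.8 * t) for t in (-2.0, -1.0, 1.0, 2.0)]
w = train(data)
print([round(v, 3) for v in w])          # close to [0.6, 0.8]
print(reconstruction_loss(w, data))      # close to 0
print(decode(w, encode(w, (0.8, -0.6))))  # a point off the line collapses to about (0, 0)
```

The learned weights point along the direction shared by the data, so the training points are reconstructed almost perfectly, while a point perpendicular to that direction is mapped to roughly zero: the bottleneck kept what matters for this data and discarded the rest.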

Denoising Autoencoder

If an autoencoder is trained to reconstruct its input exactly as given, it may not learn anything useful: the output can be reconstructed perfectly while the bottleneck layer holds no useful features. To solve this problem, we use a denoising autoencoder. First, the actual input is slightly corrupted by adding some noise. The network is then trained to reconstruct the original image (not the noisy version). By learning what the noise is (and what the truly useful features are), the network learns useful features of the image.

A conceptual example of a denoising autoencoder: a neural network takes the image on the left and reconstructs it as the image on the right. The green neurons together form the bottleneck layer.
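The key difference from a plain autoencoder is which input the loss is compared against. The minimal sketch below (hypothetical names; Gaussian noise is an assumed corruption for numeric inputs such as pixels) feeds a noisy input to the model but measures the error against the clean input. A "model" that simply copies its input scores perfectly without noise but not with it, which is why the denoising setup pushes the network to learn something more useful than copying.

```python
import random

def add_gaussian_noise(x, sigma, rng):
    # Corrupt every value of the input with independent Gaussian noise.
    return [v + rng.gauss(0.0, sigma) for v in x]

def denoising_loss(model, x, sigma, rng):
    # The model sees the *noisy* input, but its output is compared with the *clean* input.
    noisy = add_gaussian_noise(x, sigma, rng)
    output = model(noisy)
    return sum((o - v) ** 2 for o, v in zip(output, x))

def identity(v):
    # A trivial "model" that copies its input: perfect for a plain autoencoder.
    return list(v)

x = [0.2, 0.5, 0.9, 0.4]
rng = random.Random(0)
print(denoising_loss(identity, x, sigma=0.0, rng=rng))  # 0.0: copying is perfect without noise
print(denoising_loss(identity, x, sigma=0.1, rng=rng))  # greater than 0: copying no longer solves the task
```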

Why learn a common latent space?

The latent space captures the characteristics of the data (in our case, the data is sentences). So if we can obtain a space in which a sentence in language A and the same sentence in language B produce the same features, we can translate between them. Since the model then has the correct "features", encoding a sentence with the encoder for language A and decoding it with the decoder for language B yields a translation.

As you may have guessed, the authors use a denoising autoencoder to learn this feature space. They also found a way to make the autoencoders learn a common latent space (which they call an aligned latent space) in order to perform unsupervised machine translation.
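A toy sketch of the idea, under heavy simplifying assumptions: the hypothetical encoders below map each word to a discrete shared "concept" code rather than a continuous vector, and the decoders simply invert them. Because both languages share one latent space, pairing the English encoder with the Spanish decoder translates, while pairing an encoder with its own decoder reconstructs the input (autoencoding).

```python
# Hypothetical toy encoders: each maps a word to a code in a latent space shared by both languages.
ENCODER_A = {"the": "DET", "cat": "CAT", "sleeps": "SLEEP"}   # English
ENCODER_B = {"el": "DET", "gato": "CAT", "duerme": "SLEEP"}   # Spanish

# Each decoder inverts its encoder: latent code -> word in that language.
DECODER_A = {z: word for word, z in ENCODER_A.items()}
DECODER_B = {z: word for word, z in ENCODER_B.items()}

def encode(sentence, encoder):
    return [encoder[word] for word in sentence.split()]

def decode(latent, decoder):
    return " ".join(decoder[z] for z in latent)

def run(sentence, encoder, decoder):
    # Encode with one language's encoder, decode with any language's decoder.
    return decode(encode(sentence, encoder), decoder)

print(run("the cat sleeps", ENCODER_A, DECODER_B))  # el gato duerme (translation)
print(run("el gato duerme", ENCODER_B, DECODER_A))  # the cat sleeps (translation)
print(run("the cat sleeps", ENCODER_A, DECODER_A))  # the cat sleeps (reconstruction)
```

In the real model the latent codes are continuous vectors and the "inversion" is learned, which is exactly why an extra constraint is needed to make the two languages land in the same space.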

Denoising Autoencoders in Languages

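The authors use denoising autoencoders to learn features in an unsupervised manner. The loss function they define is:

$$
\mathcal{L}_{auto}(\ell) = \mathbb{E}_{x \sim \mathcal{D}_\ell,\ \hat{x} \sim d(e(C(x), \ell), \ell)}\left[\Delta(\hat{x}, x)\right]
$$

Equation 1.0: denoising autoencoder loss function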

Interpretation of Equation 1.0

ℓ is the language (in this setting there are two languages). x is the input, and C(x) is the result of adding noise to x; the noise function C is described below. e() is the encoder and d() is the decoder. The last term, Δ(x̂, x), is the sum of the token-level cross-entropy errors. Since the input is a sequence and the output is a sequence, we want every token to come out in the correct position, hence this loss. You can think of it as one classification problem per position, where the i-th token of the original input serves as the label for the i-th output token. Here, a token is the basic unit that cannot be broken down further; in our example, a token is a word. Equation 1.0 is therefore a loss that makes the network minimize the difference between its output (when given a noisy input) and the original, uncorrupted sentence.

The notation $\mathbb{E}_{x \sim \mathcal{D}_\ell}[\cdot]$ denotes an expectation: the input sentences are drawn from the data distribution of language ℓ, and the loss is averaged over them. This is just mathematical notation; in practice, the loss computed during training (the sum of cross-entropies) is averaged over sentences as usual.

The symbol ~ means "is sampled from a probability distribution".

We will not go into further detail here. You can learn more about this notation in Chapter 8.1 of the Deep Learning book by Goodfellow, Bengio and Courville.
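To make Δ(x̂, x) and the expectation concrete, here is a minimal Python sketch. It assumes, hypothetically, that the decoder's output at each position is given as a dictionary of word probabilities. Δ sums the negative log-probability of the original token at each position, and the expectation over sentences is estimated by averaging over a batch.

```python
import math

def token_cross_entropy(predicted, target):
    """Delta(x_hat, x): sum over positions of -log p(original token at that position).

    predicted: one dict per output position mapping each vocabulary word to its
               probability (a hypothetical stand-in for the decoder's softmax outputs).
    target:    the original, uncorrupted sentence as a list of tokens.
    """
    if len(predicted) != len(target):
        raise ValueError("one predicted distribution is needed per target token")
    return sum(-math.log(dist[token]) for dist, token in zip(predicted, target))

def average_loss(batch):
    # Estimates the expectation over x ~ D_l by averaging over (predicted, target) pairs.
    return sum(token_cross_entropy(p, x) for p, x in batch) / len(batch)

unsure = [{"the": 0.5, "cat": 0.5}, {"the": 0.5, "cat": 0.5}]
confident = [{"the": 1.0, "cat": 0.0}, {"the": 0.0, "cat": 1.0}]
target = ["the", "cat"]
print(token_cross_entropy(unsure, target))     # 2 * ln 2, about 1.386
print(token_cross_entropy(confident, target))  # 0.0
print(average_loss([(unsure, target), (confident, target)]))  # ln 2, about 0.693
```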

How to add noise

For images, you can add noise simply by adding random floating-point values to the pixels, but language is made of discrete tokens, so other methods are needed. The authors therefore designed their own way of creating noise. They denote their noise function as C(). It takes a sentence as input and outputs a noisy version of that sentence.

There are two different ways to add noise.

First, each word in the input can be dropped independently with probability p_wd.

Second, each word can be shifted from its original position, subject to the constraint (Equation 2.0)

$$
\forall i \in \{1, \dots, n\}, \quad |\sigma(i) - i| \le k
$$

where n is the length of the sentence and σ(i) is the shifted position of the i-th token. Equation 2.0 therefore means: "a token can move at most k positions to the left or right".

The authors set k = 3 and p_wd = 0.1.
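A minimal sketch of such a noise function C(), under stated assumptions: the function names are hypothetical, and the local shuffle uses one simple way to satisfy Equation 2.0. It adds a random offset in [0, k + 1) to each position and sorts by the result, which bounds every token's displacement by k. The defaults p_wd = 0.1 and k = 3 match the values the paper reports.

```python
import random

def word_dropout(tokens, p_wd, rng):
    # Drop each token independently with probability p_wd.
    return [t for t in tokens if rng.random() >= p_wd]

def local_shuffle(tokens, k, rng):
    # Slightly shuffle the sentence so that no token moves more than k positions:
    # add a uniform offset in [0, k + 1) to each index, then sort by the result.
    # Token j can only overtake an earlier token i if j - i < k + 1, i.e. j - i <= k.
    alpha = k + 1
    keys = [i + rng.random() * alpha for i in range(len(tokens))]
    order = sorted(range(len(tokens)), key=lambda i: keys[i])
    return [tokens[i] for i in order]

def add_noise(tokens, p_wd=0.1, k=3, rng=None):
    # C(x): word dropout followed by a local shuffle.
    if rng is None:
        rng = random.Random()
    return local_shuffle(word_dropout(tokens, p_wd, rng), k, rng)

rng = random.Random(0)
sentence = "the cat sat on the mat".split()
print(add_noise(sentence, p_wd=0.1, k=3, rng=rng))
```

With k = 0 the offsets stay below 1, so the order never changes; with p_wd = 0 no words are dropped.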

Cross-domain training

To learn to translate between the two languages, an input sentence in language A must be mapped to an output sentence in language B through some processing. The authors call this process cross-domain training. First, an input sentence x is sampled. Then the model from the previous iteration, M(), is used to generate a translation y, that is, y = M(x). Next, the same noise function C() described above is applied to y, giving C(y). The encoder for language B encodes this corrupted translation, and the decoder for language A tries to reconstruct the original sentence x from it. The model is trained with the same sum of token-level cross-entropy errors as in Equation 1.0.
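A minimal sketch of how the cross-domain training pairs could be assembled, with hypothetical names: `translate_a_to_b` stands in for the previous iteration's model M, and `corrupt` for the noise function C. The point it shows is that the model's input is the corrupted translation C(M(x)), while the target is the original sentence x.

```python
def cross_domain_pairs(batch_a, translate_a_to_b, corrupt):
    """Build (input, target) pairs for cross-domain training from sentences in language A.

    Each input C(M(x)) is in language B and would be fed to the language-B encoder;
    the language-A decoder is trained to output the original sentence x.
    """
    pairs = []
    for x in batch_a:
        y = translate_a_to_b(x)        # y = M(x), possibly a poor translation
        pairs.append((corrupt(y), x))  # learn to map C(y) back to x
    return pairs

# Hypothetical stand-ins: a crude word-by-word "previous model" and a no-op corruption.
TOY_DICT = {"the": "el", "cat": "gato", "sleeps": "duerme"}

def toy_translate(tokens):
    return [TOY_DICT.get(t, t) for t in tokens]

def no_noise(tokens):
    return list(tokens)

pairs = cross_domain_pairs([["the", "cat", "sleeps"]], toy_translate, no_noise)
print(pairs)  # [(['el', 'gato', 'duerme'], ['the', 'cat', 'sleeps'])]
```

Even when M is poor, the target is always a real sentence from the corpus, so the decoder keeps learning to produce fluent output in its own language.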

Using adversarial training to learn a common latent space

So far, we have not discussed how to learn a common latent space. The cross-domain training described above helps the two languages map into similar spaces, but a stronger constraint is needed to push the model to learn a truly shared latent space.

The authors use adversarial training for this. They add another model, called a discriminator, which takes the output of each encoder and predicts which language the encoded sentence belongs to. The encoders are then trained, using the gradients from the discriminator, to fool it, so that the two languages become indistinguishable in the latent space. This is conceptually no different from a standard GAN (Generative Adversarial Network). Because the encoder is an RNN, the discriminator receives the feature vector at each time step and predicts which language it comes from.
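A minimal sketch of the two adversarial losses, under stated assumptions. The discriminator here is a hypothetical logistic-regression classifier over latent vectors; in practice it would be a small neural network. The encoder's loss uses the flipped-label form common in GAN training, which rewards the encoder when the discriminator predicts the wrong language. Each time step's latent vector is scored separately, and the per-step losses are simply averaged.

```python
import math

def discriminator_prob_a(z, w, b):
    # Hypothetical discriminator: P(latent vector z came from language A).
    s = sum(wi * zi for wi, zi in zip(w, z)) + b
    return 1.0 / (1.0 + math.exp(-s))

def discriminator_loss(p_a, lang):
    # The discriminator is trained to predict the true language of the encoded sentence.
    return -math.log(p_a if lang == "A" else 1.0 - p_a)

def encoder_adversarial_loss(p_a, lang):
    # The encoder is trained to make the discriminator predict the *other* language.
    return -math.log(1.0 - p_a if lang == "A" else p_a)

def sequence_losses(latents, lang, w, b):
    # Score each time step's latent vector separately, then average over the sequence.
    probs = [discriminator_prob_a(z, w, b) for z in latents]
    d = sum(discriminator_loss(p, lang) for p in probs) / len(probs)
    e = sum(encoder_adversarial_loss(p, lang) for p in probs) / len(probs)
    return d, e

# A discriminator that is confident and correct: low loss for it, high loss for the encoder.
print(discriminator_loss(0.9, "A"), encoder_adversarial_loss(0.9, "A"))
# An undecided discriminator (all-zero weights): both losses equal ln 2.
print(sequence_losses([[0.0, 0.0], [1.0, -1.0]], "A", [0.0, 0.0], 0.0))
```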

Combining them together

The three losses above (autoencoder loss, cross-domain translation loss and adversarial loss) are added together, and the weights of all the models are updated simultaneously.

Since this is a sequence-to-sequence problem, the authors use long short-term memory networks (LSTMs). Note that there are two LSTM-based autoencoders, one for each language.

At a high level, training follows an iterative process with three main steps. Each iteration of the training loop looks roughly like this:
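Written out in the notation of Equation 1.0, the combined objective looks roughly as follows. In the paper each group of terms is also scaled by a weighting hyperparameter (λ). Here C is the noise function, M is the model from the previous iteration, e(·, ℓ) encodes a sentence in language ℓ, and d(·, ℓ) decodes into language ℓ. The adversarial term is the encoders' loss for fooling the discriminator; the discriminator itself is trained on its own classification loss.

$$
\begin{aligned}
\mathcal{L} ={}& \lambda_{auto}\left[\mathcal{L}_{auto}(A) + \mathcal{L}_{auto}(B)\right] + \lambda_{cd}\left[\mathcal{L}_{cd}(A, B) + \mathcal{L}_{cd}(B, A)\right] + \lambda_{adv}\,\mathcal{L}_{adv} \\
\mathcal{L}_{auto}(\ell) ={}& \mathbb{E}_{x \sim \mathcal{D}_\ell,\ \hat{x} \sim d(e(C(x), \ell), \ell)}\left[\Delta(\hat{x}, x)\right] \\
\mathcal{L}_{cd}(A, B) ={}& \mathbb{E}_{x \sim \mathcal{D}_A,\ \hat{x} \sim d(e(C(M(x)), B), A)}\left[\Delta(\hat{x}, x)\right]
\end{aligned}
$$

The cross-domain term has the same shape as the autoencoder term; the only difference is that the input is a corrupted translation in the other language instead of a corrupted copy of the sentence itself.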

1. Use the encoder for language A and the decoder for language B to get the translation.

2. Train each autoencoder to be able to regenerate an uncorrupted sentence when given a corrupted sentence.

3. Corrupt the translation obtained in step 1 and train the model to recreate the original sentence from it. For this step, the encoder of one language is trained together with the decoder of the other (the encoder for language B with the decoder for language A, and the encoder for language A with the decoder for language B).

It is worth noting that even if step 2 and step 3 are listed separately, the weights will be updated together.
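The loop can be sketched as a single function that accumulates the terms of one iteration. This is a skeleton under stated assumptions, not the authors' code. `translate`, `corrupt`, `seq_loss` and `adv_loss` are hypothetical callables standing in for the previous model, the noise function C, an encode-decode-Δ pass, and the encoders' adversarial loss. The usage example plugs in a recording stand-in to show which encoder and decoder each term pairs up.

```python
def training_iteration(batch_a, batch_b, translate, corrupt, seq_loss, adv_loss,
                       lambda_auto=1.0, lambda_cd=1.0, lambda_adv=1.0):
    """Return the combined loss for one iteration (all callables are hypothetical).

    translate(tokens, src, tgt): the model from the previous iteration.
    corrupt(tokens):             the noise function C.
    seq_loss(inp, target, enc_lang, dec_lang): encode `inp` with the enc_lang encoder,
        decode with the dec_lang decoder, and return Delta(output, target).
    adv_loss():                  the encoders' adversarial loss for this batch.
    """
    batches = {"A": batch_a, "B": batch_b}
    other = {"A": "B", "B": "A"}
    l_auto = 0.0
    l_cd = 0.0
    for lang, batch in batches.items():
        for x in batch:
            # Step 1: translate with the previous model.
            y = translate(x, lang, other[lang])
            # Step 2: denoising autoencoding within the same language.
            l_auto += seq_loss(corrupt(x), x, lang, lang)
            # Step 3: reconstruct x from its corrupted translation (other language -> lang).
            l_cd += seq_loss(corrupt(y), x, other[lang], lang)
    # One combined loss, so a single gradient step updates all the weights together.
    return lambda_auto * l_auto + lambda_cd * l_cd + lambda_adv * adv_loss()

calls = []

def recording_loss(inp, target, enc_lang, dec_lang):
    # Hypothetical stand-in for "encode, decode, compute Delta": records the call, returns 1.0.
    calls.append((list(inp), list(target), enc_lang, dec_lang))
    return 1.0

total = training_iteration(
    [["hi"]], [["hola"]],
    translate=lambda x, src, tgt: [tgt + ":" + w for w in x],
    corrupt=list,
    seq_loss=recording_loss,
    adv_loss=lambda: 0.5,
)
print(total)  # 4.5
```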

How to get the framework started

As mentioned above, the model uses its own translations from previous iterations to improve its translation ability. It is therefore important that it already has some translation ability before the iterative process begins. The authors use fastText word embeddings, aligned across the two languages, to infer a word-level bilingual dictionary, and translating word by word with this dictionary gives the initial model. This method is very simple, but it only needs to give the model a starting point.
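A minimal sketch of the initial word-by-word model, under stated assumptions: the 2-D vectors below are hand-made stand-ins for fastText embeddings that have already been aligned across the two languages, and the dictionary is induced by simple cosine nearest neighbor. The alignment step itself is not shown, and all names are hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def induce_dictionary(emb_a, emb_b):
    # For each language-A word, pick the language-B word with the most similar embedding,
    # assuming both embedding spaces are already aligned.
    return {wa: max(emb_b, key=lambda wb: cosine(va, emb_b[wb])) for wa, va in emb_a.items()}

def word_by_word(tokens, dictionary):
    # Initial model: translate each word independently; unknown words are copied as-is.
    return [dictionary.get(t, t) for t in tokens]

# Hypothetical hand-made "aligned" embeddings (real fastText vectors are much larger).
EMB_EN = {"cat": [0.9, 0.1], "dog": [0.1, 0.9], "the": [0.7, 0.7]}
EMB_ES = {"gato": [0.8, 0.2], "perro": [0.2, 0.8], "el": [0.6, 0.65]}

dictionary = induce_dictionary(EMB_EN, EMB_ES)
print(dictionary)                                        # {'cat': 'gato', 'dog': 'perro', 'the': 'el'}
print(word_by_word(["the", "cat", "sleeps"], dictionary))  # ['el', 'gato', 'sleeps']
```

Such a translator ignores word order and context entirely, which is fine here: it only has to produce translations good enough for the first round of cross-domain training.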

The entire framework is summarized in the flow chart below.

High-level workflow of the entire translation framework

This article explained a new technique for performing unsupervised machine translation. It combines several different losses to improve a single task, and uses adversarial training to constrain the behavior of the architecture.
