Innobackupex and mydumper, mysql backup tools

-------------------------------------------------- -

------Physical backup tool Innobackupex------

--------------------- --------------------------

Official Manual: https://www.percona.com/doc/percona- xtrabackup/LATEST/index.html

is mainly used for hot backup of data stored in engines such as InnoDB and MyISAM. During backup, the data to be backed up is loaded into the memory and then written to the backup datafile on the disk. The data changed during the backup is appended to the backup file in the same way as redo log recovery.

============================================== ================================================== ==

innobackupex full backup process:

1. Enable xtrabackup_logfile. Used to record these new data changes in xtrabackup_logfile in real time when new DML operations under the InnoDB storage engine produce data changes during the entire hot backup process. The recording format is the same as redo log

2, in page units. Copy the data files stored in InnoDB: shared table space ibdataX and .ibd files. Since the page may be being written during copying, the head and tail checksum values of the page will be different. Therefore, when generating backup files later, you need to apply log before using them to repair some incomplete pages.

3. flush tables with read lock. Add a read lock to the MyISAM table to copy the data stored in the non-transaction engine MyISAM

4. Copy .frm, .MYD, and .MYI files.

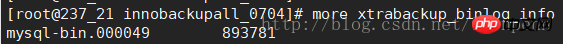

5. Get the latest position of binlog at the moment the backup is completed: xtrabackup_binlog_info (InnoDB data files may be updated).

6. unlock tables;

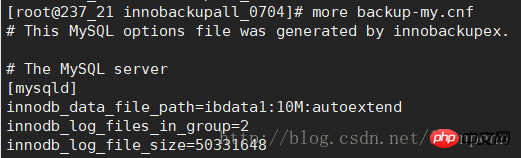

7. (1) After the backup is completed, record the minimum parameters required to start the backup to backup-my.cnf

(2) Record the LSN to xtrabackup_logfile.

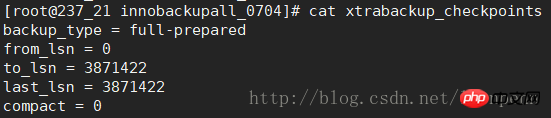

(3) Record the backup type (full-backuped: full, incremental: incremental; backups that have been applied log will be modified to full-prepared) and other information to xtrabackup_checkpoints.

(4) Record some other backup information: In addition, all the generated files are:

(1) backup-my.cnf

(2) xtrabackup_binlog_info: When using MyISAM for data backup, More accurate than xtrabackup_binlog_pos_innodb

(2) xtrabackup_binlog_info: When using MyISAM for data backup, More accurate than xtrabackup_binlog_pos_innodb

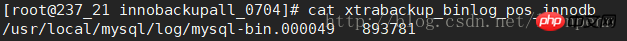

(3) xtrabackup_binlog_pos_innodb: The newly generated file after apply log only records the binlog position of innodb and does not calculate the binlog generated by MyISAM

(3) xtrabackup_binlog_pos_innodb: The newly generated file after apply log only records the binlog position of innodb and does not calculate the binlog generated by MyISAM

(4)xtrabackup_checkpoints

(4)xtrabackup_checkpoints

##(5)xtrabackup_info

(6) xtrabackup_logfile (core file)

When backing up MyISAM, a full backup operation is still performed.

Backup statement example

(1) Full:

Use --tables-file with a text file recording the complete table name (one table name per line)

Use --databases to specify libraries and tables (for example, backup table: mydatabase.mytable and library: mysql)

innobackupex --databases="mydatabase.mytable mysql" /path/to/backup --no -timestamp --user=backup --password=backup

step2:

prepare partial backup: innobackupex --apply-log --export /path/to/backup/

(--databases unspecified database tables will prompt "does note exist" during the prepare phase, you can ignore this message)

(3) Incremental backup (assuming that it is fully prepared, Path: $FULLBACKUP)

step1:

First incremental backup (based on full backup): innobackupex --incremental $INCREMENTALBACKUP_1 --incremental-basedir=$FULLBACKUP --user=USER --password=PASSWORD

Second incremental backup (based on the first incremental backup): innobackupex --incremental $INCREMENTALBACKUP_2 --incremental-basedir=NCREMENTALBACKUP_1 --user=USER -- password=PASSWORD

(......)

Nth time

step2:prepare

innobackupex -- apply-log --redo-only $FULLBACKUP --use-memory=1G --user=USER --password=PASSWORD

innobackupex --apply-log --redo-only $FULLBACKUP--incremental- dir=$INCREMENTALBACKUP_1 --use-memory=1G --user=DVADER --password=D4RKS1D3

innobackupex --apply-log --redo-only $FULLBACKUP --incremental-dir=$ INCREMENTALBACKUP_2 --use-memory=1G --user=DVADER --password=D4RKS1D3

(...)

innobackupex --apply-log--redo -only $FULLBACKUP --incremental-dir=$INCREMENTALBACKUP_N --use-memory=1G --user=DVADER --password=D4RKS1D3

innobackupex --apply-log $FULLBACKUP --use- memory=1G --user=$USERNAME --password=$PASSWORD

--use-memory: Specify the memory that can be used by prepare. Use it in conjunction with --apply-log to speed up prepare.

In the prepare stage, --redo-only needs to be added during the first full backup and incremental backup integration process. Finally, after all incremental backups have been integrated, the full backup files that have been integrated into the incremental backups need to be prepared again.

============================================== ================================================== ==

Some other common parameters:

Use with: --stream=xbstream --compress --compress-threads=8 --parallel=4 > backupfile.xbstream (xbstream option The ibd files of the table will be compressed and streamed one by one, so the innodb-file-per-table parameter needs to be turned on)

--parallel: backup concurrency number (referring to copying the ibd file, different from compress-threads Is the number of threads performing compression)

--stream: tar, xbstream. Often used together with: innobackupex [...] --stream=tar /backupdir/ | gzip - > backupfile.tar.gz

--tmpdir: the temporary directory before streaming to the remote machine Location

--encryption: Backup encryption. In actual situations, the more commonly used one is

(1) openssl, adding encryption options to the above tar+gz method: innobackupex [...] --stream=tar /backupdir/ | gzip - | openssl aes -256-cbc -k "abc" > backupfile.tar.gz.aes-256-cbc

(2)des3,innobackupex [...] --stream=tar /backupdir/ | gzip - | openssl des3 -salt -k "abc" > backupfile.tar.gz.des3

======================== ================================================== ========================

innobackupex recovery process

1. innobackupex -- apply-log, the purpose is to obtain the redo log from xtrabackup_log, update some incomplete pages, make the head and tail checksum values, and update the LSN to the latest LSN number in the backup process; (actually it should be divided into the backup process)

2. Copy the backup data to the database data directory;

3. Modify the permissions of the data directory and start it.

============================================== ================================================== ==

Recovery statement example:

1. Close the instance before recovery

2. Back up the original data directory (the redo log and undo log also need to be backed up if they are separated)

3. innobackupex --copy-back --user=username --password='user_passwd' --socket=/usr/local/mysql/run/mysqld.sock --defaults-file=/usr /local/mysql/my.cnf /tmp/innobackup_all (or directly copy the prepared backup file)

4. Modify directory permissions and start mysql

===== ================================================== =========================================

From Quanbei Export single table data from (The prerequisite is that the innodb_file_per_table option needs to be turned on)

With the Percona XtraBackup, you can export individual tables from any InnoDB database, and import them into Percona Server with XtraDB or MySQL 5.6 (The source doesn' t have to be XtraDB or or MySQL 5.6, but the destination does). This only works on individual .ibd files, and cannot export a table that is not contained in its own .ibd file.

is required in During the prepare phase, a single table is exported through the --export option:

Once a full backup is created, prepare it with the --export option:

$ innobackupex --apply-log --export /path/to/backup

This will create for each InnoDB with its own tablespace a file with .exp extension.

A file ending with .exp will be created for the table space of each innodb table

The output file format is:

/data/backups/mysql/test/export_test.exp

/data/backups/mysql/test/export_test.ibd

/data/backups/mysql/test/export_test.cfg

When importing tables from other servers, you need to first create table (because there is no table structure information in the independent table file):

mysqlfrm --diagnostic /data/2017-03-22_16-13-00/yayun/t1.frm (using the mysql-utilities tool mysqlfrm reads the table structure from the backup file)

mysql> CREATE TABLE mytable (...) ENGINE=InnoDB; (Go to create table based on the table structure read previously)

Delete table space files:

mysql> ALTER TABLE mydatabase.mytable DISCARD TABLESPACE;

Copy the exported .ibd and .exp files to the data directory:

After this , copy mytable.ibd and mytable.exp (or mytable.cfg if importing to MySQL 5.6) files to database's home

Then import tablespace:

mysql> ALTER TABLE mydatabase.mytable IMPORT TABLESPACE;

----------------------------------------- ---

------Logical backup tool mydumper------

------------------- -----------------------

Some English documents are excerpted from the README on GitHub: https://github.com/maxbube/ mydumper

In the MySQL database version 5.5/5.6, compared to using the officially provided mysqldump for single-thread backup, the multi-thread backup tool mydumper has unique advantages. (For versions after MySQL 5.7.11, the official has finally fixed the problem of consistent backup of the parallel logic backup tool mysqlpump. For mysqlpump, please refer to Daniel Jiang Chengyao’s introduction: http://www.tuicool.com/articles/E77bYz7)

Disadvantages: It is difficult to back up to a remote backup center through concurrent streaming, and it is more likely to be directly downloaded locally.

== How does consistent snapshot work? ==

This is all done following best MySQL practices and traditions:

* As a precaution, slow running queries on the server either abort the dump, or get killed

* Global write lock is acquired ("FLUSH TABLES WITH READ LOCK")

* Various metadata is read ("SHOW SLAVE STATUS","SHOW MASTER STATUS")

* Other threads connect and establish snapshots ("START TRANSACTION WITH CONSISTENT SNAPSHOT")

** On pre-4.1.8 it creates dummy InnoDB table, and reads from it.

* Once all worker threads announce the snapshot establishment, master executes "UNLOCK TABLES" and starts queuing jobs.

mydumper's implementation mechanism for consistency:

* When encountering a slow query, either dump stops executing, or mydumper kills the slow query . (The --long-query-guard parameter is used to agree on the time for a slow query. The default is 60 seconds. If --kill-long-queries is added to this parameter, the slow query will be actively killed. If it is not added, mydumper will automatically kill the slow query when it encounters the slow query. In this case, the operation will stop)

* Using "FLUSH TABLES WITH READ LOCK" to apply a global read lock will prevent DML statements

* View metadata: "SHOW SLAVE STATUS", "SHOW MASTER STATUS"

* "START TRANSACTION WITH consistent snapshot": When start transaction opens the transaction, it immediately creates a snapshot of the current transaction's consistent read. Without the with option, the transaction will not actually start until the first statement in the transaction is executed, and a consistent read snapshot will be established

* Starting from version 4.1.8, mydumper creates an InnoDB type virtual table. Reading data from it

* Once all threads report that the consistency snapshot is established, execute "UNLOCK TABLES" and start the queue task.

Backup statement example:

mydumper --user=username --password=user_passwd --socket=/... --regex '^( ?!(mysql))' --output=/backupdir --compress --verbose=3 --logfile=/backupdir/mydumper_backup.log

Explanation of common parameters:

--database Specify the library that needs to be backed up

--tables-list Specify the tables that need to be backed up, separated by, (when it conflicts with the regex option, the regex shall prevail)

--regex '^(?!(mysql |test))': Database filtering options

--output=/backupdir: Backup file output path

--compress: Compression output file (.gz suffix)

--verbose=3: Output log level info to facilitate observation of backup status (0 = silent, 1 = errors, 2 = warnings, 3 = info, default 2)

--logfile=/ backupdir/mydumper_backup.log: Specify the location of the mydumper running log file

--threads Specify the number of threads used during backup, the default is 4

--statement-size : Limit the maximum length of sql statements (mydumper will merge sql during backup)

--rows: Split the table by the number of rows. Improve concurrency performance when myloader

--chunk-filesize: Split table data according to the size of the output file. Improve the concurrency performance of myloader

--no-locks: Do not lock the table (the data may be inconsistent)

--binlogs: Back up the binlog. When the backup fails, you can check the backup binlog and find the cause of the error near the backup location

Output backup file directory:

* Library structure: dbname-schema-create. sql.gz

* Table structure: dbname.tblname1-schema.sql.gz

* Table data: dbname.tblname1.sql.gz

(Each library and table has its own independent backup file. When only a single table needs to be restored, restore the single table through mydumper. Table full data + binlog recovery increment)

* metadata: including the current location of the binlog during backup

------------------ --------------------------------------------------

Started dump at: 2017-07-04 09:45:57

SHOW MASTER STATUS:

Log: mysql-bin.000048

Pos: 107

GTID:(null)

Finished dump at: 2017-07-04 09:45:57

-------------------------- -------------------------------------

* mydumper_backup.log : Record the running status of the backup program

Restore command myloader related parameters explanation

--directory backup file location

--queries-per-transaction The number of sql executed for each transaction, the default is 1000

--overwrite-tables Drop the existing table first and then restore it (it is required to back up the table structure when backing up files)

--database specifies the database that needs to be restored

--enable-binlog is used to restore data Operation record binlog

--threads specifies the number of threads used during restoration, the default is 4

--enable-binlog: restore the backed-up binlog

Note: myloader can only be in the library Restore at the level level. Single table restoration can directly call the corresponding file containing sql statements in the backup file

In addition, innobackupex backs up the data before this point in time when the backup is complete, while mydumper (including mysqldump , mysqlpump, etc.) The time point of the backed up data is the time when the backup starts.

Let me mention the main idea of recovery: whether it is physical backup or logical backup, the most reliable recovery premise is that the database needs to temporarily prohibit data writing. Then restore the full backup first, apply incremental backup to the nearest failure point, and then apply the binlog log and skip the failure point.

Always consider that in order to stop writing operations on the online server for a few misoperation statements on a single table, and use the method of full + incremental recovery, it is a bit of a waste of effort, and the gain outweighs the loss. If there is no standby database with a replication delay strategy, using the files backed up by mydumper to restore a single table, or taking a step back and using flashback is a quick and good solution.

Tips:

After using Innobackupex or mydumper to restore most of the data, use mysqlbing to fill in the data parts that cannot be covered by the above backup program.

Mysqlbinlog parameter explanation:

–start-position=N (included when reading)

Start reading from the event when the first position in the binary log is equal to the N parameter.

–stop-position=N (not included when reading)

Stop reading from the event when the first position in the binary log is equal to or greater than the N parameter.

When using mysqlbinlog to apply binlog logs, if you need to span multiple files, read multiple files at the same time. The start-position is the starting point of the first binlog file, and the stop-position is the ending point of the last file. .

Example: mysql-bin.000048 (pos856), mysql-bin.000051 (pos1042)

/usr/local/mysql/bin/mysqlbinlog mysql-bin.000048 mysql-bin. 000049 mysql-bin.000050 mysql-bin.000051 --start-position=856 --stop-position=1042 > /tmp/backup1/backup_new.sql

Tips:

Always have backup monitoring;

The backup objects of the above two backup tools are mainly included in the data directory. It should be noted that the binlog also contains some data, and the binlog is also required. Make backups.

A brief mention about the backup strategy. The backup strategy we formulate is determined based on the type of business.

For data growth business, a full + incremental strategy is adopted, while for data update type, full backup is adopted.

Logical backup is often used for operations such as MySQL version upgrade or single table recovery.

Considering the above, online databases generally adopt physical backup as the main method, logical backup as the supplement, and binlog backup.

Reference document:

innobackupex backup generation file description:

http://fordba.com/xtrabackup-produce-file-intruduction.html

Recipes for innobackupex:

https://www.percona.com/doc/percona-xtrabackup/LATEST/how-tos.html#recipes-ibk

How to generate from innobackupex Restoring a single table in full backup:

https://www.percona.com/doc/percona-xtrabackup/2.2/innobackupex/restoring_individual_tables_ibk.html

https:// www.percona.com/blog/2012/01/25/how-to-recover-a-single-innodb-table-from-a-full-backup/

https://www .percona.com/blog/2017/03/15/restore-single-innodb-table-full-backup-accidentally-dropping/

Actual single table recovery case:

http://www.cnblogs.com/gomysql/p/6600616.html

Create and restore Partial Backups:

https://www.percona.com /doc/percona-xtrabackup/2.2/innobackupex/partial_backups_innobackupex.html

Mysqldump combined with binlog recovery case:

http://blog.chinaunix.net/uid-25135004-id- 1761635.html

http://www.cnblogs.com/hanyifeng/p/5756462.html

Use mysqldump full file for single database recovery (to be tested)

https://stackoverflow.com/questions/1013852/can-i-restore-a-single-table-from-a-full-mysql-mysqldump-file

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1389

1389

52

52

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

You can open phpMyAdmin through the following steps: 1. Log in to the website control panel; 2. Find and click the phpMyAdmin icon; 3. Enter MySQL credentials; 4. Click "Login".

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL is an open source relational database management system, mainly used to store and retrieve data quickly and reliably. Its working principle includes client requests, query resolution, execution of queries and return results. Examples of usage include creating tables, inserting and querying data, and advanced features such as JOIN operations. Common errors involve SQL syntax, data types, and permissions, and optimization suggestions include the use of indexes, optimized queries, and partitioning of tables.

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

Redis uses a single threaded architecture to provide high performance, simplicity, and consistency. It utilizes I/O multiplexing, event loops, non-blocking I/O, and shared memory to improve concurrency, but with limitations of concurrency limitations, single point of failure, and unsuitable for write-intensive workloads.

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's position in databases and programming is very important. It is an open source relational database management system that is widely used in various application scenarios. 1) MySQL provides efficient data storage, organization and retrieval functions, supporting Web, mobile and enterprise-level systems. 2) It uses a client-server architecture, supports multiple storage engines and index optimization. 3) Basic usages include creating tables and inserting data, and advanced usages involve multi-table JOINs and complex queries. 4) Frequently asked questions such as SQL syntax errors and performance issues can be debugged through the EXPLAIN command and slow query log. 5) Performance optimization methods include rational use of indexes, optimized query and use of caches. Best practices include using transactions and PreparedStatemen

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

MySQL is chosen for its performance, reliability, ease of use, and community support. 1.MySQL provides efficient data storage and retrieval functions, supporting multiple data types and advanced query operations. 2. Adopt client-server architecture and multiple storage engines to support transaction and query optimization. 3. Easy to use, supports a variety of operating systems and programming languages. 4. Have strong community support and provide rich resources and solutions.

How to connect to the database of apache

Apr 13, 2025 pm 01:03 PM

How to connect to the database of apache

Apr 13, 2025 pm 01:03 PM

Apache connects to a database requires the following steps: Install the database driver. Configure the web.xml file to create a connection pool. Create a JDBC data source and specify the connection settings. Use the JDBC API to access the database from Java code, including getting connections, creating statements, binding parameters, executing queries or updates, and processing results.

How to view sql database error

Apr 10, 2025 pm 12:09 PM

How to view sql database error

Apr 10, 2025 pm 12:09 PM

The methods for viewing SQL database errors are: 1. View error messages directly; 2. Use SHOW ERRORS and SHOW WARNINGS commands; 3. Access the error log; 4. Use error codes to find the cause of the error; 5. Check the database connection and query syntax; 6. Use debugging tools.

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Effective monitoring of Redis databases is critical to maintaining optimal performance, identifying potential bottlenecks, and ensuring overall system reliability. Redis Exporter Service is a powerful utility designed to monitor Redis databases using Prometheus. This tutorial will guide you through the complete setup and configuration of Redis Exporter Service, ensuring you seamlessly build monitoring solutions. By studying this tutorial, you will achieve fully operational monitoring settings