Example analysis of big data processing ideas in PHP

I ran into a problem with large data-processing reports in a previous version of my site. I solved it back then with synchronous handler code, but it ran very slowly, and I had to raise the maximum script execution time to 10 to 15 minutes. Is there a better way to handle large amounts of data in a PHP site? Ideally I'd like to run the processing in the background and make it as fast as possible. The process involves thousands of financial records. I have since rebuilt the site with Laravel.

Most popular answer (from spin81):

People will tell you to use queues and so on, and that's a good idea, but your problem doesn't seem to be with PHP. Laravel/OOP is great, but nothing about the reports you describe suggests they should give you this much trouble. Looking at it from a different angle, I'd like to see the SQL queries you use to fetch this data. As others have said, if your table has only thousands of rows, your report shouldn't take 10 to 15 minutes. In fact, if you do everything right, you can probably process thousands of records and produce the same report in under a minute.

1. If you are running thousands of queries, see whether you can run just a few instead. I once used a PHP function to cut 70,000 queries down to a dozen, which reduced the run time from minutes to a fraction of a second.

2. Run EXPLAIN on your queries to see whether any indexes are missing. I once made a query four orders of magnitude faster just by adding an index. This is not an exaggeration. If you use MySQL, learn how to read EXPLAIN output; this "black magic" skill will stun you and your friends.

3. If you run a SQL query, fetch the results, and then add up a lot of numbers yourself, see whether you can use aggregate functions such as SUM() and AVG() together with a GROUP BY clause instead. As a general rule, let the database do as much of the calculation as possible. One very important tip: (at least in MySQL) boolean expressions evaluate to 0 or 1, so if you're creative you can do some very surprising things with SUM() and its friends.

4. Okay, here's one final tip from the PHP side: check whether you are calculating the same time-consuming numbers many times. For example, say the cost of 1000 bags of potatoes is expensive to calculate. You don't need to calculate that cost 500 times: compute it once, store the cost of 1000 bags of potatoes in an array or something similar, and reuse it instead of repeating the same calculation over and over. This technique is called memoization, and it often works wonders in reports like yours.
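
Tip 1 above (turning thousands of queries into a few) can be sketched as follows. This is a minimal illustration, not the answerer's code: it is written in Python with an in-memory SQLite database standing in for MySQL, and the `accounts`/`transactions` schema and the function names are hypothetical. The slow version issues one query per account (101 round trips for 100 accounts); the batched version lets a single `JOIN` with `GROUP BY` return every total at once.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    # Hypothetical schema standing in for the poster's financial data.
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE accounts (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE transactions (
            id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL);
    """)
    conn.executemany("INSERT INTO accounts (id, name) VALUES (?, ?)",
                     [(i, f"account {i}") for i in range(1, 101)])
    conn.executemany("INSERT INTO transactions (account_id, amount) VALUES (?, ?)",
                     [(i % 100 + 1, float(i)) for i in range(1000)])
    return conn


def totals_per_account_slow(conn: sqlite3.Connection) -> dict:
    # N+1 pattern: 1 query for the ids, then 1 query per account.
    totals = {}
    ids = [row[0] for row in conn.execute("SELECT id FROM accounts")]
    for account_id in ids:
        (total,) = conn.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM transactions WHERE account_id = ?",
            (account_id,)).fetchone()
        totals[account_id] = total
    return totals


def totals_per_account_fast(conn: sqlite3.Connection) -> dict:
    # Batched: one JOIN + GROUP BY returns every account's total in one query.
    rows = conn.execute("""
        SELECT a.id, COALESCE(SUM(t.amount), 0)
        FROM accounts AS a
        LEFT JOIN transactions AS t ON t.account_id = a.id
        GROUP BY a.id
    """).fetchall()
    return dict(rows)


if __name__ == "__main__":
    conn = build_demo_db()
    fast = totals_per_account_fast(conn)
    # Same numbers either way: 101 queries versus 1.
    assert fast == totals_per_account_slow(conn)
    print(len(fast), "account totals")
```

In Laravel, the usual equivalents are eager loading with Eloquent's `with()` or a single aggregate query built with the query builder, rather than querying inside a loop.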
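
To illustrate tip 2, the sketch below uses SQLite's `EXPLAIN QUERY PLAN` (SQLite's counterpart to MySQL's `EXPLAIN`; the output format differs) to compare the plan of a hypothetical report query before and after adding an index. The table, index, and column names are assumptions for illustration. Before the index, the plan should report a full scan of the table; afterwards it should report a search using the index. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> list:
    # EXPLAIN QUERY PLAN returns 4-column rows; the 4th column is the readable detail.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions "
             "(id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO transactions (account_id, amount) VALUES (?, ?)",
                 [(i % 100, float(i)) for i in range(10_000)])

# Hypothetical report query filtering on an unindexed column.
report_sql = "SELECT SUM(amount) FROM transactions WHERE account_id = ?"

before = query_plan(conn, report_sql, (42,))  # expected: a full table SCAN
conn.execute("CREATE INDEX idx_transactions_account ON transactions (account_id)")
after = query_plan(conn, report_sql, (42,))   # expected: SEARCH ... USING INDEX

print("before:", before)
print("after: ", after)
```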
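
Tip 3 can be sketched like this, again in Python with SQLite standing in for MySQL and a hypothetical `transactions` table. One `GROUP BY` query computes counts, totals and averages, and uses the 0/1 value of a comparison to count and sum only the negative amounts, so no per-row arithmetic is left for the application. In databases where comparisons are not numeric (PostgreSQL, for example), `SUM(CASE WHEN amount < 0 THEN 1 ELSE 0 END)` is the portable equivalent.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (account_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO transactions VALUES (?, ?)", [
    (1, 120.0), (1, -40.0), (1, 15.0),
    (2, -300.0), (2, 50.0),
])

# One query does all the arithmetic. In SQLite (as in MySQL) a comparison
# such as "amount < 0" evaluates to 1 or 0, so SUM() over it counts matches
# and SUM(amount * (amount < 0)) totals only the matching amounts.
rows = conn.execute("""
    SELECT account_id,
           COUNT(*)                   AS n,
           SUM(amount)                AS balance,
           AVG(amount)                AS average,
           SUM(amount < 0)            AS n_debits,
           SUM(amount * (amount < 0)) AS total_debits
    FROM transactions
    GROUP BY account_id
    ORDER BY account_id
""").fetchall()

for row in rows:
    print(row)
```

With this sample data, account 1 comes out with a balance of 95.0 and one debit totalling -40.0, all computed inside the database.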

The above is the detailed content of Example analysis of big data processing ideas in PHP. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

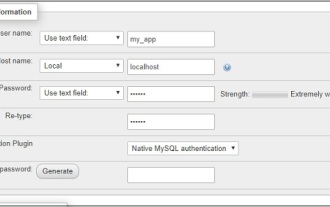

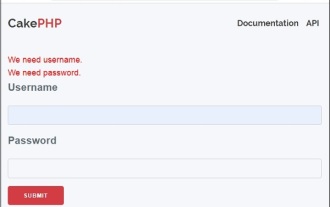

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

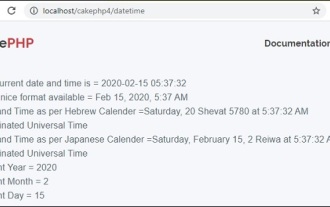

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

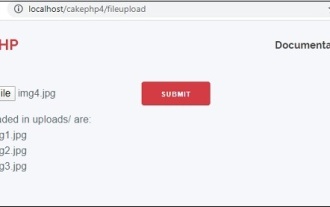

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

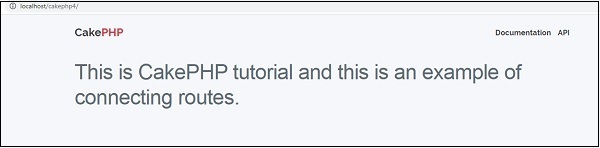

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.