Several key technologies of big data architecture

Rebuilding an enterprise IT infrastructure platform is a complex task. Replatforming is often triggered by a changing set of key business drivers, and that is exactly what is happening now. Simply put, the platforms that have dominated enterprise IT technology for nearly 30 years can no longer meet the demands of the workloads needed to drive business forward.

The core of digital transformation is data, which has become the most valuable asset in business. Organizations have long struggled to use the data they collect because of incompatible formats, the limitations of traditional databases, and the inability to flexibly combine data from multiple sources. Emerging technologies promise to change all this.

Improving software deployment models is a major aspect of removing barriers to data usage. Greater “data agility” also requires more flexible databases and more scalable real-time streaming platforms. In fact, there are at least seven foundational technologies that can be combined to provide enterprises with a flexible, real-time "data fabric."

Unlike the technologies they are replacing, these seven software innovations can scale to meet the needs of many users and many use cases. For businesses, they make it possible to reach faster, better-informed decisions and to create better customer experiences.

1. NoSQL database

The RDBMS has dominated the database market for nearly 30 years. However, as data volumes keep growing and data must be processed ever faster, traditional relational databases have shown their shortcomings. NoSQL databases are taking over because of their speed and ability to scale. Document databases in particular provide a simpler model from a software engineering perspective. This simpler development model speeds time to market and helps businesses respond more quickly to customer and internal user needs.
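
To illustrate the simpler model, here is a minimal sketch in plain Python with no real database involved. The order record, its field names, and the `find` helper are all hypothetical; they only show how a document keeps related data together where a relational schema would typically split it across several joined tables.

```python
import json

# A hypothetical order stored as one self-contained document.
# A relational schema would usually spread this over orders,
# customers, and line_items tables.
order = {
    "order_id": 1001,
    "customer": {"name": "Ada", "city": "London"},
    "items": [
        {"sku": "A-1", "qty": 2, "price": 9.50},
        {"sku": "B-7", "qty": 1, "price": 20.00},
    ],
}

collection = [order]  # stand-in for a document collection


def find(docs, path, value):
    """Return documents whose nested field (dotted path) equals value."""
    matches = []
    for doc in docs:
        node = doc
        for key in path.split("."):
            node = node.get(key) if isinstance(node, dict) else None
            if node is None:
                break
        if node == value:
            matches.append(doc)
    return matches


london_orders = find(collection, "customer.city", "London")
order_total = sum(item["qty"] * item["price"] for item in order["items"])
print(json.dumps(london_orders[0]["customer"]), order_total)
```

The application reads and writes the same nested structure it works with in code, which is the engineering simplification the paragraph above describes.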

2. Real-Time Streaming Platform

Responding to customers in real time is crucial to customer experience. It is no secret that consumer-facing industries have experienced massive disruption over the past decade, and much of it comes down to a business's ability to react to users in real time. Moving to a real-time model requires event streaming.

Message-driven applications have been around for many years. However, today's streaming platforms operate at far greater scale and far lower cost than before. Recent advances in streaming technology have opened the door to many new ways to optimize a business. By providing real-time feedback loops for software development and testing teams, event streaming can also help enterprises improve product quality and develop new software faster.
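
The core idea of an event stream can be sketched in a few lines. The following is an in-memory illustration only, not the API of Apache Kafka or any other platform; the `EventLog` class, its method names, and the event fields are assumptions made for this example. It shows an append-only log from which each consumer reads at its own pace.

```python
from collections import defaultdict


class EventLog:
    """Append-only log; each consumer tracks its own read offset."""

    def __init__(self):
        self._events = []
        self._offsets = defaultdict(int)  # consumer name -> next offset

    def publish(self, event):
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def poll(self, consumer):
        """Return events this consumer has not seen yet and advance its offset."""
        start = self._offsets[consumer]
        batch = self._events[start:]
        self._offsets[consumer] = len(self._events)
        return batch

    def replay(self, from_offset=0):
        """Re-read history without affecting any consumer's offset."""
        return list(self._events[from_offset:])


log = EventLog()
log.publish({"type": "page_view", "user": "u1"})
log.publish({"type": "purchase", "user": "u1", "amount": 30})

analytics_batch = log.poll("analytics")  # sees both events
log.publish({"type": "page_view", "user": "u2"})
analytics_next = log.poll("analytics")   # sees only the new event
billing_batch = log.poll("billing")      # independent consumer sees all three
```

Because the log is never rewritten, a new consumer can be added later and still process the full history, which is what makes the feedback loops mentioned above practical.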

3. Docker and Containers

Containers offer great benefits to developers and operators, as well as to the organization itself. The traditional approach to infrastructure isolation is static partitioning, which assigns each workload its own fixed block of resources (whether a physical server or a virtual machine). Static partitioning can make troubleshooting easier, but the cost of substantially underutilized hardware is high. For example, the average web server is said to use only about 10% of its total available computing power.

The great benefit of container technology is that it creates a new form of isolation. Those familiar with containers may believe they can get the same benefits from tools like Ansible, Puppet, or Chef, but in fact these technologies are highly complementary. No matter how hard enterprises try, these automation tools cannot provide the isolation needed to move workloads freely between different infrastructure and hardware setups. The same container can run on bare-metal hardware in an on-premises data center or on a virtual machine in the public cloud without any changes. This is true workload mobility.
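
The utilization cost of static partitioning can be shown with simple arithmetic. The sketch below uses hypothetical workload sizes (each a percentage of one server's compute) chosen to match the 10% figure above, and a first-fit-decreasing heuristic as a stand-in for whatever placement logic a real scheduler would use. The 80% capacity limit is an assumed headroom, not a recommendation.

```python
def first_fit_decreasing(loads, capacity=80):
    """Pack workload sizes (percent of one server) onto shared servers.

    capacity is the share of a server we allow ourselves to fill,
    leaving the rest as headroom (an assumption for this sketch).
    """
    servers = []  # each entry is the total load placed on that server
    for load in sorted(loads, reverse=True):
        for i, used in enumerate(servers):
            if used + load <= capacity:
                servers[i] += load
                break
        else:
            servers.append(load)  # no existing server had room
    return servers


# Hypothetical workloads, averaging 10% of a server each.
workloads = [10, 10, 10, 15, 5, 20, 10, 10, 5, 5]

static_servers = len(workloads)            # one fixed partition per workload
packed = first_fit_decreasing(workloads)   # workloads share servers

static_utilization = sum(workloads) / (static_servers * 100)
packed_utilization = sum(workloads) / (len(packed) * 100)
print(static_servers, len(packed), static_utilization, packed_utilization)
```

Under these assumptions, ten statically partitioned servers collapse to two shared ones. Sharing hardware this densely is only safe when workloads remain isolated from each other, which is the gap containers are meant to fill.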

4. Container Repositories

Container repositories are critical to agility. Without a DevOps process for building container images and a repository in which to store them, each container would have to be built on every machine before it could run. A repository allows a container image to be launched on any machine that can read the repository. This becomes more complex when working across multiple data centers. If you build a container image in one data center, how do you move the image to another data center? Ideally, by leveraging a converged data platform, enterprises will be able to mirror repositories between data centers.

A key detail here is that mirroring capabilities between on-premises and cloud computing can differ significantly from mirroring capabilities between an enterprise's data centers. Converged data platforms will solve this problem for enterprises by providing these capabilities regardless of whether data center infrastructure or cloud computing infrastructure is used in the organization.
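
The build-once, pull-anywhere idea and the mirroring step can be sketched as follows. This is an in-memory toy, not the Docker registry protocol or any converged data platform's API; the `Registry` class, its methods, and the data center names are hypothetical. Images are identified by a content digest so that mirroring only copies what the target is missing.

```python
import hashlib


class Registry:
    """In-memory stand-in for a container image repository."""

    def __init__(self, name):
        self.name = name
        self._blobs = {}  # digest -> image bytes
        self._tags = {}   # "app:tag" -> digest

    def push(self, tag, image_bytes):
        digest = hashlib.sha256(image_bytes).hexdigest()
        self._blobs[digest] = image_bytes
        self._tags[tag] = digest
        return digest

    def pull(self, tag):
        return self._blobs[self._tags[tag]]

    def mirror_to(self, other):
        """Copy images the other registry is missing; return how many were copied."""
        copied = 0
        for digest, blob in self._blobs.items():
            if digest not in other._blobs:
                other._blobs[digest] = blob
                copied += 1
        other._tags.update(self._tags)
        return copied


dc_east = Registry("dc-east")
dc_west = Registry("dc-west")

digest = dc_east.push("orders-service:1.0", b"fake image layers")  # build once
first_sync = dc_east.mirror_to(dc_west)   # copies the image
second_sync = dc_east.mirror_to(dc_west)  # nothing left to copy
pulled = dc_west.pull("orders-service:1.0")
```

After mirroring, a machine in the second data center can launch the image without rebuilding it, and repeated syncs are cheap because unchanged images are skipped.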

5. Container Orchestration

Each container appears to have its own private operating system rather than a static hardware partition. Unlike virtual machines, containers do not require static partitioning of compute and memory. This enables administrators to launch large numbers of containers on a server without having to worry as much about exact memory allocations. With container orchestration tools like Kubernetes, it becomes very easy to launch containers, move them, and restart them elsewhere in the environment.

Once the new infrastructure components are in place (document databases such as MapR-DB or MongoDB, event streaming platforms such as MapR-ES or Apache Kafka, and orchestration tools such as Kubernetes), and once a DevOps process for building and deploying software in Docker containers has been implemented, the next question is which components should be deployed in those containers.
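
One common way orchestrators restart containers elsewhere is to compare a desired state with the observed state and correct the difference. The sketch below illustrates that idea only; it is not the Kubernetes API, and the `reconcile` function, the node names, and the fewest-replicas placement rule are assumptions made for this example.

```python
def reconcile(desired_replicas, placements, healthy_nodes):
    """Return a placement list with desired_replicas containers on healthy nodes.

    placements lists the node each current replica runs on.
    """
    # Keep replicas that are still running on healthy nodes.
    running = [node for node in placements if node in healthy_nodes]
    running = running[:desired_replicas]  # scale down if there are too many
    nodes = sorted(healthy_nodes)
    while len(running) < desired_replicas and nodes:
        # Place each missing replica on the healthy node with the fewest replicas.
        target = min(nodes, key=running.count)
        running.append(target)
    return running


current = ["node-a", "node-b", "node-c"]

# node-b fails: its replica is restarted on one of the surviving nodes.
after_failure = reconcile(3, current, {"node-a", "node-c"})
print(after_failure)
```

Running the same function again with an unchanged cluster returns the same placements, so the loop can be repeated continuously without side effects.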

6. Microservices

The concept of microservices is not new. The difference today is that the enabling technologies (NoSQL databases, event streaming, container orchestration) can scale to support the creation of thousands of microservices. Without these new approaches to data storage, event streaming, and infrastructure orchestration, large-scale microservice deployments would not be possible: the infrastructure required to manage large volumes of data, events, and container instances would not scale to the required levels.

Microservices are all about providing agility. A microservice usually consists of a single function or a small set of functions. The smaller and more focused the functional units of work are, the easier it is to create, test, and deploy services. These services must be decoupled, or the enterprise will lose the agility that microservices promise. Microservices can depend on other services, but typically through a load-balanced REST API or event streaming. By using event streaming, enterprises can easily track the history of events using request and response topics. This approach has significant troubleshooting benefits, since the entire request flow and all data from the request can be replayed at any point in time.

Because microservices encapsulate a small piece of work, and because they are decoupled from each other, there are few barriers to replacing or upgrading services over time. In the legacy model, relying on tight coupling such as RPC meant closing all connections and then re-establishing them whenever a service was replaced. Load balancing was also a major problem in such implementations, because manual configuration made it error-prone.
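
The request and response topics mentioned above can be sketched with two in-memory lists. Everything here is hypothetical, including the topic names, the `price_service` function, and the event fields; a real deployment would use a streaming platform rather than Python lists. The point is that a correlation id ties a request to its response, so the whole flow can be reconstructed later.

```python
import uuid

topics = {"requests": [], "responses": []}  # hypothetical topic names


def send_request(payload):
    """Publish a request event and return its correlation id."""
    correlation_id = str(uuid.uuid4())
    topics["requests"].append({"id": correlation_id, "payload": payload})
    return correlation_id


def price_service():
    """Answer every request that has no response yet."""
    answered = {event["id"] for event in topics["responses"]}
    for event in topics["requests"]:
        if event["id"] not in answered:
            total = sum(event["payload"]["prices"])
            topics["responses"].append({"id": event["id"], "total": total})


def trace(correlation_id):
    """Replay the full request flow for one id from the recorded topics."""
    return [
        (name, event)
        for name in ("requests", "responses")
        for event in topics[name]
        if event["id"] == correlation_id
    ]


request_id = send_request({"prices": [5, 7, 8]})
price_service()
history = trace(request_id)
```

The caller and the service never reference each other directly, only the topics, which is what keeps them decoupled and makes either side replaceable.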

7. Functions as a Service

Just as we have seen microservices dominate the industry, so too will we see the rise of serverless computing, or, perhaps more accurately, Functions as a Service (FaaS). FaaS creates microservices in such a way that the code can be wrapped in a lightweight framework, built into a container, executed on demand (based on some kind of trigger), and then load balanced automatically, thanks to the lightweight framework. The beauty of FaaS is that it lets developers focus almost entirely on the function itself. FaaS therefore looks like the logical conclusion of the microservices approach.

Triggering events are a key component of FaaS. Without them, there would be no way to invoke functions, and consume resources, only when work needs to be done. The automatic invocation of functions is what makes FaaS truly valuable. Imagine that every time someone reads a user's profile, an audit event is generated, and a function must run to notify the security team. More specifically, the function might filter for only certain types of records. It can be selective; after all, it is a fully customizable business function. Completing such a workflow is very simple with a deployment model like FaaS.
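
The profile-read audit example can be sketched as a small trigger registry. This does not reflect the API of any hosted FaaS product; the `on_event` decorator, the `dispatch` function, and the event fields such as `record_class` are hypothetical names chosen for illustration. The sketch shows a function that runs only when a matching event arrives and that filters for certain record types.

```python
from collections import defaultdict

_triggers = defaultdict(list)  # event type -> list of (predicate, function)


def on_event(event_type, when=lambda event: True):
    """Register a function to run when a matching event arrives."""
    def register(func):
        _triggers[event_type].append((when, func))
        return func
    return register


def dispatch(event):
    """Invoke every function whose trigger matches; return their results."""
    return [
        func(event)
        for predicate, func in _triggers[event["type"]]
        if predicate(event)
    ]


notifications = []  # stand-in for messages sent to the security team


@on_event("profile_read", when=lambda e: e["record_class"] == "sensitive")
def notify_security(event):
    message = f"{event['reader']} read {event['profile']}"
    notifications.append(message)
    return message


dispatch({"type": "profile_read", "reader": "alice",
          "profile": "bob", "record_class": "public"})
dispatch({"type": "profile_read", "reader": "carol",
          "profile": "bob", "record_class": "sensitive"})
```

Only the second event reaches `notify_security`, so no work is done and no resources are consumed for reads that the business has chosen not to audit.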

Putting Events Together

The magic behind triggering services is really just events in the event stream. Certain types of events are used as triggers more often than others, but any event that a business wishes to use as a trigger can become one. A triggering event could be a document update, which starts an OCR process on the new document and then adds the text from the OCR process to the NoSQL database. For a more interesting case, image recognition and scoring could be performed through a machine learning framework whenever an image is uploaded. There are no fundamental limitations here. Once a trigger event is defined, the sequence is always the same: the event occurs, the event triggers the function, and the function completes its work.

FaaS will be the next stage in the adoption of microservices. However, there is one major factor that must be considered when approaching FaaS, and that is vendor lock-in. FaaS hides specific storage mechanisms, specific hardware infrastructure, and orchestration, which are all great things for developers. But because of this abstraction, hosted FaaS offerings represent one of the greatest opportunities for vendor lock-in the IT industry has ever seen. Because these APIs are not standardized, migrating away from a FaaS offering in the public cloud is nearly impossible without losing almost all of the work that has been done. If FaaS is approached in a more organized manner, by leveraging events from converged data platforms, moving between cloud providers becomes easier.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

PHP's big data structure processing skills

May 08, 2024 am 10:24 AM

PHP's big data structure processing skills

May 08, 2024 am 10:24 AM

Big data structure processing skills: Chunking: Break down the data set and process it in chunks to reduce memory consumption. Generator: Generate data items one by one without loading the entire data set, suitable for unlimited data sets. Streaming: Read files or query results line by line, suitable for large files or remote data. External storage: For very large data sets, store the data in a database or NoSQL.

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

Five major development trends in the AEC/O industry in 2024

Apr 19, 2024 pm 02:50 PM

Five major development trends in the AEC/O industry in 2024

Apr 19, 2024 pm 02:50 PM

AEC/O (Architecture, Engineering & Construction/Operation) refers to the comprehensive services that provide architectural design, engineering design, construction and operation in the construction industry. In 2024, the AEC/O industry faces changing challenges amid technological advancements. This year is expected to see the integration of advanced technologies, heralding a paradigm shift in design, construction and operations. In response to these changes, industries are redefining work processes, adjusting priorities, and enhancing collaboration to adapt to the needs of a rapidly changing world. The following five major trends in the AEC/O industry will become key themes in 2024, recommending it move towards a more integrated, responsive and sustainable future: integrated supply chain, smart manufacturing

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

1. Background of the Construction of 58 Portraits Platform First of all, I would like to share with you the background of the construction of the 58 Portrait Platform. 1. The traditional thinking of the traditional profiling platform is no longer enough. Building a user profiling platform relies on data warehouse modeling capabilities to integrate data from multiple business lines to build accurate user portraits; it also requires data mining to understand user behavior, interests and needs, and provide algorithms. side capabilities; finally, it also needs to have data platform capabilities to efficiently store, query and share user profile data and provide profile services. The main difference between a self-built business profiling platform and a middle-office profiling platform is that the self-built profiling platform serves a single business line and can be customized on demand; the mid-office platform serves multiple business lines, has complex modeling, and provides more general capabilities. 2.58 User portraits of the background of Zhongtai portrait construction

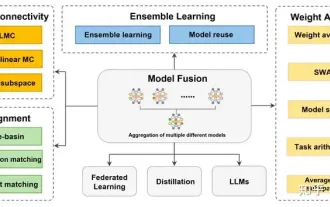

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

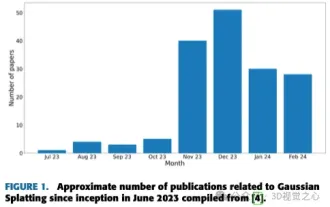

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up

Discussion on the reasons and solutions for the lack of big data framework in Go language

Mar 29, 2024 pm 12:24 PM

Discussion on the reasons and solutions for the lack of big data framework in Go language

Mar 29, 2024 pm 12:24 PM

In today's big data era, data processing and analysis have become an important support for the development of various industries. As a programming language with high development efficiency and superior performance, Go language has gradually attracted attention in the field of big data. However, compared with other languages such as Java and Python, Go language has relatively insufficient support for big data frameworks, which has caused trouble for some developers. This article will explore the main reasons for the lack of big data framework in Go language, propose corresponding solutions, and illustrate it with specific code examples. 1. Go language