Concurrent implementation of curl function in PHP reduces backend access time

Concurrent implementation of curl function in PHP reduces backend access time

Concurrent implementation of curl function in PHP reduces backend access time

This article shows how to run PHP curl requests concurrently to reduce the time spent calling backends. It may be a useful reference if you need it.

First, let's look at PHP's curl_multi_* functions:

- curl_multi_add_handle
- curl_multi_close
- curl_multi_exec
- curl_multi_getcontent
- curl_multi_info_read
- curl_multi_init
- curl_multi_remove_handle
- curl_multi_select

Generally speaking, the point of using these functions is to request multiple URLs at the same time rather than one after another. Otherwise, you might as well just call curl_exec in a loop yourself.

The steps are summarized as follows:

Step 1: Call curl_multi_init.

Step 2: Call curl_multi_add_handle in a loop.

Note that the second argument of curl_multi_add_handle is a sub-handle created by curl_init.

Step 3: Call curl_multi_exec repeatedly until all transfers have finished.

Step 4: Loop over the sub-handles and call curl_multi_getcontent to obtain the results as needed.

Step 5: For each sub-handle, call curl_multi_remove_handle and then curl_close.

Step 6: Call curl_multi_close.
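The six steps above can be put together roughly as follows. This is a minimal sketch rather than the article's own code: `fetch_all()` is a hypothetical helper name, the 5-second default timeout is an assumption, and Step 3 already waits with `curl_multi_select()` instead of looping flat out (the reason is explained below). It also assumes libcurl 7.20.0 or newer, where `curl_multi_exec()` no longer returns `CURLM_CALL_MULTI_PERFORM`.

```php
<?php
// A minimal sketch of Steps 1-6; fetch_all() is a hypothetical helper name.
// Returns what curl_multi_getcontent() gives for each key of $urls.
function fetch_all(array $urls, int $timeout = 5): array
{
    $mh = curl_multi_init();                          // Step 1
    $handles = [];
    foreach ($urls as $key => $url) {                 // Step 2
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);
        curl_multi_add_handle($mh, $ch);              // second argument: a sub-handle from curl_init
        $handles[$key] = $ch;
    }

    do {                                              // Step 3
        // libcurl >= 7.20.0 never returns CURLM_CALL_MULTI_PERFORM,
        // so anything other than CURLM_OK is treated as an error here.
        if (curl_multi_exec($mh, $running) !== CURLM_OK) {
            break;
        }
        if ($running > 0) {
            curl_multi_select($mh, 1.0);              // wait for activity instead of spinning
        }
    } while ($running > 0);

    $results = [];
    foreach ($handles as $key => $ch) {
        $results[$key] = curl_multi_getcontent($ch);  // Step 4
        curl_multi_remove_handle($mh, $ch);           // Step 5
        curl_close($ch);                              // has no effect since PHP 8.0
    }
    curl_multi_close($mh);                            // Step 6
    return $results;
}
```

The returned array keeps the keys of `$urls`, so callers can look results up by name rather than by position.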

Here is a simple example found online. Its author calls it a "quick and dirty" example (I will explain why it is dirty later):

```php
<?php
/*
Here's a quick and dirty example for curl-multi from PHP, tested on PHP 5.0.0RC1 CLI / FreeBSD 5.2.1
*/
$connomains = array(
    "http://www.cnn.com/",
    "http://www.canada.com/",
    "http://www.yahoo.com/"
);

$mh = curl_multi_init();

foreach ($connomains as $i => $url) {
    $conn[$i] = curl_init($url);
    curl_setopt($conn[$i], CURLOPT_RETURNTRANSFER, 1);
    curl_multi_add_handle($mh, $conn[$i]);
}

do { $n = curl_multi_exec($mh, $active); } while ($active);

foreach ($connomains as $i => $url) {
    $res[$i] = curl_multi_getcontent($conn[$i]);
    curl_close($conn[$i]);
}

print_r($res);
```

That is essentially the whole process. However, this simple code has a serious weakness: the do loop spins continuously (busy-waits) for the entire time the URLs are being requested, which can easily push CPU usage to 100%.

Now let's improve it. For this we need curl_multi_select, a function that had almost no documentation at the time. Although libcurl's C API documents its select-based usage, the PHP interface and usage differ from the C ones.

Change the do section above to the following:

```php
do {
    $mrc = curl_multi_exec($mh, $active);
} while ($mrc == CURLM_CALL_MULTI_PERFORM);

while ($active && $mrc == CURLM_OK) {
    if (curl_multi_select($mh) != -1) {
        do {
            $mrc = curl_multi_exec($mh, $active);
        } while ($mrc == CURLM_CALL_MULTI_PERFORM);
    }
}
```

Because $active stays true until all of the URL data has been received, the loop uses the return value of curl_multi_exec to decide whether there is more data to process right away. While there is, curl_multi_exec is called repeatedly; when there is nothing for the moment, the loop enters the select stage and is woken up as soon as new data arrives. The advantage is that no CPU time is wasted spinning.
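The loop above can be wrapped in a reusable helper. The sketch below is a variation, not the original: `wait_for_multi()` is a hypothetical name, and it differs in one respect. On some PHP/libcurl combinations `curl_multi_select()` has been known to return -1 right away; with the loop above, that would spin without ever calling `curl_multi_exec()` again. Here a -1 result triggers a short sleep (10 ms, an arbitrary choice) and `curl_multi_exec()` is called regardless.

```php
<?php
// Sketch: drive a multi handle until every transfer has finished without
// busy-waiting. wait_for_multi() is a hypothetical helper name; it returns
// the last CURLM_* code from curl_multi_exec().
function wait_for_multi($mh, float $selectTimeout = 1.0): int
{
    do {
        $mrc = curl_multi_exec($mh, $active);
    } while ($mrc === CURLM_CALL_MULTI_PERFORM);

    while ($active && $mrc === CURLM_OK) {
        if (curl_multi_select($mh, $selectTimeout) === -1) {
            // select could not wait: sleep 10 ms so the loop does not spin,
            // then fall through and call curl_multi_exec() anyway.
            usleep(10000);
        }
        do {
            $mrc = curl_multi_exec($mh, $active);
        } while ($mrc === CURLM_CALL_MULTI_PERFORM);
    }
    return $mrc;
}
```

After adding the handles, a caller would check `wait_for_multi($mh) === CURLM_OK` and then read each handle with `curl_multi_getcontent()` as before.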

A few more details you may run into:

To control the timeout of each request, set it with curl_setopt before calling curl_multi_add_handle:

```php
curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);
```

To check whether a request timed out or hit another error, call curl_error($conn[$i]) before curl_multi_getcontent.
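Putting the timeout and the error check together, here is a sketch that reports a status for every request. Two assumptions to flag: `fetch_with_status()` and its return layout are hypothetical, and instead of `curl_error()` it reads each handle's result code from `curl_multi_info_read()`, because depending on the PHP version `curl_errno()`/`curl_error()` may stay empty for a multi transfer until that handle has been reported by `curl_multi_info_read()`. The value 28 is libcurl's "operation timed out" code (`CURLE_OPERATION_TIMEDOUT`).

```php
<?php
// Sketch: per-request timeout plus a status for every request.
// fetch_with_status() and its return layout are hypothetical.
function fetch_with_status(array $urls, int $timeout = 3): array
{
    $mh = curl_multi_init();
    $handles = [];
    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeout); // set before curl_multi_add_handle
        curl_multi_add_handle($mh, $ch);
        $handles[$key] = $ch;
    }

    $codes = []; // key => CURLcode reported by curl_multi_info_read()
    do {
        if (curl_multi_exec($mh, $running) !== CURLM_OK) {
            break;
        }
        // Each finished transfer is reported once, with its CURLcode in 'result'.
        while (($done = curl_multi_info_read($mh)) !== false) {
            if ($done['msg'] === CURLMSG_DONE) {
                $codes[array_search($done['handle'], $handles, true)] = $done['result'];
            }
        }
        if ($running > 0 && curl_multi_select($mh, 1.0) === -1) {
            usleep(10000); // back off if select cannot wait
        }
    } while ($running > 0);

    $out = [];
    foreach ($handles as $key => $ch) {
        $code = $codes[$key] ?? null; // null: no completion message was seen
        if ($code === null) {
            $error = 'no completion message';
        } elseif ($code === CURLE_OK) {
            $error = '';
        } else {
            $error = (string) curl_strerror($code);
        }
        $out[$key] = [
            'body'      => $code === CURLE_OK ? curl_multi_getcontent($ch) : null,
            'errno'     => $code,
            'error'     => $error,
            'timed_out' => $code === 28, // 28 = libcurl's CURLE_OPERATION_TIMEDOUT
        ];
        curl_multi_remove_handle($mh, $ch);
    }
    curl_multi_close($mh);
    return $out;
}
```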

Here I simply used the dirty example above (it was good enough, and I did not see 100% CPU usage) to simulate concurrent load on an API of "Kandian.com" that reads and writes data to memcache. For confidentiality, the data and results are not posted. I ran the simulation three times: first with 10 concurrent requests, 1,000 times; second with 100 concurrent requests, 1,000 times; and third with 1,000 concurrent requests, 100 times (this was already quite strenuous, and I did not dare go beyond 1,000 concurrent handles). It seems that simulating concurrency with curl_multi still has some limitations. We also suspected that delays between the concurrent requests might introduce a large error into the results, but comparing the data showed little difference in the time taken by initialization and set; the difference lay in the get method, so this concern can easily be ruled out.

Normally, cURL in PHP runs in blocking mode: after creating a cURL request, you must wait until it succeeds or times out before the next request can run. The curl_multi_* family of functions makes concurrent access possible. The PHP documentation does not describe these functions in much detail.
The usage is as follows:

```php
<?php
$requests = array('http://www.baidu.com', 'http://www.google.com');
$main = curl_multi_init();
$handles = array();
$results = array();
$errors = array();
$info = array();
$count = count($requests);
for ($i = 0; $i < $count; $i++) {
    $handles[$i] = curl_init($requests[$i]);
    curl_setopt($handles[$i], CURLOPT_URL, $requests[$i]);
    curl_setopt($handles[$i], CURLOPT_RETURNTRANSFER, 1);
    curl_multi_add_handle($main, $handles[$i]);
}
$running = 0;
do {
    curl_multi_exec($main, $running);
    // Wait for network activity instead of spinning (see the CPU discussion above)
    if ($running > 0) {
        curl_multi_select($main);
    }
} while ($running > 0);
for ($i = 0; $i < $count; $i++) {
    $results[] = curl_multi_getcontent($handles[$i]);
    $errors[] = curl_error($handles[$i]);
    $info[] = curl_getinfo($handles[$i]);
    curl_multi_remove_handle($main, $handles[$i]);
    curl_close($handles[$i]);
}
curl_multi_close($main);
var_dump($results);
var_dump($errors);
var_dump($info);
```
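When there are many URLs (the load test above went up to 1,000 simultaneous handles), one common approach is to cap the number of transfers in flight and start a new one each time one finishes, sometimes called a "rolling" window. This is a separate technique from the examples in this article: `fetch_rolling()` and the default window of 10 are assumptions, it relies on `curl_multi_info_read()` to learn which handle finished, and it needs PHP 7.3+ for `array_key_first()`. Results are keyed like the input but are filled in completion order.

```php
<?php
// Sketch of a "rolling" window: at most $maxInFlight transfers run at once,
// and a new one starts whenever one finishes. fetch_rolling() is hypothetical.
function fetch_rolling(array $urls, int $maxInFlight = 10): array
{
    $mh = curl_multi_init();
    $queue = $urls;   // key => url, not started yet
    $inFlight = [];   // key => curl handle currently added to $mh
    $results = [];    // key => body, or false on a transfer error

    $startNext = function () use ($mh, &$queue, &$inFlight) {
        $key = array_key_first($queue);
        $ch = curl_init($queue[$key]);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_multi_add_handle($mh, $ch);
        $inFlight[$key] = $ch;
        unset($queue[$key]);
    };

    while ($queue && count($inFlight) < $maxInFlight) {
        $startNext(); // fill the initial window
    }

    while ($inFlight) {
        if (curl_multi_exec($mh, $running) !== CURLM_OK) {
            break; // libcurl >= 7.20.0: anything but CURLM_OK is an error
        }
        while (($done = curl_multi_info_read($mh)) !== false) {
            if ($done['msg'] !== CURLMSG_DONE) {
                continue;
            }
            $key = array_search($done['handle'], $inFlight, true);
            $results[$key] = $done['result'] === CURLE_OK
                ? curl_multi_getcontent($done['handle'])
                : false;
            curl_multi_remove_handle($mh, $done['handle']);
            unset($inFlight[$key]);
            if ($queue) {
                $startNext(); // reuse the freed slot
            }
        }
        if ($inFlight && curl_multi_select($mh, 1.0) === -1) {
            usleep(10000); // back off if select cannot wait
        }
    }
    curl_multi_close($mh);
    return $results;
}
```

The window size trades throughput against load on the backend and on the client; it is a tuning knob, not a fixed value.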

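Preface: In everyday programs we inevitably need to call several APIs at once. When we use curl, we normally access them one at a time, in sequence. If there are 3 APIs and each takes 500 milliseconds, the three calls take 1,500 milliseconds in total. This is a real headache and seriously slows down page loads. Can we call them concurrently to speed things up? Today I will briefly explain how to use curl concurrency to speed up page access; suggestions are welcome.

1. The old (sequential) curl approach and its timing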

```php
<?php
function curl_fetch($url, $timeout = 3) {
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, $url);
    curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    $data = curl_exec($ch);
    $errno = curl_errno($ch);
    if ($errno > 0) {
        $data = false;
    }
    curl_close($ch);
    return $data;
}

function microtime_float() {
    list($usec, $sec) = explode(" ", microtime());
    return ((float)$usec + (float)$sec);
}

$url_arr = array(
    "taobao" => "http://www.taobao.com",
    "sohu" => "http://www.sohu.com",
    "sina" => "http://www.sina.com.cn",
);

$time_start = microtime_float();
$data = array();
foreach ($url_arr as $key => $val) {
    $data[$key] = curl_fetch($val);
}
$time_end = microtime_float();
$time = $time_end - $time_start;
echo "Time taken: {$time}";
?>
```

Time taken: 0.614 seconds

2. Concurrent curl access and its timing

```php
<?php
function curl_multi_fetch($urlarr = array()) {
    $result = $res = $ch = array();
    $nch = 0;
    $mh = curl_multi_init();
    foreach ($urlarr as $nk => $url) {
        $timeout = 2;
        $ch[$nch] = curl_init();
        curl_setopt_array($ch[$nch], array(
            CURLOPT_URL => $url,
            CURLOPT_HEADER => false,
            CURLOPT_RETURNTRANSFER => true,
            CURLOPT_TIMEOUT => $timeout,
        ));
        curl_multi_add_handle($mh, $ch[$nch]);
        ++$nch;
    }

    /* wait for performing request */
    do {
        $mrc = curl_multi_exec($mh, $running);
    } while (CURLM_CALL_MULTI_PERFORM == $mrc);

    while ($running && $mrc == CURLM_OK) {
        // wait for network
        if (curl_multi_select($mh, 0.5) > -1) {
            // pull in new data
            do {
                $mrc = curl_multi_exec($mh, $running);
            } while (CURLM_CALL_MULTI_PERFORM == $mrc);
        }
    }

    if ($mrc != CURLM_OK) {
        error_log("CURL Data Error");
    }

    /* get data */
    $nch = 0;
    foreach ($urlarr as $module => $node) {
        if (($err = curl_error($ch[$nch])) == '') {
            $res[$nch] = curl_multi_getcontent($ch[$nch]);
            $result[$module] = $res[$nch];
        } else {
            error_log("curl error: {$err}");
        }
        curl_multi_remove_handle($mh, $ch[$nch]);
        curl_close($ch[$nch]);
        ++$nch;
    }
    curl_multi_close($mh);
    return $result;
}

$url_arr = array(
    "taobao" => "http://www.taobao.com",
    "sohu" => "http://www.sohu.com",
    "sina" => "http://www.sina.com.cn",
);

function microtime_float() {
    list($usec, $sec) = explode(" ", microtime());
    return ((float)$usec + (float)$sec);
}

$time_start = microtime_float();
$data = curl_multi_fetch($url_arr);
$time_end = microtime_float();
$time = $time_end - $time_start;
echo "Time taken: {$time}";
?>
```

Time taken: 0.316 seconds
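The saving follows from a simple model: called one after another, the requests cost the sum of their latencies; called concurrently, they cost roughly as much as the slowest one. The helper below is a hypothetical name and a deliberately rough model (it ignores connection setup and scheduling overhead); it works through the preface's example of three APIs at 500 ms each.

```php
<?php
// Rough model (an assumption, not a measurement):
// sequential cost = sum of latencies, concurrent cost ~= slowest request.
function estimate_latency_ms(array $latenciesMs): array
{
    return [
        'sequential' => array_sum($latenciesMs),
        'concurrent' => $latenciesMs ? max($latenciesMs) : 0,
    ];
}

// The preface's example: three APIs at 500 ms each.
$estimate = estimate_latency_ms([500, 500, 500]); // ['sequential' => 1500, 'concurrent' => 500]
```

Real measurements, such as the 0.614 s and 0.316 s above, will not match the model exactly, because each site responds in a different amount of time.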

Not bad: the time the page spends calling the backend APIs is cut roughly in half.

3. curl-related functions

curl_close — Close a cURL session

curl_copy_handle — Copy a cURL handle along with all of its preferences

curl_errno — Return the last error number

curl_error — Return a string containing the last error for the current session

curl_exec — Perform a cURL session

curl_getinfo — Get information regarding a specific transfer

curl_init — Initialize a cURL session

curl_multi_add_handle — Add a normal cURL handle to a cURL multi handle

curl_multi_close — Close a set of cURL handles

curl_multi_exec — Run the sub-connections of the current cURL handle

curl_multi_getcontent — Return the content of a cURL handle if CURLOPT_RETURNTRANSFER is set

curl_multi_info_read — Get information about the current transfers

curl_multi_init — Returns a new cURL multi handle

curl_multi_remove_handle — Remove a cURL handle from a multi handle

curl_multi_select — Wait for activity on any curl_multi connection

curl_setopt_array — Set multiple options for a cURL transfer

curl_setopt — Set an option for a cURL transfer

curl_version — Gets cURL version information
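As a small illustration of `curl_getinfo()` from the list above, the sketch below records each transfer's total time after a curl_multi run, which can help show which backend call dominates a page's wait. `timed_fetch()` is a hypothetical helper name; `CURLINFO_TOTAL_TIME` is reported by libcurl in seconds, as a float.

```php
<?php
// Sketch: per-transfer timings after a curl_multi run, slowest first.
// timed_fetch() is a hypothetical helper name.
function timed_fetch(array $urls): array
{
    $mh = curl_multi_init();
    $handles = [];
    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_multi_add_handle($mh, $ch);
        $handles[$key] = $ch;
    }

    do {
        if (curl_multi_exec($mh, $running) !== CURLM_OK) {
            break;
        }
        if ($running > 0 && curl_multi_select($mh, 1.0) === -1) {
            usleep(10000); // back off if select cannot wait
        }
    } while ($running > 0);

    $timings = [];
    foreach ($handles as $key => $ch) {
        // Total time of this transfer in seconds, as measured by libcurl.
        $timings[$key] = curl_getinfo($ch, CURLINFO_TOTAL_TIME);
        curl_multi_remove_handle($mh, $ch);
    }
    curl_multi_close($mh);
    arsort($timings); // slowest transfer first, keys preserved
    return $timings;
}
```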

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1375

1375

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

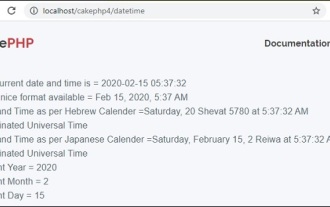

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

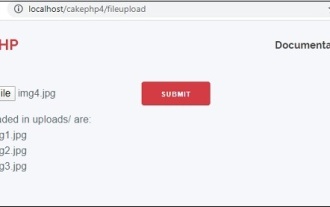

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

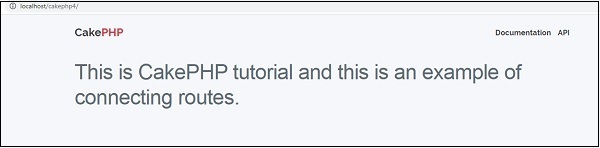

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

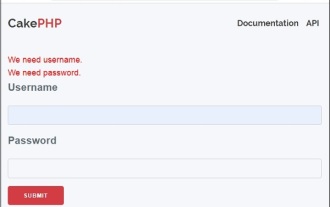

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

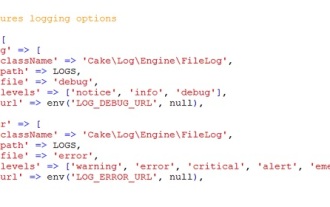

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

Logging in CakePHP is a very easy task. You just have to use one function. You can log errors, exceptions, user activities, action taken by users, for any background process like cronjob. Logging data in CakePHP is easy. The log() function is provide