PHP interview question 7: How to configure nginx load balancing

This article covers PHP interview question 7: how to configure nginx load balancing. It may be a useful reference for anyone who needs it.

Load balancing

nginx has 5 load balancing modes, three built in and two provided by third-party modules:

1), Round robin (default)

Requests are assigned to the back-end servers one by one in the order they arrive. If a back-end server goes down, it is automatically removed from rotation.

2), weight

Sets the round-robin ratio: a server's share of requests is proportional to its weight. Use it when back-end servers differ in performance.

3), ip_hash

Each request is assigned according to a hash of the client IP, so a given visitor always reaches the same back-end server, which solves the session problem.

4), fair (third party)

Requests are assigned according to back-end response time; servers with shorter response times are preferred.

5), url_hash (third party)

Requests are assigned according to a hash of the requested URL, so each URL always goes to the same back-end server, which helps when the back ends cache content.
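The two third-party modes can be pictured with a small Python sketch. This is only an illustration of the ideas described above, not the code of either module: the response-time table, the use of crc32 as the hash, and the function names are all assumptions made for the example.

```python
import zlib

def pick_fair(avg_response_ms):
    """Return the back end with the shortest average response time."""
    return min(avg_response_ms, key=avg_response_ms.get)

def pick_by_url(url, backends):
    """Map a URL to one back end so repeated requests reach the same server."""
    # crc32 is a stand-in hash chosen for the example.
    return backends[zlib.crc32(url.encode()) % len(backends)]

backends = ["192.168.0.1:8080", "192.168.0.2:8080"]

# Hypothetical measurements: the second server answers faster.
fastest = pick_fair({"192.168.0.1:8080": 120.0, "192.168.0.2:8080": 45.0})
print(fastest)
print(pick_by_url("/index.html", backends))
```

The point of the URL variant is stability: the same URL always maps to the same back end, so a cache on that back end keeps being reused.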

Configuration method:

Open the nginx.conf file

Add an upstream block inside the http block:

upstream webname {

server 192.168.0.1:8080;

server 192.168.0.2:8080;

}

webname is a name of your choosing; it is referenced later in the proxy_pass URL. With no extra parameters, as in the example above, the default round-robin mode applies: the first request goes to the first server, the second request goes to the second server, and so on in turn.
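The alternation described above can be sketched in a few lines of Python. This only models the order of selection under the assumption that both servers stay healthy; it is not how nginx is implemented.

```python
from itertools import cycle

# Back-end list mirroring the upstream block above.
servers = ["192.168.0.1:8080", "192.168.0.2:8080"]

def round_robin(backends):
    """Return an iterator that yields back ends in order, wrapping around."""
    return cycle(backends)

picker = round_robin(servers)
first_four = [next(picker) for _ in range(4)]
print(first_four)
```

Four consecutive requests alternate between the two servers, starting with the first one listed.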

upstream webname {

server 192.168.0.1:8080 weight=2;

server 192.168.0.2:8080 weight=1;

}

The greater the weight, the larger the share of requests a server receives. In the example above, out of every three requests, two go to server1 and one goes to server2.
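To see how a 2:1 weight turns into a request sequence, here is a minimal Python sketch of a smooth weighted round robin. It is a simplified model for illustration, under the assumption that all servers stay healthy; the names server1 and server2 stand for the two entries in the upstream block.

```python
def weighted_round_robin(weights, n):
    """Return n picks using a smooth weighted round robin.

    weights maps a back-end name to its integer weight.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every back end earns credit equal to its weight.
        for name, weight in weights.items():
            current[name] += weight
        # The back end with the most credit is chosen and pays back the total.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks

picks = weighted_round_robin({"server1": 2, "server2": 1}, 6)
print(picks)
```

Over six requests server1 is chosen four times and server2 twice, and the picks are interleaved rather than sent to server1 in one burst.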

upstream webname {

ip_hash;

server 192.168.0.1:8080;

server 192.168.0.2:8080;

}

Configuring ip_hash is just as simple: add one line, and every request coming from the same IP goes to the same server.
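The effect of ip_hash can be sketched as follows. The nginx documentation states that the first three octets of an IPv4 address are used as the hashing key; the crc32 hash and the sample client addresses below are assumptions for the example, not nginx's internal hash function.

```python
import zlib

servers = ["192.168.0.1:8080", "192.168.0.2:8080"]

def pick_by_ip(client_ip, backends):
    """Map an IPv4 client address to one back end, deterministically."""
    # Key on the first three octets, as ip_hash is documented to do.
    key = ".".join(client_ip.split(".")[:3])
    # crc32 is a stand-in hash chosen for the example.
    return backends[zlib.crc32(key.encode()) % len(backends)]

# Hypothetical clients from the same /24 network.
print(pick_by_ip("203.0.113.7", servers))
print(pick_by_ip("203.0.113.200", servers))
```

Because the key ignores the last octet, both sample clients land on the same back end, and a given client keeps reaching the server that holds its session.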

Then configure the following inside the server block:

location /name {

proxy_pass http://webname/name/;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

In proxy_pass, the webname configured above replaces the original IP address.

This basically completes the load balancing configuration.

The following is the active/backup configuration.

It is also set in the upstream block:

upstream webname {

server 192.168.0.1:8080;

server 192.168.0.2:8080 backup;

}

When a node is marked backup, all requests normally go to server1; server2 is used only when server1 is down or busy.

When a node is marked down, that server does not take part in load balancing:

upstream webname {

server 192.168.0.1:8080;

server 192.168.0.2:8080 down;

}
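The backup and down flags described above can be modeled with a short Python sketch. The third address and the `alive` set (a view of which servers currently respond) are hypothetical; the sketch only shows the selection rule, not how nginx detects failures.

```python
def eligible(backends, alive):
    """Return the back ends that may receive traffic right now.

    backends: list of (address, flag) pairs; flag is None, "backup" or "down".
    alive: set of addresses that currently respond.
    """
    primaries = [addr for addr, flag in backends if flag is None and addr in alive]
    if primaries:
        return primaries
    # Backups are considered only when no primary server is usable.
    # Servers flagged "down" never appear in either list.
    return [addr for addr, flag in backends if flag == "backup" and addr in alive]

backends = [
    ("192.168.0.1:8080", None),
    ("192.168.0.2:8080", "backup"),
    ("192.168.0.3:8080", "down"),  # hypothetical third server
]
everyone = {addr for addr, _ in backends}

print(eligible(backends, everyone))
print(eligible(backends, everyone - {"192.168.0.1:8080"}))
```

With every server responding, only server1 is eligible; once server1 stops responding, the backup takes over, and the server marked down is never used.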

Implementation Example

Load balancing is something every high-traffic website needs. This section walks through a load balancing configuration on an nginx server; I hope it helps those who need it.

Load Balancing

First, a brief look at what load balancing is. Taken literally, it means that N servers share the load evenly, which avoids the situation where one server is overloaded or down while another sits idle. Load balancing therefore requires multiple servers; two or more are enough.

Test environment

Since no real servers are available, this test maps the chosen domain name in the hosts file and runs three CentOS installations in VMware.

Test domain name: a.com

A server IP: 192.168.5.149 (main)

B server IP: 192.168.5.27

C server IP: 192.168.5.126

Deployment idea

Server A acts as the main server. The domain name resolves directly to server A (192.168.5.149), and server A load balances to server B (192.168.5.27) and server C (192.168.5.126).

Domain name resolution

Since this is not a real environment, a.com is only a test domain, so it can only be resolved through the hosts file.

Open:

C:\Windows\System32\drivers\etc\hosts

Add

192.168.5.149 a.com

at the end, save and exit, then open a command prompt and ping a.com to check that the setting works.

The ping result shows that a.com now resolves to 192.168.5.149.

A server nginx.conf settings

Open nginx.conf; the file is in the conf directory under the nginx installation directory.

Add the following code to the http section

upstream a.com {

server 192.168.5.126:80;

server 192.168.5.27:80;

}

server{

listen 80;

server_name a.com;

location / {

proxy_pass http://a.com;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

Save and restart nginx

B and C server nginx.conf settings

Open nginx.conf and add the following code to the http section

server{

listen 80;

server_name a.com;

index index.html;

root /data0/htdocs/www;

}

Save and restart nginx

Test

To tell which server handles a request to a.com, I put an index.html file with different content on servers B and C.

Open a.com in the browser and refresh. You will see that the main server (192.168.5.149) distributes the requests between server B (192.168.5.27) and server C (192.168.5.126), which is the load balancing effect we wanted.

B server processing page

C server processing page

What if one of the servers goes down?

When a certain server goes down, will access be affected?

Let's look at an example first. Building on the setup above, assume server C (192.168.5.126) goes down (a real crash cannot be simulated, so I simply shut server C down), then visit the site again.

Access results:

Although server C (192.168.5.126) was down, website access was not affected. In load balancing mode, you do not have to worry about one failed machine dragging down the entire site.
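The behavior observed in this test can be pictured with a small Python sketch of a proxy that moves on to the next back end when one cannot be reached. The `fake_send` transport is hypothetical and stands in for a real network call; in nginx itself this behavior is governed by directives such as proxy_next_upstream, max_fails and fail_timeout.

```python
def forward(request, backends, send):
    """Try each back end in turn and return the first successful response."""
    for address in backends:
        try:
            return send(address, request)
        except ConnectionError:
            # This back end is unreachable; move on to the next one.
            continue
    raise ConnectionError("no back end available")

def fake_send(address, request):
    """Hypothetical transport in which server C (192.168.5.126) is shut down."""
    if address == "192.168.5.126:80":
        raise ConnectionError(address)
    return f"{address} handled {request}"

result = forward("GET /", ["192.168.5.126:80", "192.168.5.27:80"], fake_send)
print(result)
```

The request that would have gone to server C is answered by server B instead, so the visitor never sees the failure.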

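What if b.com also needs load balancing?

It is simple: the setup is the same as for a.com, as follows.

Assume the main server for b.com is 192.168.5.149, load balancing to the machines 192.168.5.150 and 192.168.5.151.

First resolve the domain name b.com to 192.168.5.149.

Add the following code to nginx.conf on the main server (192.168.5.149):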

upstream b.com {

server 192.168.5.150:80;

server 192.168.5.151:80;

}

server{

listen 80;

server_name b.com;

location / {

proxy_pass http://b.com;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

Save and restart nginx

Set up nginx on machines 192.168.5.150 and 192.168.5.151: open nginx.conf and add the following code at the end of the http section:

server{

listen 80;

server_name b.com;

index index.html;

root /data0/htdocs/www;

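}

Save and restart nginx

Once these steps are complete, load balancing for b.com is in place.

Can't the main server serve requests too?

In the examples above, the main server only load balances to other servers. Can the main server itself be added to the server list, so that a machine is not wasted purely on forwarding and also takes part in serving the site?

Take the three servers from the example above:

A server IP: 192.168.5.149 (main)

B server IP: 192.168.5.27

C server IP: 192.168.5.126

The domain name resolves to server A, which forwards to servers B and C, so server A only does forwarding. Now let server A serve the site as well.

Let's analyze this first. If the main server is added to upstream, one of two things can happen:

1), The main server forwards the request to another IP, and that server handles it normally;

2), The main server forwards the request to its own IP, where it enters the main server's upstream selection again; if it keeps being assigned to the local machine, the result is an infinite loop.

How can this be solved? Port 80 is already used to listen for load-balanced traffic, so this server can no longer use port 80 to handle a.com requests itself and needs a new port. So add the following code to the main server's nginx.conf: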

server{

listen 8080;

server_name a.com;

index index.html;

root /data0/htdocs/www;

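}

Restart nginx and enter a.com:8080 in the browser to check that it is reachable. The page loads normally.

Since it is reachable, the main server can be added to upstream, but with the port changed, as in the following code: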

upstream a.com {

server 192.168.5.126:80;

server 192.168.5.27:80;

server 127.0.0.1:8080;

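}

Here either the main server's IP 192.168.5.149 or 127.0.0.1 can be used; both refer to the machine itself.

Restart nginx, then visit a.com again to see whether requests are assigned to the main server.

The main server now serves requests normally as well.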
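The reasoning behind the port change can be illustrated with a toy Python model. It assumes the balancer listens on 192.168.5.149:80 and simply follows a list of upstream picks; the hop limit and function name are invented for the example and do not correspond to any nginx setting.

```python
PROXY = "192.168.5.149:80"  # address where the load balancer itself listens

def deliver(picks, max_hops=5):
    """Follow a sequence of upstream picks and report where the request ends.

    picks lists the addresses the balancer would choose, hop by hop.
    """
    for hop, address in enumerate(picks[:max_hops], start=1):
        if address != PROXY:
            return f"served by {address} after {hop} hop(s)"
        # The pick points back at the balancer, so it has to pick again.
    return "gave up: request kept looping back to the balancer"

# Main server listed on port 80: every pick can bounce back to the balancer.
print(deliver([PROXY] * 5))
# Main server listed on port 8080: the request is served on the first hop.
print(deliver(["192.168.5.149:8080"]))
```

Listing the main server under a separate port means a pick of the local machine reaches the site's own server block instead of re-entering the balancer.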

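Final notes

1), Load balancing is not unique to nginx; the well-known Apache offers it too, though its performance may not match nginx.

2), Several servers provide the service, but the domain name resolves only to the main server, so the real servers' IPs cannot be obtained with a simple ping, which adds some security.

3), The IPs in upstream do not have to be on the internal network; public IPs work too. The classic setup, though, is that one machine on the LAN is exposed to the public network, the domain name resolves directly to its IP, and this main server then forwards to the internal servers' IPs.

4), When one server goes down, the website keeps running normally; nginx does not forward requests to the IP that is down.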

相关推荐:

php面试题五之nginx如何调用php和php-fpm的作用和工作原理

The above is the detailed content of PHP interview question 7: How to configure nginx load balancing. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

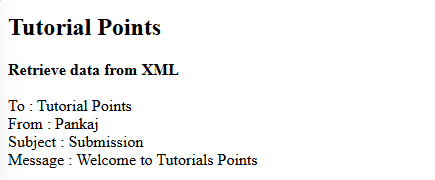

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

A string is a sequence of characters, including letters, numbers, and symbols. This tutorial will learn how to calculate the number of vowels in a given string in PHP using different methods. The vowels in English are a, e, i, o, u, and they can be uppercase or lowercase. What is a vowel? Vowels are alphabetic characters that represent a specific pronunciation. There are five vowels in English, including uppercase and lowercase: a, e, i, o, u Example 1 Input: String = "Tutorialspoint" Output: 6 explain The vowels in the string "Tutorialspoint" are u, o, i, a, o, i. There are 6 yuan in total

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

If you are an experienced PHP developer, you might have the feeling that you’ve been there and done that already.You have developed a significant number of applications, debugged millions of lines of code, and tweaked a bunch of scripts to achieve op

WordPress site file access is restricted: Why is my .txt file not accessible through domain name?

Apr 01, 2025 pm 03:00 PM

WordPress site file access is restricted: Why is my .txt file not accessible through domain name?

Apr 01, 2025 pm 03:00 PM

Wordpress site file access is restricted: troubleshooting the reason why .txt file cannot be accessed recently. Some users encountered a problem when configuring the mini program business domain name: �...

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Static binding (static::) implements late static binding (LSB) in PHP, allowing calling classes to be referenced in static contexts rather than defining classes. 1) The parsing process is performed at runtime, 2) Look up the call class in the inheritance relationship, 3) It may bring performance overhead.

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

JWT is an open standard based on JSON, used to securely transmit information between parties, mainly for identity authentication and information exchange. 1. JWT consists of three parts: Header, Payload and Signature. 2. The working principle of JWT includes three steps: generating JWT, verifying JWT and parsing Payload. 3. When using JWT for authentication in PHP, JWT can be generated and verified, and user role and permission information can be included in advanced usage. 4. Common errors include signature verification failure, token expiration, and payload oversized. Debugging skills include using debugging tools and logging. 5. Performance optimization and best practices include using appropriate signature algorithms, setting validity periods reasonably,