How to use Saver in Tensorflow

This article introduces the detailed usage of TensorFlow's Saver class.


1. Background on Saver

After training a model, we often want to save the results, that is, the model's parameters, so they can be used for further training later or for testing. TensorFlow provides the Saver class for this.

The Saver class provides methods for saving variables to checkpoint files and restoring them from checkpoint files. A checkpoint file is a binary file that maps variable names to the corresponding tensor values.
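The name-to-value mapping can be seen by saving two variables and reading the checkpoint back without building a graph. This is a minimal sketch, assuming TensorFlow 1.15 or 2.x with the TF1-style API loaded through `tensorflow.compat.v1`; the variables `w` and `b`, their values, and the temporary directory are illustrative choices, not taken from the article.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # Saver belongs to the TF1 graph/session API

ckpt_dir = tempfile.mkdtemp()  # throwaway directory assumed for this sketch

graph = tf.Graph()
with graph.as_default():
    # Illustrative variables; their names become the keys in the checkpoint.
    w = tf.Variable([2.0], name="w")
    b = tf.Variable([0.5], name="b")
    saver = tf.train.Saver()  # by default covers all saveable variables
    init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init)
    save_path = saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"))

# Inspect the checkpoint directly: variable name -> shape, and name -> value.
stored = dict(tf.train.list_variables(save_path))
w_value = tf.train.load_variable(save_path, "w")
print(stored)
print(w_value)  # prints [2.]
```

Reading the file this way is handy for checking which names a checkpoint actually contains before restoring it into a graph.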

If a counter is provided (the global_step argument of save()), Saver automatically numbers the checkpoint files with it. This lets us keep several intermediate results during training; for example, we can save the results after every training step.
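The numbering can be seen in a short sketch that saves after each of three steps. It assumes the `tensorflow.compat.v1` API and a temporary directory; the `counter` variable is hypothetical and only stands in for training (Saver refuses to build when there are no variables to save).

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

ckpt_dir = tempfile.mkdtemp()

graph = tf.Graph()
with graph.as_default():
    counter = tf.Variable(0, name="counter")  # hypothetical stand-in variable
    increment = tf.assign_add(counter, 1)      # stands in for one training step
    saver = tf.train.Saver()
    init = tf.global_variables_initializer()

saved_paths = []
with tf.Session(graph=graph) as sess:
    sess.run(init)
    for step in range(1, 4):
        sess.run(increment)
        # global_step is appended to the base name: model.ckpt-1, model.ckpt-2, ...
        path = saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"), global_step=step)
        saved_paths.append(os.path.basename(path))

print(saved_paths)  # ['model.ckpt-1', 'model.ckpt-2', 'model.ckpt-3']
```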

To avoid filling up the disk, Saver can also manage checkpoint files automatically: for example, it can keep only the N most recent checkpoints (the max_to_keep argument of the Saver constructor, which defaults to 5).
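A minimal sketch of this retention policy, assuming the `tensorflow.compat.v1` API and a temporary directory: with `max_to_keep=2`, five saves leave only the last two checkpoints, and the files of the older ones are deleted. The variable `v` is illustrative.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

ckpt_dir = tempfile.mkdtemp()

graph = tf.Graph()
with graph.as_default():
    v = tf.Variable(0.0, name="v")  # illustrative variable
    # Keep only the 2 most recent checkpoints; older ones are removed on save.
    saver = tf.train.Saver(max_to_keep=2)
    init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init)
    for step in range(1, 6):
        saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"), global_step=step)

# The "checkpoint" file lists only the retained checkpoints, oldest first.
state = tf.train.get_checkpoint_state(ckpt_dir)
kept = [os.path.basename(p) for p in state.all_model_checkpoint_paths]
print(kept)  # ['model.ckpt-4', 'model.ckpt-5']
```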

2. Saver example

The following example shows how to use the Saver class.

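A minimal sketch of such an example follows. It assumes TensorFlow 1.15 or 2.x with the TF1-style API loaded through `tensorflow.compat.v1`, and a hypothetical toy linear model y = 4x + 4 whose parameters are w and b. Only the names isTrain, train_steps, checkpoint_steps, checkpoint_dir, w and b come from the text; the model, the data, and the `run()` wrapper (used so both phases can be called from one script) are our own choices.

```python
import os

import numpy as np
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

train_steps = 100      # number of training steps
checkpoint_steps = 50  # save a checkpoint every 50 steps


def run(isTrain, checkpoint_dir="./"):
    """Train and save (isTrain=True), or restore the latest checkpoint (False)."""
    graph = tf.Graph()
    with graph.as_default():
        # Hypothetical toy model: learn y = 4x + 4 with parameters w and b.
        x = tf.placeholder(tf.float32, shape=[None, 1])
        y = 4 * x + 4
        w = tf.Variable(tf.zeros([1]), name="w")
        b = tf.Variable(tf.zeros([1]), name="b")
        loss = tf.reduce_mean(tf.square(y - (w * x + b)))
        train_op = tf.train.GradientDescentOptimizer(0.5).minimize(loss)
        saver = tf.train.Saver()  # saves all variables, here w and b
        init = tf.global_variables_initializer()

    x_data = np.random.rand(10, 1).astype(np.float32)
    with tf.Session(graph=graph) as sess:
        sess.run(init)
        if isTrain:
            for i in range(train_steps):
                sess.run(train_op, feed_dict={x: x_data})
                if (i + 1) % checkpoint_steps == 0:
                    saver.save(sess, os.path.join(checkpoint_dir, "model.ckpt"),
                               global_step=i + 1)
        else:
            # Find the latest checkpoint via the "checkpoint" file, then load it.
            ckpt = tf.train.get_checkpoint_state(checkpoint_dir)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)
        return sess.run(w), sess.run(b)
```

Calling `run(True)` trains and writes checkpoints at steps 50 and 100; a later `run(False)` should return the same w and b that training ended with, because the last checkpoint is saved after the final step.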

isTrain: distinguishes the training phase from the testing phase; True means training, False means testing

train_steps: the number of training steps; 100 in the example

checkpoint_steps: how often, in steps, to save a checkpoint during training; 50 in the example

checkpoint_dir: the directory where checkpoint files are saved; the example uses the current directory

2.1 Training phase

Use the Saver.save() method to save the model:

sess: the current session, which holds the current variable values

checkpoint_dir + 'model.ckpt': the path prefix of the saved files

global_step: the current training step; it is appended to the file name (for example, model.ckpt-50)
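global_step does not have to be a Python integer: save() also accepts a step counter stored in the graph and evaluates it at save time. A minimal sketch, assuming the `tensorflow.compat.v1` API and a temporary directory; the `global_step` variable and the loop that increments it are illustrative stand-ins for a real training loop.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

ckpt_dir = tempfile.mkdtemp()

graph = tf.Graph()
with graph.as_default():
    w = tf.Variable([1.0], name="w")
    # A step counter kept in the graph; marked non-trainable.
    global_step = tf.Variable(0, trainable=False, name="global_step")
    step_up = tf.assign_add(global_step, 1)
    saver = tf.train.Saver()
    init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init)
    for _ in range(50):
        sess.run(step_up)  # stands in for one training step
    # save() evaluates the global_step variable (50) and appends it to the name.
    path = saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"), global_step=global_step)

print(os.path.basename(path))  # model.ckpt-50
```

Because the counter is itself a variable, it is stored in the checkpoint too, so training can resume from the right step after a restore.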

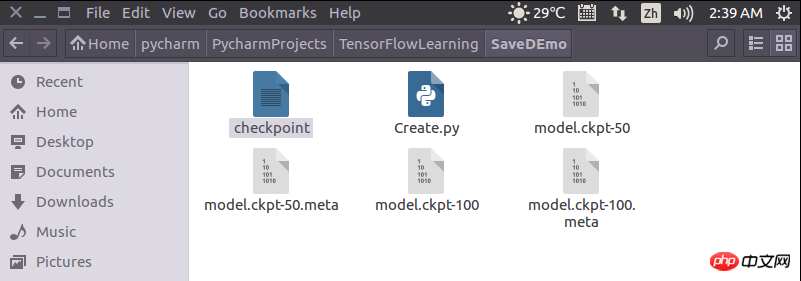

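After training completes, there are 5 new files in the current directory: two checkpoints (steps 50 and 100), each stored as a data file and a .meta file, plus a file named checkpoint. Newer TensorFlow versions use a checkpoint format that writes .index, .data-* and .meta files for each step, so the exact number of files depends on the version.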

Opening the file named "checkpoint" shows the record of saved checkpoints and the path of the most recent model.
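A minimal sketch for looking at that file, assuming the `tensorflow.compat.v1` API and a temporary directory in place of checkpoint_dir; it saves at steps 50 and 100 as in the example, prints the raw text of the checkpoint file, and then parses it with tf.train.get_checkpoint_state(). The variable `w` is illustrative.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

ckpt_dir = tempfile.mkdtemp()  # stands in for checkpoint_dir

graph = tf.Graph()
with graph.as_default():
    w = tf.Variable([1.0], name="w")
    saver = tf.train.Saver()
    init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init)
    for step in (50, 100):  # same schedule as the example
        saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"), global_step=step)

# The "checkpoint" file is plain text (a text-format protocol buffer).
with open(os.path.join(ckpt_dir, "checkpoint")) as f:
    text = f.read()
print(text)

# get_checkpoint_state() parses the same file.
state = tf.train.get_checkpoint_state(ckpt_dir)
print(state.model_checkpoint_path)             # path ending in model.ckpt-100
print(list(state.all_model_checkpoint_paths))  # the 50 and 100 checkpoints, oldest first
```

Depending on the version, the file may also contain timestamp fields, and the paths may be absolute because the save path passed in was absolute.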

2.2 Test phase

In the test phase, the saver.restore() method restores the variables:

sess: the current session; the previously saved values are loaded into it

ckpt.model_checkpoint_path: the path of the saved model. You do not need to supply the model's file name yourself: tf.train.get_checkpoint_state(checkpoint_dir) reads the checkpoint file to find the most recent model and its name.
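Restoring works in a completely new graph and session, and no initializer is needed because restore() assigns the saved values directly. A minimal sketch, assuming the `tensorflow.compat.v1` API and a temporary directory; the helper `build_graph()` is hypothetical, and assigning 4.0 to w and b merely stands in for training. It uses tf.train.latest_checkpoint(), which also reads the checkpoint file, as an alternative to get_checkpoint_state().

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

ckpt_dir = tempfile.mkdtemp()


def build_graph():
    """Hypothetical helper: the same w and b definitions for both phases."""
    graph = tf.Graph()
    with graph.as_default():
        w = tf.Variable([0.0], name="w")
        b = tf.Variable([0.0], name="b")
        set_values = [w.assign([4.0]), b.assign([4.0])]  # stands in for training
        init = tf.global_variables_initializer()
        saver = tf.train.Saver()
    return graph, w, b, set_values, init, saver


# Training phase (simulated): give w and b values and save them.
graph, w, b, set_values, init, saver = build_graph()
with tf.Session(graph=graph) as sess:
    sess.run(init)
    sess.run(set_values)
    saver.save(sess, os.path.join(ckpt_dir, "model.ckpt"), global_step=100)

# Test phase: brand-new graph and session; restore() replaces initialization.
graph, w, b, _, _, saver = build_graph()
with tf.Session(graph=graph) as sess:
    latest = tf.train.latest_checkpoint(ckpt_dir)  # reads the "checkpoint" file
    saver.restore(sess, latest)
    w_value, b_value = sess.run([w, b])

print(latest.endswith("model.ckpt-100"), w_value, b_value)  # True [4.] [4.]
```

The variable names in the new graph must match those in the checkpoint, which is why both phases build the graph through the same helper.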

The running result is shown in the figure below: the previously trained values of the parameters w and b are loaded.


The above is the detailed content of How to use Saver in Tensorflow. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1393

1393

52

52

37

37

110

110

Analyze the usage and classification of JSP comments

Feb 01, 2024 am 08:01 AM

Analyze the usage and classification of JSP comments

Feb 01, 2024 am 08:01 AM

Classification and Usage Analysis of JSP Comments JSP comments are divided into two types: single-line comments: ending with, only a single line of code can be commented. Multi-line comments: starting with /* and ending with */, you can comment multiple lines of code. Single-line comment example Multi-line comment example/**This is a multi-line comment*Can comment on multiple lines of code*/Usage of JSP comments JSP comments can be used to comment JSP code to make it easier to read

How to install tensorflow in conda

Dec 05, 2023 am 11:26 AM

How to install tensorflow in conda

Dec 05, 2023 am 11:26 AM

Installation steps: 1. Download and install Miniconda, select the appropriate Miniconda version according to the operating system, and install according to the official guide; 2. Use the "conda create -n tensorflow_env python=3.7" command to create a new Conda environment; 3. Activate Conda environment; 4. Use the "conda install tensorflow" command to install the latest version of TensorFlow; 5. Verify the installation.

How to correctly use the exit function in C language

Feb 18, 2024 pm 03:40 PM

How to correctly use the exit function in C language

Feb 18, 2024 pm 03:40 PM

How to use the exit function in C language requires specific code examples. In C language, we often need to terminate the execution of the program early in the program, or exit the program under certain conditions. C language provides the exit() function to implement this function. This article will introduce the usage of exit() function and provide corresponding code examples. The exit() function is a standard library function in C language and is included in the header file. Its function is to terminate the execution of the program, and can take an integer

Usage of WPSdatedif function

Feb 20, 2024 pm 10:27 PM

Usage of WPSdatedif function

Feb 20, 2024 pm 10:27 PM

WPS is a commonly used office software suite, and the WPS table function is widely used for data processing and calculations. In the WPS table, there is a very useful function, the DATEDIF function, which is used to calculate the time difference between two dates. The DATEDIF function is the abbreviation of the English word DateDifference. Its syntax is as follows: DATEDIF(start_date,end_date,unit) where start_date represents the starting date.

Introduction to Python functions: Usage and examples of abs function

Nov 03, 2023 pm 12:05 PM

Introduction to Python functions: Usage and examples of abs function

Nov 03, 2023 pm 12:05 PM

Introduction to Python functions: usage and examples of the abs function 1. Introduction to the usage of the abs function In Python, the abs function is a built-in function used to calculate the absolute value of a given value. It can accept a numeric argument and return the absolute value of that number. The basic syntax of the abs function is as follows: abs(x) where x is the numerical parameter to calculate the absolute value, which can be an integer or a floating point number. 2. Examples of abs function Below we will show the usage of abs function through some specific examples: Example 1: Calculation

Introduction to Python functions: Usage and examples of isinstance function

Nov 04, 2023 pm 03:15 PM

Introduction to Python functions: Usage and examples of isinstance function

Nov 04, 2023 pm 03:15 PM

Introduction to Python functions: Usage and examples of the isinstance function Python is a powerful programming language that provides many built-in functions to make programming more convenient and efficient. One of the very useful built-in functions is the isinstance() function. This article will introduce the usage and examples of the isinstance function and provide specific code examples. The isinstance() function is used to determine whether an object is an instance of a specified class or type. The syntax of this function is as follows

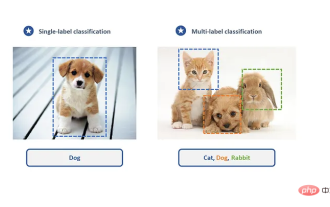

Create a deep learning classifier for cat and dog pictures using TensorFlow and Keras

May 16, 2023 am 09:34 AM

Create a deep learning classifier for cat and dog pictures using TensorFlow and Keras

May 16, 2023 am 09:34 AM

In this article, we will use TensorFlow and Keras to create an image classifier that can distinguish between images of cats and dogs. To do this, we will use the cats_vs_dogs dataset from the TensorFlow dataset. The dataset consists of 25,000 labeled images of cats and dogs, of which 80% are used for training, 10% for validation, and 10% for testing. Loading data We start by loading the dataset using TensorFlowDatasets. Split the data set into training set, validation set and test set, accounting for 80%, 10% and 10% of the data respectively, and define a function to display some sample images in the data set. importtenso

Detailed explanation and usage introduction of MySQL ISNULL function

Mar 01, 2024 pm 05:24 PM

Detailed explanation and usage introduction of MySQL ISNULL function

Mar 01, 2024 pm 05:24 PM

The ISNULL() function in MySQL is a function used to determine whether a specified expression or column is NULL. It returns a Boolean value, 1 if the expression is NULL, 0 otherwise. The ISNULL() function can be used in the SELECT statement or for conditional judgment in the WHERE clause. 1. The basic syntax of the ISNULL() function: ISNULL(expression) where expression is the expression to determine whether it is NULL or