Let's see how good you look! Public account developed based on Python

This is a Python-based WeChat public account for appearance detection. We analyze the user's pictures through Tencent's AI platform and return the results to the user. Let's try out the public account's beauty test together.

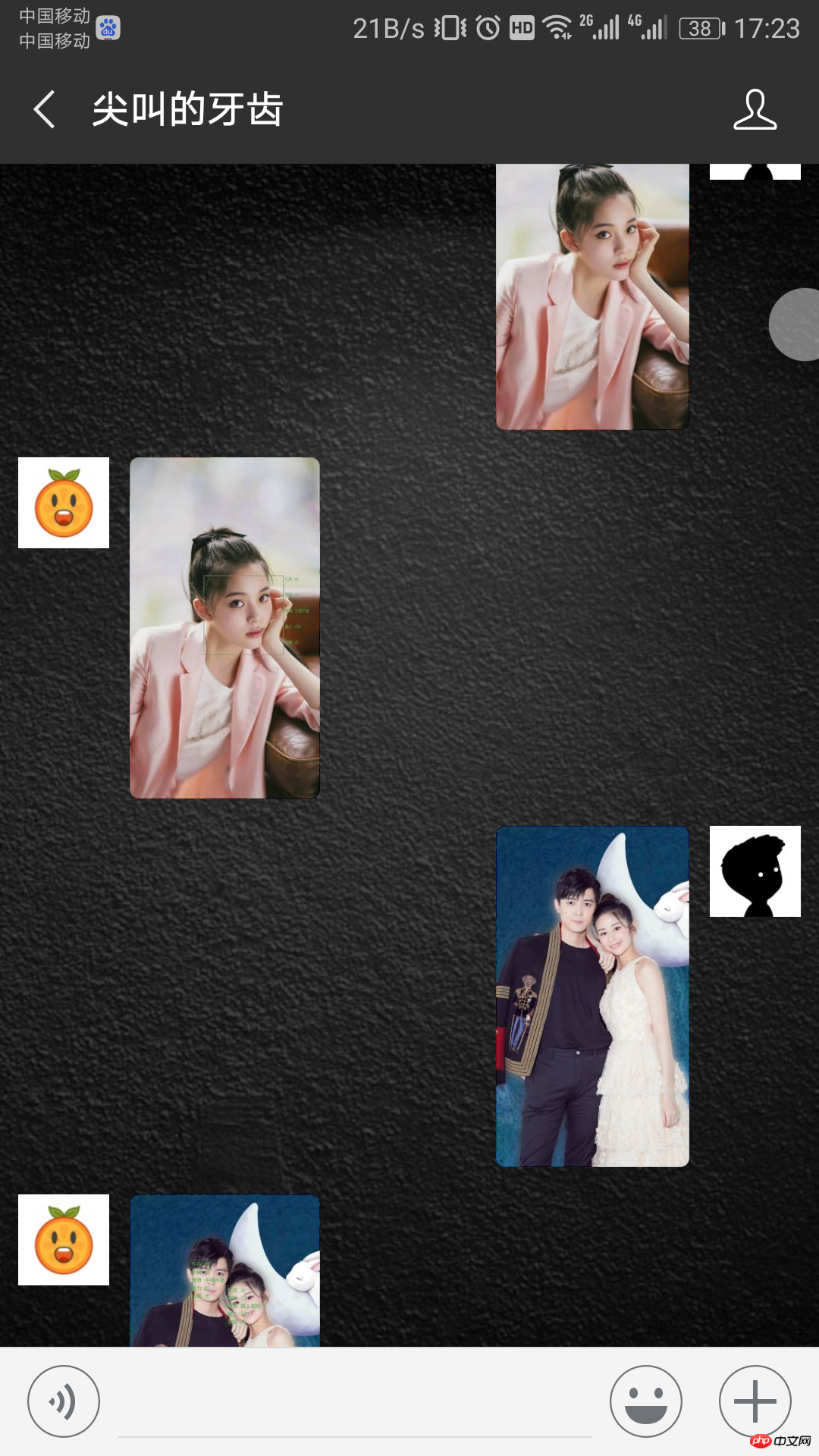

Result preview

The face detection interface takes the following request parameters:

- app_id: application identifier, obtained after registering on the AI platform

- time_stamp: timestamp

- nonce_str: random string

- sign: signature information, which we must calculate ourselves

- image: image to be detected (upper limit 1M)

- mode: detection mode

The sign value is calculated as follows:

- Sort the request parameter pairs in ascending dictionary order by key to obtain an ordered list of parameter pairs N

- Splice the parameter pairs in list N into a string in URL key-value format to obtain string T (for example: key1=value1&key2=value2). The value part must be URL-encoded, and the URL encoding uses uppercase letters in its escape sequences rather than lowercase ones

- Use app_key as the key name of the application key to form a URL key-value pair and splice it to the end of string T to obtain string S (for example: key1=value1&key2=value2&app_key=Key)

- Perform an MD5 operation on string S and convert all characters of the resulting MD5 value to uppercase to obtain the interface request signature
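The signing steps above can be sketched in isolation as follows. This is a minimal illustration, not the platform's reference implementation: the function name make_sign and the demo parameters are hypothetical, and it assumes that Python's urlencode (which writes escape sequences in uppercase and encodes spaces as +) matches the encoding the interface expects.

```python
import hashlib
from urllib.parse import urlencode


def make_sign(params, app_key):
    """Return the uppercase MD5 signature for a dict of request parameters.

    make_sign is a hypothetical helper name used only for this sketch.
    """
    # Sort the parameter pairs by key in ascending order (list N).
    pairs = sorted(params.items())
    # Append app_key as the last pair so it ends up at the end of string S.
    pairs.append(('app_key', app_key))
    # Join as key=value&key=value with URL-encoded values.
    raw = urlencode(pairs)
    # MD5 of string S, converted to uppercase.
    return hashlib.md5(raw.encode()).hexdigest().upper()


# Demo values are placeholders, not real credentials.
demo = make_sign({'key2': 'value2', 'key1': 'value1'}, 'Key')
print(demo)
```

Because app_key is appended after sorting, it always lands at the end of the string regardless of how the other keys sort.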

Run pip install requests to install requests.

Run pip install pillow and pip install opencv-python to install Pillow and OpenCV.

    import time
    import random
    import base64
    import hashlib
    import requests
    from urllib.parse import urlencode
    import cv2
    import numpy as np
    from PIL import Image, ImageDraw, ImageFont
    import os


    # 1. Compute the interface signature and build the request parameters
    def random_str():
        '''Return a random 15-letter string to use as nonce_str.'''
        letters = 'abcdefghijklmnopqrstuvwxyz'
        r = ''
        for i in range(15):
            index = random.randint(0, 25)
            r += letters[index]
        return r


    def image(name):
        '''Read an image file and return its content base64-encoded.'''
        with open(name, 'rb') as f:
            content = f.read()
        return base64.b64encode(content)


    def get_params(img):
        '''Assemble the request parameters, compute the sign value,
        and return the parameter dictionary the interface needs.'''
        params = {
            'app_id': '1106860829',
            'time_stamp': str(int(time.time())),
            'nonce_str': random_str(),
            'image': img,
            'mode': '0'
        }
        sort_dict = sorted(params.items(), key=lambda item: item[0], reverse=False)  # sort by key
        sort_dict.append(('app_key', 'P8Gt8nxi6k8vLKbS'))  # append app_key
        rawtext = urlencode(sort_dict).encode()  # URL-encode
        sha = hashlib.md5()
        sha.update(rawtext)
        md5text = sha.hexdigest().upper()  # the sign value used for authentication
        params['sign'] = md5text  # add it to the request parameters
        return params

    # 2. Request the interface URL
    def access_api(img):
        frame = cv2.imread(img)
        nparry_encode = cv2.imencode('.jpg', frame)[1]
        data_encode = np.array(nparry_encode)
        img_encode = base64.b64encode(data_encode)  # convert the image to base64
        url = 'https://api.ai.qq.com/fcgi-bin/face/face_detectface'
        res = requests.post(url, get_params(img_encode)).json()  # request the URL and parse the JSON
        # Draw the information onto the image
        if res['ret'] == 0:  # 0 means the request succeeded
            # Convert from OpenCV to PIL format so Chinese text can be drawn
            pil_img = Image.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            draw = ImageDraw.Draw(pil_img)
            for obj in res['data']['face_list']:
                img_width = res['data']['image_width']  # image width
                img_height = res['data']['image_height']  # image height
                x = obj['x']  # x of the face box's top-left corner
                y = obj['y']  # y of the face box's top-left corner
                w = obj['width']  # face box width
                h = obj['height']  # face box height
                # Turn the returned values into display text
                if obj['glass'] == 1:  # glasses
                    glass = '有'
                else:
                    glass = '无'
                if obj['gender'] >= 70:  # gender runs from 0 (female) to 100 (male)
                    gender = '男'
                elif 50 <= obj['gender'] < 70:
                    gender = "娘"
                elif obj['gender'] < 30:
                    gender = '女'
                else:
                    gender = '女汉子'
                if 90 < obj['expression'] <= 100:  # expression runs from 0 to 100 (degree of smiling)
                    expression = '一笑倾城'
                elif 80 < obj['expression'] <= 90:
                    expression = '心花怒放'
                elif 70 < obj['expression'] <= 80:
                    expression = '兴高采烈'
                elif 60 < obj['expression'] <= 70:
                    expression = '眉开眼笑'
                elif 50 < obj['expression'] <= 60:
                    expression = '喜上眉梢'
                elif 40 < obj['expression'] <= 50:
                    expression = '喜气洋洋'
                elif 30 < obj['expression'] <= 40:
                    expression = '笑逐颜开'
                elif 20 < obj['expression'] <= 30:
                    expression = '似笑非笑'
                elif 10 < obj['expression'] <= 20:
                    expression = '半嗔半喜'
                elif 0 <= obj['expression'] <= 10:
                    expression = '黯然伤神'
                delt = h // 5  # vertical distance between text lines
                # Choose the font size and where the text starts
                if len(res['data']['face_list']) > 1 or img_width - x - w < 170:
                    # Several faces, or too little room to the right of the box:
                    # write the information inside the face box
                    font = ImageFont.truetype('yahei.ttf', w // 8, encoding='utf-8')  # download the font file in advance
                    text_x = x + 10
                else:
                    font = ImageFont.truetype('yahei.ttf', 20, encoding='utf-8')
                    text_x = x + w + 10
                lines = [
                    '性别 :' + gender,               # gender
                    '年龄 :' + str(obj['age']),      # age
                    '表情 :' + expression,           # expression
                    '魅力 :' + str(obj['beauty']),   # beauty score
                    '眼镜 :' + glass,                # glasses
                ]
                for i, line in enumerate(lines):
                    draw.text((text_x, y + 10 + delt * i), line, (76, 176, 80), font=font)
                draw.rectangle((x, y, x + w, y + h), outline="#4CB050")  # draw the face box
            cv2img = cv2.cvtColor(np.array(pil_img), cv2.COLOR_RGB2BGR)  # convert from PIL back to OpenCV format
            cv2.imwrite('faces/{}'.format(os.path.basename(img)), cv2img)  # save the picture in the faces folder
            return '检测成功'
        else:
            return '检测失败'

To obtain the access_token, we must add our server's IP address to the whitelist; otherwise the request fails. Log in to "WeChat Public Platform-Development-Basic Configuration" and add the server IP address to the IP whitelist in advance. You can view the IP of this machine at http://ip.qq.com/...

Now for the code. We create a new utils.py to download and upload pictures:

    import requests
    import json
    import threading
    import time
    import os

    token = ''
    app_id = 'wxfc6adcdd7593a712'
    secret = '429d85da0244792be19e0deb29615128'


    def img_download(url, name):
        r = requests.get(url)
        with open('images/{}-{}.jpg'.format(name, time.strftime("%Y_%m_%d%H_%M_%S", time.localtime())), 'wb') as fd:
            fd.write(r.content)
        # Check the size after the file is closed so all data is on disk
        if os.path.getsize(fd.name) >= 1048576:
            return 'large'
        return os.path.basename(fd.name)


    def get_access_token(appid, secret):
        '''Fetch the access_token and refresh it every 100 minutes.'''
        url = 'https://api.weixin.qq.com/cgi-bin/token?grant_type=client_credential&appid={}&secret={}'.format(appid, secret)
        r = requests.get(url)
        parse_json = json.loads(r.text)
        global token
        token = parse_json['access_token']
        global timer
        # Pass the credentials along so the scheduled refresh can call this function again
        timer = threading.Timer(6000, get_access_token, args=(appid, secret))
        timer.start()


    def img_upload(mediaType, name):
        global token
        url = "https://api.weixin.qq.com/cgi-bin/media/upload?access_token=%s&type=%s" % (token, mediaType)
        # Close the file once the upload has finished
        with open('{}'.format(name), 'rb') as f:
            files = {'media': f}
            r = requests.post(url, files=files)
        parse_json = json.loads(r.text)
        return parse_json['media_id']

    get_access_token(app_id, secret)

Next, the Falcon application that receives WeChat's requests:

    import falcon
    from falcon import uri
    from wechatpy.utils import check_signature
    from wechatpy.exceptions import InvalidSignatureException
    from wechatpy import parse_message
    from wechatpy.replies import TextReply, ImageReply
    from utils import img_download, img_upload
    from face_id import access_api


    class Connect(object):

        def on_get(self, req, resp):
            # Parse the query string into a dictionary
            query_string = req.query_string
            query_list = query_string.split('&')
            b = {}
            for i in query_list:
                b[i.split('=')[0]] = i.split('=')[1]
            try:
                check_signature(token='lengxiao', signature=b['signature'], timestamp=b['timestamp'], nonce=b['nonce'])
                resp.body = (b['echostr'])
            except InvalidSignatureException:
                pass
            resp.status = falcon.HTTP_200

        def on_post(self, req, resp):
            xml = req.stream.read()
            msg = parse_message(xml)
            if msg.type == 'text':
                # Echo text messages back to the user
                reply = TextReply(content=msg.content, message=msg)
                xml = reply.render()
                resp.body = (xml)
                resp.status = falcon.HTTP_200
            elif msg.type == 'image':
                name = img_download(msg.image, msg.source)  # download the picture
                if name == 'large':  # picture is 1 MB or more, so skip detection
                    r = '检测失败'
                else:
                    r = access_api('images/' + name)
                if r == '检测成功':
                    media_id = img_upload('image', 'faces/' + name)  # upload the picture and get its media_id
                    reply = ImageReply(media_id=media_id, message=msg)
                else:
                    reply = TextReply(content='人脸检测失败,请上传1M以下人脸清晰的照片', message=msg)
                xml = reply.render()
                resp.body = (xml)
                resp.status = falcon.HTTP_200


    app = falcon.API()
    connect = Connect()
    app.add_route('/connect', connect)

Because of how WeChat works, our program must respond within 5 seconds; otherwise WeChat reports 'The service provided by the official account is faulty'. Image processing is sometimes slow and often exceeds 5 seconds. The correct approach is therefore to return an empty string immediately after receiving the user's request, to indicate that we have received it, and then process the image in a separate thread. When the image has been processed, it is sent to the user through the customer service interface. Unfortunately, uncertified public accounts do not have a customer service interface, so there is no workaround: if processing takes more than 5 seconds, an error is reported.
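The deferred-reply approach described above could look roughly like the sketch below. All names here (reply_later, process, deliver) are hypothetical. In a real service, process would be the face detection step and deliver would push the finished picture through the customer service interface, which is not shown and requires a certified account.

```python
import threading


def reply_later(process, deliver, *args):
    """Run process(*args) in a background thread and return '' at once.

    process and deliver are caller-supplied callables (hypothetical names):
    process does the slow work, deliver sends its result to the user.
    """
    def worker():
        result = process(*args)
        deliver(result)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    # The empty string is what the handler sends back immediately.
    return '', thread


# Stand-ins for the slow step and the delivery step.
delivered = []
body, worker_thread = reply_later(lambda path: path.upper(), delivered.append, 'images/a.jpg')
worker_thread.join()  # only for the demo; a request handler would not wait
print(repr(body), delivered)
```

The handler would put the returned empty string in the response body and let the thread finish on its own.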

The menu cannot be customized either. Once custom development is enabled, the menu must also be configured through the program, but uncertified official accounts do not have permission to do that and can only configure the menu in the WeChat admin backend.

So, I have not enabled this program on my official account, but if you have a certified official account, you can try to develop various fun functions.
