collect! Will be used in interviews, common MySQL interview questions

The following are some MySQL questions that are often encountered in interviews and studies. Example SQL statement optimization: 1) Try to avoid using != or operators in where clauses, otherwise the engine will give up using the index and perform a full table scan. 2) Try to avoid judging the null value of the field in the where clause, otherwise the engine will give up using the index and perform a full table scan, such as: select id from t where num is null

【 Related topic recommendations: mysql interview questions(2020)】

1. Primary key, super key, candidate key, foreign key

Primary key:

A combination of data columns or attributes in a database table that uniquely and completely identifies the stored data object. A data column can only have one primary key, and the value of the primary key cannot be missing, that is, it cannot be null.

Super key:

The set of attributes that can uniquely identify a tuple in a relationship is called the super key of the relationship schema. An attribute can be used as a super key, and multiple attributes combined can also be used as a super key. Super key contains candidate key and primary key.

Candidate key:

is the minimum superkey, that is, a superkey without redundant elements.

Foreign key:

The primary key of another table that exists in one table is called the foreign key of this table. 2. Four characteristics and meanings of database transactions

Four basic elements for the correct execution of database transaction transaction. ACID, Atomicity, Correspondence, Isolation, Durability.

Atomicity

: All operations in the entire transaction are either completed or not completed, and it is impossible to stagnate in some intermediate link. If an error occurs during the execution of the transaction, it will be rolled back to the state before the transaction started, as if the transaction had never been executed. Consistency

: The integrity constraints of the database are not violated before the transaction starts and after the transaction ends. Isolation

: The isolation state executes transactions as if they were the only operations performed by the system at a given time. If there are two transactions, running at the same time, performing the same function, transaction isolation will ensure that each transaction in the system thinks that only that transaction is using the system. This property is sometimes called serialization. To prevent confusion between transaction operations, requests must be serialized, or serialized, so that there is only one request for the same data at the same time. Persistence

: After the transaction is completed, the changes made by the transaction to the database are persisted in the database and will not be rolled back. 3. The role of the view, can the view be changed?

A view is a virtual table. Unlike a table containing data, a view only contains queries that dynamically retrieve data when used; it does not contain any columns or data. Using views can simplify complex SQL operations, hide specific details, and protect data; once views are created, they can be utilized in the same way as tables.

Views cannot be indexed, nor can they have associated triggers or default values. If there is order by in the view itself, order by again on the view will be overwritten.Create view: create view XXX as XXXXXXXXXXXXXX;

For some views, such as the Distinct Union that does not use the join subquery grouping aggregation function, etc., it can be updated, and updates to the view will update the base table; However, views are mainly used to simplify retrieval and protect data, and are not used for updates, and most views cannot be updated.

4. The difference between drop, delete and truncate

drop deletes the table directly and truncate deletes the data in the table. When inserting again, the id will increase automatically from 1 and delete will delete the data in the table. You can add where words.

(1) The DELETE statement deletes one row from the table each time, and at the same time saves the row's deletion operation as a transaction record in the log for rollback operation. TRUNCATE TABLE deletes all data from the table at once and does not record individual deletion operation records in the log. Deleted rows cannot be recovered. And the delete trigger related to the table will not be activated during the delete process. Execution speed is fast.

(2) Space occupied by tables and indexes. When a table is TRUNCATE, the space occupied by the table and indexes will be restored to the original size, and the DELETE operation will not reduce the space occupied by the table or indexes. The drop statement releases all the space occupied by the table.

(3) Generally speaking, drop > truncate > delete

(4) Application scope. TRUNCATE can only be used for TABLE; DELETE can be table and view

(5) TRUNCATE and DELETE only delete data, while DROP deletes the entire table (structure and data).

(6) truncate and delete without where: only delete the data, but not the structure (definition) of the table. The drop statement will delete the constraints (constrain), trigger (trigger) index (index) that the table structure depends on. );The stored procedures/functions that depend on the table will be retained, but their status will become: invalid.

(7) The delete statement is DML (data maintain language). This operation will be placed in the rollback segment and will take effect only after the transaction is submitted. If there is a corresponding tigger, it will be triggered during execution.

(8) truncate and drop are DLLs (data define language), and the operations take effect immediately. The original data is not placed in the rollback segment and cannot be rolled back.

(9) In the absence of backup , use drop and truncate with caution. To delete some data rows, use delete and combine it with where to limit the scope of influence. The rollback segment must be large enough. To delete a table, use drop; if you want to retain the table but delete the data in the table, if it has nothing to do with the transaction, use truncate. If it is related to a transaction, or the teacher wants to trigger a trigger, still use delete.

(10) Truncate table table name is fast and efficient because:

Truncate table is functionally the same as the DELETE statement without a WHERE clause: both delete all rows in the table . However, TRUNCATE TABLE is faster than DELETE and uses fewer system and transaction log resources. The DELETE statement deletes one row at a time and records an entry in the transaction log for each row deleted. TRUNCATE TABLE deletes data by freeing the data pages used to store table data, and only records the page freeing in the transaction log.

(11) TRUNCATE TABLE deletes all rows in the table, but the table structure and its columns, constraints, indexes, etc. remain unchanged. The count used to identify new rows is reset to the seed of that column. If you want to preserve the identity count value, use DELETE instead. If you want to delete a table definition and its data, use the DROP TABLE statement.

(12) For tables referenced by FOREIGN KEY constraints, TRUNCATE TABLE cannot be used, but a DELETE statement without a WHERE clause should be used. Because TRUNCATE TABLE is not logged, it cannot activate the trigger.

5. The working principle and types of indexes

Database index is a sorted data structure in the database management system to assist in quick query and update of database tables. data. Index implementation usually uses B-tree and its variant B-tree.

In addition to data, the database system also maintains data structures that satisfy specific search algorithms. These data structures reference (point to) the data in some way, so that advanced search algorithms can be implemented on these data structures. This data structure is an index.

There are costs to be paid for setting indexes on tables: First, the storage space of the database is increased, and second, it takes more time to insert and modify data (because the index will also change accordingly).

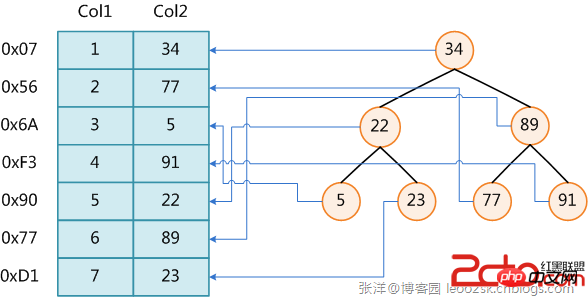

The figure shows one possible indexing method. On the left is a data table with a total of two columns and seven records. The leftmost one is the physical address of the data record (note that logically adjacent records are not necessarily physically adjacent on the disk). In order to speed up the search of Col2, you can maintain a binary search tree as shown on the right. Each node contains the index key value and a pointer to the physical address of the corresponding data record. In this way, you can use binary search in O(log## The corresponding data is obtained within the complexity of #2n).

Creating indexes can greatly improve system performance. First, by creating a unique index, the uniqueness of each row of data in the database table can be guaranteed. Second, it can greatly speed up data retrieval, which is also the main reason for creating an index. Third, it can speed up the connection between tables, which is particularly meaningful in achieving referential integrity of data. Fourth, when using grouping and sorting clauses for data retrieval, the time for grouping and sorting in the query can also be significantly reduced. Fifth, by using indexes, you can use optimization hiders during the query process to improve system performance. Some people may ask: There are so many advantages to adding an index, why not create an index for every column in the table? Because, adding indexes also has many disadvantages. First, creating and maintaining indexes takes time, and this time increases as the amount of data increases. Second, the index needs to occupy physical space. In addition to the data space occupied by the data table, each index also occupies a certain amount of physical space. If you want to build a clustered index, the space required will be larger. . Third, when adding, deleting, and modifying data in the table, the index must be dynamically maintained, which reduces the data maintenance speed.Indexes are built on certain columns in the database table. When creating an index, you should consider which columns can be indexed on and which columns cannot be indexed on. Generally speaking, indexes should be created on these columns: On columns that are frequently searched, you can speed up searches; on columns that serve as primary keys, enforce the uniqueness of the column and organize the data in the table The arrangement structure; on columns that are often used in connections, these columns are mainly foreign keys, which can speed up the connection; create indexes on columns that often need to be searched based on range, because the index has been sorted, and its specified range is Continuous; create indexes on columns that often need to be sorted, because the index has been sorted, so that queries can use the sorting of the index to speed up sorting query time; create indexes on columns that are often used in the WHERE clause to speed up the judgment of conditions .

Similarly, indexes should not be created for some columns. Generally speaking, these columns that should not be indexed have the following characteristics:

First, indexes should not be created for columns that are rarely used or referenced in queries. This is because, since these columns are rarely used, indexing or not indexing does not improve query speed. On the contrary, due to the addition of indexes, the maintenance speed of the system is reduced and the space requirements are increased.

Second, indexes should not be increased for columns with few data values. This is because, since these columns have very few values, such as the gender column of the personnel table, in the query results, the data rows in the result set account for a large proportion of the data rows in the table, that is, the data that needs to be searched in the table The proportion of rows is huge. Increasing the index does not significantly speed up retrieval.

Third, indexes should not be added to columns defined as text, image and bit data types. This is because the data volume of these columns is either quite large or has very few values.

Fourth, when the modification performance is much greater than the retrieval performance, the index should not be created. This is because modification performance and retrieval performance are contradictory. When adding indexes, retrieval performance will be improved, but modification performance will be reduced. When reducing indexes, modification performance will increase and retrieval performance will decrease. Therefore, when modification performance is much greater than retrieval performance, indexes should not be created.

According to the functions of the database, three types of indexes can be created in the database designer: Unique index, primary key index and clustered index.

Unique Index

A unique index is one that does not allow any two rows to have the same index value.

Most databases do not allow newly created unique indexes to be saved with the table when there are duplicate key values in the existing data. The database may also prevent adding new data that would create duplicate key values in the table. For example, if a unique index is created on the employee's last name (lname) in the employee table, no two employees can have the same last name. Primary Key Index Database tables often have a column or combination of columns whose value uniquely identifies each row in the table. This column is called the primary key of the table. Defining a primary key for a table in a database diagram automatically creates a primary key index, which is a specific type of unique index. The index requires each value in the primary key to be unique. It also allows fast access to data when using a primary key index in a query. Clustered Index In a clustered index, the physical order of the rows in the table is the same as the logical (index) order of the key values. A table can only contain one clustered index.

If an index is not a clustered index, the physical order of the rows in the table does not match the logical order of the key values. Clustered indexes generally provide faster data access compared to non-clustered indexes.

Locality Principle and Disk Read Ahead

Due to the characteristics of the storage medium, the disk itself is much slower to access than the main memory. Coupled with the cost of mechanical movement, the disk access The speed is often one hundredth of that of main memory, so in order to improve efficiency, disk I/O should be minimized. In order to achieve this goal, the disk often does not read strictly on demand, but reads in advance every time. Even if only one byte is needed, the disk will start from this position and sequentially read a certain length of data backwards into the memory. The theoretical basis for doing this is the famous Locality Principle in computer science: When a piece of data is used, the data nearby is usually used immediately. The data required during program execution is usually concentrated.

Since disk sequential reads are very efficient (no seek time required, very little spin time), read-ahead can improve I/O for programs with locality. Oefficiency.

The length of read-ahead is generally an integral multiple of page. Pages are logical blocks of computer-managed memory. Hardware and operating systems often divide main memory and disk storage areas into consecutive equal-sized blocks. Each storage block is called a page (in many operating systems, the page size is usually 4k), main memory and disk exchange data in units of pages. When the data to be read by the program is not in the main memory, a page fault exception will be triggered. At this time, the system will send a read signal to the disk, and the disk will find the starting position of the data and read one or more pages backwards. Load into memory, then return abnormally, and the program continues to run.

Performance analysis of B-/Tree index

At this point we can finally analyze the performance of B-/Tree index.

As mentioned above, the number of disk I/Os is generally used to evaluate the quality of the index structure. Let’s start with the B-Tree analysis. According to the definition of B-Tree, it can be seen that a maximum of h nodes need to be visited for one retrieval. The designers of the database system cleverly took advantage of the disk read-ahead principle and set the size of a node to be equal to one page, so that each node can be fully loaded with only one I/O. In order to achieve this goal, the following techniques need to be used in the actual implementation of B-Tree:

Every time a new node is created, a page of space is directly applied for, thus ensuring that a node is physically stored in a page. In addition, computer storage allocation is page-aligned, which means that only one I/O is required for a node.

A retrieval in B-Tree requires at most h-1 I/O (the root node is resident in memory), and the asymptotic complexity is O(h)=O(logd N). In general practical applications, the out-degree d is a very large number, usually more than 100, so h is very small (usually no more than 3).

As for the red-black tree structure, h is obviously much deeper. Since nodes (parents and children) that are logically close may be physically far away and locality cannot be exploited, the I/O asymptotic complexity of the red-black tree is also O(h), and the efficiency is obviously much worse than that of the B-Tree.

To sum up, using B-Tree as an index structure is very efficient.

6. Types of connections

Executed in the query analyzer:

-Create tables table1, table2:

create table table1(id int, name varchar(10))

create table table2(id int,score int)

insert into table1 select 1,'lee'

insert into table1 select 2,'zhang'

insert into table1 select 4,'wang'

insert into table2 select 1,90

insert into table2 select 2,100

insert into table2 select 3,70

such as table

————————————————-

table1 | table2 |

————————————————-

id name |id score |

1 lee |1 90|

2 zhang| 2 100|

4 wang| 3 70|

————————————————-

The following are all executed in the query analyzer

1. Outer join

1. Concept: including left outer join, right outer join or complete outer join

2. Left join: left join or left outer join

(1)Left outer join The result set includes all rows from the left table specified in the LEFT OUTER clause, not just the rows matched by the join columns. If a row in the left table has no matching row in the right table, all select list columns of the right table will be null in the associated result set row.

(2)sql statement

select * from table1 left join table2 on table1.id=table2.id

————-Result————-

idnameidscore

———— ————————

1lee190

2zhang2100

4wangNULLNULL

——————————

Note: Contains all clauses of table1, and returns the corresponding results of table2 according to the specified conditions Fields that do not match are displayed as null

3. Right join: right join or right outer join

(1) Right outer join is the reverse join of left outer join. All rows from the right table will be returned. If a row in the right table has no matching row in the left table, null will be returned for the left table.

(2)sql statement

select * from table1 right join table2 on table1.id=table2.id

————-Result————-

idnameidscore

———— ————————

1lee190

2zhang2100

NULLNULL370

——————————

Note: Contains all clauses of table2 and returns the corresponding results of table1 according to the specified conditions Fields that do not match are displayed as null

4. Complete outer join: full join or full outer join

(1) Full outer join returns all rows in the left table and right table. When a row has no matching row in another table, the other table's select list column contains null values. If there are matching rows between tables, the entire result set row contains the data values of the base table.

(2)sql statement

select * from table1 full join table2 on table1.id=table2.id

————-Result————-

idnameidscore

——————————

1lee190

2zhang2100

4wangNULLNULL

NULLNULL370

——————————

Note: Return left and right connections and (see left and right joins above)

2. Inner join

1. Concept: Inner join is a join that uses comparison operators to compare the values of the columns to be joined

2.Inner join: join or inner join

3.sql statement

select * from table1 join table2 on table1.id=table2.id

————-Result———— -

idnameidscore

——————————

1lee190

2zhang2100

——————————

Note: Only returns matching Conditional columns of table1 and table2

4. Equivalent (same execution effect as the following)

A:select a.*,b.* from table1 a,table2 b where a.id =b.id

B:select * from table1 cross join table2 where table1.id=table2.id (Note: You can only use where to add conditions after cross join, not on)

3. Cross join(Complete)

1. Concept: A cross join without a WHERE clause will produce a Cartesian product of the tables involved in the join. The number of rows in the first table multiplied by the number of rows in the second table equals the size of the Cartesian product result set. (The cross join of table1 and table2 produces 3*3=9 records)

2. Cross join: cross join (without condition where...)

3.sql Statement

select * from table1 cross join table2

————-Result————-

idnameidscore

——————————

1lee190

2zhang190

4wang190

1lee2100

2zhang2100

4wang2100

1lee370

2zhang370

4wang370

——————————

Note: Return 3*3 =9 records, that is, Cartesian product

4. Equivalent (same as the following execution effect)

A:select * from table1,table2

7. Database paradigm

1 First normal form (1NF)

In any relational database, the first normal form (1NF) is the relational model Basic requirements, a database that does not meet the first normal form (1NF) is not a relational database.

The so-called first normal form (1NF) means that each column of the database table is an indivisible basic data item. There cannot be multiple values in the same column, that is, an attribute in the entity cannot have multiple values or cannot have Duplicate attributes. If repeated attributes appear, you may need to define a new entity. The new entity is composed of repeated attributes. There is a one-to-many relationship between the new entity and the original entity. In first normal form (1NF) each row of the table contains information about only one instance. In short, The first normal form is a non-duplicate column.

2 Second Normal Form (2NF)

The second normal form (2NF) is established on the basis of the first normal form (1NF), that is To satisfy the second normal form (2NF), you must first satisfy the first normal form (1NF). Second Normal Form (2NF) requires that each instance or row in a database table must be uniquely distinguishable. To achieve differentiation, it is usually necessary to add a column to the table to store the unique identification of each instance. This unique attribute column is called the primary key or primary key or primary key.

Second Normal Form (2NF) requires that the attributes of an entity completely depend on the primary key. The so-called complete dependence means that there cannot be an attribute that only depends on part of the primary key. If it exists, then this attribute and this part of the primary key should be separated to form a new entity. The new entity and the original entity are one-to-many. relation. To achieve differentiation, it is usually necessary to add a column to the table to store the unique identification of each instance. In short, The second normal form is that non-primary attributes are not partially dependent on the primary keyword.

3 Third Normal Form (3NF)

To satisfy the third normal form (3NF), you must first satisfy the second normal form (2NF). In short, third normal form (3NF) requires that a database table does not contain non-primary key information that is already contained in other tables. For example, there is a department information table, in which each department has department number (dept_id), department name, department profile and other information. Then after the department number is listed in the employee information table, department name, department profile and other department-related information cannot be added to the employee information table. If the department information table does not exist, it should be constructed according to the third normal form (3NF), otherwise there will be a lot of data redundancy. In short, The third normal form is that attributes do not depend on other non-primary attributes. (My understanding is to eliminate redundancy)

8. The idea of database optimization

I drew on the courses on database optimization on MOOC.

1.SQL statement optimization

1) Try to avoid using != or operators in the where clause, otherwise the engine will give up using the index and perform a full table scan.

2) Try to avoid making null value judgments on fields in the where clause, otherwise the engine will give up using the index and perform a full table scan, such as:

select id from t where num is null

You can set the default value 0 on num to ensure that there is no null value in the num column in the table, and then query like this:

select id from t where num=0

3) Many times exists is used instead of in. A good choice

4) Replace the HAVING clause with the Where clause because HAVING will only filter the result set after retrieving all records

2. Index optimization

See Index above

3. Database structure optimization

1) Paradigm optimization: For example, eliminating redundancy (saving space...) 2) Anti-paradigm optimization: For example, adding appropriate redundancy (reduce joins) 3) Split the table : Partitions physically separate data. Data in different partitions can be stored in data files on different disks. In this way, when querying this table, you only need to scan the table partition instead of scanning the entire table, which significantly shortens the query time. In addition, the partitions on different disks will also disperse the data transmission of this table in different places. Disk I/O, a carefully configured partition can evenly spread the data transfer competition for disk I/O. This method can be used for timetables with large amounts of data. Table partitions can be automatically created on a monthly basis.

4) Splitting is actually divided into vertical splitting and horizontal splitting: Case: A simple shopping system temporarily involves the following tables: 1. Product table (data volume: 100,000, stable) 2. Order table (data volume: 2 million, and There is a growth trend) 3. User table (the data volume is 1 million, and there is a growth trend) Taking mysql as an example to describe horizontal split and vertical split, the order of magnitude that mysql can tolerate can range from one million static data to tens of millions Vertical Split: Solve the problem: io competition between tables does not solve the problem: the pressure caused by the increase in the amount of data in a single table Solution: Put the product table and user table on one server and put the order table on a separate server Horizontal splitting: Solve the problem: The pressure caused by the growth of data volume in a single table does not solve the problem: io competition between tables

Solution: The user table is split into male user tables by gender The order table of the female user table is split into completed orders and unfinished orders through completed and completed. The product table and unfinished orders are placed on a server. The completed order table box is placed on a male user table and placed on a server. The female user table is placed on a server. (Women love shopping haha)

4. Server hardware optimization

This costs a lot of money!

9. The difference between stored procedures and triggers

Triggers are very similar to stored procedures. Triggers are also sets of SQL statements. The only difference between the two is that EXECUTE cannot be used in triggers statement call, but automatically triggers (activates) execution when the user executes a Transact-SQL statement. A trigger is a stored procedure that is executed when data in a specified table is modified. Typically, triggers are created to enforce the referential integrity and consistency of logically related data in different tables. Since users cannot bypass triggers, they can be used to enforce complex business rules to ensure data integrity. Triggers are different from stored procedures. Triggers are mainly executed by event execution triggers, while stored procedures can be directly called through the name of the stored procedure. When operations such as UPDATE, INSERT, and DELETE are performed on a certain table, SQLSERVER will automatically execute the SQL statements defined by the trigger, thereby ensuring that the data processing must comply with the rules defined by these SQL statements. Related articles:

PHP common interview questions

jQuery common interview questions

Related videos:

The above is the detailed content of collect! Will be used in interviews, common MySQL interview questions. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Explain InnoDB Full-Text Search capabilities.

Apr 02, 2025 pm 06:09 PM

Explain InnoDB Full-Text Search capabilities.

Apr 02, 2025 pm 06:09 PM

InnoDB's full-text search capabilities are very powerful, which can significantly improve database query efficiency and ability to process large amounts of text data. 1) InnoDB implements full-text search through inverted indexing, supporting basic and advanced search queries. 2) Use MATCH and AGAINST keywords to search, support Boolean mode and phrase search. 3) Optimization methods include using word segmentation technology, periodic rebuilding of indexes and adjusting cache size to improve performance and accuracy.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

Difference between clustered index and non-clustered index (secondary index) in InnoDB.

Apr 02, 2025 pm 06:25 PM

Difference between clustered index and non-clustered index (secondary index) in InnoDB.

Apr 02, 2025 pm 06:25 PM

The difference between clustered index and non-clustered index is: 1. Clustered index stores data rows in the index structure, which is suitable for querying by primary key and range. 2. The non-clustered index stores index key values and pointers to data rows, and is suitable for non-primary key column queries.

What are some popular MySQL GUI tools (e.g., MySQL Workbench, phpMyAdmin)?

Mar 21, 2025 pm 06:28 PM

What are some popular MySQL GUI tools (e.g., MySQL Workbench, phpMyAdmin)?

Mar 21, 2025 pm 06:28 PM

Article discusses popular MySQL GUI tools like MySQL Workbench and phpMyAdmin, comparing their features and suitability for beginners and advanced users.[159 characters]

How do you handle large datasets in MySQL?

Mar 21, 2025 pm 12:15 PM

How do you handle large datasets in MySQL?

Mar 21, 2025 pm 12:15 PM

Article discusses strategies for handling large datasets in MySQL, including partitioning, sharding, indexing, and query optimization.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.