Some suggestions to help improve lock performance

## Reduce lock holding time

Synchronize only when necessary. This significantly shortens the time a lock is held, lowers the chance of lock contention, and improves concurrency.

For example, when using a synchronized lock, try to apply it only around the code that touches shared variable state, instead of blindly putting synchronized on the entire method. A synchronized instance method locks the object the method is called on for the whole call, which increases the probability of lock contention.
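A minimal sketch of the difference, assuming a hypothetical `RequestLog` class (the class and its methods are illustrative and do not come from the article). Both methods are thread-safe, but the narrow version holds the lock only while the shared list is updated:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example class; all names here are illustrative.
class RequestLog {
    private final List<String> entries = new ArrayList<>();

    // Coarse version: the lock on `this` is held for the whole method,
    // including the formatting work that touches no shared state.
    synchronized void addCoarse(String user, String action) {
        String line = format(user, action);
        entries.add(line);
    }

    // Narrow version: only the update of the shared list is synchronized.
    void addNarrow(String user, String action) {
        String line = format(user, action); // works on local data, no lock needed
        synchronized (this) {
            entries.add(line);
        }
    }

    synchronized int size() {
        return entries.size();
    }

    private static String format(String user, String action) {
        return user.trim().toLowerCase() + ":" + action.trim();
    }
}
```

The more expensive the unsynchronized part is, the more the narrow version helps under contention.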

## Replace exclusive locks with read-write lock separation

I have previously talked about using ReadWriteLock, which separates read locks from write locks, to improve efficiency.
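As a brief reminder, here is a sketch of read-write separation with `ReentrantReadWriteLock`. The `ReadMostlyCache` class is a hypothetical example, not code from the earlier article:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical read-mostly cache guarded by a read-write lock.
class ReadMostlyCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReadWriteLock rw = new ReentrantReadWriteLock();

    String get(String key) {
        rw.readLock().lock();        // shared: several readers may hold it at once
        try {
            return data.get(key);
        } finally {
            rw.readLock().unlock();
        }
    }

    void put(String key, String value) {
        rw.writeLock().lock();       // exclusive: blocks readers and other writers
        try {
            data.put(key, value);
        } finally {
            rw.writeLock().unlock();
        }
    }
}
```

This pays off mainly when reads greatly outnumber writes.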

A further extension of this idea is the lock separation strategy.

Lock separation splits one exclusive lock into several. A typical example of this technique is the LinkedBlockingQueue task queue. As mentioned before, it is a linked-list-based queue that is unbounded by default (its default capacity is Integer.MAX_VALUE). Its take() and put() methods operate on the head and the tail of the queue respectively and do not affect each other, so the JDK implementation provides a separate lock for each of the two operations.

For example, threads executing put() only need to compete for putLock, while threads executing take() compete for takeLock:

    /** Lock held by take, poll, etc */
    private final ReentrantLock takeLock = new ReentrantLock();

    /** Wait queue for waiting takes */
    private final Condition notEmpty = takeLock.newCondition();

    /** Lock held by put, offer, etc */
    private final ReentrantLock putLock = new ReentrantLock();

    /** Wait queue for waiting puts */
    private final Condition notFull = putLock.newCondition();
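To make the two-lock idea concrete, here is a deliberately simplified sketch. It is not the JDK's LinkedBlockingQueue: the hypothetical `TwoLockQueue` below is unbounded, never blocks (`poll` returns `null` when empty), and leaves out the conditions and the element counter. It only shows why the head and the tail can be guarded by different locks:

```java
import java.util.concurrent.locks.ReentrantLock;

// Simplified two-lock queue (illustrative, not the JDK implementation).
class TwoLockQueue<T> {
    private static final class Node<T> {
        T item;
        volatile Node<T> next; // volatile: written under putLock, read under takeLock
        Node(T item) { this.item = item; }
    }

    // `head` always points at a dummy node; the first real element is head.next.
    private Node<T> head = new Node<>(null);
    private Node<T> tail = head;

    private final ReentrantLock takeLock = new ReentrantLock(); // guards head
    private final ReentrantLock putLock = new ReentrantLock();  // guards tail

    void offer(T item) {
        Node<T> node = new Node<>(item);
        putLock.lock();
        try {
            tail.next = node; // link the new node behind the current tail
            tail = node;
        } finally {
            putLock.unlock();
        }
    }

    T poll() {
        takeLock.lock();
        try {
            Node<T> first = head.next;
            if (first == null) {
                return null;   // queue is empty
            }
            T item = first.item;
            first.item = null; // `first` becomes the new dummy node
            head = first;
            return item;
        } finally {
            takeLock.unlock();
        }
    }
}
```

Producers only ever touch `tail` and consumers only ever touch `head`, so a thread calling `offer` never waits for a thread calling `poll`.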

## Lock coarsening

When code repeatedly acquires and releases the same lock in quick succession, those requests can be merged into a single acquisition, which reduces the overhead of locking and unlocking.

For example:

    for (int i = 0; i < n; i++) {
        synchronized (lock) {
        }
    }

This can be optimized to:

    synchronized (lock) {
        for (int i = 0; i < n; i++) {
        }
    }
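A runnable version of the same transformation, using a hypothetical `CoarseningDemo` class with a shared running total (the names are illustrative). Both methods compute the same result; they differ only in how often the lock is acquired:

```java
// Hypothetical example class comparing per-iteration locking with a coarsened lock.
class CoarseningDemo {
    private final Object lock = new Object();
    private long total;

    // Acquires and releases the lock n times.
    void addPerIteration(int n) {
        for (int i = 0; i < n; i++) {
            synchronized (lock) {
                total += i;
            }
        }
    }

    // Coarsened: a single acquisition covers the whole loop.
    void addCoarsened(int n) {
        synchronized (lock) {
            for (int i = 0; i < n; i++) {
                total += i;
            }
        }
    }

    long total() {
        synchronized (lock) {
            return total;
        }
    }
}
```

Coarsening trades lock overhead for a longer holding time, so it fits short loops best. For long-running loops the advice from the first section (keep holding time short) usually wins.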

## Reduce lock granularity

A typical application of this technique is the implementation of ConcurrentHashMap. Unlike HashMap, it is thread-safe; unlike Hashtable, it supports efficient concurrent access.

### ConcurrentHashMap implementation principle

The implementations of ConcurrentHashMap in JDK 1.7 and JDK 1.8 differ considerably.

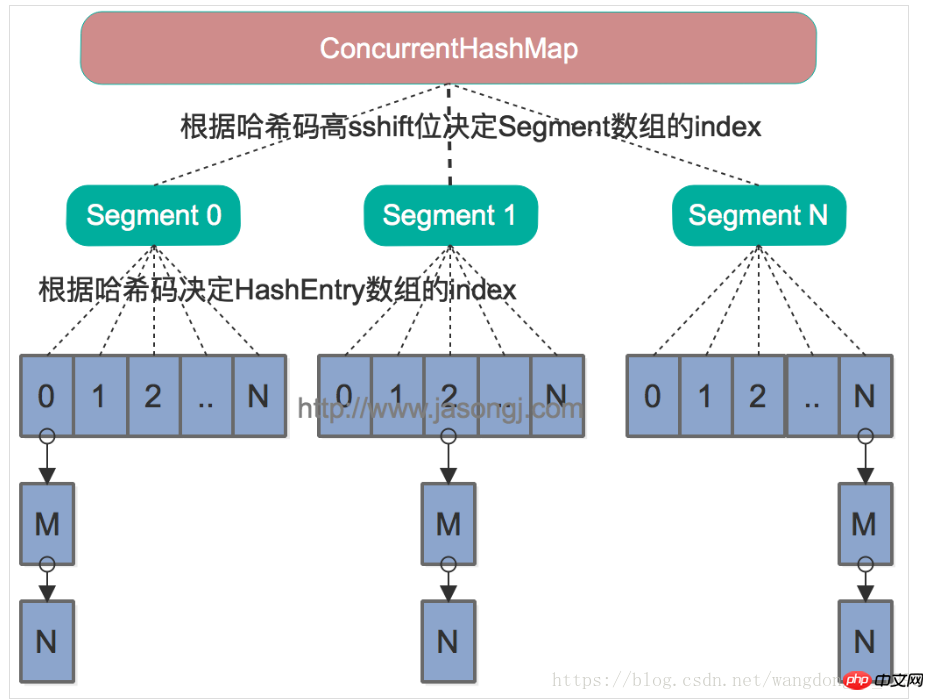

In JDK 1.7, the underlying structure of ConcurrentHashMap is a Segment array with a default size of 16. Each Segment can be regarded as a small HashMap, which means that each Segment is itself implemented with an array and linked lists.

- JDK 1.7: ConcurrentHashMap based on segment locks

For example, to insert a new key-value pair into the map, we first use the hashcode of the key to find the segment it belongs to, and then lock that segment to complete the put operation. Here the segment itself acts as the lock, because the Segment class inherits from ReentrantLock. The lock is not acquired by calling lock() directly, because that method suspends the thread when the lock is unavailable. Instead, a spin lock is used: if tryLock() fails, the lock is held by another thread, so tryLock() is called again in a loop (after a bounded number of retries, the implementation falls back to a blocking lock()). As a result, as long as threads insert data into different segments, they do not compete for the same lock and do not block each other, so true concurrency between threads is achieved.
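The segment-lock idea can be sketched as follows. This is a simplified illustration, not the JDK 1.7 source: the `SegmentedMap` class, the `MAX_SPINS` limit and the use of a plain `HashMap` inside each segment are assumptions made for brevity.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantLock;

// Simplified segment-locked map (illustrative, not the JDK 1.7 implementation).
class SegmentedMap<K, V> {
    // As in the design described above, each segment *is* a lock.
    private static final class Segment<K, V> extends ReentrantLock {
        final Map<K, V> table = new HashMap<>();
    }

    private static final int MAX_SPINS = 64; // hypothetical retry limit
    private final Segment<K, V>[] segments;

    @SuppressWarnings("unchecked")
    SegmentedMap(int segmentCount) {
        segments = new Segment[segmentCount];
        for (int i = 0; i < segmentCount; i++) {
            segments[i] = new Segment<>();
        }
    }

    private Segment<K, V> segmentFor(Object key) {
        return segments[Math.floorMod(key.hashCode(), segments.length)];
    }

    // Spin with tryLock() first, then fall back to a blocking lock().
    private static void spinThenLock(ReentrantLock lock) {
        for (int i = 0; i < MAX_SPINS; i++) {
            if (lock.tryLock()) {
                return;
            }
        }
        lock.lock();
    }

    V put(K key, V value) {
        Segment<K, V> s = segmentFor(key);
        spinThenLock(s);
        try {
            return s.table.put(key, value);
        } finally {
            s.unlock();
        }
    }

    V get(Object key) {
        Segment<K, V> s = segmentFor(key);
        spinThenLock(s);
        try {
            return s.table.get(key);
        } finally {
            s.unlock();
        }
    }

    // Cross-segment operation: every segment must be locked.
    int size() {
        for (Segment<K, V> s : segments) {
            s.lock();
        }
        try {
            int n = 0;
            for (Segment<K, V> s : segments) {
                n += s.table.size();
            }
            return n;
        } finally {
            for (Segment<K, V> s : segments) {
                s.unlock();
            }
        }
    }
}
```

Two threads calling `put` with keys that map to different segments never wait for each other, while `size` shows the cost of an operation that spans all segments. Unlike this sketch, the real JDK 1.7 class reads without locking.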

Problem: cross-segment operations, that is, operations for which the system needs something like a global lock, are more troublesome. The operation has to deal with the lock of every segment, and global information can only be obtained reliably once the locks of all segments have been acquired. For example, the size() method of ConcurrentHashMap may need the locks of all segments (the JDK 1.7 implementation first tries a few passes without locking and falls back to locking every segment if the map keeps changing).

-

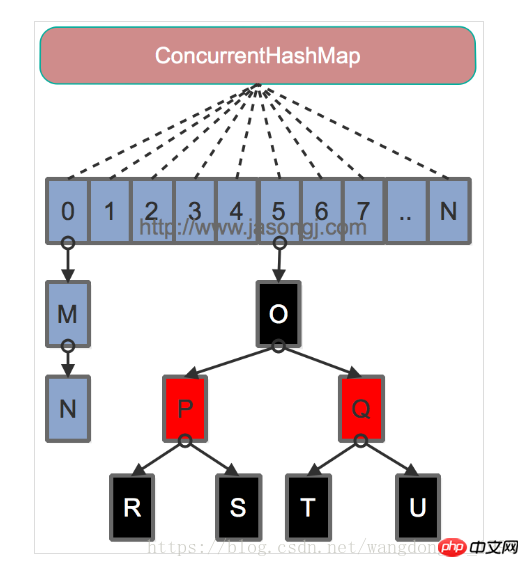

The implementation of jdk 1.8 is based on CAS ConcurrentHashMap

The maximum concurrency of the JDK 1.7 ConcurrentHashMap equals the number of segments. To improve concurrency further, JDK 1.8 abandoned the segment-lock solution and uses a single large array directly. To improve lookup performance under hash collisions, Java 8 also converts a linked list (lookup time complexity O(N)) into a red-black tree (lookup time complexity O(log N)) once the length of the list exceeds a threshold (8). As before, the index of a key in the array is determined by taking the key's hash value modulo the array length.
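Because the array length is always a power of two, the modulo can be computed with a bit mask. The sketch below uses a hypothetical `BinIndex` helper; the `spread` step follows the same idea as the JDK 8 source (fold the high bits into the low bits and clear the sign bit):

```java
// Hypothetical helper showing how a hash is mapped to an array index.
class BinIndex {
    // XOR the high 16 bits into the low 16 bits and clear the sign bit.
    static int spread(int h) {
        return (h ^ (h >>> 16)) & 0x7fffffff;
    }

    // For a power-of-two length, (length - 1) & hash equals hash mod length.
    static int indexFor(int hash, int tableLength) {
        return (tableLength - 1) & hash;
    }
}
```

For example, with a table of length 16 a hash of 21 lands in slot `21 & 15`, which is 5, the same as `21 % 16`.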

For the put operation, if the array element corresponding to the key is null, it is set to the new node with a CAS operation. If the array element corresponding to the key (that is, the head of the linked list or the root of the tree) is not null, the synchronized keyword is used to lock that element, and the operation is then performed under the lock. If the put operation causes the length of the linked list to exceed the threshold, the list is converted into a tree to improve lookup efficiency.
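The put path described above can be sketched like this. It is a heavily reduced illustration, not the JDK 8 source: the hypothetical `CasBinMap` has a fixed-size table (assumed to be a power of two), never resizes, never converts lists into trees, and uses `AtomicReferenceArray` in place of the JDK's internal array access.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Reduced sketch of "CAS for an empty bin, synchronized on the head otherwise".
class CasBinMap<K, V> {
    private static final class Node<K, V> {
        final int hash;
        final K key;
        volatile V value;
        volatile Node<K, V> next;
        Node(int hash, K key, V value) {
            this.hash = hash;
            this.key = key;
            this.value = value;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    // Assumption: capacity is a power of two so that masking works as modulo.
    CasBinMap(int capacity) {
        table = new AtomicReferenceArray<>(capacity);
    }

    private static int spread(int h) {
        return (h ^ (h >>> 16)) & 0x7fffffff;
    }

    V put(K key, V value) {
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node<K, V> head = table.get(i);
            if (head == null) {
                // Empty bin: publish the first node with one CAS, no lock.
                if (table.compareAndSet(i, null, new Node<>(hash, key, value))) {
                    return null;
                }
                // Another thread won the race; loop and retry.
            } else {
                synchronized (head) {           // lock only this bin
                    if (table.get(i) != head) {
                        continue;               // head changed, retry
                    }
                    for (Node<K, V> n = head; ; n = n.next) {
                        if (n.hash == hash && n.key.equals(key)) {
                            V old = n.value;
                            n.value = value;    // replace existing mapping
                            return old;
                        }
                        if (n.next == null) {
                            n.next = new Node<>(hash, key, value); // append
                            return null;
                        }
                    }
                }
            }
        }
    }

    // Reads take no lock; they rely on the volatile value and next fields.
    V get(Object key) {
        int hash = spread(key.hashCode());
        for (Node<K, V> n = table.get((table.length() - 1) & hash); n != null; n = n.next) {
            if (n.hash == hash && n.key.equals(key)) {
                return n.value;
            }
        }
        return null;
    }
}
```

Threads writing to different bins never share a lock, so the achievable concurrency grows with the size of the array instead of being capped by a fixed number of segments.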

For read operations, since the array is declared with the volatile keyword (and its slots are read with volatile semantics), there is no need to worry about the visibility of the array. Each element is a Node instance (in Java 7 each element is a HashEntry). Its key and hash are declared final and cannot be changed, so their visibility after modification is not a concern. Its value and its reference to the next element are declared volatile, so their visibility is guaranteed as well.

size operation: the map maintains an element counter alongside its array. The put and remove methods both update it through the addCount method, and the size method uses sumCount to read the total maintained by addCount.

It is important to note that the count is not kept in a single global counter that has to be locked. This is done for the sake of concurrency: updating the counter never requires locking the entire ConcurrentHashMap. Note also that the count is volatile, which makes any update to it immediately visible to other threads.
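The JDK 8 counter cells are adapted from `LongAdder`, so `LongAdder` can stand in for them in a small sketch. The `SizeCounter` class and its method names below are hypothetical; `onPut` and `onRemove` play the role of `addCount`, and `size` plays the role of `sumCount`:

```java
import java.util.concurrent.atomic.LongAdder;

// Hypothetical stand-in for the map's internal size bookkeeping.
class SizeCounter {
    // LongAdder spreads updates over several cells to avoid a single hot spot.
    private final LongAdder count = new LongAdder();

    void onPut() {
        count.increment();   // lock-free update
    }

    void onRemove() {
        count.decrement();
    }

    long size() {
        return count.sum();  // adds up all cells; a snapshot, not an atomic read
    }
}
```

Because updates are spread over several cells, many threads can adjust the count at once, at the price of `size` being only a moment-in-time estimate while updates are in flight.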
