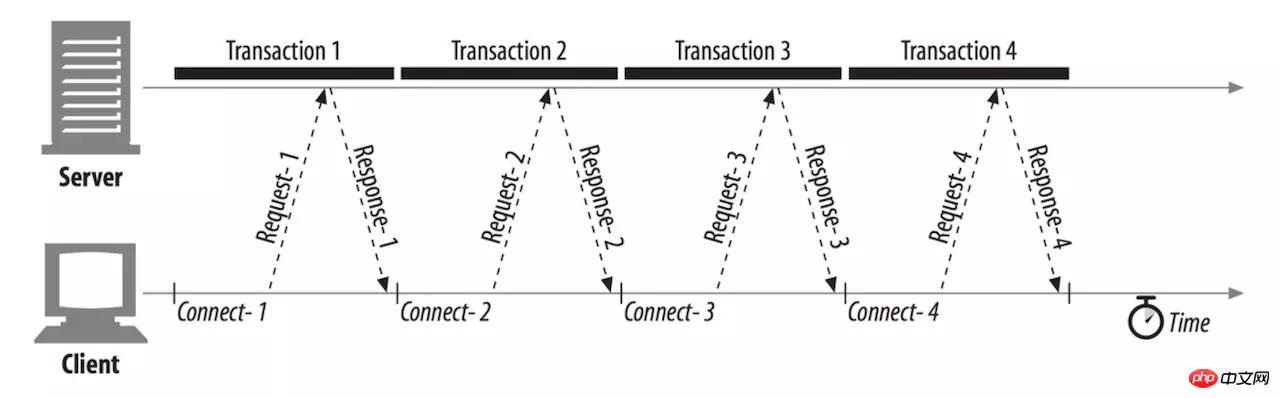

Serial connection

HTTP/0.9 and early HTTP/1.0 process HTTP requests serially. Suppose a page references 3 style files, all served from the same protocol, domain name, and port. The browser then has to issue four requests in total (one for the HTML document and three for the style files), and it can only have one TCP connection open at a time. As soon as a requested resource has been downloaded, the connection is closed and a new connection is opened for the next request in the queue. As the size and number of page resources grow, network latency keeps accumulating, and users are left facing a blank screen until they lose patience.
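The accumulation of latency in the serial case can be illustrated with a rough model. This is only a sketch under simple assumptions: the function name `serial_load_time` and all timing values are hypothetical, and each request is assumed to pay one fixed connection setup cost plus its own transfer time.

```python
def serial_load_time(transfer_ms, handshake_ms):
    """Total load time when each request opens its own TCP connection in turn.

    Hypothetical model: every request pays one connection setup cost plus its
    own transfer time, and nothing overlaps.
    """
    return sum(handshake_ms + t for t in transfer_ms)


# One HTML document plus three style files (illustrative numbers, not measurements).
transfer_ms = [300, 100, 100, 100]
total_ms = serial_load_time(transfer_ms, handshake_ms=50)
print(total_ms)  # 800
```

Every extra resource adds its full setup and transfer time to the total, which is why the delay grows linearly with the number of resources.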

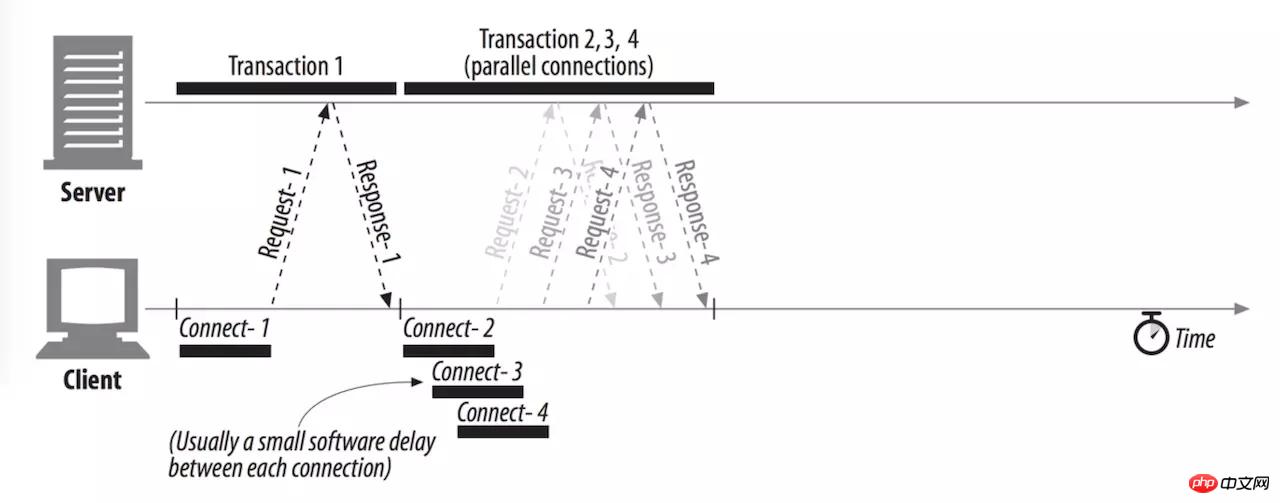

Parallel connection

To improve network throughput, the improved HTTP protocol allows the client to open multiple TCP connections at the same time, request multiple resources in parallel, and make full use of the available bandwidth. The connections are usually started with a small delay between them, but the transfer times of the requests overlap, so the overall delay is much lower than with serial connections. Because each connection consumes system resources and the server has to handle concurrent requests from a large number of users, browsers limit the number of concurrent connections per domain. Although the RFC does not mandate a specific limit, each browser vendor applies its own:

IE 7: 2

IE 8/9: 6

IE 10: 8

IE 11: 13

Firefox: 6

Chrome: 6

Safari: 6

Opera: 6

iOS WebView: 6

Android WebView: 6
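The effect of such a per-domain connection limit can be estimated with a small scheduling model. This is a sketch, not how any browser is actually implemented: the function name `parallel_load_time` and the timings are hypothetical, requests are assumed to start in queue order as soon as a connection slot is free, and each request still opens its own connection.

```python
import heapq


def parallel_load_time(transfer_ms, handshake_ms, max_connections=6):
    """Finish time of the last request when at most max_connections run at once.

    Hypothetical model: requests start in queue order, each on the slot that
    becomes free first, and each pays its own connection setup cost.
    """
    # Each heap entry is the time at which a connection slot becomes free.
    slots = [0] * min(max_connections, len(transfer_ms))
    heapq.heapify(slots)
    finish = 0
    for t in transfer_ms:
        start = heapq.heappop(slots)      # earliest free slot
        end = start + handshake_ms + t
        heapq.heappush(slots, end)
        finish = max(finish, end)
    return finish


# Eight sub-resources of equal size (illustrative numbers, not measurements).
resources = [100] * 8
print(parallel_load_time(resources, 50, max_connections=6))  # 300
print(parallel_load_time(resources, 50, max_connections=2))  # 600
print(parallel_load_time(resources, 50, max_connections=1))  # 1200
```

With a limit of 6, the first six requests overlap completely and the remaining two wait for a free slot. With a limit of 1 the model degenerates into the serial case.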

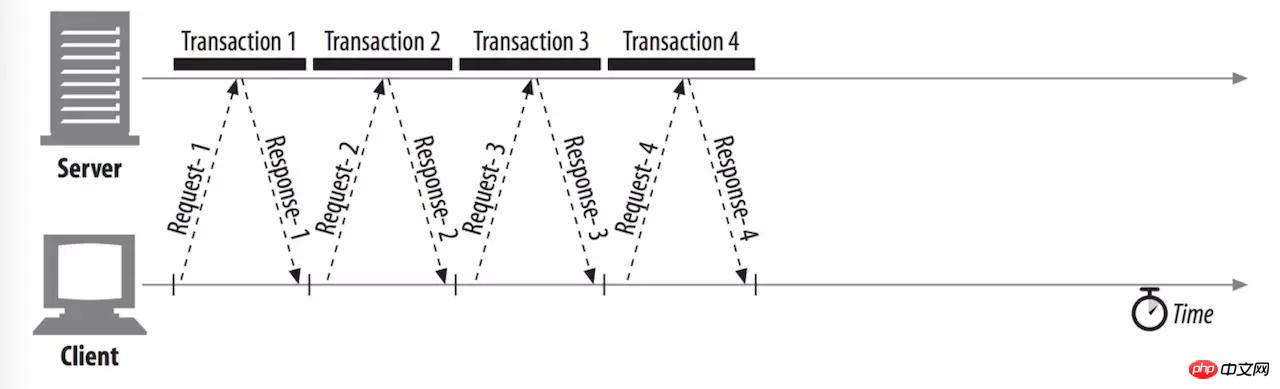

Persistent connection (long connection)

Early HTTP used a separate TCP connection for every request, which added the overhead of connection establishment, congestion control, and connection release to each one. Improved HTTP/1.0 and HTTP/1.1 (where it is the default) both support persistent connections. When a request completes, the connection is not closed immediately. Instead, it is kept open for a certain period so that upcoming HTTP requests can be handled quickly over the same TCP connection, until the client heartbeat detection fails or the server connection times out. This feature can be enabled with the HTTP header Connection: keep-alive, and the client can send Connection: close to close the connection actively. The two optimizations, parallel connections and persistent connections, therefore complement each other: parallel connections let the first page load open multiple TCP connections at the same time, while persistent connections let subsequent requests reuse the connections that are already open. This is also the common mechanism used by modern Web pages.
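The difference between reusing a connection and opening a new one per request can be observed locally with Python's standard library. The following is a minimal sketch: `CountingHandler`, `count_connections`, and the request paths are hypothetical names chosen for illustration, and the server is a throwaway instance bound to 127.0.0.1 on a free port.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CountingHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # lets http.server keep connections open

    def setup(self):
        super().setup()
        # One handler instance is created per accepted TCP connection.
        self.server.connections_opened += 1

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def count_connections(paths, keep_alive):
    """Fetch every path and return how many TCP connections the server accepted."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), CountingHandler)
    server.connections_opened = 0
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
        for path in paths:
            value = "keep-alive" if keep_alive else "close"
            conn.request("GET", path, headers={"Connection": value})
            conn.getresponse().read()  # drain the body before the next request
            if not keep_alive:
                conn.close()  # the next request() reconnects automatically
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return server.connections_opened


paths = ["/a.css", "/b.css", "/c.css"]
print("keep-alive:", count_connections(paths, keep_alive=True))
print("close:     ", count_connections(paths, keep_alive=False))
```

With keep-alive, the three requests should share a single connection, while with Connection: close each request should cause a new one to be accepted.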

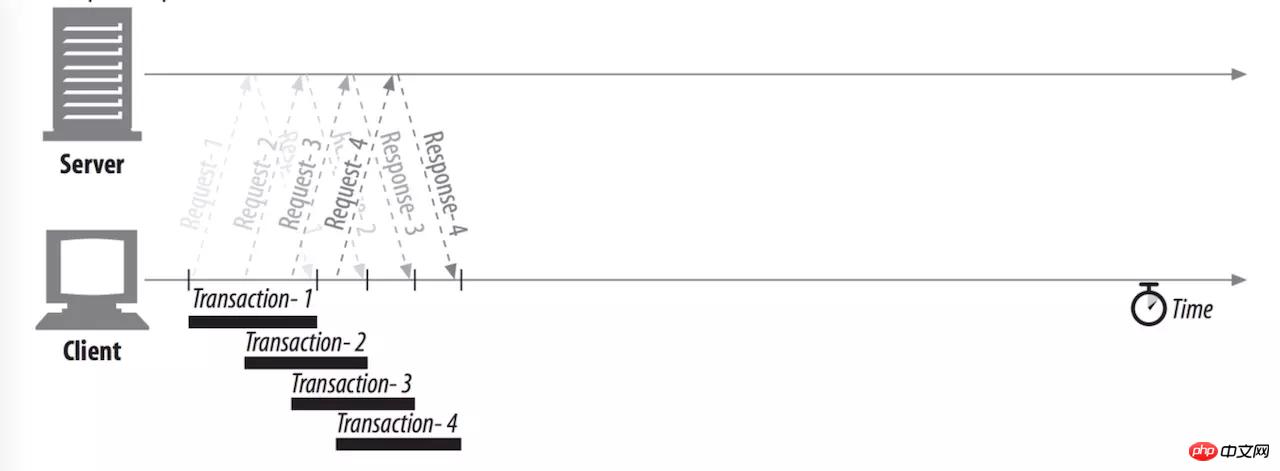

Pipelined connection

Persistent connections let us reuse one connection for multiple requests, but the requests must follow a FIFO queue order: the previous request has to reach the server, be processed, and have its response come back before the next request in the queue can be sent. HTTP pipelining allows the client to send multiple requests back to back on the same TCP connection without waiting for the responses, which removes the round-trip wait between requests. In practice, however, HTTP/1.x does not allow the data of different responses to arrive interleaved on one connection (multiplexing), so responses must be returned in the order in which the requests were sent. Imagine that the client sends one HTML request and several CSS requests at the same time, and the server processes all of them in parallel. When all the CSS requests have been processed and their responses added to the buffer queue, the HTML request runs into a problem and hangs indefinitely. The buffered CSS responses cannot be sent ahead of it, and in serious cases the buffer may even overflow. This situation is called head-of-line blocking. For this reason, pipelining has not been widely adopted in practice with HTTP/1.x.

Head-of-line blocking is not unique to HTTP; it is a common phenomenon in buffered network switching.
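Head-of-line blocking on a pipelined connection can be sketched in a few lines. The model below is an illustration under stated assumptions, not a protocol implementation: `in_order_delivery` is a hypothetical helper, the timings are made up, transfer time is ignored, and the server is assumed to process all requests in parallel but to release responses strictly in request order.

```python
from itertools import accumulate


def in_order_delivery(ready_ms):
    """Earliest time each response can leave when responses must keep request order.

    ready_ms[i] is the time at which the server has finished processing request i.
    A response cannot be sent before every earlier response has been sent, so its
    delivery time is the running maximum of the ready times.
    """
    return list(accumulate(ready_ms, max))


# A slow HTML request at the head of the queue delays three fast CSS responses.
print(in_order_delivery([5000, 100, 100, 100]))  # [5000, 5000, 5000, 5000]

# The same requests in a different order: only the slow one is late.
print(in_order_delivery([100, 100, 100, 5000]))  # [100, 100, 100, 5000]
```

In the first case the CSS responses are ready after 100 ms but stay buffered for almost five seconds, which is the situation described above.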


Summary

1. For the same protocol, domain name, and port, the browser allows multiple TCP connections to be open at the same time, and the usual upper limit is 6.

2. Multiple HTTP requests can be sent over the same TCP connection, but each request must wait until the response to the previous request has reached the client.

3. Because of head-of-line blocking, the client does not send all the requests in the queue at once. This problem has been addressed in HTTP/2.
