This article explains what Python's GIL (Global Interpreter Lock) is, why it exists, and how it affects multi-threaded programs.

The GIL (Global Interpreter Lock) is not a feature of the Python language. It is a concept introduced by CPython, one implementation of the Python interpreter. CPython is not the only interpreter: if you run your code on Jython, for example, there is no GIL.

Let’s take a look at the official explanation:

In CPython, the global interpreter lock, or GIL, is a mutex that prevents multiple native threads from executing Python bytecodes at once. This lock is necessary mainly because CPython's memory management is not thread-safe. (However, since the GIL exists, other features have grown to depend on the guarantees that it enforces.)

In short, the GIL is a mutex that prevents multiple native threads from executing Python bytecode at the same time. The main reason is that CPython's memory management is not thread-safe.

Because of physical limitations, CPU manufacturers' competition over core frequency has given way to competition over core count. Multi-threaded programming emerged to make better use of multi-core processors, and with it came the difficulty of keeping data consistent and state synchronized between threads. Even the caches inside a CPU are no exception: manufacturers have put a great deal of effort into keeping data synchronized across multiple caches, which inevitably costs some performance.

Python is no exception. To take advantage of multiple cores, Python began to support multi-threading, and the simplest way to guarantee data integrity and state synchronization between threads is a lock. Hence the GIL, one very big lock. As more and more library developers accepted this design, they came to rely on it heavily: they assume that Python's internal objects are thread-safe by default, so their implementations do not need additional locks or synchronization.

Over time this design proved painful and inefficient. But when people tried to split up or remove the GIL, they found that a large body of library code already depended on it heavily, which makes removal very difficult. How difficult? As an analogy, a "small project" like MySQL took nearly five years, across 5.5, 5.6, and 5.7, to split the Buffer Pool Mutex into smaller locks, and that work is still continuing. If MySQL, a product with corporate backing and a fixed development team, has such a hard time, it is harder still for Python, whose core developers and code contributors are largely a community effort.

So, put simply, the GIL exists mostly for historical reasons. If Python were designed again from scratch, the multi-threading problem would still have to be faced, but the solution would at least be more elegant than the current GIL.

Judging from the description above and the official definition, the GIL is a global exclusive lock, and a global lock clearly has a large impact on multi-threaded efficiency. It is almost as if Python were single-threaded. Readers might object that efficiency should not suffer as long as the lock is released at the right moments: if the GIL is released during time-consuming IO operations, throughput can still improve, and in the worst case performance should be no worse than a single thread. That is true in theory. In practice, Python is worse than you might think.

Let's compare Python's efficiency with multiple threads and with a single thread. The test is simple: a counter function that loops 100 million times. In one version the function is executed twice in sequence; in the other it is executed by two threads at the same time. We then compare the total execution time. The test environment is a dual-core Mac Pro. Note: to reduce the effect of the thread library's own overhead on the results, the single-threaded version also uses threads. It simply runs them one after the other to simulate a single thread.

Sequential version (each thread is joined before the next one starts):

    #! /usr/bin/python
    from threading import Thread
    import time

    def my_counter():
        i = 0
        for _ in range(100000000):
            i = i + 1
        return True

    def main():
        start_time = time.time()
        for tid in range(2):
            t = Thread(target=my_counter)
            t.start()
            t.join()  # wait for this thread before starting the next one
        end_time = time.time()
        print("Total time: {}".format(end_time - start_time))

    if __name__ == '__main__':
        main()

Concurrent version (both threads are started before either is joined):

    #! /usr/bin/python
    from threading import Thread
    import time

    def my_counter():
        i = 0
        for _ in range(100000000):
            i = i + 1
        return True

    def main():
        thread_array = {}
        start_time = time.time()
        for tid in range(2):
            t = Thread(target=my_counter)
            t.start()  # start both threads without waiting
            thread_array[tid] = t
        for i in range(2):
            thread_array[i].join()
        end_time = time.time()
        print("Total time: {}".format(end_time - start_time))

    if __name__ == '__main__':
        main()

In this test, the multi-threaded version was about 45% slower than the sequential one. According to the earlier analysis, even with the GIL in place, multi-threading that has been serialized by the lock should be about as efficient as a single thread. So how can the result be this bad?

Let us analyze the reasons for this through the implementation principles of GIL.

The Python community's view is that the operating system's thread scheduling is already mature and stable, so there is no need to build another scheduler. A Python thread is therefore a native pthread, scheduled by the operating system's own algorithm (CFS on Linux, for example). To give each thread a fair share of CPU time, Python counts the bytecode instructions executed so far and forces the GIL to be released once the count reaches a threshold. Releasing the GIL also gives the operating system a chance to schedule another thread (whether a context switch actually happens is up to the operating system).

Pseudocode:

    while True:
        acquire GIL
        for i in 1000:
            do something
        release GIL
        # give the operating system a chance to do thread scheduling

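This model works without any problem when there is only one CPU core. Any thread that is woken up can acquire the GIL, because thread scheduling is only triggered once the GIL has been released. With multiple cores, however, trouble starts. As the pseudocode shows, there is almost no gap between release GIL and acquire GIL. By the time a thread on another core has been woken up, the main thread has in most cases already reacquired the GIL. The woken thread can only waste CPU time, watching the other thread run happily with the GIL in hand. When its time slice ends it goes back to waiting to be scheduled, is woken again, waits again, and the vicious cycle repeats.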
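The loop in the pseudocode can be mimicked with a toy model. The sketch below is only an illustration under stated assumptions: `toy_gil` is an ordinary `threading.Lock` standing in for the GIL, and `worker`, `run_toy`, and `longest_streak` are hypothetical helper names, not anything from CPython. It records which worker held the lock for each batch, so you can see how often the same thread grabs the lock again right after releasing it.

```python
import threading

toy_gil = threading.Lock()  # stand-in for the GIL, not CPython's real lock
holders = []                # which worker held the lock for each batch


def worker(name, batches, steps=1000):
    for _ in range(batches):
        with toy_gil:                 # acquire GIL
            holders.append(name)
            for _ in range(steps):    # do something
                pass
        # lock released here; the loop tries to re-acquire it immediately


def run_toy(batches=50):
    """Run two workers and return the order in which they held the lock."""
    holders.clear()
    threads = [threading.Thread(target=worker, args=(name, batches))
               for name in ("A", "B")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return list(holders)


def longest_streak(order):
    """Length of the longest run of consecutive batches by one worker."""
    best = current = 0
    previous = None
    for name in order:
        current = current + 1 if name == previous else 1
        best = max(best, current)
        previous = name
    return best


if __name__ == "__main__":
    order = run_toy()
    print("batches:", len(order), "longest streak:", longest_streak(order))
```

The streak length varies from run to run and from machine to machine. A long streak means one worker kept reacquiring the lock while the other waited, which is the pattern described above.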

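PS: This implementation is, of course, primitive and ugly, and each Python release has gradually improved the interaction between the GIL and thread scheduling. Examples include trying to keep hold of the GIL across a thread context switch and releasing the GIL while waiting on IO. What cannot be changed is that the GIL makes operating system thread scheduling, already an expensive operation, even more costly.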
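One such improvement can be observed from Python code. In CPython 3.2 and later, the instruction-count threshold was replaced by a time-based switch interval, exposed through `sys.getswitchinterval()` and `sys.setswitchinterval()`. The sketch below assumes such an interpreter; `run_with_switch_interval` is a hypothetical helper name used only for illustration.

```python
import sys


def run_with_switch_interval(seconds, func):
    """Call func() with a temporary switch interval, then restore the old one.

    Assumes CPython 3.2+, where a thread holding the GIL is asked to give
    it up after roughly `seconds` if another thread is waiting.
    """
    previous = sys.getswitchinterval()
    sys.setswitchinterval(seconds)
    try:
        return func()
    finally:
        sys.setswitchinterval(previous)


if __name__ == "__main__":
    print("current interval:", sys.getswitchinterval())
    print("inside helper:", run_with_switch_interval(0.001, sys.getswitchinterval))
```

A shorter interval makes threads hand over the GIL more often, which may improve responsiveness at the cost of more switching. It does not let two threads run Python bytecode at the same time.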

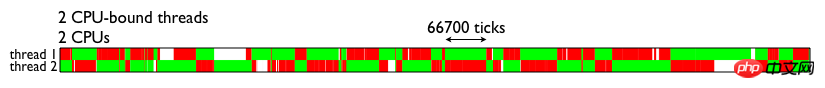

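To get an intuitive sense of the GIL's performance impact on multi-threading, we borrow a test result chart here (see the figure). It shows two threads running on a dual-core CPU, both doing CPU-bound work. Green segments mean the thread is running and doing useful computation. Red segments mean the thread has been scheduled and woken up but cannot acquire the GIL, so it spends that time waiting without doing useful work.

As the figure shows, the GIL prevents multi-threading from making good use of a multi-core CPU's parallel processing capability.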

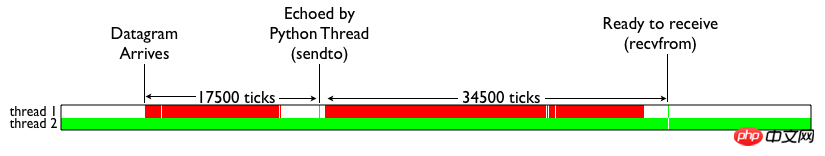

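So can Python's IO-bound threads benefit from multi-threading? Let's look at another test result. The colors mean the same as in the previous figure, and white segments mean the IO thread is waiting. As the figure shows, when the IO thread receives a packet and a context switch is triggered, it still cannot acquire the GIL because a CPU-bound thread is present, so it keeps waiting in a seemingly endless loop.

To summarize: on a multi-core CPU, Python multi-threading only has a positive effect for IO-bound work. As soon as at least one CPU-bound thread is present, multi-threaded efficiency drops sharply because of the GIL.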
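The IO-bound half of this summary is easy to check with a small sketch. It assumes that `time.sleep` is an acceptable stand-in for a blocking IO call (it releases the GIL while waiting); `fake_io`, `run_sequential`, and `run_threaded` are hypothetical names chosen for this example.

```python
import threading
import time


def fake_io(seconds=0.2):
    """Stand-in for a blocking IO call; time.sleep releases the GIL."""
    time.sleep(seconds)


def run_sequential(n=2):
    """Perform n fake IO calls one after another; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(n):
        fake_io()
    return time.perf_counter() - start


def run_threaded(n=2):
    """Perform n fake IO calls in n threads; return elapsed seconds."""
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_io) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print("sequential: {:.2f}s".format(run_sequential()))
    print("threaded:   {:.2f}s".format(run_threaded()))
```

With two waits of 0.2 seconds each, the sequential run should take roughly twice as long as the threaded run, because the waits overlap while the GIL is released.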

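The multiprocessing library exists largely to make up for the inefficiency that the GIL imposes on the threading library. It replicates the interface that threading provides, which makes migration easy. The only difference is that it uses multiple processes instead of multiple threads. Each process has its own independent GIL, so processes do not compete with each other for a GIL.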

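multiprocessing is not a cure-all, of course. It makes data communication and synchronization between workers harder to implement. Take a counter as an example. If several threads need to add to the same variable, with threading you declare a global variable, wrap three lines in a `threading.Lock` context, and you are done. With multiprocessing, processes cannot see each other's data, so you have to declare a Queue in the main process and use put and get, or use shared memory. This extra implementation cost makes multi-threaded programming, which was already painful, even more painful.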
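The two counter approaches can be sketched side by side. This is a minimal illustration under several assumptions: `INCREMENTS`, `threaded_total`, `process_total`, and `_count_and_report` are hypothetical names, and the sketch prefers the "fork" start method where it is available so that it runs without extra setup. On platforms that spawn new processes (Windows, for example), `process_total` should be called under an `if __name__ == "__main__":` guard.

```python
import multiprocessing
import threading

INCREMENTS = 10000  # hypothetical workload size per worker


def threaded_total(n_workers=2):
    """Threads share memory: one counter guarded by a Lock."""
    state = {"count": 0}
    lock = threading.Lock()

    def work():
        for _ in range(INCREMENTS):
            with lock:  # serialize the read-modify-write
                state["count"] += 1

    threads = [threading.Thread(target=work) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["count"]


def _count_and_report(queue):
    """Runs in a child process: count locally, then send the subtotal back."""
    local = 0
    for _ in range(INCREMENTS):
        local += 1
    queue.put(local)


def process_total(n_workers=2):
    """Processes share nothing: subtotals travel through a Queue."""
    # Assumption: use "fork" when the platform offers it, else the default.
    methods = multiprocessing.get_all_start_methods()
    ctx = multiprocessing.get_context("fork" if "fork" in methods else None)
    queue = ctx.Queue()
    procs = [ctx.Process(target=_count_and_report, args=(queue,))
             for _ in range(n_workers)]
    for p in procs:
        p.start()
    total = sum(queue.get() for _ in procs)  # collect before joining
    for p in procs:
        p.join()
    return total


if __name__ == "__main__":
    print("threads:  ", threaded_total())
    print("processes:", process_total())
```

Both functions should return the same total. The process version needs a separate top-level worker function and explicit message passing, which is the extra cost described above.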

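As mentioned earlier, the GIL is a product of CPython only, so are other interpreters better? Yes: thanks to the features of their implementation languages, interpreters such as Jython and IronPython do not need a GIL. However, because they are implemented in Java and C#, they also lose the chance to use the many useful C extension modules the community has built. As a result these interpreters have always remained fairly niche. After all, when choosing between functionality and performance, most people pick functionality at first. Done is better than perfect.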
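Since the GIL depends on the interpreter rather than on the language, it can help to check which implementation a script is running on. The sketch below uses the standard `platform.python_implementation()` call; `interpreter_name` and `running_on_cpython` are hypothetical helper names.

```python
import platform


def interpreter_name():
    """Return the implementation name, e.g. 'CPython', 'Jython', 'IronPython'."""
    return platform.python_implementation()


def running_on_cpython():
    """True when the script runs on CPython, the interpreter discussed here."""
    return interpreter_name() == "CPython"


if __name__ == "__main__":
    print("interpreter:", interpreter_name())
    print("running on CPython:", running_on_cpython())
```

This only reports the implementation name. It is a hint about whether the GIL discussion applies, not a direct test for the presence of a GIL.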

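The Python GIL is really the product of a trade-off between functionality and performance. There are good reasons for its existence, and there are objective factors that make it hard to change. From the analysis in this article, we can draw a few simple conclusions:

Because of the GIL, multi-threading only performs well in IO-bound scenarios.

For programs that need high parallel computing performance, consider writing the core parts as C modules, or simply implementing them in another language.

The GIL will remain for a long time to come, but it will keep being improved.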
