Database

Database

Mysql Tutorial

Mysql Tutorial

A brief discussion on the communication principle of MySQL JDBC StreamResult

A brief discussion on the communication principle of MySQL JDBC StreamResult

A brief discussion on the communication principle of MySQL JDBC StreamResult

This article brings you a brief discussion of the MySQL JDBC StreamResult communication principle. It has certain reference value. Friends in need can refer to it. I hope it will be helpful to you.

Anyone who has used MySQL JDBC to read a large amount of data (for example, more than 1GB) should know that the memory is likely to overflow the Java heap when reading, and our solution is statement .setFetchSize(Integer.MIN_VALUE) and ensure that the cursor is read-only and scrolls forward (the default value of the cursor). You can also cast the type to com.mysql.jdbc.StatementImpl and then call its internal method: enableStreamingResults() In this way, reading the data memory will not hang, and the effect achieved by the two is the same. Of course, useCursorFetch can also be used, but the test result performance of this method is much slower than that of StreamResult. Why? This article will explain its general principles.

I have introduced in some previous articles and books that the internal processing code of MySQL JDBC is divided into three different classes to complete, but I have never delved into the relationship between the database and JDBC. How is the communication process between them? For a while, I have always thought that this is a server-side behavior or a behavior of cooperation between the client and the server, but it is not the case. Today we will talk about what this behavior is.

[Review simple communication first]:

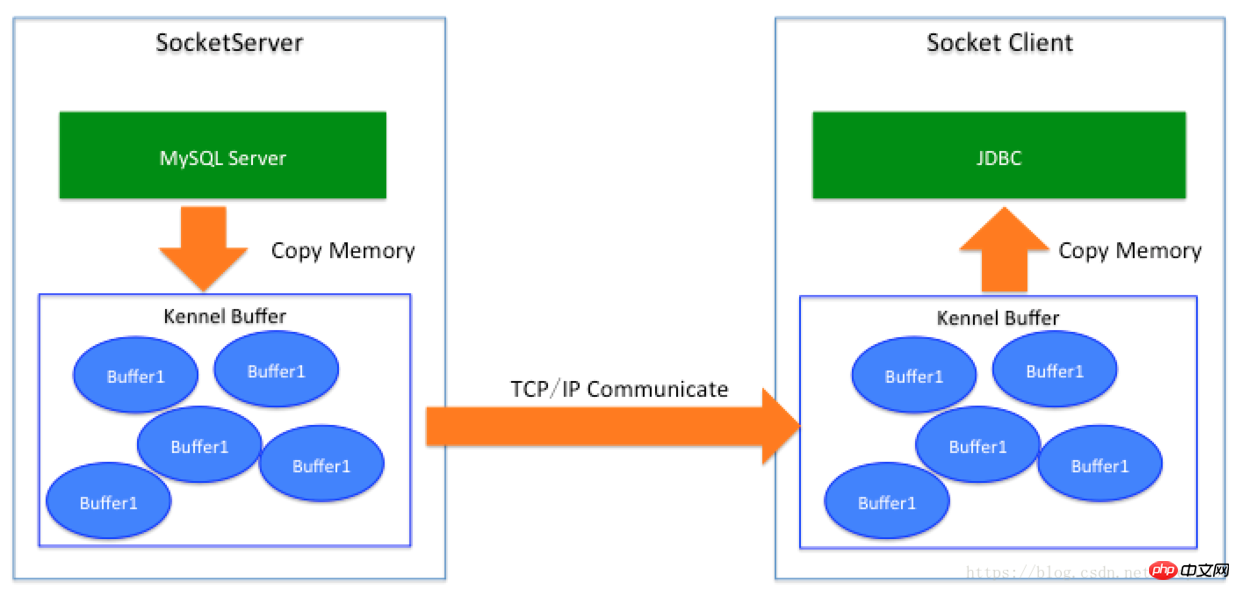

The communication between JDBC and the database is completed through Socket, so we can treat the database as a SocketServer provider Square, so when SocketServer returns data (similar to the return of SQL result sets) the process is: server program data (database) -> Kernel Socket Buffer -> Network -> Client Socket Buffer -> Client End program (JVM memory where JDBC is located)

So far, the JDBC that everyone has seen in the IT industry is: MySQL JDBC, SQL Server JDBC, PG JDBC, Oracle JDBC. Even for NoSQL Clients: Redis Client, MongoDB Client, Memcached, data return basically follows the same logic.

[Why does it hang when using MySQL JDBC to read data directly by default? 】

(1) The SQL result set initiated by MySQL Server all outputs data through OutputStream, that is, writes data to the socket buffer corresponding to the local Kennel. This is a memory copy. (Memory copying is not the focus of this article).

(2) At this time, when the Kennel's Buffer has data, it will send the data back through the TCP link (Socket link actively initiated by JDBC). At this time, the data will be sent back to the machine where JDBC is located. On, it will first enter the Kennel area and also enter a Buffer area.

(3) After JDBC initiates a SQL operation, the Java code blocks on the inputStream.read() operation. When there is data in the buffer, it will be awakened and then read the data in the buffer. To Java memory, this is a memory copy on the JDBC side.

(4) Next, MySQL JDBC will continue to read the buffer data into Java memory, and MySQL Server will continue to send data. Note that before the data is completely assembled, the SQL operation initiated by the client will not respond, which means that it gives you the feeling that the MySQL server has not responded yet. In fact, the data has been sent locally, and JDBC has not yet returned the result set to the place where the execute method was called. the first piece of data, but continuously reads data from the buffer.

(5) The key is that this fool will read the contents of the entire table into Java memory like a handful of data, regardless of whether it is stored at home. First, FULL GC, The next step is memory overflow.

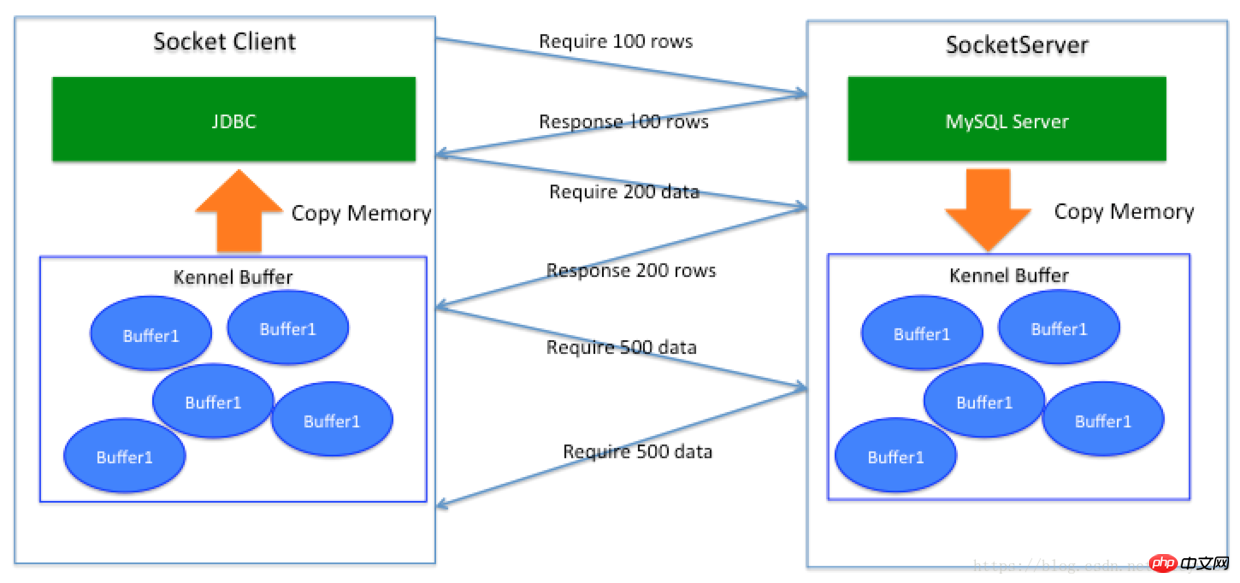

[Setting useCursorFetch=true on the JDBC parameters can solve the problem]

This solution can indeed solve the problem with the FetchSize setting. This solution actually tells the MySQL server that I want to How much data, how much data is required each time, the communication process is a bit like this:

This is just like in our lives, what I need is Go to the supermarket and buy as much as you need. However, this kind of interaction is not like online shopping now. You can have things delivered to your home while sitting at home. It must be walked (network link), which means it requires network time overhead. If the data has 100 million data, set FetchSize to If it is 1000, there will be 100,000 round-trip communications; if the network delay is 0.02ms in the same computer room, then 100,000 communications will add 2 seconds, which is not a big deal. So if the delay time is 2ms across computer rooms, it will be 200 seconds longer (that is, 3 minutes and 20 seconds). If the delay time is 10~40ms across cities in China, then the time will be 1000~4000 seconds. What if it is 200~300ms across countries? The time will be more than ten hours longer.

In the calculations here, we have not included the increased number of system calls, the increased number of contexts for threads to wait and wake up, and the impact of network packet retransmissions on overall performance. Therefore, this solution is It seems reasonable, but the performance is indeed not very good.

In addition, since MySQL does not know when the client has finished consuming the data, and its corresponding table may have DML write operations, MySQL needs to create a temporary table space to store the data that needs to be taken away. Therefore, when you enable useCursorFetch to read a large table, you will see several phenomena on MySQL:

(1) IOPS surges because there are a large number of IO reads. If it is an ordinary hard disk, it may Will cause jitter in business writing

(2) The disk space soars. This temporary space may be larger than the original table. If this table occupies a large proportion in the entire database, it may cause database disk write If full, the space will be reclaimed by MySQL after the result set is read or when the client initiates Result.close().

(3) The CPU and memory will increase by a certain percentage, which is determined by the CPU’s capabilities.

(4) After the client JDBC initiates SQL, it waits for a long time for SQL response data. During this time, the server is preparing data. This waiting is different from the original JDBC method of not setting any parameters. It also shows waiting. The internal principles are different. The former keeps reading data from the network buffer and does not respond to the business. Now the MySQL database is preparing temporary data space and does not respond to JDBC.

[Stream reading data]

We know that the first method will cause Java to hang, and the second method is inefficient and has a greater impact on the MySQL database. , the client response is also slow, it can only solve the problem, so now let’s look at the Stream reading method.

As mentioned earlier, when you use statement.setFetchSize(Integer.MIN_VALUE) or com.mysql.jdbc.StatementImpl.enableStreamingResults(), you can enable Stream to read the result set. FetchSize cannot be used before initiating execute. Set it manually and make sure the cursor is FORWARD_ONLY.

This method is amazing. It seems that the memory is no longer hung up, the response is faster, and the impact on MySQL is also smaller. At least the IOPS will not be so large, and the disk usage will be gone. In the past, I only saw separate code in JDBC and thought it was another communication protocol between MySQL and JDBC. Little did I know that it turned out to be "client behavior". Yes, you read that right, it is the client. Behavior.

When it initiates enableStreamingResults(), it will hardly do any interaction with the server. That is, the server will return the data according to method 1, then the server will push the data into the buffer hard, and the client will How do you withstand the pressure?

In JDBC, when you enable Stream result set processing, it does not read all the data into Java memory at once, that is, the data in Figure 1 is not read at once. The Java buffer reads one package at a time (this package can be understood as a byte[] array in Java). It can read up to so many at a time, and then it will see whether to continue reading downwards to ensure the integrity of the data. . The business code is parsed into lines based on bytes and used by the business side.

The server just starts to push data into the buffer, and the data will also fill up the client's kernel buffer. When the buffers on both sides are full, a Buffer on the server tries to pass through TCP When the data is sent to the receiver, the consumer's buffer is also full at this time, so the sender's thread will be blocked, waiting for the other party to consume. If the other party consumes part of it, it can push part of the data to it. The connection seems to be that before the JDBC Stream data has time to be consumed, if the buffer data is full, then the thread of MySQL sending data will be blocked, thus ensuring a balance (for this, you can use Java's Socket to try Is this the case below).

For the JDBC client, the data is obtained in the local kernel buffer every time, just a distance away from the express parcel box in the community, so it is naturally more expensive than the RT every time it goes to the supermarket. It is much smaller, and this process is prepared data, so there is no IO blocking process (unless the data passed by the MySQL server is not as fast as the consumer to process the data, then generally only the consumer does not do any business and gets This happens only when the data is directly discarded in the test code). At this time, no matter: across computer rooms, across regions, or across countries, as long as the server starts to respond, data will be continuously passed over, and this action is even the first one. The method is also a process that must be experienced.

Compared with the first method, JDBC will not cause memory overflow when used. Even if a large table is read without memory overflow, it will take a long time to respond; however, this method is more suitable than method 1. The impact on the database is relatively large. During the process of transferring data, the corresponding data rows will be locked (to prevent modification). Using InnoDB will perform segmented locking, while using MyISAM will add full table locks, which may cause business blocking.

[Theoretically you can go further, as long as you are willing]

Theoretically this method is better, but in terms of perfectionism, we can continue Let's talk about it. For lazy people, we don't even have the motivation to go to the express parcel box downstairs in the community to get it. What we think about is if someone brings it to my home and puts it in my mouth, even in my mouth. It would be great if I broke it apart.

Technically, this can indeed be done in theory, because it takes time for JDBC to copy memory from the kernel to Java. If another person does this, it will not work while I am doing other things at home. It was delivered to my home. When I need it, I come directly from home. This saves time. Every mistake is indeed saved for you, but the question is who will send it?

You must add a thread to the program to do this, copy the kernel data to the application memory, and even parse it into rows of data for the application to use directly, but is this necessarily perfect? In fact, there is a coordination problem in the middle. For example, if you want to cook at home and you are short of a packet of seasonings, you could have bought them downstairs yourself, but you have to ask someone to deliver them to your home. By this time, all the other dishes have been cooked, and only one packet is left. seasoning, then you have no other choice but to wait for the package of seasoning to be delivered to your home before you can proceed to the next step of cooking. Therefore, under ideal circumstances, it can save a lot of memory copy time, but will increase some coordination lock overhead.

So is it possible to read data directly from the kernel buffer?

Theoretically it is possible. Before explaining this problem, let us first understand what else is there besides this memory copy:

JDBC reads the data from the kernel buffer in binary format After retrieval, it will be further parsed into specific structured data, because at this time the structured data of the specific rows of the ResultSet needs to be returned to the business party, that is, there must be a copy of the data generated by RowData, and JDBC returns certain object types. When it comes to data (such as byte [] array), in the implementation of some scenarios, it does not want you to modify the content of the byte [] in the returned result (byte [1] = 0xFF) through the result set to modify the content of the ResultSet itself. Another memory copy will be made. During the use of business code, there will also be string strings, network output, etc., and there will be a bunch of memory copies. These are unavoidable at the business level. Compared with this little copy, it is simply It's trivial, so we didn't do it, thinking that it is almost trivial on the whole, unless your program bottleneck is here.

Therefore, from an overall point of view, memory copying is unavoidable. This time it is nothing more than system-level calls, and the overhead will be larger. Technically speaking, we can do it directly from the kernel state. Reading data; but at this time, the data from the Buffer needs to be taken away in bytes to allow more remote data to be transferred. There is no third location to store the Buffer, otherwise it will return to the memory copy from the kernel to the application. .

Relatively speaking, the server can optimize the transmission of data directly through direct IO (but the data protocol in this way is consistent with the data storage format, which is obviously only theoretical). To truly To implement a custom protocol and send data directly through the kernel state, you need to modify the OS-level file system protocol to achieve the purpose of conversion.

The above is the detailed content of A brief discussion on the communication principle of MySQL JDBC StreamResult. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

You can open phpMyAdmin through the following steps: 1. Log in to the website control panel; 2. Find and click the phpMyAdmin icon; 3. Enter MySQL credentials; 4. Click "Login".

PHP vs. Python: Understanding the Differences

Apr 11, 2025 am 12:15 AM

PHP vs. Python: Understanding the Differences

Apr 11, 2025 am 12:15 AM

PHP and Python each have their own advantages, and the choice should be based on project requirements. 1.PHP is suitable for web development, with simple syntax and high execution efficiency. 2. Python is suitable for data science and machine learning, with concise syntax and rich libraries.

MySQL and SQL: Essential Skills for Developers

Apr 10, 2025 am 09:30 AM

MySQL and SQL: Essential Skills for Developers

Apr 10, 2025 am 09:30 AM

MySQL and SQL are essential skills for developers. 1.MySQL is an open source relational database management system, and SQL is the standard language used to manage and operate databases. 2.MySQL supports multiple storage engines through efficient data storage and retrieval functions, and SQL completes complex data operations through simple statements. 3. Examples of usage include basic queries and advanced queries, such as filtering and sorting by condition. 4. Common errors include syntax errors and performance issues, which can be optimized by checking SQL statements and using EXPLAIN commands. 5. Performance optimization techniques include using indexes, avoiding full table scanning, optimizing JOIN operations and improving code readability.

How to recover data after SQL deletes rows

Apr 09, 2025 pm 12:21 PM

How to recover data after SQL deletes rows

Apr 09, 2025 pm 12:21 PM

Recovering deleted rows directly from the database is usually impossible unless there is a backup or transaction rollback mechanism. Key point: Transaction rollback: Execute ROLLBACK before the transaction is committed to recover data. Backup: Regular backup of the database can be used to quickly restore data. Database snapshot: You can create a read-only copy of the database and restore the data after the data is deleted accidentally. Use DELETE statement with caution: Check the conditions carefully to avoid accidentally deleting data. Use the WHERE clause: explicitly specify the data to be deleted. Use the test environment: Test before performing a DELETE operation.

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

Redis uses a single threaded architecture to provide high performance, simplicity, and consistency. It utilizes I/O multiplexing, event loops, non-blocking I/O, and shared memory to improve concurrency, but with limitations of concurrency limitations, single point of failure, and unsuitable for write-intensive workloads.

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL is an open source relational database management system, mainly used to store and retrieve data quickly and reliably. Its working principle includes client requests, query resolution, execution of queries and return results. Examples of usage include creating tables, inserting and querying data, and advanced features such as JOIN operations. Common errors involve SQL syntax, data types, and permissions, and optimization suggestions include the use of indexes, optimized queries, and partitioning of tables.

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

MySQL is chosen for its performance, reliability, ease of use, and community support. 1.MySQL provides efficient data storage and retrieval functions, supporting multiple data types and advanced query operations. 2. Adopt client-server architecture and multiple storage engines to support transaction and query optimization. 3. Easy to use, supports a variety of operating systems and programming languages. 4. Have strong community support and provide rich resources and solutions.