This article is a tutorial on controlling multiple processes in Python with the multiprocessing module, with examples.

Introduction to multiprocessing

multiprocessing is a module that ships with Python and can spawn processes in bulk. It works best on servers with multi-core CPUs, and its API is similar to that of the threading module. Compared with multithreading, multiple processes are more stable and safer because each process has its own memory space. Batch operations in operations and maintenance work are a typical use case.

The multiprocessing package offers both local and remote concurrency, and it sidesteps the Global Interpreter Lock by using child processes instead of threads. As a result, multiprocessing can make effective use of multiple cores.

Process class

In multiprocessing, processes are created through Process objects, and each one is launched with its start() method

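1. Syntax

multiprocessing.Process(group=None, target=None, name=None, args=(), kwargs={}, *, daemon=None)

group: normally left as None; it exists only for compatibility with threading.Thread

target: the callable object invoked by run(); defaults to None, meaning nothing is called

name: the process name

args: the tuple of positional arguments passed to target

kwargs: the dict of keyword arguments passed to target

2. Methods and attributes of the Process class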

run()

This method represents the process's activity. It can be overridden in a subclass, although in practice that is rarely done

start()

Starts the process. Every process object has to be started by calling this method, at most once per object

join([timeout])

Blocks until the process terminates, then continues. An optional timeout in seconds can be given

name

The process name. Several processes may share the same name

is_alive()

Returns True or False depending on whether the process is still alive. A process counts as alive from the moment start() returns until the child process terminates

daemon

The daemon flag, a Boolean value. It must be set before start() is called and indicates whether the process runs in the background as a daemon

Note: a process running as a daemon is not allowed to create child processes of its own

pid

You can get the process ID

exitcode

The child's exit code. The value is None while the process has not yet terminated. A negative value -N means the child was terminated by signal N

terminate()

Terminates the process. On Unix this sends SIGTERM; on Windows, TerminateProcess() is used. Exit handlers, finally clauses and the like are not executed
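The attributes and methods listed above can be observed on a single process as it moves through its life cycle. The following is a minimal sketch, not part of the original article; the helper names `nap` and `describe` are invented for illustration.

```python
import multiprocessing
import time


def nap(seconds):
    """Stand-in for real work: just sleep."""
    time.sleep(seconds)


def describe(p):
    """Snapshot of the Process attributes discussed above."""
    return {"name": p.name, "pid": p.pid,
            "alive": p.is_alive(), "exitcode": p.exitcode}


if __name__ == "__main__":
    p = multiprocessing.Process(target=nap, args=(30,), name="napper")
    print(describe(p))   # before start(): pid None, not alive, exitcode None
    p.start()
    print(describe(p))   # running: pid assigned, alive, exitcode still None
    p.join(timeout=0.5)  # gives up after 0.5 s because the child is asleep
    p.terminate()        # stop it early
    p.join()             # reap the child so exitcode is filled in
    print(describe(p))   # not alive; exitcode is negative (typically -15)
```

Calling join() again after terminate() matters here: exitcode is only reliable once the child has actually been reaped.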

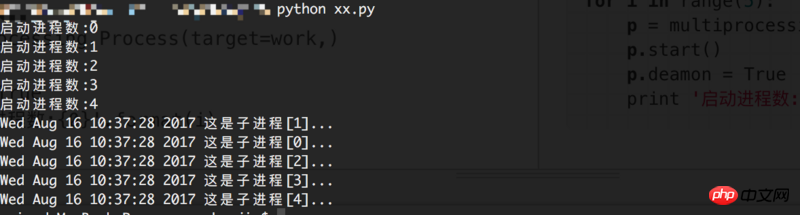

The following is a simple example:

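    # -*- coding: utf-8 -*-
    import multiprocessing
    import time


    def work(x):
        time.sleep(1)
        print('{0} this is child process [{1}]...'.format(time.ctime(), x))


    if __name__ == '__main__':
        procs = []
        for i in range(5):
            p = multiprocessing.Process(target=work, args=(i,))
            p.daemon = True  # must be set before start()
            print('starting process number: {0}'.format(i))
            p.start()
            procs.append(p)
        # Daemon children are killed when the parent exits, so wait for them
        for p in procs:
            p.join()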

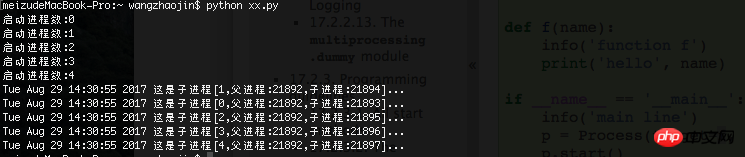

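The ID of each process can be displayed as well:

    # -*- coding: utf-8 -*-
    import multiprocessing
    import os
    import time


    def work(x):
        time.sleep(1)
        ppid = os.getppid()
        pid = os.getpid()
        print('{0} this is child process [{1}, parent PID: {2}, child PID: {3}]...'.format(
            time.ctime(), x, ppid, pid))


    if __name__ == '__main__':
        procs = []
        for i in range(5):
            p = multiprocessing.Process(target=work, args=(i,))
            p.daemon = True  # must be set before start()
            print('starting process number: {0}'.format(i))
            p.start()
            procs.append(p)
        # Daemon children are killed when the parent exits, so wait for them
        for p in procs:
            p.join()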

In real use, however, simply running things concurrently is not enough. Suppose there are 30 tasks and, because server resources are limited, only 5 may run at a time. That raises the question of how the 30 tasks are handed out. It is also hard to guarantee that concurrent tasks take the same amount of time, especially long-running ones: of 5 concurrent tasks, 3 may already have finished while 2 still need a long time, and the following tasks should not have to wait until those 2 are done. This is where process control comes in. Process can be combined with other classes from the multiprocessing package to achieve it.
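For comparison, the "30 tasks, 5 at a time" scenario can also be handled by the ready-made multiprocessing.Pool class, which is a different mechanism from the Process-plus-Queue approach this article builds. The sketch below is only an illustration under assumed values: `task` is a placeholder for real work, and the numbers 30 and 5 are taken from the paragraph above.

```python
import multiprocessing
import time


def task(n):
    """Placeholder task: pretend to work, then return a result."""
    time.sleep(0.1)
    return n * n


if __name__ == "__main__":
    # At most 5 worker processes exist; the 30 tasks are fed to them in turn.
    with multiprocessing.Pool(processes=5) as pool:
        # imap_unordered yields each result as soon as its task finishes,
        # so a slow task does not hold back the ones behind it.
        for result in pool.imap_unordered(task, range(30)):
            print(result)
```

A pool hides the bookkeeping; building the control loop by hand, as the rest of the article does, gives more say over how each process is started and monitored.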

Common classes for process control and communication

1. Queue class

Similar to the Queue.Queue class that ships with Python; mainly used for smaller queues

Syntax:

multiprocessing.Queue([maxsize])

Methods:

qsize()

Returns the approximate size of the queue. Because other processes or threads may be putting and getting items at the same time, the number is not necessarily accurate

empty()

Returns True if the queue is empty, otherwise False

full()

Returns True if the queue is full, otherwise False

put(obj[, block[, timeout]])

Puts an object into the queue. The optional block argument defaults to True and timeout defaults to None

get()

Removes an object from the queue and returns it

    # -*- coding: utf-8 -*-
    from multiprocessing import Process, Queue


    def f(q):
        q.put([42, None, 'hi'])


    if __name__ == '__main__':
        q = Queue()
        p = Process(target=f, args=(q,))
        p.start()
        print(q.get())  # prints: [42, None, 'hi']
        p.join()

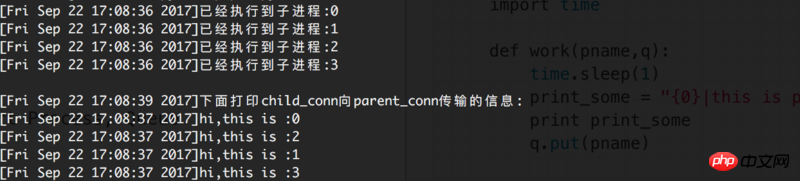
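The block and timeout arguments decide what happens when a queue is full or empty. As a small sketch (the wrappers `try_put` and `try_get` are hypothetical helpers, not part of multiprocessing), a bounded queue raises queue.Full or queue.Empty once the timeout expires, and those exceptions come from the standard queue module:

```python
import multiprocessing
import queue  # the Empty and Full exceptions are defined here


def try_put(q, item, timeout=0.5):
    """Return True if item was queued, False if the queue stayed full."""
    try:
        q.put(item, block=True, timeout=timeout)
        return True
    except queue.Full:
        return False


def try_get(q, timeout=0.5, default=None):
    """Return the next item, or default if nothing arrived in time."""
    try:
        return q.get(block=True, timeout=timeout)
    except queue.Empty:
        return default


if __name__ == "__main__":
    q = multiprocessing.Queue(maxsize=2)
    # The third put finds the queue full and gives up after the timeout.
    print(try_put(q, "a"), try_put(q, "b"), try_put(q, "c"))
    print(q.full())
    # The third get finds the queue empty and returns the default.
    print(try_get(q), try_get(q), try_get(q))
```

Using a timeout instead of blocking forever keeps a control loop responsive when a producer or consumer has stalled.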

2. Pipe class

The Pipe() function returns a pair of Connection objects that represent the two ends of a pipe and can carry messages between processes. This is useful for printing logs and for process control. Each connection object has send() and recv() methods. Note that if two or more processes read from or write to the same end of a pipe at the same time, the data may become corrupted; in my test it was simply overwritten. Also, data sent with send() on one of the two connections is received with recv() on the other

    # -*- coding: utf-8 -*-
    from multiprocessing import Process, Pipe
    import time


    def f(conn, i):
        print('[{0}] now running in child process: {1}'.format(time.ctime(), i))
        time.sleep(1)
        w = "[{0}]hi,this is :{1}".format(time.ctime(), i)
        conn.send(w)
        conn.close()


    if __name__ == '__main__':
        reader = []
        procs = []
        parent_conn, child_conn = Pipe()
        for i in range(4):
            p = Process(target=f, args=(child_conn, i))
            p.daemon = True  # must be set before start()
            p.start()
            procs.append(p)
            reader.append(parent_conn)
        # Wait for all child processes to finish
        for p in procs:
            p.join()
        print('\n[{0}] messages sent from child_conn to parent_conn:'.format(time.ctime()))
        for i in reader:
            print(i.recv())
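One way to avoid several processes writing to the same end of a pipe, offered here as a sketch rather than as the article's own method, is to give every child its own pipe. The function name `greet` and the message text are made up for the example; `duplex=False` is a real Pipe() parameter that makes the pipe one-way.

```python
from multiprocessing import Pipe, Process


def greet(conn, i):
    """Send one message on this child's own write end, then close it."""
    conn.send("hi, this is child {0}".format(i))
    conn.close()


if __name__ == "__main__":
    readers, procs = [], []
    for i in range(4):
        # duplex=False returns (receive-only end, send-only end)
        recv_end, send_end = Pipe(duplex=False)
        p = Process(target=greet, args=(send_end, i))
        p.start()
        readers.append(recv_end)
        procs.append(p)
    # Each message arrives on its own connection, so nothing can interleave.
    for r in readers:
        print(r.recv())
    for p in procs:
        p.join()
```

The cost is one pipe per child; when many producers feed a single consumer, a Queue is usually the simpler choice.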

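3. Value and Array

In concurrent programming, shared state should be avoided as far as possible, because several processes modifying the same data at once can corrupt it. If data really has to be shared between processes, multiprocessing provides Value and Array for the purpose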

    from multiprocessing import Process, Value, Array


    def f(n, a):
        n.value = 3.1415927
        for i in range(len(a)):
            a[i] = -a[i]


    if __name__ == '__main__':
        num = Value('d', 0.0)
        arr = Array('i', range(10))
        p = Process(target=f, args=(num, arr))
        p.start()
        p.join()
        print(num.value)
        print(arr[:])

Output:

    3.1415927
    [0, -1, -2, -3, -4, -5, -6, -7, -8, -9]
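When several processes update the same Value, the corruption risk mentioned above can be avoided with the lock that a Value carries by default. The following is a minimal sketch; `add_many` and the counts 4 and 1000 are arbitrary choices for illustration.

```python
from multiprocessing import Process, Value


def add_many(counter, times):
    """Increment a shared counter `times` times, holding its lock each time."""
    for _ in range(times):
        # += is a read-modify-write sequence, so it needs the lock
        with counter.get_lock():
            counter.value += 1


if __name__ == "__main__":
    counter = Value("i", 0)  # 'i' = signed int; a lock is attached by default
    procs = [Process(target=add_many, args=(counter, 1000)) for _ in range(4)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    print(counter.value)  # 4000
```

Without the `with counter.get_lock()` line, increments from different processes can overwrite each other and the final total may come out lower.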

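4. Manager, the process management module

The Manager class is widely used for managing processes. The objects it returns can be shared with and manipulated by child processes, and it supports many types, such as list, dict, Namespace, Lock, RLock, Semaphore, BoundedSemaphore, Condition, Event, Barrier, Queue, Value and Array. In most cases Manager alone is enough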

    from multiprocessing import Process, Manager


    def f(d, l):
        d[1] = '1'
        d['2'] = 2
        d[0.25] = None
        l.reverse()


    if __name__ == '__main__':
        with Manager() as manager:
            d = manager.dict()
            l = manager.list(range(10))
            p = Process(target=f, args=(d, l))
            p.start()
            p.join()  # wait for the process to finish before continuing
            print(d)
            print(l)

Output:

{0.25: None, 1: '1', '2': 2}

[9, 8, 7, 6, 5, 4, 3, 2, 1, 0]

As you can see, the effect is the same as with shared data. Most of the facilities for managing processes have been gathered into Manager()
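A Manager dict is also a convenient place for several workers to deposit results. The sketch below assumes a made-up worker `record` and job names of the form `job0`, `job1`, and so on; only Manager(), manager.dict() and manager.list() come from the library.

```python
from multiprocessing import Manager, Process


def record(results, order, name, value):
    """Store one result in the shared dict and note which job arrived."""
    results[name] = value
    order.append(name)


if __name__ == "__main__":
    with Manager() as manager:
        results = manager.dict()
        order = manager.list()
        procs = [
            Process(target=record, args=(results, order, "job{0}".format(i), i * i))
            for i in range(3)
        ]
        for p in procs:
            p.start()
        for p in procs:
            p.join()
        print(dict(results))     # copy the proxy into a plain dict
        print(sorted(order[:]))  # arrival order varies, so sort for display
```

The proxies must be read before the `with` block ends, because leaving it shuts the manager process down.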

5. A practical example of multi-process control

    # -*- coding: utf-8 -*-
    from multiprocessing import Process, Queue
    import time


    def work(pname, q):
        time.sleep(1)
        print_some = "{0}|this is process: {1}".format(time.ctime(), pname)
        print(print_some)
        q.put(pname)


    if __name__ == '__main__':
        p_manag_num = 2  # maximum number of concurrent processes: 2
        # names of the processes to run
        q_process = ['process_1', 'process_2', 'process_3', 'process_4', 'process_5']
        q_a = Queue()  # holds the process names
        q_b = Queue()  # names from q_a are put into q_b by the child processes
        for i in q_process:
            q_a.put(i)
        p_list = []  # list of completed processes
        while not q_a.empty():
            if len(p_list) <

Execution result:

![1542261551928622.png](https://img.php.cn//upload/image/167/872/710/1542261551928622.png)
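The listing above breaks off inside the while loop, so the author's exact control logic is not recoverable. The following is only a sketch of how such a loop might work, under stated assumptions: the function `run_limited`, its `limit` and `delay` parameters, and the bookkeeping with `pending`, `running` and `finished` are all invented here. It keeps at most `limit` children alive and starts the next one as soon as any child reports back through the queue.

```python
from multiprocessing import Process, Queue
import time


def work(pname, q, delay=1.0):
    """Pretend to work, print a line, then report completion by name."""
    time.sleep(delay)
    print("{0}|this is process: {1}".format(time.ctime(), pname))
    q.put(pname)


def run_limited(names, limit, delay=1.0):
    """Run work() once per name with at most `limit` children at a time.

    Assumption: every child reaches q.put(); a child that crashes first
    would leave the parent waiting on done_q.get().
    """
    pending = list(names)
    done_q = Queue()
    running = {}   # process name -> Process object
    finished = []  # names in order of completion
    while pending or running:
        # Fill free slots up to the limit.
        while pending and len(running) < limit:
            name = pending.pop(0)
            p = Process(target=work, args=(name, done_q, delay))
            p.start()
            running[name] = p
        # Wait for any one child to report, then free its slot.
        name = done_q.get()
        running.pop(name).join()
        finished.append(name)
    return finished


if __name__ == "__main__":
    names = ["process_1", "process_2", "process_3", "process_4", "process_5"]
    print(run_limited(names, limit=2))
```

Because the parent blocks on the queue rather than on a particular child, one slow task never prevents a free slot from being refilled.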