What is a GPU?

GPU stands for Graphics Processing Unit, also called a graphics processor. The GPU is the "brain" of the graphics card and determines the card's grade and most of its performance. On a mobile phone motherboard, the GPU is usually located next to the CPU, often within the same chip.

Operating environment for this article: Windows 7, Dell G3 computer.

Most people are familiar with CPUs, but many do not know what a GPU is. Let's summarize what a GPU is.

1: What is a GPU

GPU is the abbreviation of graphics processing unit. It is a semiconductor chip (processor) that renders 3D graphics and other images. Installed in personal computers and servers, the GPU is the processor dedicated to drawing images such as 3D graphics. The CPU hands the calculations needed to draw 3D graphics over to the GPU.

Recently, GPUs have increasingly been used for general-purpose computing, known as GPGPU (general-purpose computing on graphics processing units), which applies the GPU's high computing performance to tasks other than 3D graphics. By using GPGPU, a server with very high computing performance, rivaling that of a supercomputer, can be built at a lower cost.
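The GPGPU idea described above can be sketched in plain Python. This is only a CPU-side simulation, not real GPU code: the `launch` helper and the `saxpy_kernel` function are hypothetical names invented for illustration. The point it tries to show is that each output element is computed by its own small, independent piece of work, which is what lets a GPU spread such work across many cores.

```python
# CPU-only simulation of a GPGPU-style kernel (illustrative; no GPU is used).

def saxpy_kernel(i, a, x, y, out):
    """Work done by one simulated GPU thread: a single output element."""
    out[i] = a * x[i] + y[i]


def launch(kernel, n, *args):
    """Hypothetical launcher: run the kernel once per index 0..n-1.

    On a real GPU these invocations would run on many cores at once.
    Here they run one after another, which gives the same result because
    no invocation depends on another.
    """
    for i in range(n):
        kernel(i, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
result = [0.0] * len(x)
launch(saxpy_kernel, len(x), 2.0, x, y, result)
print(result)  # [12.0, 24.0, 36.0, 48.0]
```

Nothing in this calculation is about graphics, which is why the same hardware model can be reused for general-purpose work.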

2: The difference between GPU and CPU

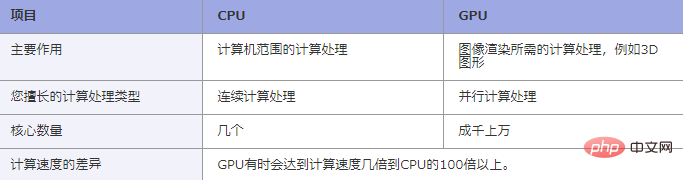

Both the CPU and the GPU perform the computation that a PC or server needs in order to operate. The CPU is the general-purpose brain of the PC or server, while the GPU is a brain specialized for drawing images. However, this image-processing specialist is sometimes given other computational work as well (GPGPU). The division of labor works as follows:

For example, in a 3D game, images appear on the monitor as smooth, flowing motion. Producing them requires a large amount of calculation by the GPU. However, a 3D game cannot run on image output alone. Various other processing is required, such as reading the game data from the hard disk. Following the program, the CPU works out what should be shown based on that data and on the commands the user enters with the keyboard and mouse, and it relies on the GPU to render the resulting images.
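The split described in the game example can be sketched as a toy frame loop. This is a simplified model under stated assumptions, not how a real game engine or graphics API works: the tiny text "screen" and the function names (`cpu_update`, `gpu_shade_pixel`, `gpu_render`) are hypothetical, and the "GPU" part simply runs on the CPU here.

```python
# Toy model of the CPU/GPU split in a game frame (illustrative only).

WIDTH, HEIGHT = 8, 4  # tiny "screen" so the output is readable


def cpu_update(player_x, key):
    """CPU side: apply keyboard input to the game state."""
    if key == "right":
        player_x = min(player_x + 1, WIDTH - 1)
    elif key == "left":
        player_x = max(player_x - 1, 0)
    return player_x


def gpu_shade_pixel(x, y, player_x):
    """GPU side: decide one pixel's character, independent of other pixels."""
    return "#" if x == player_x and y == HEIGHT - 1 else "."


def gpu_render(player_x):
    """Stand-in for the GPU: shade every pixel of the frame."""
    return ["".join(gpu_shade_pixel(x, y, player_x) for x in range(WIDTH))
            for y in range(HEIGHT)]


player_x = 0
frame = gpu_render(player_x)
for key in ["right", "right", "left"]:    # input the CPU receives
    player_x = cpu_update(player_x, key)  # CPU: game logic
    frame = gpu_render(player_x)          # CPU hands rendering to the "GPU"

print("\n".join(frame))
```

The CPU step is small but depends on input and game rules, while the rendering step repeats the same simple decision for every pixel, which is the kind of work the article assigns to the GPU.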

The GPU is a processor suited to performing simple, routine calculations in large volume in order to render images. The CPU, on the other hand, is a command processor that handles information from the entire computer, including the HDD, memory, operating system, programs, keyboard, and mouse, and it performs better at complex processing. If the CPU is the commander directing the whole process, the GPU can be compared to a factory that quickly processes a large number of forms. A key difference between the two is the number of cores, as shown in the table above.
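To make the core-count difference concrete, here is a rough back-of-the-envelope sketch. It assumes an idealized model in which every core handles exactly one pixel per round and all cores are equally fast, which real hardware does not satisfy. The core counts are hypothetical values chosen for illustration and are not taken from the table.

```python
import math


def passes_needed(work_items, cores):
    """Idealized number of rounds if each core handles one item per round."""
    return math.ceil(work_items / cores)


pixels = 1920 * 1080   # one Full HD frame: 2,073,600 pixels
cpu_cores = 8          # hypothetical CPU core count
gpu_cores = 2048       # hypothetical GPU core count

print(passes_needed(pixels, cpu_cores))  # 259200
print(passes_needed(pixels, gpu_cores))  # 1013
```

Under these assumptions the many-core processor needs far fewer rounds for the same frame, even though each of its cores is simpler than a CPU core.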

3: GPU structure and processing capabilities

The GPU is mounted on a component called the graphics board (graphics card), which is the part of the computer that produces what is shown on the monitor. The graphics board is connected to the motherboard, the component that carries the CPU, memory, and so on, and the GPU renders images in response to commands from the CPU on the motherboard. There is also the so-called "built-in GPU" (onboard graphics), where the GPU is built into the chipset installed on the motherboard. The advantage of onboard graphics is lower cost. On the other hand, the image processing performance of a dedicated graphics board is much higher, so installing a graphics board is recommended when working with 3D graphics.

That concludes this introduction to what a GPU is.

Hot Topics

1386

1386

52

52

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

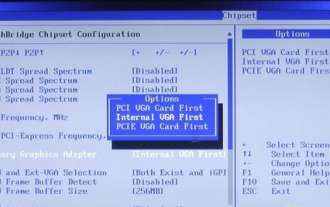

Friends who know something about computers must know that GPUs have shared memory, and many friends are worried that shared memory will reduce the number of memory and affect the computer, so they want to turn it off. Here is how to turn it off. Let's see. Turn off win10gpu shared memory: Note: The shared memory of the GPU cannot be turned off, but its value can be set to the minimum value. 1. Press DEL to enter the BIOS when booting. Some motherboards need to press F2/F9/F12 to enter. There are many tabs at the top of the BIOS interface, including "Main, Advanced" and other settings. Find the "Chipset" option. Find the SouthBridge setting option in the interface below and click Enter to enter.

What does shared gpu memory mean?

Mar 07, 2023 am 10:17 AM

What does shared gpu memory mean?

Mar 07, 2023 am 10:17 AM

Shared gpu memory means the priority memory capacity specially divided by the WINDOWS10 system for the graphics card; when the graphics card memory is not enough, the system will give priority to this part of the "shared GPU memory"; in the WIN10 system, half of the physical memory capacity will be divided into "Shared GPU memory".

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Is it necessary to enable hardware accelerated GPU? With the continuous development and advancement of technology, GPU (Graphics Processing Unit), as the core component of computer graphics processing, plays a vital role. However, some users may have questions about whether hardware acceleration needs to be turned on. This article will discuss the necessity of hardware acceleration for GPU and the impact of turning on hardware acceleration on computer performance and user experience. First, we need to understand how hardware-accelerated GPUs work. GPU is a specialized

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

According to news from this site on January 2, according to TechPowerUp, AMD will soon launch notebook graphics cards based on Navi32 GPU. The specific models may be RX7700M and RX7800M. Currently, AMD has launched a variety of RX7000 series notebook GPUs, including the high-end RX7900M (72CU) and the mainstream RX7600M/7600MXT (28/32CU) series and RX7600S/7700S (28/32CU) series. Navi32GPU has 60CU. AMD may make it into RX7700M and RX7800M, or it may make a low-power RX7900S model. AMD is expected to

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

One of the standout features of the recently launched Beelink GTi 14is that the mini PC has a hidden PCIe x8 slot underneath. At launch, the company said that this would make it easier to connect an external graphics card to the system. Beelink has n

OpenGL rendering gpu should choose automatic or graphics card?

Feb 27, 2023 pm 03:35 PM

OpenGL rendering gpu should choose automatic or graphics card?

Feb 27, 2023 pm 03:35 PM

Select "Auto" for opengl rendering gpu; generally select the automatic mode for opengl rendering. The rendering will be automatically selected according to the actual hardware of the computer; if you want to specify, then specify the appropriate graphics card, because the graphics card is more suitable for rendering 2D and 3D vector graphics Content, support for OpenGL general computing API is stronger than CPU.

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD delivers on its initial March ‘24 promise to launch FSR 3.1 in Q2 this year. What really sets the 3.1 release apart is the decoupling of the frame generation side from the upscaling one. This allows Nvidia and Intel GPU owners to apply the FSR 3.

Deep Learning GPU Selection Guide: Which graphics card is worthy of my alchemy furnace?

Apr 12, 2023 pm 04:31 PM

Deep Learning GPU Selection Guide: Which graphics card is worthy of my alchemy furnace?

Apr 12, 2023 pm 04:31 PM

As we all know, when dealing with deep learning and neural network tasks, it is better to use a GPU instead of a CPU because even a relatively low-end GPU will outperform a CPU when it comes to neural networks. Deep learning is a field that requires a lot of computing. To a certain extent, the choice of GPU will fundamentally determine the deep learning experience. But here comes the problem, how to choose a suitable GPU is also a headache and brain-burning thing. How to avoid pitfalls and how to make a cost-effective choice? Tim Dettmers, a well-known evaluation blogger who has received PhD offers from Stanford, UCL, CMU, NYU, and UW and is currently studying for a PhD at the University of Washington, focuses on what kind of GPU is needed in the field of deep learning, combined with his own