Establishment and optimization of caching mechanism for website system

Having covered the external network environment of the Web system, we now turn to the performance of the Web system itself.

As traffic to our website grows, we will run into many challenges. Solving them takes more than adding machines; establishing and using an appropriate caching mechanism is fundamental.

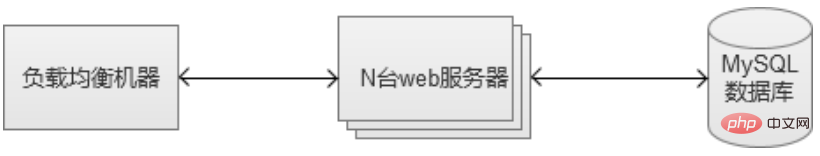

In the beginning, our Web system architecture may look like this, with only one machine at each tier.

1. Using MySQL's internal caches

Let's start from inside MySQL and look at its own caching mechanisms. The following discussion is based on InnoDB, the most common storage engine.

1. Create an appropriate index

The simplest step is to create an index. When a table holds a relatively large amount of data, an index makes retrieval fast, but it comes at a cost. First, it occupies disk space. Composite indexes are the most prominent case and need to be used with caution, because the index they generate may even be larger than the source data. Second, insert/update/delete operations take more time once an index exists, because the index must be updated along with the data. In practice, however, our system as a whole is dominated by select queries, so indexes can still significantly improve system performance.
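The effect of an index on a lookup can be seen in a query plan. The sketch below is only an illustration: it uses Python's built-in SQLite as a stand-in for MySQL (an assumption made so the example runs without a server), and the `users` table and `idx_users_email` index are hypothetical names. On MySQL, the equivalent check would be an `EXPLAIN` on the same query.

```python
import sqlite3

def query_plan(conn, sql, params=()):
    """Return the plan detail strings SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description

# Hypothetical table, populated with some sample rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"user{i}") for i in range(1000)],
)

lookup = "SELECT name FROM users WHERE email = ?"
plan_before = query_plan(conn, lookup, ("user42@example.com",))

# After creating the index, the same query can search the index
# instead of scanning every row.
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(conn, lookup, ("user42@example.com",))

print(plan_before)  # a full table scan
print(plan_after)   # a search that uses idx_users_email
```

The exact wording of the plan differs between SQLite versions, so the code prints it rather than assuming a fixed string.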

2. Database connection thread pool cache

If every database request has to create and destroy a connection, the overhead for the database is undoubtedly huge. To reduce it, MySQL's thread_cache_size setting specifies how many threads are kept for reuse. When there are not enough cached threads, new ones are created; when there are too many idle threads, the surplus is destroyed.

There is also a more radical approach: pconnect (persistent database connections), where a connection, once created, is kept open for a long time. However, when traffic is heavy and there are many machines, this usage can easily exhaust the database's connections: because connections are never recycled, the database eventually reaches max_connections (its maximum number of connections). Persistent connections therefore usually require a "connection pool" service between CGI and MySQL to limit the connections that CGI machines would otherwise create "blindly".
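The idea of a connection pool can be sketched in a few lines. This is a minimal, single-process illustration rather than the standalone pool service the text describes: `ConnectionPool` is a hypothetical class, and SQLite connections stand in for MySQL connections so the example is self-contained. The point is the hard upper bound: callers reuse a fixed set of connections and wait (or fail) when none is free, instead of opening new ones.

```python
import queue
import sqlite3
from contextlib import contextmanager

class ConnectionPool:
    """Hand out at most `max_connections` long-lived connections."""

    def __init__(self, factory, max_connections):
        self._idle = queue.Queue(maxsize=max_connections)
        for _ in range(max_connections):
            self._idle.put(factory())  # connections are created once, up front

    @contextmanager
    def connection(self, timeout=1.0):
        # Blocks until a connection is free; raises queue.Empty on timeout,
        # so the database never sees more than max_connections clients.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return the connection for reuse

# Usage: SQLite stands in for MySQL here (an assumption for runnability).
pool = ConnectionPool(lambda: sqlite3.connect(":memory:"), max_connections=2)
with pool.connection() as conn:
    result = conn.execute("SELECT 1").fetchone()[0]
print(result)
```

A production pool would also need health checks and reconnection for dropped connections, which this sketch leaves out.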

3. InnoDB cache settings (innodb_buffer_pool_size)

innodb_buffer_pool_size sets the size of the in-memory cache area that holds indexes and data. If the machine is dedicated to MySQL, the general recommendation is 80% of the machine's physical memory. When table data is fetched, the buffer pool reduces disk I/O. Generally speaking, the larger this value, the higher the cache hit rate.
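The sizing rule and the hit rate can both be expressed as simple arithmetic. The sketch below assumes the two counters come from MySQL's `SHOW GLOBAL STATUS` output (`Innodb_buffer_pool_read_requests` for logical reads and `Innodb_buffer_pool_reads` for reads that had to go to disk); these counters are not mentioned in the text above, and the function names are hypothetical.

```python
def suggested_buffer_pool_bytes(physical_memory_bytes: int, percent: int = 80) -> int:
    """Apply the rule of thumb for a machine dedicated to MySQL."""
    return physical_memory_bytes * percent // 100

def buffer_pool_hit_rate(read_requests: int, disk_reads: int) -> float:
    """Fraction of logical reads served from memory rather than disk.

    read_requests: value of Innodb_buffer_pool_read_requests
    disk_reads:    value of Innodb_buffer_pool_reads
    """
    if read_requests == 0:
        return 0.0  # no traffic yet, so no meaningful rate
    return 1.0 - disk_reads / read_requests

# Example with made-up numbers: a 16 GiB machine and sample counters.
size = suggested_buffer_pool_bytes(16 * 1024**3)
rate = buffer_pool_hit_rate(read_requests=1_000_000, disk_reads=5_000)
print(size, f"{rate:.3%}")
```

A hit rate that stays low under steady load is one hint that the buffer pool is too small for the working set.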

4. Splitting databases, tables, and partitions

A MySQL table generally handles data volumes in the millions of rows. Beyond that, performance drops significantly. When we foresee the data volume exceeding this level, it is therefore advisable to split the data across databases, tables, or partitions. The best approach is to design the service around a split-database, split-table storage model from the beginning, which removes the risk at its root for the middle and later stages. This sacrifices some conveniences, such as list-based queries, and increases maintenance complexity. However, once the data reaches tens of millions of rows or more, we will find it was all worth it.
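A split-database, split-table model needs a deterministic rule that maps each record to its location. The sketch below is one simple possibility, not the scheme of any particular system: it routes by user ID modulo the total number of tables, and the names `user_db_N` and `user_N` as well as the counts are assumptions chosen for illustration.

```python
def shard_for(user_id: int, db_count: int = 4, tables_per_db: int = 8):
    """Map a user ID to a (database, table) pair."""
    bucket = user_id % (db_count * tables_per_db)
    db_index = bucket // tables_per_db     # which database holds the bucket
    table_index = bucket % tables_per_db   # which table inside that database
    return f"user_db_{db_index}", f"user_{table_index}"

def all_shards(db_count: int = 4, tables_per_db: int = 8):
    """Every (database, table) pair; a list-style query must visit all of them."""
    return [
        (f"user_db_{d}", f"user_{t}")
        for d in range(db_count)
        for t in range(tables_per_db)
    ]

print(shard_for(1001))
print(len(all_shards()))
```

The second function shows the cost mentioned above: a lookup by user ID touches one table, while a list query across all users has to fan out to every shard and merge the results.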

2. Set up multiple MySQL database services

A single MySQL machine is a high-risk single point of failure: if it goes down, our web service is no longer available. Moreover, as traffic to the Web system keeps growing, one day we will find that one MySQL server can no longer cope, and we will need more MySQL machines. Introducing multiple MySQL machines brings many new problems.

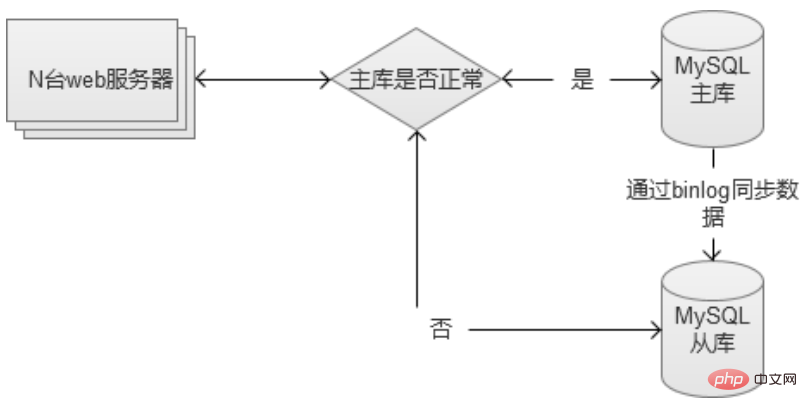

1. Establish MySQL master-slave, with the slave database as a backup.

This approach exists purely to solve the "single point of failure" problem: when the master database fails, we switch to the slave database. However, it wastes resources, because the slave database sits idle the rest of the time.

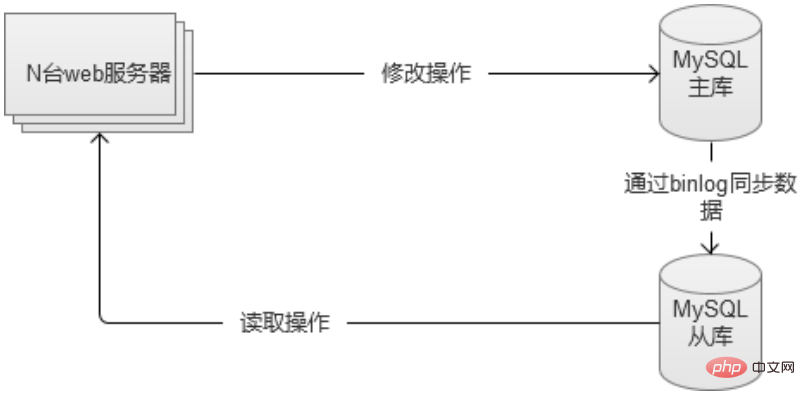

2. MySQL read/write splitting: write to the master database, read from the slave database.

The two databases split reads and writes: the master database handles write operations, and the slave database handles read operations. If the master database fails, reads are not affected, and all reads and writes can be temporarily switched to the slave database. Pay attention to traffic when doing so, because the combined load may be too much and bring the slave database down.
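The routing decision behind read/write splitting can be sketched as follows. This is a simplified illustration under stated assumptions: `ReadWriteRouter` is a hypothetical class, servers are represented by plain names instead of real connections, a statement is classified only by its first keyword, and the `master_healthy` flag is set by hand rather than by real health checks.

```python
import itertools

WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE"}

class ReadWriteRouter:
    """Send writes to the master and spread reads over the slaves."""

    def __init__(self, master, replicas):
        self.master = master
        self.replicas = list(replicas)              # must not be empty
        self._cycle = itertools.cycle(self.replicas)
        self.master_healthy = True

    def route(self, sql: str) -> str:
        verb = sql.lstrip().split(None, 1)[0].upper()
        if verb in WRITE_VERBS:
            # Temporary failover: while the master is down, writes go
            # to the first slave, which then carries both kinds of load.
            return self.master if self.master_healthy else self.replicas[0]
        return next(self._cycle)                    # round-robin for reads

router = ReadWriteRouter("master", ["replica-1", "replica-2"])
print(router.route("INSERT INTO posts VALUES (1)"))
print(router.route("SELECT * FROM posts"))
```

Real deployments also have to account for replication lag, since a read sent to a slave right after a write may not see that write yet.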

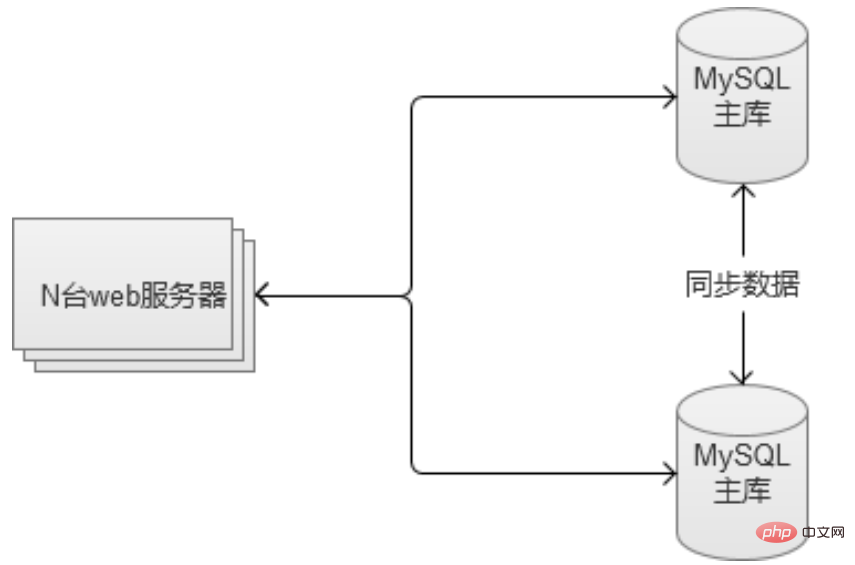

3. Master-master mutual backup.

Each of the two MySQL servers is both the other's slave database and a master database in its own right. This solution not only spreads the traffic load but also solves the "single point of failure" problem: if either machine fails, the other still provides service.

However, this solution only works with two machines. If the business keeps growing rapidly, you can split it by business area and set up several master-master mutual-backup pairs.

3. Establish a cache between the Web server and the database

To handle heavy traffic, we cannot focus on the database level alone. According to the "80/20 rule", 80% of requests concentrate on 20% of the data, the hot data. We should therefore establish a caching mechanism between the web server and the database. The cache can live on disk or in memory. Either way, most queries for hot data are stopped before they reach the database.

1. Page staticization

When a user visits a page on the website, most of the content on that page may not change for a long time. A news report, for example, is almost never modified once it is published. In this case, the static HTML page generated by CGI is cached on the local disk of the web server. Only the first request is served by the dynamic CGI querying the database; after that, the local disk file is returned directly to the user.

While the Web system is relatively small, this approach seems perfect. Once the system grows, however, problems appear. With 100 web servers, for example, there will be 100 copies of these disk files, which wastes resources and is hard to maintain. At this point, some people may think of storing the files centrally on one server. That is exactly what the next caching method does.
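The flow described here, render once and then serve the file, can be sketched as below. The class name `StaticPageCache`, the file naming scheme, and the `render` callback (which stands in for the dynamic CGI that queries the database) are all assumptions made for illustration.

```python
import tempfile
from pathlib import Path

class StaticPageCache:
    """Cache rendered HTML pages as files on the local disk."""

    def __init__(self, cache_dir, render):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.render = render  # stand-in for the dynamic page generator

    def get(self, page_id: int) -> str:
        path = self.cache_dir / f"{page_id}.html"
        if path.exists():
            # Every request after the first is answered from disk.
            return path.read_text(encoding="utf-8")
        html = self.render(page_id)            # first request: generate the page
        path.write_text(html, encoding="utf-8")
        return html

# Usage with a fake renderer that records how often it is called.
render_calls = []

def render_article(page_id):
    render_calls.append(page_id)
    return f"<html><body>Article {page_id}</body></html>"

with tempfile.TemporaryDirectory() as tmp:
    pages = StaticPageCache(tmp, render_article)
    pages.get(7)
    pages.get(7)
    print(render_calls)  # the renderer ran only for the first request
```

The sketch never invalidates a file, which matches content that does not change after publication; pages that can be edited would need the file deleted or rewritten on update.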

2. Single memory cache

The page staticization example shows that a "cache" kept on the Web machine itself is hard to maintain and creates further problems. (PHP's APC extension, for instance, does allow the web server's local memory to be used as a key/value store.) The memory cache service we build should therefore also be an independent service.

The main choices for a memory cache are Redis and Memcache. In terms of performance, there is little difference between the two. In terms of feature richness, Redis is superior.
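How the web tier uses such a cache can be sketched with the common "check the cache first, fall back to the database, then populate the cache" pattern. The example is a sketch under assumptions: `TTLCache` is a small in-process stand-in for a Redis or Memcache client (so that the code runs without a server), and `get_user`, the `user:<id>` key format, and the 60-second expiry are hypothetical choices.

```python
import time

class TTLCache:
    """In-process stand-in for an external key/value cache with expiry."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired entries count as misses
            return None
        return value

    def set(self, key, value, ttl):
        self._data[key] = (value, self._clock() + ttl)

def get_user(user_id, cache, load_from_db, ttl=60.0):
    """Serve hot data from the cache; only misses reach the database."""
    key = f"user:{user_id}"
    value = cache.get(key)
    if value is None:
        value = load_from_db(user_id)
        if value is not None:
            cache.set(key, value, ttl)
    return value

# Usage with a fake database lookup.
cache = TTLCache()
user = get_user(1, cache, lambda uid: {"id": uid, "name": "alice"})
print(user)
```

With a real Redis or Memcache client, only the `get` and `set` calls would change; the lookup logic stays the same.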

3. Memory cache cluster

A single memory cache faces the single-point-of-failure problem, so we must turn it into a cluster. The simple way is to add a slave as a backup machine. But what if there really are a lot of requests, the cache hit rate is low, and more machine memory is needed? For that reason, we recommend a true cluster configuration, such as Redis Cluster.

In a Redis Cluster, the Redis instances form multiple master/slave groups. Every node can accept requests, which makes expanding the cluster more convenient. The client can send a request to any node. If the requested content is something that node is "responsible for", the node returns it directly. Otherwise, the node looks up the Redis node that is actually responsible and tells the client its address, and the client sends the request again to that node.

All this is transparent to clients using the cache service.
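The "responsible node" lookup can be made concrete. According to the Redis Cluster specification, each key maps to one of 16384 hash slots via CRC16 of the key (or of the part inside `{...}` when a hash tag is present), and a node that does not own the slot answers with a redirect. The sketch below computes the slot with Python's `binascii.crc_hqx`, which implements the same CRC16 variant, and simulates the redirect with hypothetical `Node` and `Cluster` classes; it does not talk to a real Redis server.

```python
import binascii

SLOT_COUNT = 16384

def key_hash_slot(key: str) -> int:
    """Hash slot of a key, honoring a non-empty {hash tag} if present."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            data = data[start + 1:end]  # only the tag content is hashed
    return binascii.crc_hqx(data, 0) % SLOT_COUNT

class Node:
    def __init__(self, name, slot_range):
        self.name = name
        self.slot_range = slot_range  # slots this node is responsible for
        self.data = {}

class Cluster:
    def __init__(self, nodes):
        self.nodes = {node.name: node for node in nodes}

    def owner_of(self, slot):
        return next(n for n in self.nodes.values() if slot in n.slot_range)

    def ask(self, node_name, key):
        """Reply of one node: the value, or a redirect to the owner."""
        node = self.nodes[node_name]
        slot = key_hash_slot(key)
        if slot in node.slot_range:
            return ("OK", node.data.get(key))
        return ("MOVED", self.owner_of(slot).name)

def client_get(cluster, key, first_node):
    """Ask any node; follow one redirect if that node is not the owner."""
    status, payload = cluster.ask(first_node, key)
    if status == "MOVED":
        status, payload = cluster.ask(payload, key)
    return payload

cluster = Cluster([Node("a", range(0, 8192)), Node("b", range(8192, SLOT_COUNT))])
cluster.owner_of(key_hash_slot("page:42")).data["page:42"] = "cached html"
print(client_get(cluster, "page:42", "a"), client_get(cluster, "page:42", "b"))
```

Whichever node the client contacts first, it ends up with the same value, which is the transparency the text refers to.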

Switching memory cache services carries certain risks. When switching from cluster A to cluster B, cluster B must be "warmed up" in advance: the hot data in cluster B's memory should match cluster A's as closely as possible. Otherwise, at the moment of switching, a large number of requests will not be found in cluster B's memory cache, the traffic will hit the back-end database service directly, and the database is likely to go down.

4. Reduce database “writes”

The mechanisms above all reduce database "read" operations, but write operations are also a major source of load. Although the number of logical writes cannot be reduced, the load can be lowered by merging requests. To do this, we need a modification synchronization mechanism between the memory cache cluster and the database cluster.

First, apply the modification in the cache, so that external queries see the new data immediately. Then put the SQL modification into a queue. When the queue is full, or at regular intervals, the queued modifications are merged into a single request and sent to the database to update it.

Besides improving write performance through the architectural change above, MySQL itself can adjust how it flushes writes to disk through the innodb_flush_log_at_trx_commit parameter. If the hardware budget allows, the problem can also be tackled at the hardware level with the older RAID (Redundant Array of Independent Disks) or the newer SSD (solid-state drive).
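The cache-first, flush-later flow can be sketched as a small write-behind buffer. Several details are assumptions made for illustration: the class name `WriteBehindBuffer`, merging by keeping only the latest value per key, the size and age thresholds, and the `flush_to_db` callback that stands in for the single merged database request. The age check only runs when a write arrives; a real system would also flush from a timer.

```python
import time

class WriteBehindBuffer:
    """Apply writes to the cache at once and send them to the DB in batches."""

    def __init__(self, flush_to_db, max_pending=100, max_age=1.0,
                 clock=time.monotonic):
        self._flush_to_db = flush_to_db
        self._max_pending = max_pending
        self._max_age = max_age
        self._clock = clock
        self._pending = {}              # key -> latest value not yet in the DB
        self._last_flush = clock()

    def write(self, cache, key, value):
        cache[key] = value              # readers see the change immediately
        self._pending[key] = value      # repeated writes to a key are merged
        too_many = len(self._pending) >= self._max_pending
        too_old = self._clock() - self._last_flush >= self._max_age
        if too_many or too_old:
            self.flush()

    def flush(self):
        if self._pending:
            batch = dict(self._pending)
            self._pending.clear()
            self._flush_to_db(batch)    # one merged request to the database
        self._last_flush = self._clock()

# Usage: three writes to the cache become one batch for the database.
batches = []
cache = {}
buffer = WriteBehindBuffer(batches.append, max_pending=2, max_age=60.0)
buffer.write(cache, "likes:1", 1)
buffer.write(cache, "likes:1", 2)
buffer.write(cache, "likes:2", 1)
print(batches)
```

The trade-off of this design is durability: modifications that are still only in the buffer are lost if the process fails before the next flush.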

5. NoSQL storage

Whether the load is reads or writes, as traffic grows further we will eventually reach the limits of what a relational database setup can handle. Adding more machines is relatively expensive and may not really solve the problem. At this point, consider moving some core data to a NoSQL database. Most NoSQL stores use a key-value model. We recommend Redis, introduced above: Redis is a memory cache, but it can also be used as storage, persisting data directly to disk.

In this case, we move some of the frequently read and written data out of the database and into a newly built Redis storage cluster, which further reduces the load on the original MySQL database. At the same time, because Redis is itself a memory-level cache, read and write performance improves greatly.

Top-tier Internet companies in China use many architectures similar to the one above. However, the cache service they use is not necessarily Redis. They have a wider range of options and may even develop their own NoSQL service based on their business characteristics.

6. Empty node query problem

Once we have built all the services mentioned above, we may think the Web system is already very robust. But as always, new problems will still come. An empty node query is a request for data that does not exist in the database at all. For example, if I query the information of a person who does not exist, the system searches the caches at every level step by step, finally reaches the database itself, concludes that nothing can be found, and returns that result to the front end. Because none of the caches helps with such a request, it consumes a lot of system resources, and a large volume of empty node queries can overwhelm system services.
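The passage above describes the problem but not a remedy. One commonly used mitigation, shown here as a hedged sketch rather than as the approach of this article, is to cache the "not found" result itself, so that repeated requests for the same missing key stop at the cache. The class `NegativeCachingLookup`, the `_MISSING` marker, and the `db_hits` counter are hypothetical names; a real deployment would give the placeholder a short expiry so that data created later becomes visible.

```python
_MISSING = object()  # marker stored in the cache for "known not to exist"

class NegativeCachingLookup:
    """Cache both found values and confirmed misses."""

    def __init__(self, load_from_db):
        self.cache = {}
        self.load_from_db = load_from_db
        self.db_hits = 0  # how many lookups actually reached the database

    def get(self, key):
        if key in self.cache:
            cached = self.cache[key]
            return None if cached is _MISSING else cached
        self.db_hits += 1
        value = self.load_from_db(key)
        # Store a placeholder for missing rows so the next request for the
        # same key is answered by the cache instead of the database.
        self.cache[key] = _MISSING if value is None else value
        return value

# Usage: a dictionary stands in for the database.
database = {"alice": {"age": 30}}
lookup = NegativeCachingLookup(database.get)
lookup.get("nobody")
lookup.get("nobody")
print(lookup.db_hits)  # only the first request for the missing key hit the DB
```

This does not help when every request asks for a different nonexistent key, so it is usually combined with other safeguards such as input validation.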
