This article shows how to export database tables to text files on Linux and then count and deduplicate the records with standard command-line tools.

1. Export the database table to a text file

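mysql -h host -P port -u user -ppassword -A database -e "select email,domain,time from ent_login_01_000" > ent_login_01_000.txt

There must be no space between -p and the password. With a space, mysql prompts for the password and treats the following word as a separate argument. When its output is redirected to a file, mysql writes tab-separated rows, preceded by a header line with the column names.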

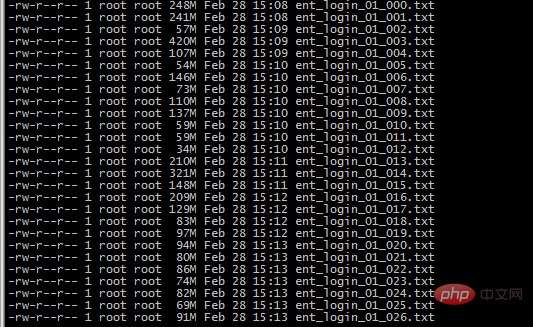

The task was to count the users who logged in during the last 3 months. The login data is split into tables by month, with 128 tables per month. All of them were exported to files, about 80 GB in total.
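Running the export by hand for 128 tables a month is tedious, so it can be wrapped in a loop. The sketch below is one possible way to do it and makes assumptions the article does not state: the shard tables are assumed to be named `<prefix>_000` through `<prefix>_127`, extrapolated from the single name `ent_login_01_000`, and the `export_shards` function and the `DB_HOST`, `DB_PORT`, `DB_USER`, `DB_PASS` and `DB_NAME` variables are hypothetical placeholders for your own connection settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export tables "<prefix>_000" .. "<prefix>_<count-1>"
# to "<table>.txt", running the same query as the single-table command.
# DB_HOST, DB_PORT, DB_USER, DB_PASS, DB_NAME are placeholder variables.
export_shards() {
  local prefix=$1 count=$2 i table
  for ((i = 0; i < count; i++)); do
    # Assumed naming scheme: zero-padded three-digit shard number (0 -> 000).
    printf -v table '%s_%03d' "$prefix" "$i"
    mysql -h "$DB_HOST" -P "$DB_PORT" -u "$DB_USER" --password="$DB_PASS" -A "$DB_NAME" \
      -e "select email,domain,time from $table" > "$table.txt" || return 1
  done
}
```

With those variables set, `export_shards ent_login_01 128` would write `ent_login_01_000.txt` through `ent_login_01_127.txt`, provided the naming assumption holds. The loop stops at the first failing table so that a broken connection does not silently leave gaps.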

2. Use grep to extract the records for 2018-12, 2019-01 and 2019-02

find ./ -type f -name "ent_login_*" | xargs cat | grep "2018-12" > 2018-12.txt

find ./ -type f -name "ent_login_*" | xargs cat | grep "2019-01" > 2019-01.txt

find ./ -type f -name "ent_login_*" | xargs cat | grep "2019-02" > 2019-02.txt
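The three commands above read all 80 GB of exports three times, and `grep "2018-12"` matches the string anywhere in a line, including inside an email address. A single-pass alternative is sketched below: it routes each line by the month taken from the start of the third (`time`) field. It assumes the export is tab-separated, as mysql produces when redirected to a file, and that `time` is printed as `YYYY-MM-DD hh:mm:ss`; the `split_by_month` name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read exported rows on stdin and write each row to
# "<YYYY-MM>.txt" when its month is one of the months given as arguments.
split_by_month() {
  # Pass the wanted months to awk as one space-separated string.
  awk -F '\t' -v months="$*" '
    BEGIN {
      n = split(months, list, " ")
      for (i = 1; i <= n; i++) wanted[list[i]] = 1
    }
    {
      # Assumption: the first 7 characters of the time field are YYYY-MM.
      # The header line ("time") never matches, so it is skipped.
      m = substr($3, 1, 7)
      if (m in wanted) print > (m ".txt")
    }
  '
}
```

Usage: `find ./ -type f -name "ent_login_*" -exec cat {} + | split_by_month 2018-12 2019-01 2019-02`. awk truncates each output file when it first opens it in a run and keeps it open afterwards, so the three monthly files are written in one scan of the data.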

3. Use awk to keep only the user, then sort and uniq to remove duplicate lines

cat 2019-02.txt | awk -F " " '{print $1"@"$2}' | sort -T /mnt/public/phpdev/187_test/tmp/ | uniq > 2019-02-awk-sort-uniq.txt

cat 2019-01.txt | awk -F " " '{print $1"@"$2}' | sort -T /mnt/public/phpdev/187_test/tmp/ | uniq > 2019-01-awk-sort-uniq.txt

cat 2018-12.txt | awk -F " " '{print $1"@"$2}' | sort -T /mnt/public/phpdev/187_test/tmp/ | uniq > 2018-12-awk-sort-uniq.txt

uniq only removes duplicate lines that are adjacent, so the lines are sorted first to bring identical ones together. The -T option sets the directory for sort's temporary files. By default sort uses /tmp, which is on the root partition, and there was not enough space there, so I pointed it at another directory.
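`sort -u` performs the sort and the deduplication in one step and accepts the same `-T` option. The sketch below wraps the step in a hypothetical `dedup_users` function and adds `LC_ALL=C`, an optional choice not in the original commands: it makes sort compare raw bytes, which is usually faster on large files and means `-u` treats only byte-identical lines as duplicates.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "email@domain" from the first two fields of
# each line in $1 and write the distinct values, sorted, to $2.
# $3 is the directory for sort's temporary files (the role of -T above).
dedup_users() {
  local in=$1 out=$2 tmpdir=${3:-/tmp}
  # LC_ALL=C: byte-wise comparison; -u keeps one copy of each identical line.
  awk '{ print $1 "@" $2 }' "$in" | LC_ALL=C sort -u -T "$tmpdir" > "$out"
}
```

For example, `dedup_users 2019-02.txt 2019-02-awk-sort-uniq.txt /mnt/public/phpdev/187_test/tmp/` replaces the first pipeline above. Running uniq without sorting first would leave a user who logged in on two non-consecutive lines in the output twice.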

The files produced by these steps take up more than 100 GB.
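The deduplicated files already hold the statistics: each line is one distinct user, so a line count gives the users per month. Because each file is sorted, `sort -m` can merge them without re-sorting, and `-u` drops users who logged in during more than one month, giving the 3-month total. The `count_users` name is hypothetical, and `sort -m` relies on its inputs being sorted in the current locale's order, so it should run with the same locale settings (for example the same `LC_ALL`) as the sort that produced the files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the distinct-user count of each file, then the
# number of distinct users across all files. Inputs must already be sorted
# and deduplicated (e.g. the *-awk-sort-uniq.txt files).
count_users() {
  local f
  for f in "$@"; do
    # $(( )) strips the padding some wc implementations add.
    printf '%s\t%s\n' "$f" "$(( $(wc -l < "$f") ))"
  done
  # -m merges already-sorted inputs; -u keeps one copy of repeated users.
  printf 'total\t%s\n' "$(( $(sort -m -u "$@" | wc -l) ))"
}
```

Usage: `count_users 2018-12-awk-sort-uniq.txt 2019-01-awk-sort-uniq.txt 2019-02-awk-sort-uniq.txt`.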


The above covers exporting database files under Linux for statistics and deduplication. For more, see other related articles on the PHP Chinese website.