First of all, we need to know what a crawler is! When I first heard the word "crawler", I thought it meant a crawling insect, which is funny to think about now. Later I found out that it is a tool for scraping data from the Internet!

A web crawler (also known as a web spider or web robot, and more commonly called a web chaser in the FOAF community) is a program or script that automatically collects information from the World Wide Web according to certain rules. Other, less commonly used names include ant, automatic indexer, emulator, and worm.

What can a crawler do?

1. Simulate a browser opening a web page and obtain the part of the page's data that we want.

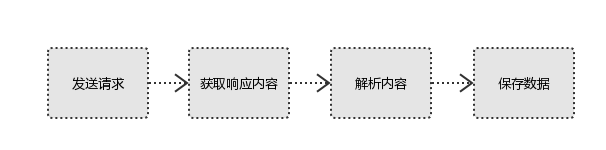

2. From a technical perspective, the program simulates the browser's request to a site, downloads the HTML code, JSON data, or binary data (pictures, videos) that the site returns, and then extracts the data you need so it can be stored and used.

3. If you look carefully, it is not hard to see that more and more people are learning about crawlers. On the one hand, more and more data is available on the Internet. On the other hand, programming languages like Python provide more and more excellent tools that make crawling simple and easy.

4. Using crawlers, we can collect a large amount of valuable data and gain information that intuition alone cannot provide. For example, on Zhihu: crawl high-quality answers and screen out the best content on each topic for you.

The basic flow is: send a request, then get the response content. The process is very simple, isn't it? The page a user sees in the browser is built from HTML code. Our crawler obtains this content, then analyzes and filters the HTML code to get the resources we want.
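The flow described above (send a request, get the response, filter the HTML) can be sketched in a few lines of Python. This is only a minimal illustration using the standard library, not the method of any particular crawler framework. The names `fetch_html`, `extract_links`, and `LinkExtractor`, the User-Agent string, and the sample HTML are all assumptions made for this example.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Hypothetical helper: collects the href of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag's attributes.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url, timeout=10):
    """Send a GET request, as a browser would, and return the body as text."""
    # The User-Agent value is an assumption; some sites reject the default one.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (example crawler)"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_links(html):
    """Filter the HTML and keep only the part we want: the link targets."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.links


# Offline demo on a made-up HTML snippet, so no network access is needed.
sample_html = '<p><a href="/python">Python</a> <a href="https://example.com/doc">Docs</a></p>'
print(extract_links(sample_html))  # ['/python', 'https://example.com/doc']
```

To crawl a real page, the two steps would be chained, for example `extract_links(fetch_html("https://example.com/"))`. Before doing so, check the site's terms of use and its `robots.txt`.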

