ResNet was proposed in 2015 by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Using residual units, the authors successfully trained a 152-layer deep neural network and won the ILSVRC 2015 competition with a top-5 error rate of 3.57%, while using fewer parameters than VGGNet. The ResNet structure makes very deep neural networks much faster to train and also substantially improves model accuracy.

The original inspiration for ResNet came from the following problem: as the depth of a neural network keeps increasing, a degradation problem appears. Accuracy first rises, then saturates, and adding still more layers causes it to fall. This is not overfitting, because the error increases not only on the test set but also on the training set itself.

ResNet takes a different approach. Suppose there is an optimal number of layers for a given task. The deep networks we design often have more layers than that, so many of them are redundant. We would like these redundant layers to perform an identity mapping, so that their output is exactly the same as their input. Which layers end up acting as identity layers is determined by the network itself during training. To make this possible, groups of several layers in the original network are turned into residual blocks.

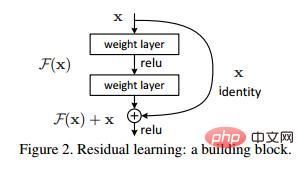

Suppose a relatively shallow network has already reached its saturated accuracy. If we append several identity-mapping layers (y = x) to it, the error should at least not increase; in other words, a deeper network should not have a higher training error. This idea of using identity mappings to pass the output of an earlier layer directly to a later layer is the inspiration for ResNet. Suppose the input to some part of a neural network is x and the desired output is H(x). If we pass the input x directly to the output as an initial result, then what remains to be learned is F(x) = H(x) - x. The figure shows such a ResNet residual learning unit (Residual Unit).

ResNet in effect changes the learning target: instead of learning the complete output H(x), the layers learn only the difference between the output and the input, H(x) - x, which is the residual.
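Written out with the same symbols, the change of target looks as follows. This is only a restatement of the definitions above, added to make explicit why a redundant layer becomes easy to learn; it is not a derivation taken from the original article.

$$
H(x) = F(x) + x \quad\Longleftrightarrow\quad F(x) = H(x) - x
$$

$$
\text{if the desired mapping is the identity, } H(x) = x, \text{ then } F(x) = 0
$$

So for a layer that should simply pass its input through, the block only has to drive F(x) toward zero rather than reproduce x through a stack of nonlinear layers.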

In the figure, the first layer applies a linear transformation followed by an activation. In the second layer, the input x is added to F(x) after the linear transformation and before the activation, and the sum is then passed through the activation to produce the output. The path that carries x to this addition is called a shortcut connection.
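The forward pass of such a unit can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation from the ResNet paper: it uses fully connected layers instead of convolutions, ReLU as the activation, and omits batch normalization. The names `residual_unit`, `w1`, `b1`, `w2`, and `b2` are hypothetical and chosen only for this example.

```python
import numpy as np


def relu(z):
    """Element-wise ReLU activation."""
    return np.maximum(z, 0.0)


def residual_unit(x, w1, b1, w2, b2):
    """Two-layer residual unit (assumed: dense layers, ReLU, no batch norm).

    x has shape (batch, d); both weight matrices have shape (d, d) so that
    F(x) has the same shape as x and the two can be added.
    """
    hidden = relu(x @ w1 + b1)   # first layer: linear transformation + activation
    f_x = hidden @ w2 + b2       # second layer: linear transformation only, F(x)
    return relu(f_x + x)         # shortcut: add x before the second activation


rng = np.random.default_rng(0)
d = 8
# Non-negative input, so that ReLU leaves x unchanged in the identity check below.
x = np.abs(rng.normal(size=(4, d)))

w1 = rng.normal(size=(d, d)) * 0.1
b1 = np.zeros(d)
w2 = rng.normal(size=(d, d)) * 0.1
b2 = np.zeros(d)

out = residual_unit(x, w1, b1, w2, b2)

# If the second layer's weights and bias are zero, F(x) = 0 and the unit
# reduces to the identity mapping on non-negative inputs.
identity_out = residual_unit(x, w1, b1, np.zeros((d, d)), np.zeros(d))
```

In this sketch, setting the second layer to zero gives F(x) = 0, so `identity_out` equals `x`. That is the sense in which a redundant block can fall back to an identity mapping.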

With the ResNet structure, the increase in training error caused by adding more layers disappears. The training error of a ResNet decreases gradually as the number of layers grows, and performance on the test set improves as well. Shortly after ResNet was published, Google borrowed its core idea and proposed Inception V4 and Inception ResNet V2. By fusing these two models, it achieved a 3.08% top-5 error rate on the ILSVRC data set. This shows that ResNet and its ideas are a significant contribution to research on convolutional neural networks and generalize well to other architectures.
