What nginx can do

The origin of Nginx

Never heard of Nginx? Then you have almost certainly heard of its "peer", Apache. Like Apache, Nginx is a web server. Following the REST architectural style, it uses a Uniform Resource Identifier (URI) or Uniform Resource Locator (URL) as the basis for communication and provides various network services over the HTTP protocol.

However, each of these servers was shaped by the environment in which it was originally designed, such as the user scale, network bandwidth, and product features of the time, and each was positioned and developed differently. As a result, every web server has its own distinctive characteristics.

Apache has a long history and was for many years the most widely used web server in the world. It has many advantages: it is stable, open source, cross-platform, and so on. But it has also been around for a long time. When it emerged, the Internet industry was far smaller than it is now, so Apache was designed as a heavyweight server and was not built for high concurrency. Handling tens of thousands of concurrent connections on Apache causes the server to consume a lot of memory, and the operating system's switching between processes or threads consumes a large amount of CPU, which lowers the average response speed of HTTP requests.

All of this makes it hard for Apache to serve as a high-performance web server under heavy concurrency, and so the lightweight, high-concurrency server Nginx came into being.

Russian engineer Igor Sysoev developed Nginx in C while working for Rambler Media. As a web server, Nginx has provided Rambler Media with excellent and stable service ever since.

Igor Sysoev then open sourced the Nginx code and released it under a free software license.

Why did it catch on? Because:

- Nginx uses an event-driven architecture, which allows it to support a very large number of concurrent TCP connections (up to millions)

- Its highly modular design and free software license have allowed third-party modules to appear in a steady stream (this is the era of open source, after all)

- Nginx is a cross-platform server that can run on Linux, Windows, FreeBSD, Solaris, AIX, Mac OS, and other operating systems

- These design choices also make it very stable

So Nginx became popular!

Where Nginx comes in

Nginx is a free, open source, high-performance HTTP server and reverse proxy server; it is also an IMAP, POP3, and SMTP proxy server. Nginx can be used as an HTTP server to publish a website, and it can be used as a reverse proxy to implement load balancing.

About proxies

Before talking about proxies, we first need to clarify the concept. A proxy is a representative, a channel through which something is done on someone else's behalf.

Two roles are involved: the party being represented and the target. The process in which the represented party reaches the target through the proxy to complete some task is called proxying. A brand store in everyday life is a good example: a customer walks into an adidas store and buys a pair of shoes. The store is the proxy, the party being represented is the adidas manufacturer, and the target is the customer.

Forward proxy

Before talking about reverse proxies, let's look at forward proxies, which are the kind of proxy most people come into contact with. We will look at the forward proxy processing model from two angles, software and everyday life.

In today's network environment, if we need to visit certain foreign websites for technical reasons, we may find that they cannot be reached directly from a browser. In that case, people often resort to a workaround commonly abbreviated as FQ. The main idea of FQ is to find a proxy server that can reach the foreign website: we send the request to the proxy server, the proxy server accesses the foreign website, and then it passes the retrieved data back to us.

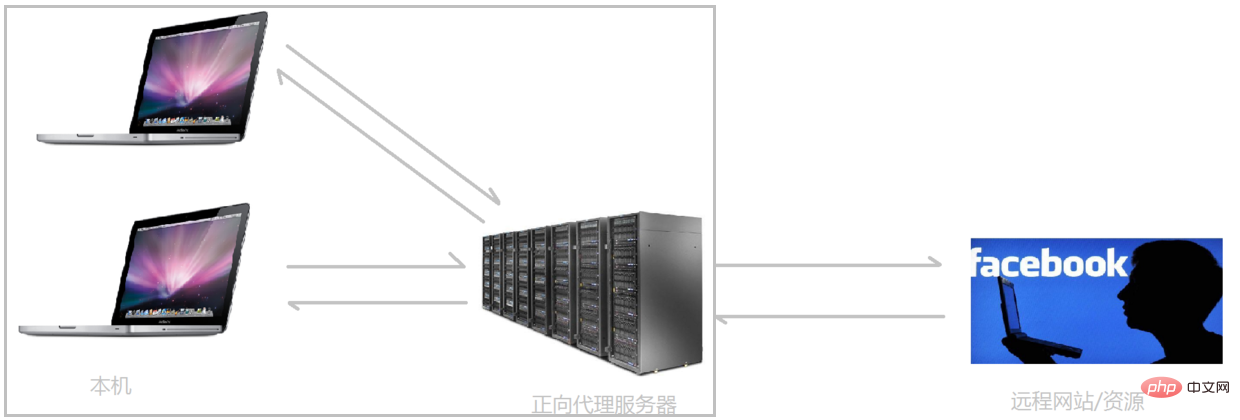

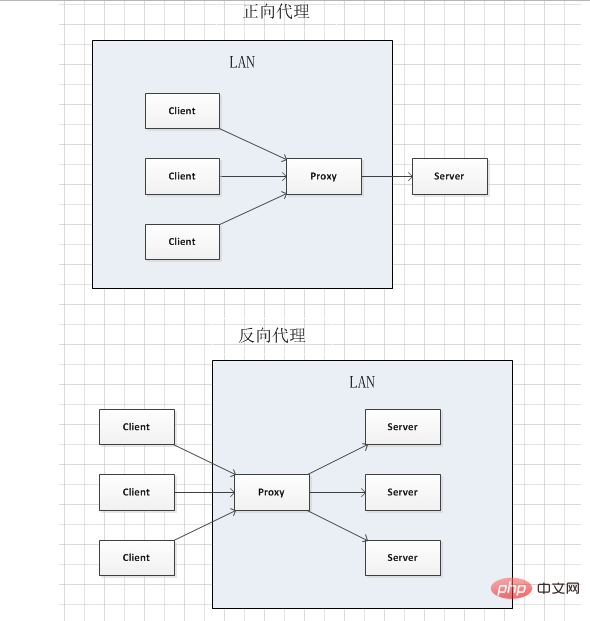

The proxy mode above is called a forward proxy. Its most important feature is that the client knows exactly which server it wants to reach, while the server only knows which proxy server the request came from and does not know which specific client sent it. In other words, the forward proxy shields or hides the real client's information. Let's look at a schematic diagram (the client and the forward proxy are framed together because they belong to the same environment; more on this later):

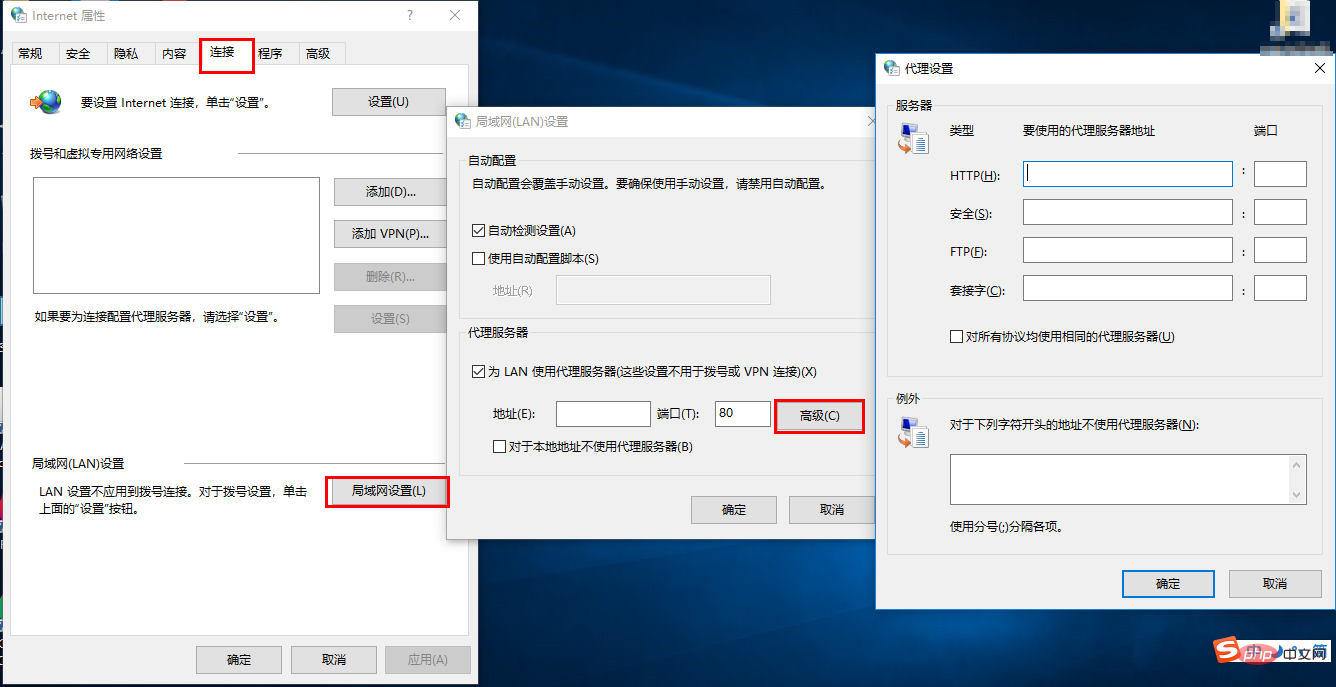

The client must be configured to use a forward proxy server, which of course requires knowing the proxy server's IP address and the port of the proxy program, as shown in the picture.

In summary: a forward proxy "acts as a proxy for the client and makes requests on behalf of the client". It is a server located between the client and the origin server. To obtain content from the origin server, the client sends a request to the proxy and specifies the target (the origin server). The proxy then forwards the request to the origin server and returns the obtained content to the client. The client must be specially configured to use a forward proxy.
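To make the "special configuration" on the client side concrete, here is a minimal Python sketch using the standard library's urllib.request. The proxy address is a made-up placeholder, not something from this article, and the actual request is left commented out because it needs a reachable proxy.

```python
import urllib.request

# Hypothetical forward proxy address (an assumption for illustration):
# replace it with the IP and port of a proxy you actually control.
PROXY_URL = "http://192.0.2.10:3128"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)

# The client still names the origin server it wants; the proxy fetches it.
# Commented out because it needs a reachable proxy:
# with opener.open("http://example.com/") as response:
#     print(response.status)
```

Note that the client needs both pieces of information mentioned above: the proxy's IP address and its port.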

Uses of a forward proxy:

(1) Access resources that were originally inaccessible, such as Google

(2) Cache content to speed up access to resources

(3) Authorize client access and authenticate users before they go online

(4) Record user access (online behavior management) and hide user information from the outside

Reverse proxy

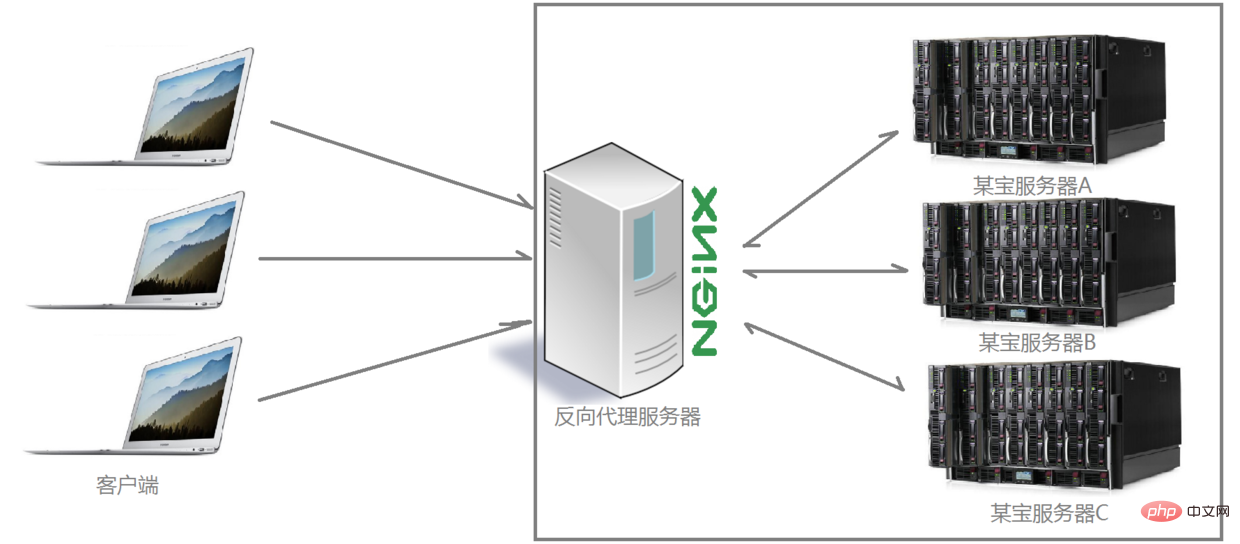

Now that we understand what a forward proxy is, let's look at how a reverse proxy works. Take a certain large website in China as an example: the number of visitors connected to it at the same time each day has exploded, and a single server is far from able to satisfy people's growing desire to shop. This is where a familiar term comes in: distributed deployment, that is, deploying multiple servers to remove the limit on the number of visitors. Most of that website's functionality is served directly through Nginx acting as a reverse proxy, and after wrapping Nginx together with other components it was given a fancy name: Tengine. Interested readers can visit Tengine's official website for details: http://tengine.taobao.org/. So how does a reverse proxy implement distributed cluster operation? Let's first look at a schematic diagram (the server and the reverse proxy are framed together because they belong to the same environment; more on this later):

As the diagram shows, after receiving the requests that multiple clients send to the server, the Nginx server distributes them according to certain rules to the back-end business servers for processing. Here the source of each request, that is, the client, is known, but which server actually handles the request is not. Nginx is playing the role of a reverse proxy.

The client is unaware of the proxy's existence. A reverse proxy is transparent to the outside world: visitors do not know that they are talking to a proxy, because the client needs no special configuration to access it.

A reverse proxy "acts as a proxy for the server and receives requests on behalf of the server". It is mainly used when servers are deployed as a distributed cluster, and it hides the servers' information.

The role of a reverse proxy:

(1) Protect the internal network: the reverse proxy is usually exposed as the public access address, while the web servers stay on the internal network

(2) Load balancing: the reverse proxy server spreads the website's load across servers

Project scenario

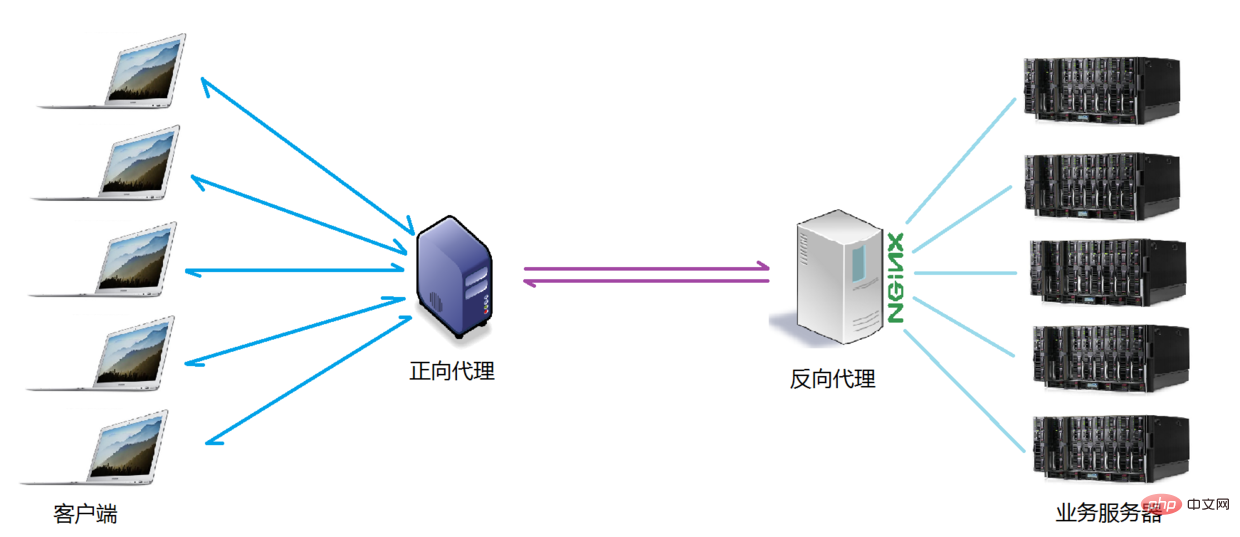

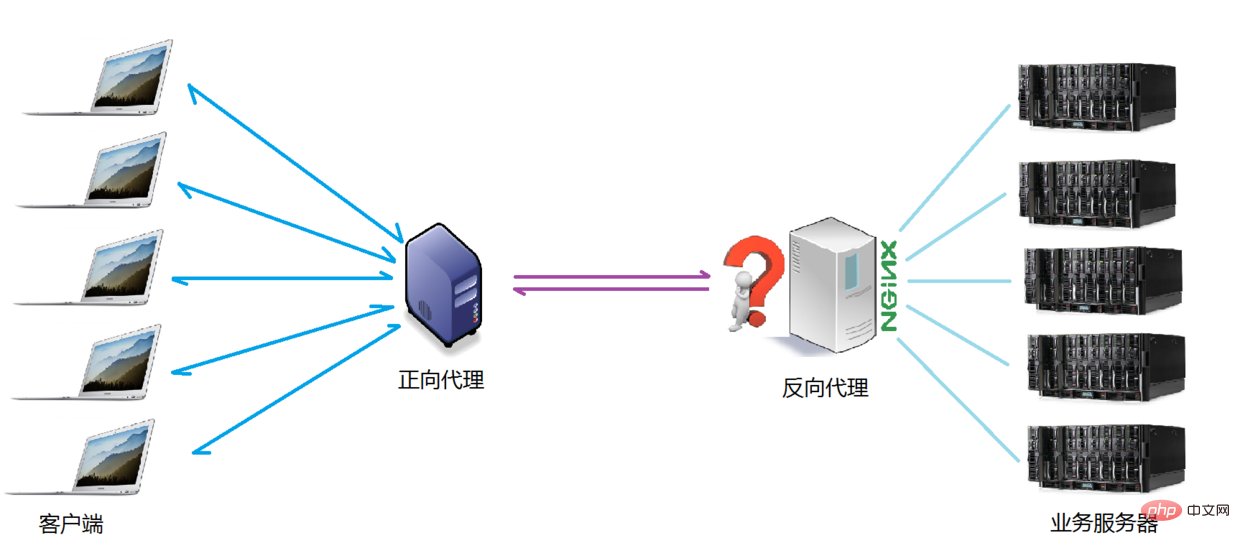

In real projects, a forward proxy and a reverse proxy are likely to coexist in one application scenario: the forward proxy forwards the client's requests to the target server, and that target server is itself a reverse proxy server, which in turn proxies multiple real business processing servers. The specific topology diagram is as follows:

The difference between the two

The figure illustrates the difference between a forward proxy and a reverse proxy.

Illustration:

In a forward proxy, the proxy and the client belong to the same LAN (inside the box in the picture), and the client's information is hidden;

In a reverse proxy, the proxy and the server belong to the same LAN (inside the box in the picture), and the server's information is hidden;

In fact, the proxy does the same thing in both cases: it sends and receives requests and responses on behalf of another party. Structurally, however, the left and right sides are swapped, which is why the proxy mode that appeared later is called a reverse proxy.

Load Balancing

Now that the concept of a proxy server is clear, the next question is: when Nginx plays the role of a reverse proxy server, by what rules does it distribute requests? And can those rules be controlled for different application scenarios?

The requests sent by clients and received by the Nginx reverse proxy server are what we call the load.

The rule by which those requests are distributed to different servers for processing is the balancing rule.

So the process of distributing the requests received by the server according to such a rule is called load balancing.
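The two terms can be shown with a toy model in Python. This is only a sketch of the idea, not how Nginx is implemented: the list of requests stands for the load, and a plain round-robin rotation stands for the balancing rule. The back-end addresses are hypothetical.

```python
from collections import Counter
from itertools import cycle

# Hypothetical back-end addresses, for illustration only.
BACKENDS = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]


def distribute(requests, backends):
    """Assign each request to a back end in plain round-robin order."""
    rotation = cycle(backends)
    return [(request, next(rotation)) for request in requests]


requests = [f"GET /page/{i}" for i in range(9)]   # the load
assignments = distribute(requests, BACKENDS)      # the balancing rule applied
per_backend = Counter(backend for _, backend in assignments)
print(per_backend)
```

With nine requests and three back ends, each back end ends up handling three requests.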

In practice, load balancing comes in two types: hardware load balancing and software load balancing. Hardware load balancing, also called hard load balancing, uses dedicated devices such as F5 load balancers. It is relatively expensive, but it offers very good guarantees of stability, security, and performance. Only companies such as China Mobile and China Unicom tend to choose hardware load balancing; for cost reasons, most companies choose software load balancing, which uses existing technology combined with host hardware to implement a message queue distribution mechanism.

The load balancing scheduling algorithms supported by Nginx are as follows:

- Weighted round robin (default, commonly used): received requests are distributed to the back-end servers according to their weights. If a back-end server goes down, Nginx automatically removes it from the rotation, and request handling is not affected. A weight value (weight) can be set for each back-end server to adjust the share of requests it receives: the larger the weight, the more likely the server is to be assigned a request. Weights are mainly tuned to match the hardware configuration of the different back-end servers in a real environment.

- ip_hash (commonly used): each request is matched according to the hash of the originating client's IP address. Under this algorithm, a client with a fixed IP address always reaches the same back-end server, which to some extent solves the session sharing problem in a cluster deployment.

- fair: a scheduling algorithm that adjusts dynamically, balancing requests according to the time each back-end server takes from processing a request to responding. Servers with short response times and high processing efficiency are more likely to be assigned requests, while servers with long response times and low processing efficiency are assigned fewer. It combines the advantages of the first two algorithms. Note, however, that Nginx does not support the fair algorithm by default; to use it, install the upstream_fair module.

- url_hash: requests are distributed according to the hash of the requested URL, so each URL always points to the same back-end server, which can improve caching efficiency when Nginx is used as a static server. Note that older Nginx versions do not support this scheduling algorithm by default and require installing the Nginx hash software package; versions 1.7.2 and later provide a built-in hash directive in the upstream module.
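The first two algorithms in the list can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not Nginx's source code: the server names and weights are hypothetical, the `down` set is a simplified stand-in for removing a failed server from rotation, and CRC32 is just a convenient hash chosen for the sketch. Hashing only the first three octets of an IPv4 address follows what the Nginx documentation describes for ip_hash.

```python
import zlib
from collections import Counter


class WeightedRoundRobin:
    """Smooth weighted round robin over a {server: weight} mapping."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.current = {server: 0 for server in self.weights}
        self.down = set()  # servers temporarily taken out of rotation

    def pick(self):
        alive = [s for s in self.weights if s not in self.down]
        if not alive:
            raise RuntimeError("no back-end server available")
        total = sum(self.weights[s] for s in alive)
        # Every live server gains its weight; the current leader is chosen
        # and then pays back the total, which spreads its turns out.
        for server in alive:
            self.current[server] += self.weights[server]
        chosen = max(alive, key=lambda s: self.current[s])
        self.current[chosen] -= total
        return chosen


def pick_by_ip(client_ip, servers):
    """Map an IPv4 client to a server by hashing its first three octets."""
    key = ".".join(client_ip.split(".")[:3])
    return servers[zlib.crc32(key.encode()) % len(servers)]


# Hypothetical servers and weights.
rr = WeightedRoundRobin({"a": 5, "b": 1, "c": 1})
sequence = [rr.pick() for _ in range(7)]
print(sequence, Counter(sequence))

servers = ["a", "b", "c"]
print(pick_by_ip("203.0.113.7", servers))
```

Over seven picks, server a is chosen five times and b and c once each, with a's turns interleaved rather than bunched together. The same hashing idea applies to url_hash: replace the IP-derived key with the request URL.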

Comparison of several commonly used web servers

| Comparison item \ Server | Apache | Nginx | Lighttpd |
| --- | --- | --- | --- |
| Proxy | Very good | Very good | Average |
| Rewriter | Good | Very good | Average |
| Fcgi | Bad | Good | Very good |
| | Not supported | Supported | Not supported |
| | Very high | Very small | Relatively small |
| | Good | Very good | Bad |
| | Good | Average | Average |
| | Average | Very good | Good |
| | Average | Very good | Average |


The above is the detailed content of What nginx can do. For more information, please follow other related articles on the PHP Chinese website!
