Best Practices for MongoDB Indexes

Preface

Most developers know that indexes make queries faster. In practice, though, we often run into questions and difficulties:

- Queries filter on many different fields. Does every field that appears in a query need an index?

- How do you choose between a compound index and single-field indexes? Is it better to add both, or one single-field index per field?

- Are there any side effects of adding an index?

- The index has been added, but the query is still not fast enough. What then?

This article explains the basics of indexing and attempts to answer the questions above.

1. What exactly is an index?

Most developers who have worked with indexes know that an index is similar to a book's table of contents. To find the content you want, you look up the matching keyword in the table of contents, find the page number of the corresponding chapter, and then turn to the specific content.

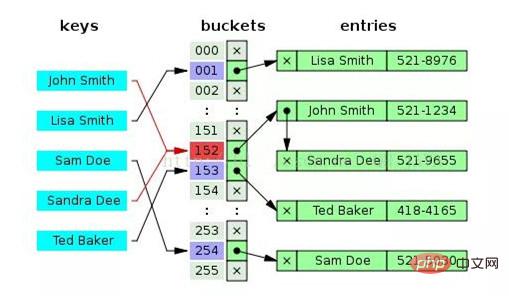

In data-structure terms, the simplest index implementation is similar to a hashmap: a key maps to a specific location, where the actual content is found. Hashing is not the only option, though; there are many ways to implement an index.

(1) Index implementations and their characteristics

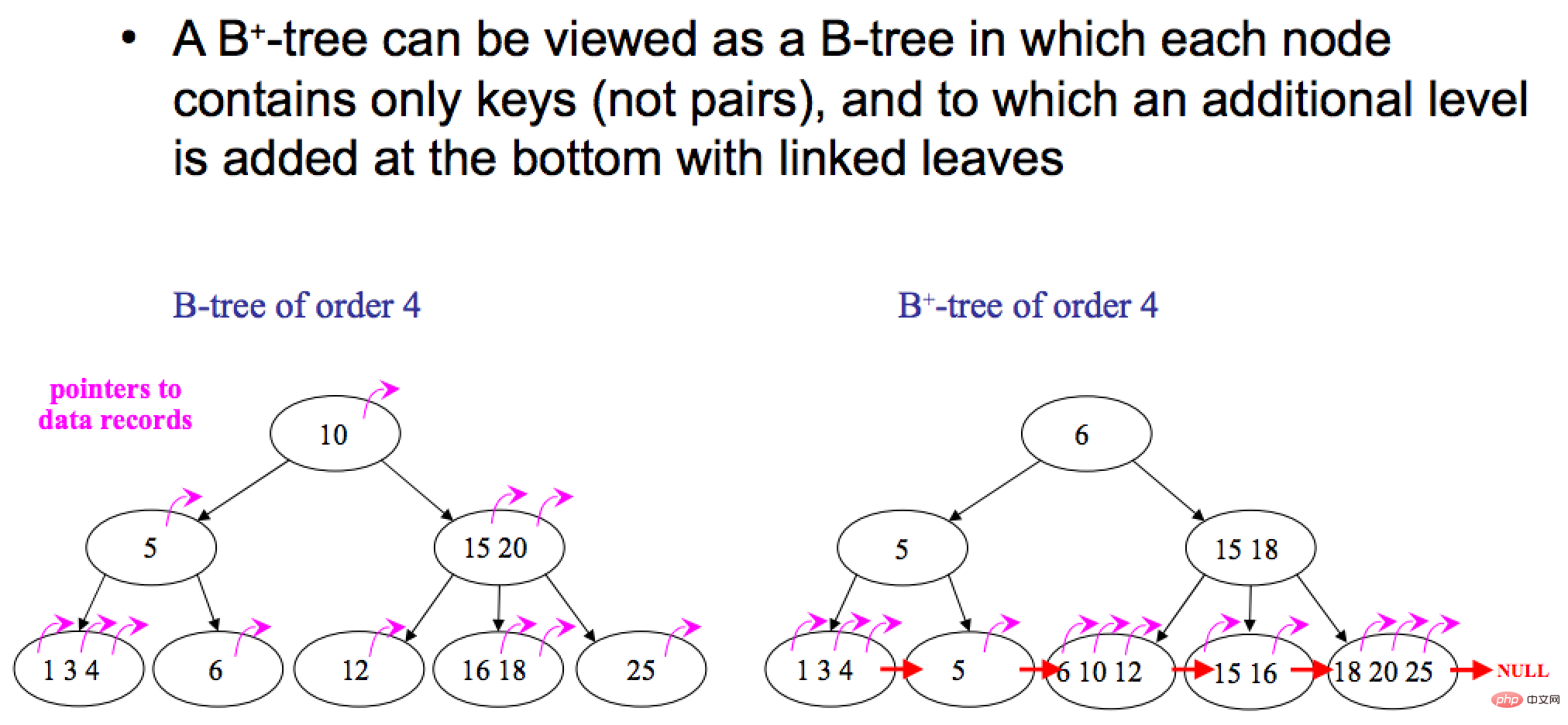

| | hash | B-tree | B+ tree |
|---|---|---|---|
| Used by | Redis HSET | MongoDB & PostgreSQL | MySQL |
| Structure | hashmap | non-leaf nodes can also store data | leaves store data; non-leaf nodes store only index keys, no data; leaves are linked to each other |
| Lookup cost | close to O(1) | O(1) to O(log n); faster average search time, but unstable query time | O(log n); contiguous data, stable query time |
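To make the comparison more concrete, here is a minimal sketch in plain JavaScript. It is a toy model, not how any of these databases actually implement their indexes: a `Map` stands in for a hash index, and a sorted array with binary search stands in for an ordered, tree-like index. The sample keys, the `pos-*` locations, and the helper names `lowerBound` and `rangeScan` are all made up for illustration.

```javascript
// Toy model: a Map plays the role of a hash index, a sorted array plays
// the role of an ordered (tree-like) index. Keys and positions are made up.
const entries = [[42, "pos-A"], [7, "pos-B"], [19, "pos-C"], [88, "pos-D"], [23, "pos-E"]];

// Hash index: good at exact-match lookups, but keeps no key order.
const hashIndex = new Map(entries);

// Ordered index: entries are kept sorted by key.
const orderedIndex = [...entries].sort((a, b) => a[0] - b[0]);

// Binary search for the first entry whose key is >= target: O(log n).
function lowerBound(index, target) {
  let lo = 0;
  let hi = index.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (index[mid][0] < target) lo = mid + 1;
    else hi = mid;
  }
  return lo;
}

// Range scan: find the start with binary search, then walk forward
// through neighbouring entries until the upper bound is passed.
function rangeScan(index, min, max) {
  const out = [];
  for (let i = lowerBound(index, min); i < index.length && index[i][0] <= max; i++) {
    out.push(index[i][1]);
  }
  return out;
}

const exact = hashIndex.get(19);               // exact match via hashing
const range = rangeScan(orderedIndex, 10, 50); // keys between 10 and 50
console.log(exact, range);
```

The point of the sketch is the trade-off: the hash lookup answers only "key equals X", while the ordered structure can also answer "key between X and Y" because neighbouring keys sit next to each other.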

There are many articles on the Internet that explain this, but it is not the focus of this article.

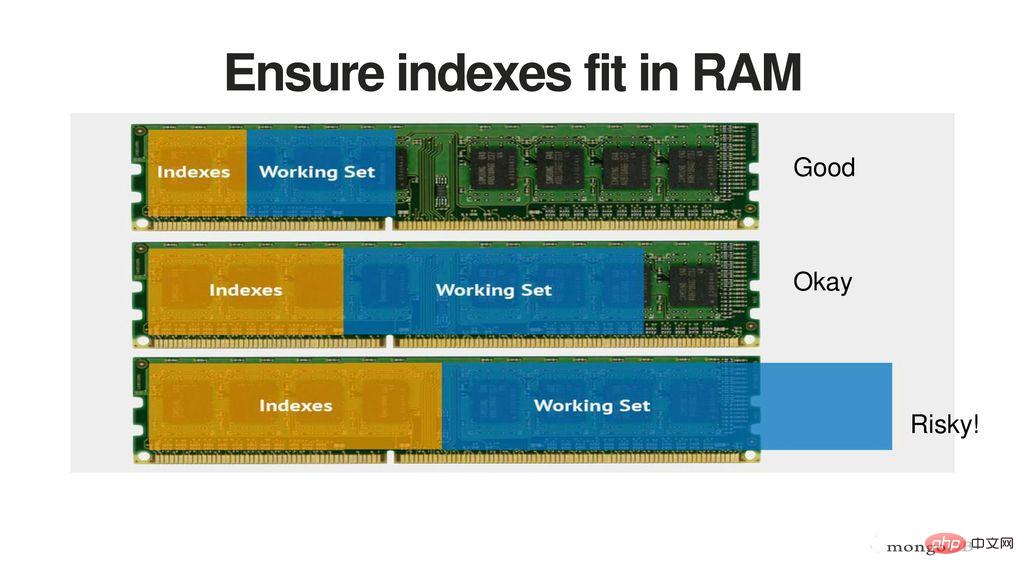

Indexes should be kept in memory as much as possible, with data coming second. Be careful to keep only the necessary indexes, and use memory as wisely as possible.

If the indexes come close to filling up memory, reads will spill over to disk more often and queries will slow down.

Take the simplest hashmap as an example: why is its complexity only "close to" O(1) rather than exactly O(1)? Because keys can collide or repeat. If many entries share the same key, the database still has to go through them one by one during a lookup. The same goes for a B-tree: its efficiency also depends on the choice of key.
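The effect of colliding or repeated keys can be sketched with a toy chained hash table. This is an illustration only: the class name `ToyHashIndex`, the fixed bucket count of 64, the string hash, and the sample keys are all assumptions, and real storage engines work differently.

```javascript
// Toy chained hash table with a fixed number of buckets (an assumption
// for illustration; real engines size and rehash their tables).
class ToyHashIndex {
  constructor(bucketCount) {
    this.buckets = Array.from({ length: bucketCount }, () => []);
  }

  // Simple polynomial string hash, reduced to a bucket.
  bucketFor(key) {
    let h = 0;
    for (const ch of String(key)) h = (h * 31 + ch.codePointAt(0)) >>> 0;
    return this.buckets[h % this.buckets.length];
  }

  insert(key, value) {
    this.bucketFor(key).push([key, value]);
  }

  // Returns the matching values plus how many entries had to be compared.
  lookup(key) {
    const bucket = this.bucketFor(key);
    const values = bucket.filter(([k]) => k === key).map(([, v]) => v);
    return { values, comparisons: bucket.length };
  }
}

const byUserId = new ToyHashIndex(64);
const byStatus = new ToyHashIndex(64);
for (let i = 0; i < 100; i++) {
  byUserId.insert(`u${i}`, i);                       // 100 distinct keys
  byStatus.insert(i % 2 === 0 ? "paid" : "open", i); // only 2 distinct keys
}

const uniq = byUserId.lookup("u42");
const dup = byStatus.lookup("paid");
console.log(uniq.comparisons, dup.comparisons);
```

With distinct keys the lookup touches only a handful of entries, while the two-valued key forces the lookup to walk through at least half of all entries, which is the "taking turns" cost described above.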

So a mistake developers often make is to index keys with low selectivity. For example, many collections hold hundreds of thousands of documents or more, yet a field such as type or status takes only a handful of distinct values. An index on such a field is usually not helpful.
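One rough way to judge whether a field is worth indexing is to estimate how many distinct values it has relative to the number of documents. The sketch below does this over an in-memory array; the `selectivity` helper and the sample documents (100 loans, 20 users, 2 statuses) are hypothetical and only serve to show the calculation.

```javascript
// Selectivity here means: distinct values / total documents.
// Values close to 1 mean almost every document has its own key;
// values close to 0 mean many documents share the same key.
function selectivity(docs, field) {
  const distinct = new Set(docs.map((d) => d[field]));
  return distinct.size / docs.length;
}

// Hypothetical sample: 100 loans spread over 20 users and 2 statuses.
const sampleLoans = Array.from({ length: 100 }, (_, i) => ({
  _id: i,
  userId: `u${i % 20}`,
  status: i % 2 === 0 ? "paid" : "open",
  amount: 100 + i,
}));

const userIdSelectivity = selectivity(sampleLoans, "userId");
const statusSelectivity = selectivity(sampleLoans, "status");
console.log({ userIdSelectivity, statusSelectivity });
```

In this made-up sample, `userId` narrows a lookup to about 5 documents, while `status` still leaves about 50, so an index on `status` alone saves very little work.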

Here we create a loans collection, simplified to only 100 documents. It is a loan table with the fields _id, userId, status (loan status), and amount.

db.loans.count()  // 100

Step 1: first create the compound index {userId:1, status:1}.

Step 2: create a typical single-field index on userId.

Interesting part: status does not hit the index, and the query falls back to a full collection scan.

Next step, add a sort:

Interesting part: status does not hit the index

Coming back to the interesting part, we drop the index {userId:1}:

Let's run one more test to confirm the leading-field behavior:

After this experiment, do you see the difference between {userId:1, status:1} and {status:1, userId:1}?

PS: In this case status actually has low selectivity; it is used here only as an example.
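The difference between the two field orders can be sketched with a simplified model of the index-prefix rule: a compound index can efficiently serve a query only when the query constrains a leading run of the index's fields. The function `usablePrefixLength` below is a hypothetical helper, not a MongoDB API, and it ignores sorts, ranges, and the planner's other options.

```javascript
// Simplified model of the compound-index prefix rule: count how many
// leading index fields are constrained by the query's equality filter.
// 0 means the index cannot narrow the search for this query.
function usablePrefixLength(indexFields, queryFields) {
  let n = 0;
  while (n < indexFields.length && queryFields.includes(indexFields[n])) n++;
  return n;
}

const userFirst = ["userId", "status"];   // models {userId:1, status:1}
const statusFirst = ["status", "userId"]; // models {status:1, userId:1}

const prefixResults = {
  userFirstQueryUserId: usablePrefixLength(userFirst, ["userId"]),
  userFirstQueryStatus: usablePrefixLength(userFirst, ["status"]),
  statusFirstQueryStatus: usablePrefixLength(statusFirst, ["status"]),
  statusFirstQueryUserId: usablePrefixLength(statusFirst, ["userId"]),
  userFirstQueryBoth: usablePrefixLength(userFirst, ["status", "userId"]),
};
console.log(prefixResults);
```

In this model a query on status alone gets nothing from {userId:1, status:1}, while a query on userId alone gets nothing from {status:1, userId:1}; a query on both fields can use either order in full.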

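3. Summary:

- Consider how fields are used and how often: put the high-selectivity, frequently used fields first;

- If you need to reduce the number of indexes to save memory or improve write performance, keep the high-selectivity, frequently used indexes and drop the low-selectivity, rarely used ones.

- Learn to use explain() to verify and analyze performance:

Databases generally provide a way to inspect the execution plan chosen by the query optimizer. Both MySQL and MongoDB use explain for this.

Syntax in MySQL:

explain your_sql

MongoDB:

yoursql.explain()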
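When reading explain output, a useful first check is whether the winning plan contains an IXSCAN stage or a COLLSCAN stage. The sketch below walks a plan tree and collects its stage names. The two plan objects are hand-written samples shaped like `queryPlanner.winningPlan`, not real output, and `collectStages` is a hypothetical helper; in mongosh the plan would typically come from something like `db.loans.find({ ... }).explain("executionStats")`, and its exact shape can vary between server versions.

```javascript
// Collect stage names from an explain()-style plan tree. Plans nest via
// `inputStage` (single child) or `inputStages` (several children).
function collectStages(plan) {
  if (!plan) return [];
  const children = plan.inputStages || (plan.inputStage ? [plan.inputStage] : []);
  return [plan.stage, ...children.flatMap(collectStages)];
}

// Hand-written samples shaped like queryPlanner.winningPlan (not real output).
const indexedPlan = {
  stage: "FETCH",
  inputStage: { stage: "IXSCAN", indexName: "userId_1_status_1" },
};
const scanPlan = { stage: "COLLSCAN" };

// True when some stage of the plan reads from an index.
const usesIndex = (plan) => collectStages(plan).includes("IXSCAN");

console.log(collectStages(indexedPlan), usesIndex(indexedPlan), usesIndex(scanPlan));
```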

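Typical takeaways: judged by the explain metrics, an ideal query usually combines several of the following:

- IXSCAN: the query hits an index;

- Limit: the query has a limit;

- Projection: the equivalent of not using select *;

- Docs Size: less is better;

- Docs Examined: less is better;

- nReturned = totalDocsExamined = totalKeysExamined;

- SORT in index: the sort is also served by the index; otherwise the data has to be fetched first and then sorted in a separate step;

- Limit Array elements: limit the number of array elements returned. Arrays should not hold too much data either; if they do, the schema design is questionable.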
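The nReturned = totalDocsExamined = totalKeysExamined rule can be checked mechanically. The sketch below compares the three counters from an `executionStats`-shaped object. The field names follow MongoDB's explain output, but the helper `scanEfficiency` and both sample objects are made up for illustration.

```javascript
// Compare nReturned with the examined counters from executionStats.
// A ratio of 1 means every examined key/document ended up in the result.
function scanEfficiency(stats) {
  const { nReturned, totalKeysExamined, totalDocsExamined } = stats;
  return {
    keysPerReturned: nReturned === 0 ? null : totalKeysExamined / nReturned,
    docsPerReturned: nReturned === 0 ? null : totalDocsExamined / nReturned,
    ideal: nReturned === totalKeysExamined && nReturned === totalDocsExamined,
  };
}

// Made-up numbers: an index-backed query versus a full collection scan.
const indexedStats = { nReturned: 5, totalKeysExamined: 5, totalDocsExamined: 5 };
const collscanStats = { nReturned: 5, totalKeysExamined: 0, totalDocsExamined: 100 };

const indexedReport = scanEfficiency(indexedStats);
const collscanReport = scanEfficiency(collscanStats);
console.log(indexedReport, collscanReport);
```

A large `docsPerReturned` value is a hint that the query reads many documents it then throws away, which usually means the filter is not well supported by an index.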

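Bonus

At the end of the article, one question from the beginning is still unanswered: what if I have added every index I should, and it is still not fast enough?

I will leave that as a cliffhanger and cover it in a follow-up article.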
