How to solve a Redis cache avalanche

Cause of a cache avalanche:

Put simply, a cache avalanche occurs when the existing cache entries become invalid (or the data was never loaded into the cache) and the new cache entries are not yet available. During this window, every request that should have been served from the cache (normally read from Redis) queries the database instead, putting heavy pressure on the database's CPU and memory. In severe cases, this can bring the database down and cause the whole system to collapse.

The basic approaches are as follows:

First, most system designers use locks or queues to ensure that a large number of threads do not read from and write to the database at the same time. This keeps database pressure in check when the cache fails, but although it relieves the database to a certain extent, it also reduces the system's throughput.

Second, analyze user behavior and try to spread cache expiration times evenly.

Third, if a cache server goes down, consider a primary/backup setup, such as Redis primary and backup nodes. However, double caching involves update transactions, and an update may read dirty data, which must be handled.

Solutions to the Redis avalanche effect:

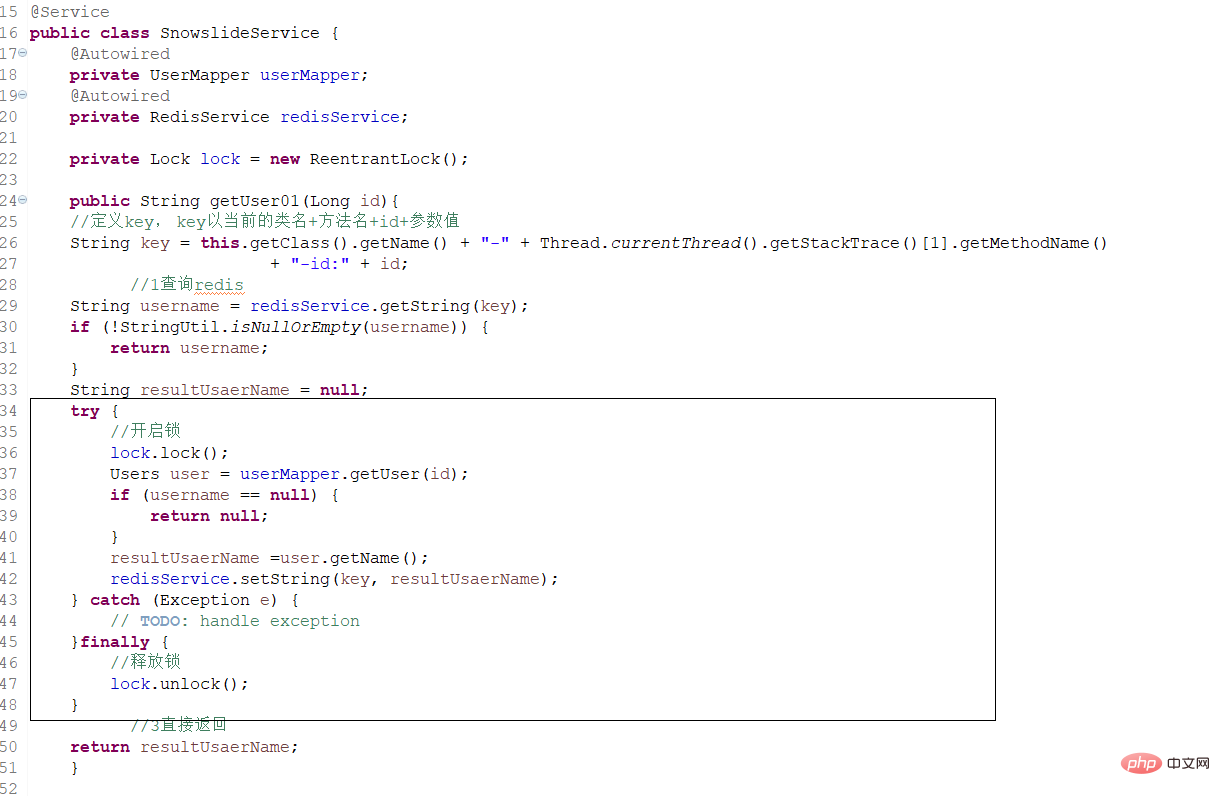

1. Use a distributed lock; on a single machine, a local lock is enough.

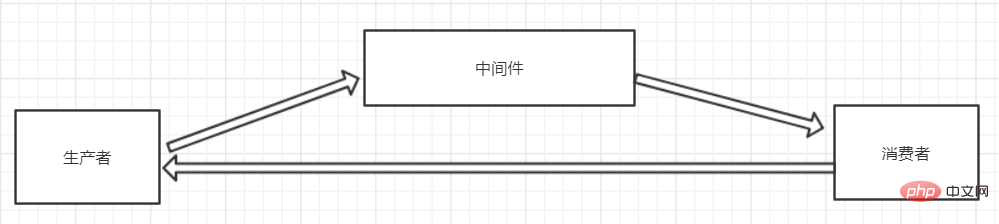

2. Message middleware method

3. First-level and second-level cache (Redis + Ehcache)

4. Spread Redis key expiration times evenly

Explanation:

1. When a large number of requests suddenly hit the database server, restrict them. With the mechanism above, only one thread (request) performs the operation at a time; the others queue and wait (a distributed lock in a cluster, a local lock on a single machine). The trade-off is reduced server throughput and lower efficiency.

Add a lock.

This ensures that only one thread can enter, so in practice only one request performs the query operation.

You can also use a rate-limiting strategy here.
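Rate limiting can be illustrated with a token bucket, one common rate-limiting algorithm; the original does not say which strategy it means, so this choice is an assumption. The `TokenBucket` class and its parameters are hypothetical names, and a rejected call is where a real service would return a fallback response or queue the request instead of querying the database.

```python
import threading
import time


class TokenBucket:
    """Minimal token-bucket rate limiter (one possible rate-limiting strategy)."""

    def __init__(self, rate_per_sec, capacity):
        self.rate = rate_per_sec   # tokens added per second
        self.capacity = capacity   # maximum burst size
        self.tokens = capacity     # start with a full bucket
        self.last = time.monotonic()
        self.lock = threading.Lock()

    def allow(self):
        """Return True if a database query may proceed right now."""
        with self.lock:
            now = time.monotonic()
            # Refill in proportion to elapsed time, capped at capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False  # over the limit: reject or queue this query


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_sec=1, capacity=5)
    print([bucket.allow() for _ in range(8)])
```

For example, `TokenBucket(rate_per_sec=1, capacity=5)` lets a burst of up to 5 queries through at once and roughly one per second after that, so a sudden flood of cache misses cannot all reach the database.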

2. Use message middleware

This is the most reliable solution.

Message middleware can handle high concurrency.

If a large number of requests arrive and Redis has no value for them, the query results are stored in the message middleware (using MQ's asynchronous processing).

3. Build a second-level cache: A1 is the original cache and A2 is a copy. When A1 fails, requests can read from A2. Set a short expiration time for A1 and a long one for A2 (this point is supplementary).

4. Set different expiration times for different keys so that cache invalidation points are spread as evenly as possible.
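Items 3 and 4 combine naturally, so the sketch below shows both: A1 gets a short TTL with random jitter, so keys written at the same moment do not all expire together, and A2 keeps a long-lived copy to fall back on. The dicts standing in for the two caches, the TTL values, and the `load_from_db` callback are all assumptions for illustration; with Redis, the jittered TTL would be set as the key's expiration time instead.

```python
import random
import time

# Assumptions: in-memory dicts stand in for caches A1 and A2; the TTL values
# and the load_from_db callback are hypothetical.
BASE_TTL_A1 = 60        # seconds, short-term cache
JITTER_A1 = 30          # up to 30 s of random extra TTL per key
TTL_A2 = 24 * 3600      # long-term copy cache

a1 = {}  # key -> (value, expires_at)
a2 = {}


def jittered_ttl(base, jitter):
    """Spread expirations so keys written together do not expire together."""
    return base + random.uniform(0, jitter)


def _read(store, key, now):
    entry = store.get(key)
    if entry is not None and entry[1] > now:
        return entry[0]
    return None  # missing or expired


def put(key, value, now=None):
    # `now` is injectable so expiry can be demonstrated without waiting.
    now = time.time() if now is None else now
    a1[key] = (value, now + jittered_ttl(BASE_TTL_A1, JITTER_A1))
    a2[key] = (value, now + TTL_A2)


def get(key, load_from_db, now=None):
    now = time.time() if now is None else now
    value = _read(a1, key, now)
    if value is not None:
        return value
    value = _read(a2, key, now)   # A1 expired: fall back to the copy
    if value is not None:
        return value
    value = load_from_db(key)     # both missed: go to the database
    put(key, value, now)
    return value


if __name__ == "__main__":
    put("user:1", "alice", now=0)
    # At t=100 s, A1 has expired (its TTL is at most 90 s) but A2 still has the copy.
    print(get("user:1", lambda k: "from-db", now=100))
```

When A1 has expired, this sketch serves the A2 copy without refreshing A1, so the value may be stale; this is the dirty-data risk of double caching mentioned earlier. A real implementation would also refresh A1 in the background.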


The above is the detailed content of How to solve the avalanche caused by redis. For more information, please follow other related articles on the PHP Chinese website!
