Can Spark run Python?

Spark can run Python programs. Algorithms written in Python, including those built on extension libraries such as scikit-learn (sklearn), can run on Spark. You can also use Spark's built-in MLlib library directly, which provides implementations of most common algorithms.
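One common way to run an existing Python algorithm on Spark is to have Spark call an ordinary Python function on each partition of the data. In PySpark this is what `rdd.mapPartitions(func)` does. The sketch below models that pattern locally, without Spark, so it runs anywhere. `split_into_partitions`, `local_map_partitions` and `apply_fitted_model` are hypothetical names, and the model parameters are made up. The sketch only shows that the function sees one partition at a time. It does not show how Spark actually schedules the work.

```python
from typing import Callable, Iterable, Iterator, List


def split_into_partitions(data: List[float], num_partitions: int) -> List[List[float]]:
    """Split data into contiguous, roughly equal chunks (a stand-in for RDD partitions)."""
    size, remainder = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` chunks get one extra element
        end = start + size + (1 if i < remainder else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def local_map_partitions(
    partitions: List[List[float]],
    func: Callable[[Iterator[float]], Iterable[float]],
) -> List[float]:
    """Call func once per partition, as rdd.mapPartitions(func) would, then collect the results."""
    collected: List[float] = []
    for part in partitions:
        collected.extend(func(iter(part)))
    return collected


def apply_fitted_model(rows: Iterator[float]) -> Iterator[float]:
    """Hypothetical per-partition "algorithm": an ordinary Python generator function.

    mean and scale stand in for parameters fitted earlier (for example with
    scikit-learn) on the driver; the values here are made up for illustration.
    """
    mean, scale = 3.0, 2.0
    for x in rows:
        yield (x - mean) / scale


print(local_map_partitions(split_into_partitions([1, 2, 3, 4, 5], 2), apply_fitted_model))
# [-1.0, -0.5, 0.0, 0.5, 1.0]
```

Because the function only receives an iterator over one partition, any state it needs, such as a trained model, has to be available inside the function itself.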

Spark is a general-purpose engine that supports many kinds of workloads, including SQL queries, text processing, and machine learning.

This experiment was run on Linux using Spark 1.6.1. The Python script is launched directly on a machine that has Spark installed. Spark is installed in /opt/moudles/spark-1.6.1/, and this path appears in the code.
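In this setup pyspark comes from the Spark installation rather than from pip. The script therefore has to set `SPARK_HOME` and add Spark's `python` directory and the bundled py4j zip to `sys.path` before it imports pyspark. The test program hard-codes all three values. As a sketch, a small helper could derive them from the Spark directory instead. `add_pyspark_to_path` is a hypothetical name, and searching for `py4j-*-src.zip` assumes the standard layout of a Spark binary distribution.

```python
import glob
import os
import sys
from typing import List


def add_pyspark_to_path(spark_home: str) -> List[str]:
    """Point this Python process at the pyspark copy bundled with a Spark install.

    Sets SPARK_HOME and appends <spark_home>/python and the bundled py4j zip
    to sys.path. Returns the paths that were actually appended.
    """
    python_dir = os.path.join(spark_home, "python")
    # The py4j version changes between Spark releases (Spark 1.6.1 ships
    # py4j-0.9-src.zip), so search for it instead of hard-coding the name.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    if not py4j_zips:
        raise FileNotFoundError("no py4j-*-src.zip under " + os.path.join(python_dir, "lib"))

    os.environ["SPARK_HOME"] = spark_home
    added = []
    # If several zips match, take the last one in sorted (name) order.
    for path in (python_dir, py4j_zips[-1]):
        if path not in sys.path:  # avoid duplicate entries on repeated calls
            sys.path.append(path)
            added.append(path)
    return added
```

With the directory layout used in this article, you would call `add_pyspark_to_path("/opt/moudles/spark-1.6.1")` before `from pyspark import SparkContext, SparkConf`. That call would replace the three hard-coded configuration lines in the script.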

Write a Python test program

```python
# test.py
# -*- coding:utf-8 -*-
import os
import sys

# Configure environment variables so that pyspark can be imported
os.environ['SPARK_HOME'] = r'/opt/moudles/spark-1.6.1'
sys.path.append("/opt/moudles/spark-1.6.1/python")
sys.path.append("/opt/moudles/spark-1.6.1/python/lib/py4j-0.9-src.zip")

from pyspark import SparkContext, SparkConf

appName = "spark_1"  # application name
# hadoop01 is the master node's hostname; replace it with your own master's hostname
master = "spark://hadoop01:7077"

conf = SparkConf().setAppName(appName).setMaster(master)
sc = SparkContext(conf=conf)

data = [1, 2, 3, 4, 5]
distData = sc.parallelize(data)       # distribute the list as an RDD
res = distData.reduce(lambda a, b: a + b)  # sum the elements across the cluster

print("===========================================")
print(res)
print("===========================================")

sc.stop()  # release the resources held by this application
```

Execute the Python program

Run the following command:

```shell
python test.py
```

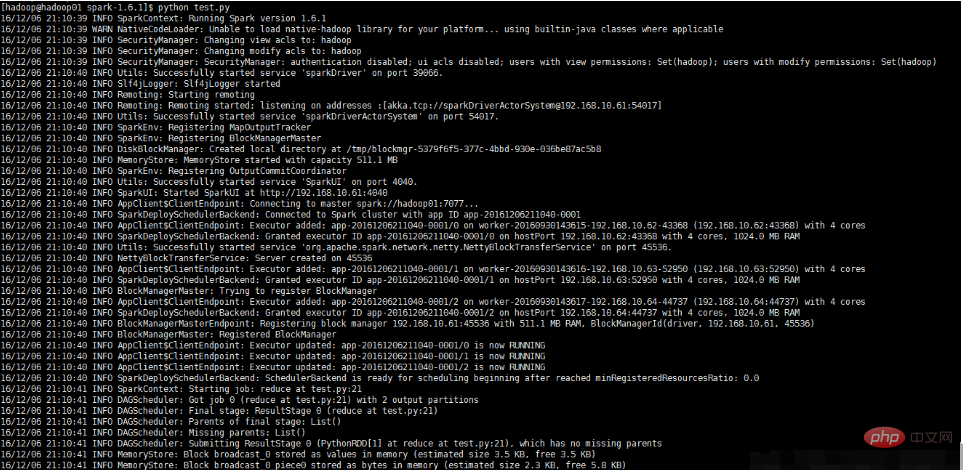

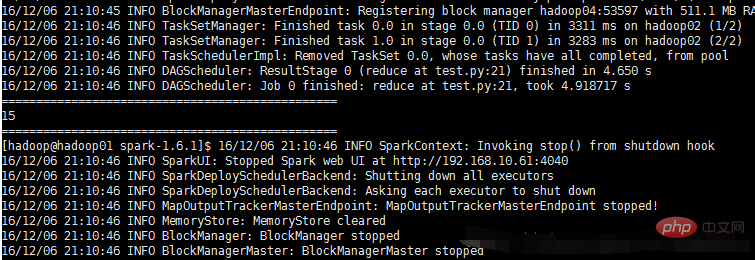

The execution and results are as shown in the figure below Show:
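The printed value should be 15, the sum of 1 through 5. `reduce` does not add the numbers in one sequential pass. Roughly, PySpark's `RDD.reduce` first reduces each partition separately and then combines the partial results on the driver. The local sketch below imitates that two-phase pattern in plain Python. `local_reduce` is a hypothetical name, and the partitioning is simplified. The sketch also shows why Spark asks for a commutative and associative function. Addition gives 15 for any partitioning, while subtraction gives a different answer than a sequential fold.

```python
from functools import reduce
from operator import add, sub
from typing import Callable, List, TypeVar

T = TypeVar("T")


def local_reduce(data: List[T], func: Callable[[T, T], T], num_partitions: int = 2) -> T:
    """Reduce in two phases: within each partition, then across partition results."""
    if not data:
        raise ValueError("cannot reduce an empty dataset")
    # Contiguous chunks of at most ceil(len(data) / num_partitions) elements
    size = -(-len(data) // num_partitions)
    partitions = [data[i:i + size] for i in range(0, len(data), size)]
    # Phase 1: each partition is reduced independently (on the workers in Spark)
    partials = [reduce(func, part) for part in partitions]
    # Phase 2: the per-partition results are combined (on the driver in Spark)
    return reduce(func, partials)


print(local_reduce([1, 2, 3, 4, 5], add))  # 15
print(local_reduce([1, 2, 3, 4, 5], sub))  # -3, whereas 1-2-3-4-5 is -13
```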

The above is the detailed content of "Can Spark run Python?". For more information, see other related articles on the PHP Chinese website.