Lock is a mechanism for computers to coordinate multiple processes or threads to access a resource concurrently. In a database, in addition to traditional competition for computing resources (such as CPU, RAM, I/O, etc.), data is also a resource shared by many users. How to ensure the consistency and effectiveness of concurrent access to data is a problem that all databases must solve. Lock conflicts are also an important factor affecting the performance of concurrent access to databases. From this perspective, locks are particularly important and complex for databases. In this chapter, we focus on the characteristics of the MySQL lock mechanism, common lock problems, and some methods or suggestions for solving MySQL lock problems.

Mysql uses many such lock mechanisms, such as row locks, table locks, read locks, write locks, etc., which are all locked before operations. These locks are collectively called pessimistic locks.

InnoDB lock

The biggest difference between InnoDB and MyISAM is two points:

First, it supports transactions (TRANSACTION);

The second is the use of row-level locks. There are many differences between row-level locks and table-level locks. In addition, the introduction of transactions also brings some new problems.

1. Transaction (Transaction) and its ACID attributes

A transaction is a logical processing unit composed of a set of SQL statements. A transaction has 4 attributes, which are usually called the ACID attributes of the transaction. .

1. Atomicity: A transaction is an atomic operation unit, and all modifications to the data are either executed or not executed at all.

2. Consistent: The data must remain consistent at the beginning and completion of the transaction. This means that all relevant data rules must be applied to transaction modifications to maintain integrity; at the end of the transaction, all internal data structures (such as B-tree indexes or doubly linked lists) must also be correct.

3. Isolation: The database system provides a certain isolation mechanism to ensure that transactions are executed in an "independent" environment that is not affected by external concurrent operations. This means that intermediate states during a transaction are not visible to the outside world, and vice versa.

4. Durable: After the transaction is completed, its modification to the data is permanent and can be maintained even if a system failure occurs.

2. Problems caused by concurrent transactions

Compared with serial processing, concurrent transaction processing can greatly increase the utilization of database resources and improve the transaction throughput of the database system. , thus supporting more users. However, concurrent transaction processing will also bring some problems, mainly including the following situations.

1. Lost Update: When two or more transactions select the same row and then update the row based on the originally selected value, since each transaction does not know the existence of the other transactions, A lost update problem occurs - the last update overwrites updates made by other transactions. For example, two editors make electronic copies of the same document. Each editor independently changes their copy and then saves the changed copy, overwriting the original document. The editor who last saved a copy of his or her changes overwrites the changes made by another editor. This problem can be avoided if one editor cannot access the same file until another editor completes and commits the transaction.

2. Dirty Reads: A transaction is modifying a record. Before the transaction is committed, the data of this record is in an inconsistent state; at this time, another transaction also comes to read If the same record is taken without control, the second transaction reads the "dirty" data and performs further processing accordingly, which will result in uncommitted data dependencies. This phenomenon is vividly called "dirty reading".

3. Non-Repeatable Reads: A transaction has changed when reading some data, or some records have been deleted! This phenomenon is called "non-repeatable reading".

4. Phantom Reads: A transaction re-reads previously retrieved data according to the same query conditions, but finds that other transactions have inserted new data that satisfies its query conditions. This phenomenon is called It is "phantom reading".

3. Transaction isolation level

Among the problems caused by concurrent transaction processing, "update loss" should usually be completely avoided. However, preventing update loss cannot be solved by the database transaction controller alone. The application needs to add necessary locks to the data to be updated. Therefore, preventing update loss should be the responsibility of the application.

"Dirty read", "non-repeatable read" and "phantom read" are actually database read consistency problems, which must be solved by the database providing a certain transaction isolation mechanism. The ways in which databases implement transaction isolation can basically be divided into the following two types.

1. One is to lock the data before reading it to prevent other transactions from modifying the data.

2. The other is to generate a consistent data snapshot (Snapshot) of the data request time point through a certain mechanism without adding any locks, and use this snapshot to provide a certain level (statement level or transaction level) of consistent reading. Pick. From a user's perspective, it seems that the database can provide multiple versions of the same data. Therefore, this technology is called data multi-version concurrency control (MVCC or MCC for short), which is also often called a multi-version database.

In MVCC concurrency control, read operations can be divided into two categories: snapshot read and current read. Snapshot reading reads the visible version of the record (which may be the historical version) without locking. The current read reads the latest version of the record, and the record returned by the current read will be locked to ensure that other transactions will not modify this record concurrently.

In a system that supports MVCC concurrency control, which read operations are snapshot reads? Which operations are currently being read? Take MySQL InnoDB as an example:

Snapshot read: A simple select operation, which is a snapshot read and does not require locking. (Of course, there are exceptions)

select * from table where ?;

Current read: Special read operations, insert/update/delete operations, belong to current read and need to be locked.

The following statements are all current reads, reading the latest version of the record. Moreover, after reading, it is also necessary to ensure that other concurrent transactions cannot modify the current record and lock the read record. Among them, except for the first statement, which adds S lock (shared lock) to the read record, all other operations add X lock (exclusive lock).

The stricter the transaction isolation of the database, the smaller the concurrent side effects, but the greater the price paid, because transaction isolation essentially makes transactions "serialized" to a certain extent, which is obviously inconsistent with " "Concurrency" is an oxymoron. At the same time, different applications have different requirements for read consistency and transaction isolation. For example, many applications are not sensitive to "non-repeatable reads" and "phantom reads" and may be more concerned about the ability to access data concurrently.

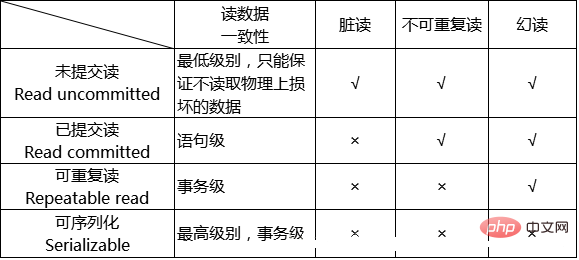

In order to solve the contradiction between "isolation" and "concurrency", ISO/ANSI SQL92 defines 4 transaction isolation levels. Each level has a different degree of isolation and allows different side effects. Applications can be based on their own Business logic requires that the contradiction between "isolation" and "concurrency" be balanced by selecting different isolation levels. The following table provides a good summary of the characteristics of these 4 isolation levels.

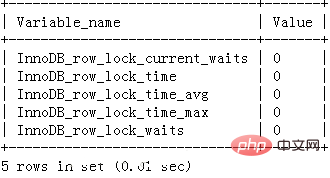

Get InonoD row lock contention

You can analyze the system by checking the InnoDB_row_lock status variable Row lock contention:

mysql> show status like 'innodb_row_lock%';

If you find that the lock contention is serious, such as the values of InnoDB_row_lock_waits and InnoDB_row_lock_time_avg are relatively high, you can also set InnoDB Monitors to further observe the occurrence. Tables, data rows, etc. that have lock conflicts, and analyze the causes of lock contention.

InnoDB’s row lock mode and locking method

InnoDB implements the following two types of row locks.

Shared lock(s): also called read lock. Allows one transaction to read a row, preventing other transactions from obtaining an exclusive lock on the same data set. If transaction T adds S lock to data object A, transaction T can read A but cannot modify A. Other transactions can only add S lock to A, but cannot add X lock, until T releases the S lock on A. This guarantees that other transactions can read A, but cannot make any modifications to A until T releases the S lock on A.

Exclusive lock (X): also known as write lock. Allow transactions that acquire exclusive locks to update data and prevent other transactions from acquiring shared read locks and exclusive write locks on the same data set. If transaction T adds an X lock to data object A, transaction T can read or modify A. Other transactions cannot add any more locks to A until T releases the lock on A.

Everyone may have a good understanding of shared locks, that is, multiple transactions can only read data but cannot change data.

对于排他锁大家的理解可能就有些差别,我当初就犯了一个错误,以为排他锁锁住一行数据后,其他事务就不能读取和修改该行数据,其实不是这样的。排他锁指的是一个事务在一行数据加上排他锁后,其他事务不能再在其上加其他的锁。mysql InnoDB引擎默认的修改数据语句:update,delete,insert都会自动给涉及到的数据加上排他锁,select语句默认不会加任何锁类型,如果加排他锁可以使用select …for update语句,加共享锁可以使用select … lock in share mode语句。所以加过排他锁的数据行在其他事务种是不能修改数据的,也不能通过for update和lock in share mode锁的方式查询数据,但可以直接通过select …from…查询数据,因为普通查询没有任何锁机制。

另外,为了允许行锁和表锁共存,实现多粒度锁机制,InnoDB还有两种内部使用的意向锁(Intention Locks),这两种意向锁都是表锁。

意向共享锁(IS):事务打算给数据行共享锁,事务在给一个数据行加共享锁前必须先取得该表的IS锁。意向排他锁(IX):事务打算给数据行加排他锁,事务在给一个数据行加排他锁前必须先取得该表的IX锁。

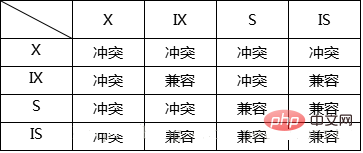

InnoDB行锁模式兼容性列表:

如果一个事务请求的锁模式与当前的锁兼容,InnoDB就请求的锁授予该事务;反之,如果两者两者不兼容,该事务就要等待锁释放。

意向锁是InnoDB自动加的,不需用户干预。对于UPDATE、DELETE和INSERT语句,InnoDB会自动给涉及数据集加排他锁(X);对于普通SELECT语句,InnoDB不会加任何锁。

事务可以通过以下语句显式给记录集加共享锁或排他锁:

1、共享锁(S):SELECT * FROM table_name WHERE ... LOCK IN SHARE MODE。

2、排他锁(X):SELECT * FROM table_name WHERE ... FOR UPDATE。

用SELECT ... IN SHARE MODE获得共享锁,主要用在需要数据依存关系时来确认某行记录是否存在,并确保没有人对这个记录进行UPDATE或者DELETE操作。但是如果当前事务也需要对该记录进行更新操作,则很有可能造成死锁,对于锁定行记录后需要进行更新操作的应用,应该使用SELECT… FOR UPDATE方式获得排他锁。

InnoDB行锁实现方式

InnoDB行锁是通过给索引上的索引项加锁来实现的,这一点MySQL与Oracle不同,后者是通过在数据块中对相应数据行加锁来实现的。InnoDB这种行锁实现特点意味着:只有通过索引条件检索数据,InnoDB才使用行级锁,否则,InnoDB将使用表锁!

在实际应用中,要特别注意InnoDB行锁的这一特性,不然的话,可能导致大量的锁冲突,从而影响并发性能。下面通过一些实际例子来加以说明。

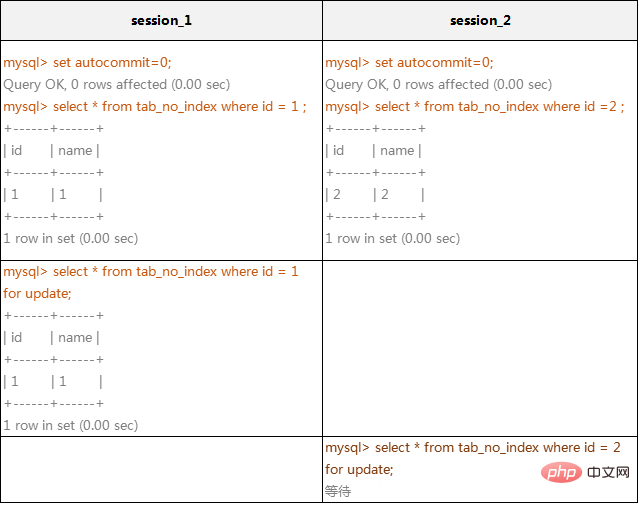

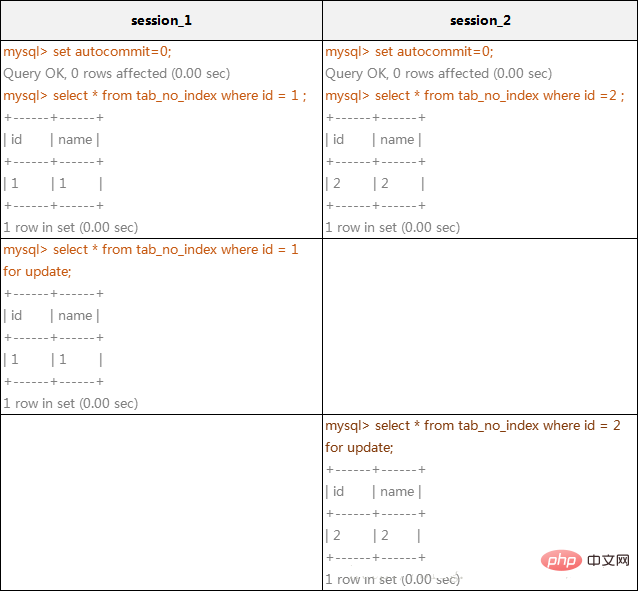

(1)在不通过索引条件查询的时候,InnoDB确实使用的是表锁,而不是行锁。

mysql> create table tab_no_index(id int,name varchar(10)) engine=innodb; Query OK, 0 rows affected (0.15 sec) mysql> insert into tab_no_index values(1,'1'),(2,'2'),(3,'3'),(4,'4');Query OK, 4 rows affected (0.00 sec) Records: 4 Duplicates: 0 Warnings: 0

在上面的例子中,看起来session_1只给一行加了排他锁,但session_2在请求其他行的排他锁时,却出现了锁等待!原因就是在没有索引的情况下,InnoDB只能使用表锁。当我们给其增加一个索引后,InnoDB就只锁定了符合条件的行,如下例所示:

创建tab_with_index表,id字段有普通索引:

mysql> create table tab_with_index(id int,name varchar(10)) engine=innodb; mysql> alter table tab_with_index add index id(id);

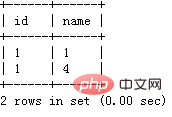

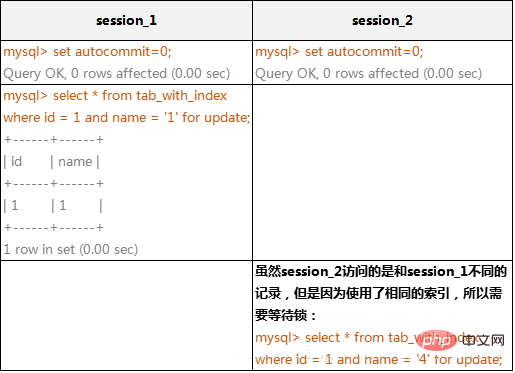

(2)由于MySQL的行锁是针对索引加的锁,不是针对记录加的锁,所以虽然是访问不同行的记录,但是如果是使用相同的索引键,是会出现锁冲突的。应用设计的时候要注意这一点。

在下面的例子中,表tab_with_index的id字段有索引,name字段没有索引:

mysql> alter table tab_with_index drop index name; 1 Query OK, 4 rows affected (0.22 sec) Records: 4 Duplicates: 0 Warnings: 0 mysql> insert into tab_with_index values(1,'4'); 1 Query OK, 1 row affected (0.00 sec) mysql> select * from tab_with_index where id = 1;

InnoDB存储引擎使用相同索引键的阻塞例子 :

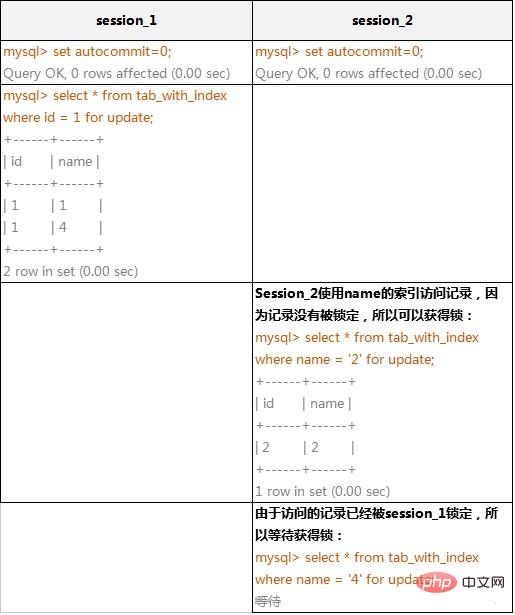

(3)当表有多个索引的时候,不同的事务可以使用不同的索引锁定不同的行,另外,不论是使用主键索引、唯一索引或普通索引,InnoDB都会使用行锁来对数据加锁。

在下面的例子中,表tab_with_index的id字段有主键索引,name字段有普通索引:

mysql> alter table tab_with_index add index name(name); 1Query OK, 5 rows affected (0.23 sec) Records: 5 Duplicates: 0 Warnings: 0

InnoDB存储引擎的表使用不同索引的阻塞例子 :

(4)即便在条件中使用了索引字段,但是否使用索引来检索数据是由MySQL通过判断不同执行计划的代价来决 定的,如果MySQL认为全表扫描效率更高,比如对一些很小的表,它就不会使用索引,这种情况下InnoDB将使用表锁,而不是行锁。因此,在分析锁冲突 时,别忘了检查SQL的执行计划,以确认是否真正使用了索引。

比如,在tab_with_index表里的name字段有索引,但是name字段是varchar类型的,检索值的数据类型与索引字段不同,虽然MySQL能够进行数据类型转换,但却不会使用索引,从而导致InnoDB使用表锁。通过用explain检查两条SQL的执行计划,我们可以清楚地看到了这一点。

mysql> explain select * from tab_with_index where name = 1 \G mysql> explain select * from tab_with_index where name = '1' \G

间隙锁(Next-Key锁)

当我们用范围条件而不是相等条件检索数据,并请求共享或排他锁时,InnoDB会给符合条件的已有数据记录的 索引项加锁;对于键值在条件范围内但并不存在的记录,叫做“间隙(GAP)”,InnoDB也会对这个“间隙”加锁,这种锁机制就是所谓的间隙锁 (Next-Key锁)。

举例来说,假如emp表中只有101条记录,其empid的值分别是 1,2,…,100,101,下面的SQL:

Select * from emp where empid > 100 for update;

是一个范围条件的检索,InnoDB不仅会对符合条件的empid值为101的记录加锁,也会对empid大于101(这些记录并不存在)的“间隙”加锁。

InnoDB使用间隙锁的目的,一方面是为了防止幻读,以满足相关隔离级别的要求,对于上面的例子,要是不使 用间隙锁,如果其他事务插入了empid大于100的任何记录,那么本事务如果再次执行上述语句,就会发生幻读;另外一方面,是为了满足其恢复和复制的需 要。有关其恢复和复制对锁机制的影响,以及不同隔离级别下InnoDB使用间隙锁的情况,在后续的章节中会做进一步介绍。

很显然,在使用范围条件检索并锁定记录时,InnoDB这种加锁机制会阻塞符合条件范围内键值的并发插入,这往往会造成严重的锁等待。因此,在实际应用开发中,尤其是并发插入比较多的应用,我们要尽量优化业务逻辑,尽量使用相等条件来访问更新数据,避免使用范围条件。

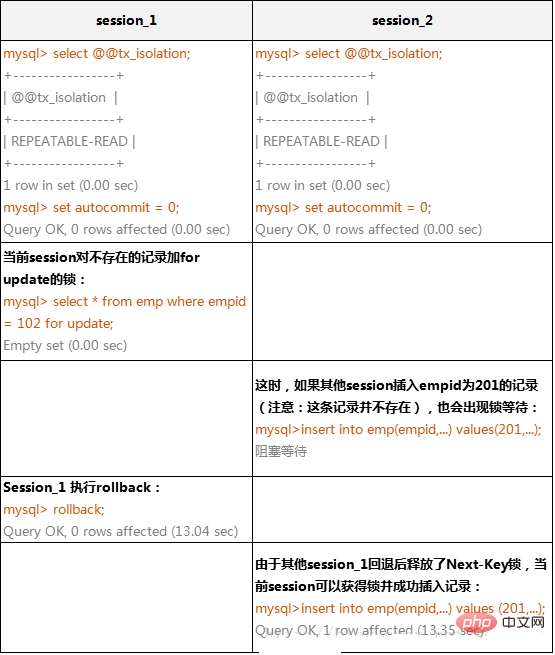

还要特别说明的是,InnoDB除了通过范围条件加锁时使用间隙锁外,如果使用相等条件请求给一个不存在的记录加锁,InnoDB也会使用间隙锁!下面这个例子假设emp表中只有101条记录,其empid的值分别是1,2,……,100,101。

InnoDB存储引擎的间隙锁阻塞例子

小结

本文重点介绍了MySQL中MyISAM表级锁和InnoDB行级锁的实现特点,并讨论了两种存储引擎经常遇到的锁问题和解决办法。

Regarding MyISAM table locks, the following points are mainly discussed:

(1) Shared read locks (S) are compatible, but shared read locks (S) and exclusive write locks (X) are compatible , and the exclusive write lock (X) are mutually exclusive, which means that reading and writing are serial.

(2) Under certain conditions, MyISAM allows queries and inserts to be executed concurrently. We can use this to solve the lock contention problem for queries and inserts into the same table in applications.

(3) MyISAM's default lock scheduling mechanism is write priority, which is not necessarily suitable for all applications. Users can adjust the read-write lock contention by setting the LOW_PRIORITY_UPDATES parameter or specifying the LOW_PRIORITY option in the INSERT, UPDATE, and DELETE statements. use.

(4) Since the locking granularity of the table lock is large and the reading and writing are serial, if there are many update operations, the MyISAM table may have serious lock waiting. You can consider using the InnoDB table to reduce locks. conflict.

For InnoDB tables, this article mainly discusses the following contents:

(1) InnoDB's row locks are based on indexes. If data is not accessed through indexes, InnoDB will use table locks.

(2) Introduces the InnoDB gap lock (Next-key) mechanism and the reasons why InnoDB uses gap locks.

Under different isolation levels, InnoDB’s locking mechanism and consistent read strategy are different.

After understanding the lock characteristics of InnoDB, users can reduce lock conflicts and deadlocks through design and SQL adjustments, including:

Try to use a lower isolation level; carefully design indexes, and Try to use indexes to access data to make locking more accurate, thereby reducing the chance of lock conflicts; choose a reasonable transaction size, and the probability of lock conflicts for small transactions will be smaller; when explicitly locking the record set, it is best to request it in one go Sufficient level of lock. For example, if you want to modify data, it is best to apply for an exclusive lock directly instead of applying for a shared lock first and then request an exclusive lock when modifying. This is prone to deadlock; when different programs access a group of tables, they should try to agree to access in the same order. Each table, for a table, accesses the rows in the table in a fixed order as much as possible. This can greatly reduce the chance of deadlock; try to use equal conditions to access data, so as to avoid the impact of gap locks on concurrent insertions; do not apply for more lock levels than actually required; do not display locks when querying unless necessary; for some specific Transactions can use table locks to increase processing speed or reduce the possibility of deadlock.

If you want to know more related content, please visit the PHP Chinese website: mysql video tutorial

The above is the detailed content of Detailed explanation of the principle of mysql lock mechanism (2). For more information, please follow other related articles on the PHP Chinese website!

How to activate Douyin group buying

How to activate Douyin group buying

How to connect php to mssql database

How to connect php to mssql database

How to set up ftp server

How to set up ftp server

How to set Chinese in vscode

How to set Chinese in vscode

What is the difference between JD International self-operated and JD self-operated

What is the difference between JD International self-operated and JD self-operated

What does vram mean?

What does vram mean?

The difference between random and pseudo-random

The difference between random and pseudo-random

connectionresetSolution

connectionresetSolution