Detailed explanation of the I/O model in Java (with examples)

Many readers find NIO a little difficult when they first learn it, and many of its concepts are not entirely clear. Before getting into Java NIO programming, this article covers some background: the I/O model. It starts with the concepts of synchronous and asynchronous, explains the difference between blocking and non-blocking, then the difference between blocking IO and non-blocking IO and between synchronous IO and asynchronous IO, then introduces the five IO models, and finally two design patterns for high-performance IO (Reactor and Proactor).

The outline of this article is as follows:

1. What is synchronization? What is asynchronous?

2. What is blocking? What is non-blocking?

3. What is blocking IO? What is non-blocking IO?

4. What is synchronous IO? What is asynchronous IO?

5. Five IO models

6. Two high-performance IO design patterns

1. What is synchronization? What is asynchronous?

The concepts of synchronous and asynchronous have been around for a long time, and there are many opinions about them on the Internet. The following is my personal understanding:

Synchronous means: if multiple tasks or events are to occur, they must be carried out one by one. The execution of one event or task causes the entire process to wait temporarily, and these events cannot be executed concurrently;

Asynchronous means: if multiple tasks or events occur, they can be executed concurrently, and the execution of one event or task does not cause the entire process to wait temporarily.

That is the difference between synchronous and asynchronous. As a simple example, suppose a task includes two subtasks, A and B. In the synchronous case, B must wait while A is executing and can run only after A completes. In the asynchronous case, A and B can execute concurrently; B does not have to wait for A to finish, so the execution of A does not make the entire task wait.

If you still don’t understand, you can read the following two pieces of code first:

    void fun1() {
    }

    void fun2() {
    }

    void function() {
        fun1();
        fun2();
        // .....
        // .....
    }

This code is typically synchronous. In the method function, fun2 cannot execute until fun1 has finished executing.

Then look at the following code:

    void fun1() {
    }

    void fun2() {
    }

    void function() {
        // Run fun1 on its own thread.
        new Thread() {
            @Override
            public void run() {
                fun1();
            }
        }.start();

        // Run fun2 on a second thread; it does not wait for fun1.
        new Thread() {
            @Override
            public void run() {
                fun2();
            }
        }.start();

        // .....
        // .....
    }

This code is typically asynchronous. The execution of fun1 does not affect the execution of fun2, and neither fun1 nor fun2 causes the rest of function to wait.

In fact, synchronous and asynchronous are very broad concepts. Their focus is on whether, when multiple tasks or events occur, the occurrence or execution of one of them causes the entire process to wait temporarily. I think an analogy can be drawn with the synchronized keyword in Java. When multiple threads access a variable at the same time, each thread's access is an event. In the synchronous case, the threads must access the variable one by one, and while one thread is accessing it, the others must wait. In the asynchronous case, the threads do not have to take turns and can access the variable at the same time.

So I personally feel that synchronous and asynchronous can show up in many forms, but the key point to remember is whether, when multiple tasks or events occur, the occurrence or execution of one of them causes the entire process to wait temporarily. Generally speaking, asynchrony can be achieved through multi-threading, but do not equate the two. Asynchrony is a high-level pattern; multi-threading is only one means of achieving it, and multiple processes are another.
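The A/B example can also be written so that both subtasks return a result. The following is a minimal sketch, not the only way to do it: the class name SyncVsAsyncDemo and the helper slowSquare are hypothetical, and a two-thread pool with CompletableFuture is just one of the possible means of running the subtasks concurrently.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class SyncVsAsyncDemo {
    // Hypothetical subtask: pretends to work for 100 ms, then returns x squared.
    static int slowSquare(int x) {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return x * x;
    }

    // Synchronous: subtask B starts only after subtask A has returned.
    static int runSynchronously() {
        int a = slowSquare(3);
        int b = slowSquare(4);
        return a + b;
    }

    // Asynchronous: A and B are both started before either result is collected.
    static int runAsynchronously() {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            CompletableFuture<Integer> a = CompletableFuture.supplyAsync(() -> slowSquare(3), pool);
            CompletableFuture<Integer> b = CompletableFuture.supplyAsync(() -> slowSquare(4), pool);
            return a.join() + b.join();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runSynchronously());  // prints 25
        System.out.println(runAsynchronously()); // prints 25
    }
}
```

Both methods compute the same value; the difference is only whether the second subtask has to wait for the first one to finish.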

2. What is blocking? What is non-blocking?

The previous section covered the difference between synchronous and asynchronous. This section looks at the difference between blocking and non-blocking.

Blocking means: while an event or task is executing, it issues a request operation, and because the conditions required by that operation are not met, it waits there until they are met;

Non-blocking means: while an event or task is executing, it issues a request operation, and if the conditions required by that operation are not met, a flag message is returned immediately to report this, and the task does not wait there.

That is the difference between blocking and non-blocking. The key point is what happens when a request is made and the conditions are not met: the call either waits until they are, or returns a flag message.

A simple example:

Suppose I want to read the contents of a file and there is nothing readable in it at the moment. With blocking, the call waits there until the file has readable content. With non-blocking, a flag message is returned directly to report that the file has no readable content.

Some people on the Internet equate synchronous and asynchronous with blocking and non-blocking respectively. They are in fact two completely different pairs of concepts, and understanding the difference between them is very important for understanding the IO models that follow.

Synchronous versus asynchronous is about whether, when multiple tasks are executing, the execution of one task causes the entire process to wait temporarily;

Blocking versus non-blocking is about whether, when a request operation is issued and its conditions are not met, a flag message is returned to report that.

Blocking and non-blocking can be understood by analogy with thread blocking: when a thread performs a request operation and the condition is not met, the thread is blocked, that is, it waits until the condition is met.
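The same distinction can be seen outside of IO. The sketch below uses java.util.concurrent.BlockingQueue as a stand-in for "a request whose condition may not be met": poll() is the non-blocking request and returns null as its flag, while take() is the blocking request. The class name BlockingVsNonBlockingDemo and the 100 ms producer delay are assumptions made for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class BlockingVsNonBlockingDemo {
    static String demo() {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();

        // Non-blocking request: the queue is empty, so poll() returns null at once.
        String first = queue.poll();

        // A producer makes the condition true a little later.
        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(100);
                queue.put("data");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        // Blocking request: take() waits until an element is available.
        String second;
        try {
            second = queue.take();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return first + "," + second;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints null,data
    }
}
```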

3. What is blocking I/O? What is non-blocking I/O?

Before looking at blocking IO and non-blocking IO, let's first see how a concrete IO operation is carried out.

Generally speaking, IO operations include reading and writing the hard disk, reading and writing sockets, and reading and writing peripherals.

When a user thread initiates an IO request (this article uses a read request as the example), the kernel checks whether the data to be read is ready. With blocking IO, if the data is not ready, the thread waits there until it is. With non-blocking IO, if the data is not ready, a flag message is returned to tell the user thread that the data is not ready yet. Once the data is ready, it is copied to the user thread, and that completes the IO read request. In other words, a complete IO read request has two stages:

1) Check whether the data is ready;

2) Copy the data (the kernel copies the data to the user thread).

The difference between blocking IO and non-blocking IO therefore lies in the first stage: if the data is not ready, the call either keeps waiting while checking for readiness or returns a flag message directly.

Traditional IO in Java is blocking IO. For example, when reading data from a socket, if the data is not ready after read() is called, the current thread blocks in the read call until data arrives. With non-blocking IO, when the data is not ready, read() should return a flag message telling the current thread so, instead of waiting there.
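As a small illustration of the "flag message", the sketch below puts a java.nio channel into non-blocking mode and reads from it while no data is available. It uses an in-process java.nio.channels.Pipe instead of a real socket so that it is self-contained; that substitution and the class name NonBlockingReadDemo are assumptions of this example. In non-blocking mode, read() returns 0 when nothing can be read yet.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;

public class NonBlockingReadDemo {
    // Reads once from an empty pipe in non-blocking mode and returns read()'s result.
    static int readFromEmptyPipe() {
        try {
            Pipe pipe = Pipe.open();
            try {
                // Without this call the channel is in blocking mode and read() would wait.
                pipe.source().configureBlocking(false);
                ByteBuffer buffer = ByteBuffer.allocate(16);
                return pipe.source().read(buffer);
            } finally {
                pipe.source().close();
                pipe.sink().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readFromEmptyPipe()); // prints 0: no data is ready
    }
}
```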

4. What is synchronous I/O? What is asynchronous I/O?

Let's first look at the definitions. The book "Unix Network Programming" defines synchronous IO and asynchronous IO as follows:

A synchronous I/O operation causes the requesting process to be blocked until that I/O operation completes.

An asynchronous I/O operation does not cause the requesting process to be blocked.

Taken literally: synchronous IO means that if a thread requests an IO operation, the thread is blocked until the IO operation completes;

Asynchronous IO means that if a thread requests an IO operation, the IO operation does not cause the requesting thread to be blocked.

In fact, the synchronous and asynchronous IO models are about the interaction between the user thread and the kernel:

For synchronous IO: after the user thread issues an IO request, if the data is not ready, either the user thread or the kernel has to keep polling until it is. When the data is ready, it is copied from the kernel to the user thread;

For asynchronous IO: the user thread only issues the IO request. Both stages of the IO operation are completed by the kernel, which then sends a notification telling the user thread that the IO operation is complete. In other words, asynchronous IO never blocks the user thread.

That is the key difference between synchronous IO and asynchronous IO: whether the data copy stage is completed by the user thread or by the kernel. Asynchronous IO therefore needs underlying support from the operating system.

Note that synchronous IO and asynchronous IO are a different pair of concepts from blocking IO and non-blocking IO.

Blocking IO and non-blocking IO differ in what happens when the user requests an IO operation and the data is not ready: the user thread either waits for the data to be ready or receives a flag message. In other words, they differ in the first stage of the IO operation, in how the readiness check is handled.

5. Five I/O models

The book "Unix Network Programming" describes five IO models: blocking IO, non-blocking IO, multiplexed IO, signal-driven IO, and asynchronous IO.

Let's go through the similarities and differences of these five IO models one by one.

1. Blocking IO model

This is the most traditional IO model: blocking occurs while data is being read or written.

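After the user thread issues an IO request, the kernel checks whether the data is ready. If it is not, the kernel waits for the data to become ready, and the user thread is blocked and gives up the CPU. Once the data is ready, the kernel copies it to the user thread and returns the result, and only then does the user thread leave the blocked state.

A typical example of the blocking IO model is:

    data = socket.read();

If the data is not ready, the thread stays blocked in the read method.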
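A runnable version of this behaviour might look like the sketch below. It uses a java.nio.channels.Pipe in its default blocking mode in place of a socket, with a writer thread that only supplies the data after a delay; the class name BlockingReadDemo, the delay parameter and the 256-byte buffer are assumptions of this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

public class BlockingReadDemo {
    // Assumes the message fits into the 256-byte buffer.
    static String readBlocking(String message, long delayMillis) {
        try {
            Pipe pipe = Pipe.open(); // channels start out in blocking mode
            Thread writer = new Thread(() -> {
                try {
                    Thread.sleep(delayMillis); // the data is "not ready" for a while
                    pipe.sink().write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
                } catch (IOException | InterruptedException e) {
                    // Sketch only: give up and let the reader see end of stream.
                } finally {
                    try {
                        pipe.sink().close(); // signals end of stream to the reader
                    } catch (IOException ignored) {
                        // nothing useful to do in a demo
                    }
                }
            });
            writer.start();

            ByteBuffer buffer = ByteBuffer.allocate(256);
            // Each read() blocks until bytes arrive or the writer closes its end.
            while (buffer.hasRemaining() && pipe.source().read(buffer) != -1) {
                // keep reading until end of stream
            }
            pipe.source().close();
            buffer.flip();
            return StandardCharsets.UTF_8.decode(buffer).toString();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readBlocking("hello", 200)); // prints hello after the delay
    }
}
```

While the writer is sleeping, the calling thread is parked inside read() and does no other work, which is exactly the cost of this model.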

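2. Non-blocking IO model

When the user thread issues a read operation, it does not need to wait; it gets a result right away. If the result is an error, it knows the data is not ready yet, so it can issue the read again. Once the data in the kernel is ready and the kernel receives another request from the user thread, it immediately copies the data to the user thread and returns.

So in fact, in the non-blocking IO model the user thread has to keep asking the kernel whether the data is ready. In other words, non-blocking IO does not give up the CPU but keeps occupying it.

A typical non-blocking IO model generally looks like this:

    while (true) {
        data = socket.read();
        if (data != error) {
            // process the data
            break;
        }
    }

However, non-blocking IO has a very serious problem: the while loop has to keep asking the kernel whether the data is ready, which drives CPU usage very high. For this reason, a while loop like this is rarely used to read data in practice.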
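To make the cost of that loop visible, the sketch below counts how many read() calls come back empty before the data arrives. It again uses a java.nio.channels.Pipe rather than a socket, this time in non-blocking mode; the class name PollingReadDemo, the nested Result holder and the delay parameter are all hypothetical names introduced for this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

public class PollingReadDemo {
    // Hypothetical holder for the data read and the number of empty polls.
    static final class Result {
        final String data;
        final long emptyPolls;

        Result(String data, long emptyPolls) {
            this.data = data;
            this.emptyPolls = emptyPolls;
        }
    }

    // Assumes the message fits into the 256-byte buffer.
    static Result pollUntilRead(String message, long delayMillis) {
        try {
            Pipe pipe = Pipe.open();
            pipe.source().configureBlocking(false); // read() now returns immediately
            Thread writer = new Thread(() -> {
                try {
                    Thread.sleep(delayMillis);
                    pipe.sink().write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
                } catch (IOException | InterruptedException e) {
                    // Sketch only: fall through and close the sink.
                } finally {
                    try {
                        pipe.sink().close();
                    } catch (IOException ignored) {
                        // nothing useful to do in a demo
                    }
                }
            });
            writer.start();

            ByteBuffer buffer = ByteBuffer.allocate(256);
            long emptyPolls = 0;
            while (buffer.hasRemaining()) {
                int n = pipe.source().read(buffer);
                if (n == -1) {
                    break;        // the writer closed its end: nothing more to read
                }
                if (n == 0) {
                    emptyPolls++; // "not ready" flag: the loop spins and asks again
                }
            }
            pipe.source().close();
            buffer.flip();
            return new Result(StandardCharsets.UTF_8.decode(buffer).toString(), emptyPolls);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Result result = pollUntilRead("ready", 100);
        System.out.println(result.data + " after " + result.emptyPolls + " empty polls");
    }
}
```

The exact count varies from run to run and machine to machine, but it is typically very large for even a short delay, and every one of those calls consumed CPU time without producing data.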

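3. Multiplexed IO model

The multiplexed IO model is currently the more widely used model. Java NIO is in fact multiplexed IO.

In the multiplexed IO model, one thread continuously polls the status of multiple sockets, and the actual IO read or write is invoked only when a socket really has a read or write event. Because one thread is enough to manage multiple sockets, the system does not need to create new processes or threads, nor maintain them, and IO resources are used only when a socket actually has a read or write event. This greatly reduces resource usage.

In Java NIO, selector.select() is used to query whether an event has arrived on any channel. If there is no event, the call blocks there, so this approach does block the user thread.

Some may say that multi-threading plus blocking IO can achieve a similar effect. However, with multi-threading plus blocking IO, each socket corresponds to one thread, which consumes a lot of resources. For long-lived connections in particular, the thread resources are never released, and if many more connections follow, this becomes a performance bottleneck.

In the multiplexed IO model, by contrast, one thread can manage multiple sockets, and resources are consumed for the actual read and write only when a socket really has a read or write event. Multiplexed IO is therefore better suited to situations with a large number of connections.

Multiplexed IO is also more efficient than the non-blocking IO model because, in non-blocking IO, the repeated checking of socket status is done by the user thread, whereas in multiplexed IO the polling of each socket's status is done by the kernel, which is much more efficient than doing it in a user thread.

Note, however, that the multiplexed IO model detects arriving events by polling and responds to them one by one. So once the handling of an event takes a long time, subsequent events are left waiting and the polling for new events is affected as well.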
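The core of this model, one thread watching several channels and being told which one is ready, can be sketched with java.nio.channels.Selector. To stay self-contained the example registers in-process Pipe channels instead of sockets; the class name SelectorReadyDemo, the method readyIndex and the use of the key attachment to carry a channel index are assumptions of this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.ArrayList;
import java.util.List;

public class SelectorReadyDemo {
    // Registers `channels` pipes with one selector, makes exactly one of them
    // readable, and returns the index that select() reports as ready.
    static int readyIndex(int channels, int writeTo) {
        List<Pipe> pipes = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (int i = 0; i < channels; i++) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                pipe.source().configureBlocking(false); // required before register()
                // Attach the index so the ready channel can be identified later.
                pipe.source().register(selector, SelectionKey.OP_READ, i);
            }
            // Only this one channel receives data.
            pipes.get(writeTo).sink().write(ByteBuffer.wrap(new byte[] {1}));

            selector.select(); // blocks until at least one registered channel is ready
            SelectionKey key = selector.selectedKeys().iterator().next();
            return (Integer) key.attachment();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe pipe : pipes) {
                try {
                    pipe.source().close();
                    pipe.sink().close();
                } catch (IOException ignored) {
                    // nothing useful to do in a demo
                }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(readyIndex(3, 1)); // prints 1
    }
}
```

The thread never touches the two idle channels; it performs a read only on the channel that the selector reported.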

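4. Signal-driven IO model

In the signal-driven IO model, when the user thread issues an IO request, a signal handler is registered for the corresponding socket and the user thread continues executing. When the kernel data is ready, the kernel sends a signal to the user thread; after receiving it, the user thread calls the IO read or write in the signal handler to perform the actual IO operation.

5. Asynchronous IO model

The asynchronous IO model is the most ideal IO model. In it, once the user thread has issued a read operation, it can immediately go on to do other things. From the kernel's point of view, when it receives an asynchronous read it returns immediately, indicating that the read request has been issued successfully, so the user thread is not blocked at all. The kernel then waits for the data to be ready and copies it to the user thread; when all of this is done, the kernel sends the user thread a signal telling it that the read operation is complete. In other words, the user thread does not need to know how the IO operation is actually carried out. It only needs to issue the request, and when it receives the success signal from the kernel, the IO operation is complete and the data can be used directly.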

In other words, in the asynchronous IO model neither stage of the IO operation blocks the user thread. Both stages are completed by the kernel, which then sends a signal telling the user thread that the operation is complete; the user thread does not need to call an IO function again to do the actual reading or writing. This differs from the signal-driven model, where the signal means only that the data is ready and the user thread must still call an IO function to perform the actual read or write. In the asynchronous IO model, the signal means that the IO operation has already completed.

Note that asynchronous IO requires underlying support from the operating system. Java 7 provides asynchronous IO through the asynchronous channel classes in java.nio.channels.
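A minimal sketch of the Java 7 API, using AsynchronousFileChannel: the read call returns a Future at once, and the buffer is already filled by the time the Future completes, so no second read call is needed. The class name AsyncFileReadDemo, the temporary file and the 1024-byte buffer are assumptions of this example, and how the JDK carries out the read underneath is platform-dependent.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousFileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

public class AsyncFileReadDemo {
    // Writes `content` to a temp file, then reads it back asynchronously.
    // Assumes the content fits into the 1024-byte buffer.
    static String readAsync(String content) {
        Path file = null;
        try {
            file = Files.createTempFile("aio-demo", ".txt");
            Files.write(file, content.getBytes(StandardCharsets.UTF_8));
            try (AsynchronousFileChannel channel =
                     AsynchronousFileChannel.open(file, StandardOpenOption.READ)) {
                ByteBuffer buffer = ByteBuffer.allocate(1024);
                // Returns immediately; the read proceeds in the background.
                Future<Integer> pending = channel.read(buffer, 0);

                // ... the calling thread is free to do other work here ...

                pending.get(); // collect the outcome; the buffer is already filled
                buffer.flip();
                return StandardCharsets.UTF_8.decode(buffer).toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            if (file != null) {
                file.toFile().delete();
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(readAsync("hello")); // prints hello
    }
}
```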

The first four IO models are all in fact synchronous IO, and only the last is truly asynchronous IO. Whether it is multiplexed IO or the signal-driven model, the second stage of the IO operation, in which the kernel copies the data, blocks the user thread.

6. Two high-performance I/O design patterns

In the traditional network service design pattern, there are two more classic patterns:

One is multi- Threads, one is a thread pool.

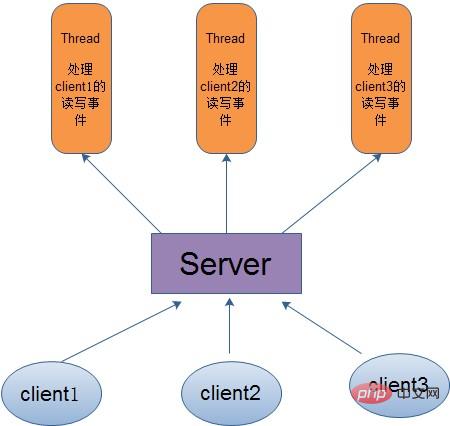

For multi-threaded mode, that is to say, when the client comes, the server will create a new thread to handle the read and write events of the client, as shown in the following figure:

This pattern is simple to work with, but because the server uses one thread per client connection, it consumes a lot of resources. When the number of connections reaches the limit and yet another user requests a connection, the result is a resource bottleneck, and in severe cases the server may crash.

To solve the problems of the one-thread-per-client pattern, the thread pool approach was proposed: create a thread pool of fixed size, and when a client arrives, take an idle thread from the pool to handle it. When the client has finished its reads and writes, the thread is handed back. This avoids the waste of creating a thread for every client and lets threads be reused.

But the thread pool has drawbacks too. If most connections are long-lived, all the threads in the pool may be occupied for some time. When another user then requests a connection, no idle thread is available to handle it, so the client connection fails and the user experience suffers. The thread pool is therefore better suited to applications with a large number of short-lived connections.
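The thread reuse described above can be observed with java.util.concurrent. In the sketch below, each "client" is just a short task that records which worker thread served it; the class name ThreadPoolDemo and the method distinctWorkers are hypothetical, and real client handling would replace the task body.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ThreadPoolDemo {
    // Serves `clients` simulated clients with a pool of `poolSize` threads and
    // returns how many distinct worker threads were actually used.
    static int distinctWorkers(int poolSize, int clients) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> workers = ConcurrentHashMap.newKeySet();
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (int i = 0; i < clients; i++) {
                // Stand-in for handling one client's reads and writes.
                pending.add(pool.submit(() -> {
                    workers.add(Thread.currentThread().getName());
                }));
            }
            for (Future<?> f : pending) {
                f.get(); // wait until every client has been served
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return workers.size();
    }

    public static void main(String[] args) {
        // Ten clients are served, but never by more than two threads.
        System.out.println(distinctWorkers(2, 10));
    }
}
```

If each task instead held its thread for a long time, as a long-lived connection would, the eleventh client would simply sit in the pool's queue, which is the drawback described above.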

Therefore, the following two high-performance IO design patterns have emerged: Reactor and Proactor.

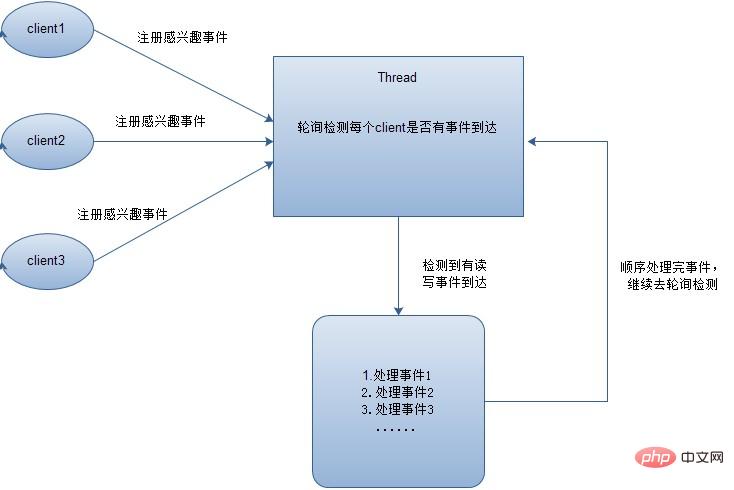

In the Reactor mode, events of interest will be registered for each client first, and then a thread will poll each client to see if an event occurs. When an event occurs, each client will be processed sequentially. Events, when all events are processed, they will be transferred to continue polling, as shown in the following figure:

As can be seen from here, among the five IO models above The multiplexed IO uses the Reactor mode. Note that the above figure shows that each event is processed sequentially. Of course, in order to improve the event processing speed, events can be processed through multi-threads or thread pools.
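A very small Reactor can be sketched on top of java.nio.channels.Selector: each client registers its event of interest together with a handler, and a single loop dispatches ready events to those handlers in turn. Everything specific here is an assumption made for illustration: the class name MiniReactorDemo, the Handler interface, the "ping" messages, and the use of in-process Pipe channels as stand-ins for client sockets.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

public class MiniReactorDemo {
    // Hypothetical handler type: called by the loop when its channel is readable.
    interface Handler {
        void handle() throws IOException;
    }

    static List<String> run(int clients) {
        List<String> handled = new ArrayList<>();
        List<Pipe> pipes = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            // 1. Register the event of interest (OP_READ) and a handler per client.
            for (int i = 0; i < clients; i++) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                pipe.source().configureBlocking(false);
                String name = "client-" + i;
                Handler handler = () -> {
                    ByteBuffer buffer = ByteBuffer.allocate(64);
                    if (pipe.source().read(buffer) > 0) {
                        buffer.flip();
                        handled.add(name + ":" + StandardCharsets.UTF_8.decode(buffer));
                    }
                };
                pipe.source().register(selector, SelectionKey.OP_READ, handler);
                // Simulate the client sending a small request.
                pipe.sink().write(ByteBuffer.wrap(("ping" + i).getBytes(StandardCharsets.UTF_8)));
            }
            // 2. Event loop: wait for events, dispatch each one, go back to waiting.
            while (handled.size() < clients) {
                selector.select();
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    ((Handler) key.attachment()).handle(); // sequential processing
                }
            }
            return handled;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe pipe : pipes) {
                try {
                    pipe.source().close();
                    pipe.sink().close();
                } catch (IOException ignored) {
                    // nothing useful to do in a demo
                }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run(2)); // the order of the two entries may vary
    }
}
```

Because the handlers run on the loop thread, a slow handler delays every event behind it; handing the handle() call to a thread pool is the usual way around that.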

In the Proactor pattern, when an event is detected, a new asynchronous operation is started and handed over to a kernel thread for processing. When the kernel thread completes the IO operation, it sends a notification that the operation is complete. The asynchronous IO model thus uses the Proactor pattern.
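In Java, the closest everyday counterpart to this completion notification is the java.nio.channels.CompletionHandler callback. The sketch below starts an asynchronous file read and lets the handler receive the already-filled buffer. The class name CompletionHandlerDemo, the temporary file and the CountDownLatch (used only so that the demo can return the result) are assumptions of this example, and the thread on which the handler runs is chosen by the JDK.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousFileChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicReference;

public class CompletionHandlerDemo {
    // Assumes the content fits into the 1024-byte buffer.
    static String readWithHandler(String content) {
        Path file = null;
        try {
            file = Files.createTempFile("proactor-demo", ".txt");
            Files.write(file, content.getBytes(StandardCharsets.UTF_8));
            AtomicReference<String> result = new AtomicReference<>();
            CountDownLatch done = new CountDownLatch(1);
            try (AsynchronousFileChannel channel =
                     AsynchronousFileChannel.open(file, StandardOpenOption.READ)) {
                ByteBuffer buffer = ByteBuffer.allocate(1024);
                // Start the operation and say who should be told when it finishes.
                channel.read(buffer, 0, buffer, new CompletionHandler<Integer, ByteBuffer>() {
                    @Override
                    public void completed(Integer bytesRead, ByteBuffer filled) {
                        // The data is already in the buffer; no further read call is needed.
                        filled.flip();
                        result.set(StandardCharsets.UTF_8.decode(filled).toString());
                        done.countDown();
                    }

                    @Override
                    public void failed(Throwable error, ByteBuffer unused) {
                        done.countDown();
                    }
                });
                done.await(); // demo only: wait so the result can be returned
            }
            return result.get();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (file != null) {
                file.toFile().delete();
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(readWithHandler("hello")); // prints hello
    }
}
```

Compared with the Reactor sketch style of "tell me when I can read", the handler here is told "the read is done", which is the defining difference between the two patterns.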

If there are any errors in the above, please forgive me; criticism and corrections are welcome!
