Hadoop is used for distributed computing: what is it?

What is Hadoop?

(1) Hadoop is an open source framework for writing and running distributed applications that process large-scale data. It is designed for offline, large-scale data analysis and is not suited to online transaction processing workloads that randomly read and write a few records at a time.

Hadoop = HDFS (the file system, i.e. the data storage layer) + MapReduce (data processing). Hadoop's data sources can take any form. It handles semi-structured and unstructured data better than relational databases do and offers more flexible processing. Whatever form the data arrives in, it is eventually converted into key/value pairs, which are the basic unit of data.
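The key/value model can be illustrated with a small word count. The following is a minimal single-process sketch in plain Python, not Hadoop's actual API: the function names `map_phase`, `shuffle`, `reduce_phase`, and `run_job` are hypothetical and exist only to show how records become key/value pairs that are grouped and then reduced.

```python
from collections import defaultdict

def map_phase(record):
    """Emit one (word, 1) key/value pair per word in a line of text."""
    for word in record.lower().split():
        yield word, 1

def shuffle(pairs):
    """Group values by key, standing in for the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Collapse all values for one key into a single (key, total) pair."""
    return key, sum(values)

def run_job(records):
    """Run map, shuffle, and reduce in one process (illustration only)."""
    pairs = [pair for record in records for pair in map_phase(record)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())

word_counts = run_job(["Hadoop stores data", "Hadoop processes data"])
print(word_counts)
```

In a real cluster the map and reduce calls would run on different machines; here they run in a single loop so that the data flow is easy to follow.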

Hadoop expresses processing as MapReduce functions rather than SQL. SQL is a declarative query language, while MapReduce jobs are written as scripts and code. For users who are accustomed to SQL on relational databases, the open source tool Hive offers a SQL-like alternative on top of Hadoop.
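To make the contrast concrete, consider a query such as `SELECT category, SUM(amount) FROM sales GROUP BY category`. The table, its columns, and the sample rows are assumptions made up for this illustration. A rough functional equivalent in plain Python (again a sketch, not Hadoop code) might look like this:

```python
from functools import reduce
from itertools import groupby
from operator import itemgetter

# Hypothetical input rows; in SQL these would be rows of a `sales` table.
sales = [
    {"category": "books", "amount": 12.0},
    {"category": "toys", "amount": 5.0},
    {"category": "books", "amount": 8.0},
    {"category": "toys", "amount": 2.5},
]

# Map: turn each row into a (key, value) pair.
mapped = map(lambda row: (row["category"], row["amount"]), sales)

# Shuffle: sort by key so that equal keys are adjacent, then group them.
shuffled = groupby(sorted(mapped, key=itemgetter(0)), key=itemgetter(0))

# Reduce: fold the values of each group into one total per key.
totals = {
    key: reduce(lambda acc, pair: acc + pair[1], group, 0.0)
    for key, group in shuffled
}
print(totals)
```

The SQL version states what result is wanted; the functional version spells out how each record is transformed and combined, which is the style MapReduce code follows.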

(2) Hadoop is a distributed computing solution.

What can Hadoop do?

Hadoop is good at log analysis; Facebook, for example, uses Hive for log analysis. In 2009, 30% of non-programmers at Facebook used HiveQL for data analysis.

Taobao also uses Hive for custom filtering in search. Pig can be used for more advanced data processing, including discovering people you may know on Twitter and LinkedIn, which achieves a recommendation effect similar to Amazon.com's collaborative filtering.

Taobao's product recommendations rely on Hadoop as well. At Yahoo!, 40% of Hadoop jobs are run using Pig, including spam identification and filtering and user feature modeling.
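One common way to express a "people you may know" job in the map/reduce style is to count mutual friends. The sketch below is a toy illustration in plain Python and is not the method Twitter or LinkedIn actually use; the friendship graph and the names `map_mutual` and `suggest` are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical friendship graph: user -> set of direct friends.
friends = {
    "ann": {"bob", "cat"},
    "bob": {"ann", "cat", "dan"},
    "cat": {"ann", "bob", "dan"},
    "dan": {"bob", "cat"},
}

def map_mutual(user_friends):
    """Emit every pair of one user's friends; each pair shares that user."""
    for a, b in combinations(sorted(user_friends), 2):
        yield (a, b), 1

def suggest(graph):
    """Count mutual friends per pair, then keep pairs not yet connected."""
    mutual_counts = Counter()
    for user_friends in graph.values():
        for pair, one in map_mutual(user_friends):
            mutual_counts[pair] += one  # reduce step: sum the 1s per pair
    return {
        pair: count
        for pair, count in mutual_counts.items()
        if pair[1] not in graph[pair[0]]
    }

suggestions = suggest(friends)
print(suggestions)
```

Pairs with more mutual friends are stronger candidates for a suggestion; a production system would combine many more signals than this single count.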

Hadoop is made up of many elements.

At the bottom is the Hadoop Distributed File System (HDFS), which stores files on all storage nodes in the Hadoop cluster.
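HDFS spreads a file across nodes by splitting it into fixed-size blocks and storing several replicas of each block. The sketch below illustrates the idea in plain Python. The round-robin placement and the name `place_blocks` are simplifying assumptions for illustration; real HDFS placement is decided by the NameNode using a rack-aware policy. The 128 MB block size and replication factor of 3 match the defaults in recent Hadoop releases.

```python
from itertools import cycle, islice

def place_blocks(file_size, block_size, nodes, replication=3):
    """Split a file into blocks and assign each block to several nodes.

    Round-robin placement is a simplification; it is NOT the policy
    that HDFS itself uses.
    """
    num_blocks = -(-file_size // block_size)  # ceiling division
    replicas = min(replication, len(nodes))
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(nodes)
        # Take `replicas` consecutive nodes, wrapping around the node list.
        placement[block_id] = list(islice(cycle(nodes), start, start + replicas))
    return placement

MB = 1024 * 1024
layout = place_blocks(300 * MB, 128 * MB, ["node1", "node2", "node3", "node4"])
for block_id, holders in layout.items():
    print(block_id, holders)
```

Because every block lives on more than one node, losing a single node does not lose data, and processing can be scheduled near whichever replica is convenient.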

Above HDFS sits the MapReduce engine, which consists of a JobTracker and a set of TaskTrackers. Together, the core distributed file system HDFS and MapReduce processing, along with the data warehouse tool Hive and the distributed database HBase, cover essentially the whole technical core of the Hadoop distributed computing platform.
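The division of labor between the JobTracker and the TaskTrackers can be sketched as a master that hands pending tasks to workers with free slots. This is a toy model in plain Python under stated assumptions: the classes, the `heartbeat` method, and the slot counts are hypothetical and do not mirror Hadoop's real classes or scheduling policy.

```python
from collections import deque

class TaskTracker:
    """A worker that can run a limited number of tasks at once."""
    def __init__(self, name, slots):
        self.name = name
        self.slots = slots
        self.running = []

    def free_slots(self):
        return self.slots - len(self.running)

class JobTracker:
    """A master that queues tasks and assigns them to workers."""
    def __init__(self, trackers):
        self.trackers = trackers
        self.pending = deque()

    def submit(self, tasks):
        self.pending.extend(tasks)

    def heartbeat(self):
        """One scheduling round: fill each worker's free slots in order."""
        for tracker in self.trackers:
            while self.pending and tracker.free_slots() > 0:
                tracker.running.append(self.pending.popleft())
        return {t.name: list(t.running) for t in self.trackers}

job_tracker = JobTracker([TaskTracker("worker1", 2), TaskTracker("worker2", 1)])
job_tracker.submit(["map-0", "map-1", "map-2", "map-3"])
assignment = job_tracker.heartbeat()
print(assignment, list(job_tracker.pending))
```

Tasks that do not fit stay queued until a worker frees a slot, which is the basic reason a cluster with more workers finishes a job sooner.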

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Java big data technology stack: Understand the application of Java in the field of big data, such as Hadoop, Spark, Kafka, etc. As the amount of data continues to increase, big data technology has become a hot topic in today's Internet era. In the field of big data, we often hear the names of Hadoop, Spark, Kafka and other technologies. These technologies play a vital role, and Java, as a widely used programming language, also plays a huge role in the field of big data. This article will focus on the application of Java in large

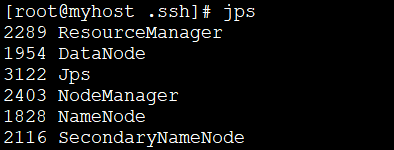

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

1: Install JDK1. Execute the following command to download the JDK1.8 installation package. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Execute the following command to decompress the downloaded JDK1.8 installation package. tar-zxvfjdk-8u151-linux-x64.tar.gz3. Move and rename the JDK package. mvjdk1.8.0_151//usr/java84. Configure Java environment variables. echo'

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

Use PHP to achieve large-scale data processing: Hadoop, Spark, Flink, etc.

May 11, 2023 pm 04:13 PM

As the amount of data continues to increase, large-scale data processing has become a problem that enterprises must face and solve. Traditional relational databases can no longer meet this demand. For the storage and analysis of large-scale data, distributed computing platforms such as Hadoop, Spark, and Flink have become the best choices. In the selection process of data processing tools, PHP is becoming more and more popular among developers as a language that is easy to develop and maintain. In this article, we will explore how to leverage PHP for large-scale data processing and how

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

In the current Internet era, the processing of massive data is a problem that every enterprise and institution needs to face. As a widely used programming language, PHP also needs to keep up with the times in data processing. In order to process massive data more efficiently, PHP development has introduced some big data processing tools, such as Spark and Hadoop. Spark is an open source data processing engine that can be used for distributed processing of large data sets. The biggest feature of Spark is its fast data processing speed and efficient data storage.

Comparison and application scenarios of Redis and Hadoop

Jun 21, 2023 am 08:28 AM

Comparison and application scenarios of Redis and Hadoop

Jun 21, 2023 am 08:28 AM

Redis and Hadoop are both commonly used distributed data storage and processing systems. However, there are obvious differences between the two in terms of design, performance, usage scenarios, etc. In this article, we will compare the differences between Redis and Hadoop in detail and explore their applicable scenarios. Redis Overview Redis is an open source memory-based data storage system that supports multiple data structures and efficient read and write operations. The main features of Redis include: Memory storage: Redis