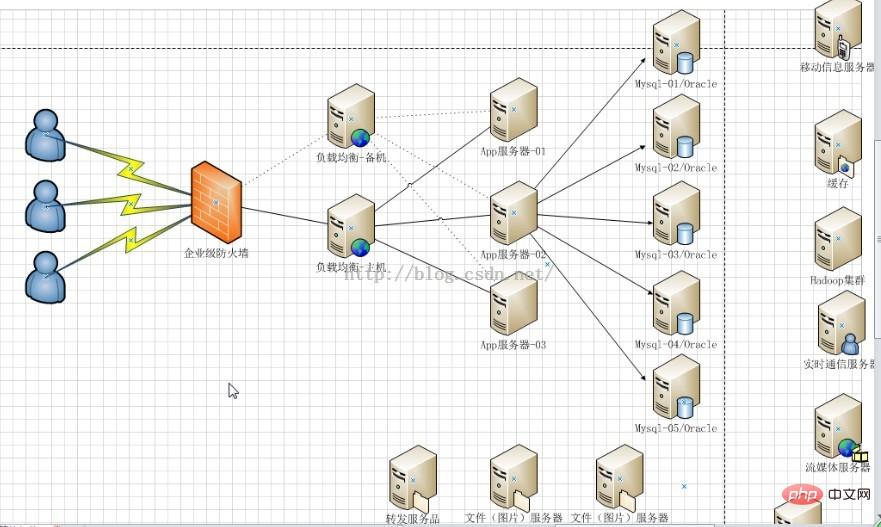

## 1. The role of load balancing

1. Forwarding function

Client requests are forwarded to different application servers according to a configured algorithm (such as weighting or round-robin polling). This reduces the load on any single server and increases the number of concurrent requests the system can handle.

2. Fault removal

Heartbeat detection is used to determine whether each application server is currently working normally. If a server goes down, requests are automatically sent to the other application servers.

3. Recovery addition

If a failed application server is detected to have resumed working, it is automatically added back to the group of servers that handles user requests.
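The heartbeat-based fault removal and recovery described above can be sketched in Python as follows. This is a minimal, generic illustration of the idea, not how nginx does it: open-source nginx detects failures passively, from failed proxied requests governed by the `max_fails` and `fail_timeout` parameters of the `server` directive, rather than by probing. The class `HealthCheckedPool`, the TCP-connect probe `tcp_probe`, and the `max_fails` threshold used here are hypothetical names chosen for the example.

```python
import socket
from typing import Callable, Dict, List


def tcp_probe(backend: str, timeout: float = 1.0) -> bool:
    """Heartbeat: treat a backend as alive if a TCP connection succeeds."""
    host, port = backend.rsplit(":", 1)
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return True
    except OSError:
        return False


class HealthCheckedPool:
    """Keeps only backends that pass the heartbeat probe in rotation."""

    def __init__(self, backends: List[str],
                 probe: Callable[[str], bool] = tcp_probe,
                 max_fails: int = 1) -> None:
        self.backends = list(backends)
        self.probe = probe
        self.max_fails = max_fails
        self.fails: Dict[str, int] = {b: 0 for b in self.backends}
        self.healthy = set(self.backends)

    def run_heartbeat(self) -> None:
        """Probe every backend once and update the active set."""
        for b in self.backends:
            if self.probe(b):
                self.fails[b] = 0
                self.healthy.add(b)        # recovery: put it back in rotation
            else:
                self.fails[b] += 1
                if self.fails[b] >= self.max_fails:
                    self.healthy.discard(b)  # fault removal

    def active(self) -> List[str]:
        """Backends currently eligible to receive requests, in config order."""
        return [b for b in self.backends if b in self.healthy]
```

In practice `run_heartbeat()` would be called periodically (for example every few seconds), and the load-balancing algorithm would pick only from `active()`.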

## 2. Nginx implements load balancing

As before, two Tomcat instances simulate two application servers, listening on ports 8080 and 8081.
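If Tomcat is not at hand, a small Python HTTP server can stand in for each application server while you test the nginx configuration. This substitute is an assumption, not part of the original setup: `PortEchoHandler` and `start_backend` are hypothetical names, and each instance simply replies with the port it listens on, so you can tell which backend answered a request.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PortEchoHandler(BaseHTTPRequestHandler):
    """Answers every GET with the port of the server that handled it."""

    def do_GET(self) -> None:
        body = f"backend on port {self.server.server_address[1]}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the console quiet


def start_backend(port: int, host: str = "0.0.0.0") -> ThreadingHTTPServer:
    """Start a stand-in application server in a background daemon thread."""
    server = ThreadingHTTPServer((host, port), PortEchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

In an interactive Python session, calling `start_backend(8080)` and `start_backend(8081)` keeps both servers listening until the interpreter exits. Binding to `0.0.0.0` lets an nginx instance on another machine reach them.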

1. Nginx’s load distribution strategy

The distribution algorithms supported by Nginx's upstream module include:

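1) Polling (round-robin): requests are handled 1:1, going to each server in turn. This is the default.

2) Weight: a server with a higher weight receives proportionally more requests, so more capable machines take on more of the load.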
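The two strategies above can be sketched as Python generators. The weighted version follows the "smooth" weighted round-robin scheme that nginx's upstream module is known for, which interleaves servers instead of sending bursts of consecutive requests to the heaviest one. Treat it as an illustration of the idea rather than a copy of nginx's C code; the function names are hypothetical.

```python
from itertools import cycle, islice
from typing import Iterator, List, Tuple


def round_robin(servers: List[str]) -> Iterator[str]:
    """Polling: hand requests to each server in turn, 1:1."""
    return cycle(servers)


def smooth_weighted_round_robin(servers: List[Tuple[str, int]]) -> Iterator[str]:
    """Weighted: each server is chosen in proportion to its weight."""
    total = sum(weight for _, weight in servers)
    current = [0] * len(servers)
    while True:
        # Every server gains its weight on each pick...
        for i, (_, weight) in enumerate(servers):
            current[i] += weight
        # ...the one with the highest running score wins (first on ties)...
        best = max(range(len(servers)), key=lambda i: current[i])
        # ...and pays back the total, so it falls behind the others.
        current[best] -= total
        yield servers[best][0]


demo = smooth_weighted_round_robin([("192.168.72.49:8080", 3), ("192.168.72.49:8081", 1)])
print(list(islice(demo, 4)))
# ['192.168.72.49:8080', '192.168.72.49:8080', '192.168.72.49:8081', '192.168.72.49:8080']
```

With weights 3 and 1, the same addresses as in the configuration example, every group of four requests sends three to port 8080 and one to port 8081, and the lighter server is slotted in between rather than after three consecutive picks of the heavier one.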

2. Configure Nginx's load balancing and distribution strategy

To apply a strategy, add the corresponding parameter after the application server address listed in the upstream block. For example, the following configuration gives the server on port 8080 a weight of 3:

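```nginx
# Both blocks go inside the http { } context of nginx.conf
upstream tomcatserver1 {
    # weight=3: this server receives about 3 of every 4 requests
    server 192.168.72.49:8080 weight=3;
    # no weight given, so the default weight=1 applies
    server 192.168.72.49:8081;
}

server {
    listen 80;
    server_name 8080.max.com;

    #charset koi8-r;
    #access_log logs/host.access.log main;

    location / {
        # forward requests to the upstream group defined above
        proxy_pass http://tomcatserver1;
        index index.html index.htm;
    }
}
```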
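To check that requests really are split roughly 3:1, you can send a batch of requests through nginx and count which backend answered. This sketch assumes that each backend's page identifies the server that produced it (a default Tomcat page does not, so you would need to deploy such a page) and that `8080.max.com` resolves to the nginx host, for example through a hosts-file entry. `fetch_body` and `tally_backends` are hypothetical helper names.

```python
import urllib.request
from collections import Counter
from typing import Callable


def fetch_body(url: str, timeout: float = 5.0) -> str:
    """Fetch a page and return its body text, trimmed of whitespace."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace").strip()


def tally_backends(url: str, n: int,
                   fetch: Callable[[str], str] = fetch_body) -> Counter:
    """Send n requests to url and count the distinct response bodies."""
    return Counter(fetch(url) for _ in range(n))
```

For example, `tally_backends("http://8080.max.com/", 100)` should report roughly 75 responses from the 8080 backend and 25 from the 8081 backend. The split is only approximate because each nginx worker process keeps its own round-robin state.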

The above is the detailed content of How to configure nginx as a load balancer. For more information, please follow other related articles on the PHP Chinese website!
