What to do if the amount of Redis data is too large

Years of running online services have shown that Redis, an in-memory database, offers high performance and stability. However, we stuffed too much data into Redis and its memory footprint grew very large, and when something goes wrong with an instance like that, the result can be a disaster (I think many companies have run into this). Here are some of the problems we encountered:

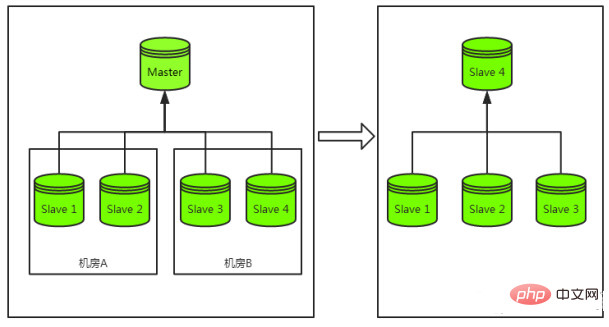

When the master goes down, our most common disaster-recovery strategy is a master switchover. Specifically, one of the remaining replicas in the cluster is selected and promoted to master. After the promotion, the other replicas are re-attached to the new master as its replicas, which finally restores the complete master-replica cluster structure. That is the complete disaster-recovery process, and its most costly step is not switching the master but re-attaching the replicas: a re-attached replica may need a full resynchronization, in which the master produces an RDB snapshot of its entire data set and transfers it, so the larger the memory footprint, the longer this takes.
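To make the switchover sequence concrete, here is a minimal Python sketch that only plans the commands and does not talk to Redis. The `Replica` class, the `plan_failover` function, and the host addresses are hypothetical, and promoting the replica with the largest replication offset is an assumption (loosely similar to how Redis Sentinel ranks candidates), not necessarily the exact rule used in our setup. `REPLICAOF` exists from Redis 5.0; older versions use `SLAVEOF`.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    host: str
    port: int
    repl_offset: int  # hypothetical field: replication offset, e.g. read from INFO replication


def plan_failover(replicas):
    """Pick a replica to promote and list the command to run on each node.

    Assumption: the replica with the largest replication offset (the most
    up-to-date copy) is promoted.
    """
    if not replicas:
        raise ValueError("no replica available to promote")
    new_master = max(replicas, key=lambda r: r.repl_offset)
    # Step 1: promote the chosen replica to master.
    plan = [(f"{new_master.host}:{new_master.port}", "REPLICAOF NO ONE")]
    # Step 2: re-attach every other replica to the new master. This is the
    # costly step, since a re-attached replica may need a full resync.
    for r in replicas:
        if r is not new_master:
            plan.append((f"{r.host}:{r.port}",
                         f"REPLICAOF {new_master.host} {new_master.port}"))
    return new_master, plan


if __name__ == "__main__":
    nodes = [Replica("10.0.0.2", 6379, 1500),
             Replica("10.0.0.3", 6379, 1800),
             Replica("10.0.0.4", 6379, 1200)]
    master, steps = plan_failover(nodes)
    for node, command in steps:
        print(node, command)
```

For three replicas at offsets 1500, 1800 and 1200, the sketch promotes the one at 1800 and emits two `REPLICAOF` commands for the others; those two re-attachments are the expensive part of the process, and their cost grows with the size of the data set.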

Solution

The solution, of course, is to keep memory usage as low as possible. In practice, we do the following:

1 Set the expiration time

Set an expiration time (TTL) on time-sensitive keys so that Redis's own expired-key cleanup (keys are removed lazily when accessed and also by periodic active expiration) reclaims their memory. This also saves the business trouble, since it no longer needs to clean up those keys on a schedule.
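A minimal sketch of setting a TTL at write time, assuming the redis-py client library; `cache_with_ttl` and the key name in the usage note are hypothetical. Using `SET` with the `EX` option writes the value and its expiration in one command, so there is no window in which the key exists without a TTL, as there can be with a separate `SET` followed by `EXPIRE`.

```python
def cache_with_ttl(client, key, value, ttl_seconds):
    """Write key=value and let Redis expire it after ttl_seconds.

    client is assumed to be a redis-py Redis instance (anything with a
    compatible set() method works). set(..., ex=n) maps to SET key value EX n.
    """
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be a positive number of seconds")
    return client.set(key, value, ex=ttl_seconds)
```

With a real connection such as `r = redis.Redis()`, calling `cache_with_ttl(r, "session:42", payload, 3600)` and then `r.ttl("session:42")` should report roughly 3600 seconds remaining; once the TTL elapses, Redis reclaims the memory without any scheduled cleanup job on the business side.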

2 Do not store garbage in Redis

This sounds like stating the obvious, but does anyone else have the same problem we did?

3 Clean up useless data in a timely manner
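Timely cleanup is easiest if, as assumed here, each business's keys share a distinctive prefix (the `bizB:` prefix and the `delete_by_prefix` helper are hypothetical). This sketch uses redis-py's `scan_iter`, which walks the keyspace incrementally with `SCAN` instead of blocking the server with `KEYS`, and deletes in batches with `UNLINK` (Redis 4.0+), which frees memory in a background thread; on older versions `DEL` would be used instead.

```python
def delete_by_prefix(client, prefix, batch_size=500):
    """Delete all keys starting with prefix and return how many were removed.

    client is assumed to be a redis-py Redis instance. The prefix is used as a
    SCAN MATCH pattern, so it must not contain glob characters (* ? [ ]).
    """
    deleted = 0
    batch = []
    # SCAN iterates incrementally, so the server is never blocked for long.
    for key in client.scan_iter(match=prefix + "*", count=batch_size):
        batch.append(key)
        if len(batch) >= batch_size:
            # UNLINK removes the keys and reclaims their memory in the background.
            deleted += client.unlink(*batch)
            batch = []
    if batch:
        deleted += client.unlink(*batch)
    return deleted
```

For example, `delete_by_prefix(redis.Redis(), "bizB:")` would remove every key of a retired business whose keys all start with `bizB:`; running it off-peak keeps the extra load away from live traffic.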

For example, if one Redis instance carries data for three businesses and two of them go offline after a while, the data belonging to those two businesses should be cleaned up.

4 Try to compress the data
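As a minimal sketch of compressing long text before it is written to Redis, the helpers below use Python's standard `zlib` module; the function names and the compression level are illustrative choices, not something our setup prescribes. The compressed value is binary, so with redis-py the client that reads it back should not be created with `decode_responses=True`.

```python
import zlib


def compress_value(text: str, level: int = 6) -> bytes:
    """Encode text as UTF-8 and DEFLATE-compress it before storing it in Redis."""
    return zlib.compress(text.encode("utf-8"), level)


def decompress_value(blob: bytes) -> str:
    """Reverse compress_value after reading the raw bytes back from Redis."""
    return zlib.decompress(blob).decode("utf-8")


if __name__ == "__main__":
    # Repetitive long text compresses very well; short or random data may not.
    article = "Redis keeps the whole data set in memory. " * 200
    blob = compress_value(article)
    print(len(article.encode("utf-8")), "bytes ->", len(blob), "bytes")
    assert decompress_value(blob) == article
```

Compression trades CPU time on every read and write for memory, so it pays off mainly for large, text-like values; for very short values the zlib header and checksum can even make the stored result larger.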

For example, compressing long text values can greatly reduce memory usage.

5 Pay attention to memory growth and locate large keys
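Besides `redis-cli --bigkeys`, which samples the keyspace and reports the biggest key of each data type, the largest keys by estimated memory can be found with a small script. This sketch assumes redis-py, whose `scan_iter` and `memory_usage` wrap `SCAN` and `MEMORY USAGE` (Redis 4.0+); `largest_keys` is a hypothetical helper. It keeps only the current top N in a min-heap, so its own memory stays small even on a big instance.

```python
import heapq


def largest_keys(client, top_n=10, match="*", scan_count=1000):
    """Return up to top_n (bytes, key) pairs by MEMORY USAGE, largest first.

    client is assumed to be a redis-py Redis instance. This issues one
    MEMORY USAGE call per key, so run it off-peak or against a replica.
    """
    if top_n <= 0:
        return []
    heap = []  # min-heap of (size, key) holding at most top_n entries
    for key in client.scan_iter(match=match, count=scan_count):
        size = client.memory_usage(key)
        if size is None:  # key was deleted or expired while scanning
            continue
        if len(heap) < top_n:
            heapq.heappush(heap, (size, key))
        elif size > heap[0][0]:
            # Evict the smallest of the current top N.
            heapq.heapreplace(heap, (size, key))
    return sorted(heap, reverse=True)
```

To watch overall growth rather than individual keys, the `used_memory` field of `client.info("memory")` can be recorded periodically; a key that keeps appearing higher in this list between runs is a good candidate for the "unexpected growth" the business should look at.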

Whether you are a DBA or a developer, if you use Redis you must keep an eye on memory; otherwise you are not really doing your job. Analyze which keys in the Redis instance are relatively large, to help the business quickly locate abnormal keys (keys that grow unexpectedly are often the source of problems).

6 pika

If you really don't want to go through all this, migrate the business to pika, a relatively new open-source project. Pika speaks the Redis protocol but keeps its data on disk (it is built on RocksDB), so you don't have to watch memory so closely, and the problems caused by a large Redis memory footprint largely stop being problems.

The above is the detailed content of What to do if the amount of redis data is too large. For more information, please follow other related articles on the PHP Chinese website!
