Configuring Nginx for load balancing (illustrated)

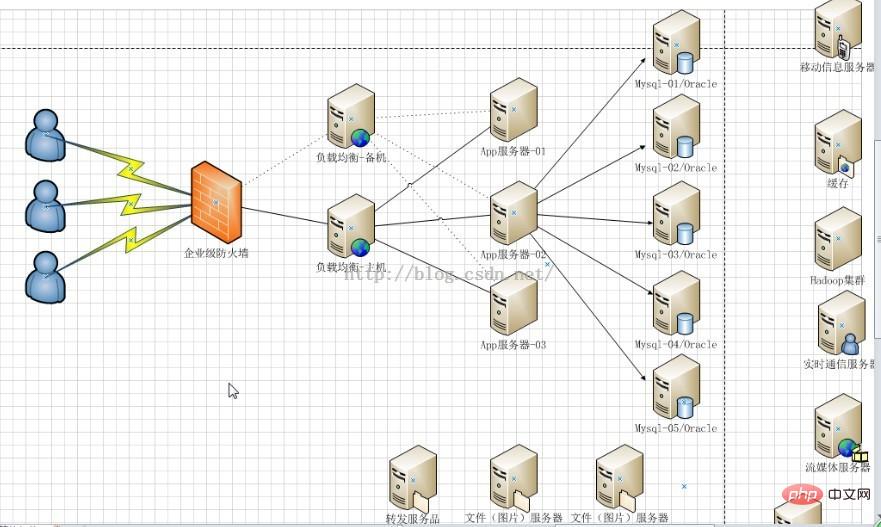

When enterprises tackle high concurrency, they generally have two strategies: hardware and software. On the hardware side, a dedicated load balancer is added to distribute the large volume of requests. On the software side, a solution can be added at either of the two high-concurrency bottlenecks: the database or the web server. The most common approach is to add a load-balancing layer in front of the web servers, using Nginx.

1. The role of load balancing

1. Forwarding function

Forwards client requests to different application servers according to a chosen algorithm (weighted or round-robin), reducing the pressure on any single server and increasing the system's concurrency capacity.

2. Fault removal

Uses heartbeat detection to determine whether an application server is currently working normally. If a server goes down, requests are automatically sent to the other application servers.

3. Recovery addition

When a failed application server is detected to have resumed work, it is automatically added back to the pool of servers that handle user requests.

2. Nginx implements load balancing

This example uses two Tomcat instances to simulate two application servers, listening on ports 8080 and 8081.

1. Nginx's load distribution strategy

Nginx's upstream module supports distribution algorithms including:

1) Round-robin (polling): requests are handled in turn, 1:1 (default)

Requests are assigned to the application servers one at a time, in the order they arrive. If an application server goes down, it is automatically removed and the remaining servers continue to be polled.

2) weight: the more capable server does more of the work

Configuring a weight sets the polling probability; the weight is proportional to the share of requests a server receives. This is useful when the application servers have uneven performance.

3) ip_hash

Each request is assigned according to a hash of the client's IP address, so a given visitor always reaches the same application server. This solves the session-sharing problem.
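
As a rough illustration of the strategies listed above, the sketch below shows how a default round-robin pool and an ip_hash pool might be declared. The upstream names tomcat_round_robin and tomcat_ip_hash are made up for this example, and the addresses simply reuse the two Tomcat instances from this article.

```nginx
# Hypothetical upstream names; addresses are the article's two Tomcat instances.
# Both blocks belong inside the http { } context of nginx.conf.

# Round-robin (default): no balancing directive is needed.
upstream tomcat_round_robin {
    server 192.168.72.49:8080;
    server 192.168.72.49:8081;
}

# ip_hash: requests from the same client IP go to the same server.
upstream tomcat_ip_hash {
    ip_hash;
    server 192.168.72.49:8080;
    server 192.168.72.49:8081;
}
```

Only one of the two pools would normally be referenced by proxy_pass. Note that ip_hash keys on the client address as Nginx sees it, so clients arriving through a shared proxy or NAT will tend to land on the same server.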

2. Configure Nginx's load balancing and distribution strategy

The distribution strategy is set by appending parameters after each application server address in the upstream block, for example:

    # Pool of application servers; weight=3 sends about 3 of every 4 requests to port 8080
    upstream tomcatserver1 {
        server 192.168.72.49:8080 weight=3;
        server 192.168.72.49:8081;
    }

    server {
        listen 80;
        server_name 8080.max.com;

        #charset koi8-r;
        #access_log logs/host.access.log main;

        location / {
            # Forward every request to the upstream pool defined above
            proxy_pass http://tomcatserver1;
            index index.html index.htm;
        }
    }

The configuration above is all that is needed. When a client visits 8080.max.com, every request first passes through the Nginx reverse proxy server, because the proxy_pass address is configured. When Nginx forwards the request to a destination host, it reads the upstream block tomcatserver1 and its distribution policy. Because tomcat1 is configured with a weight of 3, Nginx sends most requests to tomcat1 on server 49 (port 8080) and a smaller share to tomcat2 (port 8081), which achieves conditional load balancing. The condition here is, of course, the request-processing capacity of the hardware behind servers 1 and 2.

3. Other Nginx upstream parameters

    upstream myServer {
        server 192.168.72.49:9090 down;
        server 192.168.72.49:8080 weight=2;
        server 192.168.72.49:6060;
        server 192.168.72.49:7070 backup;
    }

1) down

Marks the server it follows as not currently participating in load balancing.

2) weight

The default is 1. The larger the weight, the larger the share of the load the server receives.

3) max_fails

The number of failed attempts allowed within fail_timeout before the server is considered unavailable; the default is 1. What counts as a failed attempt is defined by the proxy_next_upstream directive.

4) fail_timeout

How long the server is paused (treated as unavailable) after max_fails failures; it is also the window in which those failures are counted. The default is 10 seconds.

5) backup

Requests go to the backup machine only when all other non-backup machines are down or busy, so this machine carries the lightest load.
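
The list above describes max_fails and fail_timeout, but the example does not show them in use. Here is a minimal sketch, assuming the same addresses as that example and an illustrative upstream name myServerWithChecks; the values 3 and 30s are arbitrary choices for demonstration, not recommendations.

```nginx
# Hypothetical upstream name; thresholds are illustrative only.
upstream myServerWithChecks {
    # After 3 failed attempts within 30s, skip this server for 30s.
    server 192.168.72.49:8080 weight=2 max_fails=3 fail_timeout=30s;
    server 192.168.72.49:6060 max_fails=3 fail_timeout=30s;

    # Receives requests only when the servers above are unavailable.
    server 192.168.72.49:7070 backup;
}
```

These checks are passive: Nginx learns that a server has failed only from real client requests, so tuning max_fails and fail_timeout is a trade-off between reacting quickly and taking a healthy server out of rotation after a brief hiccup.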

3. High availability using Nginx

Achieving high availability for the website means providing multiple (n) servers that publish the same service and adding a load-balancing server to distribute requests, so that each server handles a reasonably full share of requests under high concurrency. The load-balancing server itself must likewise be highly available; otherwise, if it goes down, the application servers behind it are cut off and cannot do their work.

The solution for achieving high availability is to add redundancy: deploy n Nginx servers to avoid the single point of failure described above.

4. Summary

To summarize, load balancing, whether implemented in software or hardware, mainly distributes a large number of concurrent requests across different servers according to certain rules, reducing the instantaneous pressure on any one server and improving the website's ability to withstand concurrent load. The author believes Nginx is widely used for load balancing because of its flexible configuration: a single nginx.conf file solves most problems. Whether Nginx is creating a virtual server, acting as a reverse proxy, or performing the load balancing described in this article, almost everything is done in this configuration file; the server only needs to have Nginx installed and running. Nginx is also lightweight and achieves good results without occupying many server resources.
