Detailed explanation of Java thread pools

Thread pool overview

1. A thread pool manages a group of threads, which reduces the resource consumption caused by repeatedly creating and destroying threads.

A thread is itself an object. Creating an object involves class loading and allocation, and destroying it involves garbage collection (GC); both carry resource overhead.

2. It improves response speed. When a task arrives, handing it to a thread already waiting in the pool is generally faster than creating a new thread first.

3. It enables reuse. A thread returns to the pool after finishing a task and can then run the next one.
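The reuse described in point 3 can be observed directly. The following is a minimal sketch; the class name `ReuseDemo` and the method `workerNames` are illustrative, not part of any library. It runs several small tasks on a fixed pool and records which threads executed them.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class ReuseDemo {
    /** Runs taskCount small tasks on a pool of poolSize threads and returns the names of the threads used. */
    static Set<String> workerNames(int poolSize, int taskCount) throws InterruptedException {
        Set<String> names = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        for (int i = 0; i < taskCount; i++) {
            pool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        pool.shutdown();                              // accept no new tasks, finish the queued ones
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return names;
    }

    public static void main(String[] args) throws InterruptedException {
        // Ten tasks are executed, but the set never holds more than two thread names.
        System.out.println(workerNames(2, 10));
    }
}
```

Ten tasks share at most two threads here, so thread creation is paid for twice rather than ten times.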


Thread pool execution

An analogy

Core threads are like the company's regular employees.

Non-core threads are like outsourced employees.

The blocking queue is like the requirements backlog.

Submitting a task is like submitting a requirement.

Formal execution


● When a task is submitted and the number of live threads in the pool is less than corePoolSize, the pool creates a core thread to handle the task.

● If the core threads are full, that is, the number of threads equals corePoolSize, a newly submitted task is put into the task queue workQueue to wait for execution.

● When the number of live threads equals corePoolSize and workQueue is also full, the pool checks whether the number of threads has reached maximumPoolSize. If not, it creates a non-core thread to execute the submitted task.

● If the number of threads has reached maximumPoolSize (and workQueue is still full) when a new task arrives, the task is handled by the rejection policy.
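The four steps above can be traced with a deliberately tiny pool. This is a sketch under assumed settings (corePoolSize 1, maximumPoolSize 2, a queue with one slot); the class name `SubmitFlowDemo` is illustrative. Every task blocks on a latch so that the pool state can be inspected between submissions.

```java
import java.util.Arrays;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SubmitFlowDemo {
    /** Returns {pool size after task 1, queue size after task 2, pool size after task 3, 1 if task 4 was rejected}. */
    static int[] observe() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 30L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1));
        Runnable blocker = () -> {
            try {
                release.await();                 // keep the worker busy until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        int[] seen = new int[4];
        try {
            pool.execute(blocker);               // fewer threads than corePoolSize: new core thread
            seen[0] = pool.getPoolSize();
            pool.execute(blocker);               // core thread busy: task waits in workQueue
            seen[1] = pool.getQueue().size();
            pool.execute(blocker);               // queue full: new non-core thread
            seen[2] = pool.getPoolSize();
            try {
                pool.execute(blocker);           // queue full and maximumPoolSize reached
            } catch (RejectedExecutionException e) {
                seen[3] = 1;                     // the default rejection policy threw
            }
        } finally {
            release.countDown();                 // let the blocked tasks finish
            pool.shutdown();
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(observe())); // prints [1, 1, 2, 1]
    }
}
```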

Several saturation strategies
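The JDK ships four `RejectedExecutionHandler` implementations as nested classes of `ThreadPoolExecutor`: `AbortPolicy` (the default, throws `RejectedExecutionException`), `CallerRunsPolicy` (runs the task on the submitting thread), `DiscardPolicy` (silently drops the task) and `DiscardOldestPolicy` (drops the oldest queued task and retries). The sketch below saturates a one-thread pool with a one-slot queue and reports where the overflowing task ran; `SaturationDemo` and `overflowRanOn` are illustrative names.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class SaturationDemo {
    /** Returns the name of the thread that ran the overflowing task, or null if it never ran. */
    static String overflowRanOn(RejectedExecutionHandler handler) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        AtomicReference<String> ranOn = new AtomicReference<>();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1), handler);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        try {
            pool.execute(blocker);               // occupies the only thread
            pool.execute(blocker);               // fills the one-slot queue
            pool.execute(() -> ranOn.set(Thread.currentThread().getName())); // overflows
        } finally {
            release.countDown();
            pool.shutdown();
        }
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return ranOn.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(overflowRanOn(new ThreadPoolExecutor.CallerRunsPolicy()));    // the submitting thread
        System.out.println(overflowRanOn(new ThreadPoolExecutor.DiscardPolicy()));       // null, task dropped
        System.out.println(overflowRanOn(new ThreadPoolExecutor.DiscardOldestPolicy())); // a pool thread
        try {
            overflowRanOn(new ThreadPoolExecutor.AbortPolicy());
        } catch (RejectedExecutionException e) {
            System.out.println("rejected");
        }
    }
}
```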


Thread pool exception handling

An exception thrown while a task runs in a thread pool may be caught by the pool itself, so the submitter may never learn that the task failed. Thread pool exception handling therefore needs to be considered explicitly.

Method one:
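One common approach, assumed here to be what this method refers to, is to catch exceptions inside the task itself so that they are handled before the pool can swallow them. A minimal sketch with the illustrative names `CatchInTaskDemo` and `guarded`:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class CatchInTaskDemo {
    /** Wraps a task so that anything it throws is passed to onError instead of disappearing. */
    static Runnable guarded(Runnable task, Consumer<Throwable> onError) {
        return () -> {
            try {
                task.run();
            } catch (Throwable t) {
                onError.accept(t);               // log, count, or report the failure here
            }
        };
    }

    public static void main(String[] args) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(1);
        pool.submit(guarded(
                () -> { throw new IllegalStateException("boom"); },
                t -> System.err.println("Task failed: " + t)));
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```

Without the wrapper, a task passed to `submit` that throws would store the exception in its `Future` and print nothing.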


Method two:
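Another common approach, again an assumption about what this method refers to, is to submit the task with `submit` and call `Future.get()`, which rethrows the task's exception wrapped in an `ExecutionException`. The names `FutureGetDemo` and `failureOf` are illustrative.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureGetDemo {
    /** Runs the task on a pool and returns the exception it threw, or null if it completed normally. */
    static Throwable failureOf(Callable<?> task) throws InterruptedException {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Future<?> future = pool.submit(task);
            future.get();                        // blocks; rethrows a task failure as ExecutionException
            return null;
        } catch (ExecutionException e) {
            return e.getCause();                 // the original exception thrown inside the task
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Throwable cause = failureOf(() -> { throw new IllegalArgumentException("bad input"); });
        System.out.println(cause);               // prints java.lang.IllegalArgumentException: bad input
    }
}
```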


Thread pool work queue

● ArrayBlockingQueue

● LinkedBlockingQueue

● SynchronousQueue

● DelayQueue

● PriorityBlockingQueue

==ArrayBlockingQueue==

● Backed by an array whose capacity is fixed when the queue is created

● Uses a single reentrant lock (non-fair by default) shared by enqueue and dequeue, so the two operations are mutually exclusive

● Bounded design: once the queue is full, no element can be added until one is removed

● Allocates a contiguous block of memory up front. If the initial capacity is too large, memory is easily wasted; if it is too small, additions easily fail.
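The bounded behaviour can be checked with the non-blocking `offer` method, which returns `false` when the queue is full instead of waiting as `put` does. A small sketch; `ArrayQueueDemo` and `accepted` are illustrative names.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ArrayQueueDemo {
    /** Offers `attempts` elements to a queue of the given capacity and returns how many were accepted. */
    static int accepted(int capacity, int attempts) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        int ok = 0;
        for (int i = 0; i < attempts; i++) {
            if (queue.offer(i)) {                // false once the array is full
                ok++;
            }
        }
        return ok;
    }

    public static void main(String[] args) {
        System.out.println(accepted(2, 5));      // prints 2

        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(1);
        queue.offer(1);
        System.out.println(queue.offer(2));      // prints false: the queue is full
        queue.poll();                            // removing an element frees the slot
        System.out.println(queue.offer(2));      // prints true
    }
}
```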

==LinkedBlockingQueue==

● Uses a linked-list data structure

● Non-contiguous memory space

● Uses two reentrant locks, one for enqueue and one for dequeue, and uses Condition objects to make threads wait and to wake them up

● Optionally bounded: with the default constructor the capacity is Integer.MAX_VALUE, which is unbounded in practice
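The difference between the default and an explicit capacity is visible through `remainingCapacity()` and `offer`. A brief sketch with the illustrative class name `LinkedQueueDemo`:

```java
import java.util.concurrent.LinkedBlockingQueue;

class LinkedQueueDemo {
    /** Capacity of a LinkedBlockingQueue created with the default constructor. */
    static int defaultCapacity() {
        return new LinkedBlockingQueue<Runnable>().remainingCapacity();
    }

    /** Fills a queue of the given capacity, then reports whether one more element is accepted. */
    static boolean offerWhenFull(int capacity) {
        LinkedBlockingQueue<Integer> queue = new LinkedBlockingQueue<>(capacity);
        for (int i = 0; i < capacity; i++) {
            queue.offer(i);
        }
        return queue.offer(-1);
    }

    public static void main(String[] args) {
        System.out.println(defaultCapacity() == Integer.MAX_VALUE); // prints true
        System.out.println(offerWhenFull(3));                       // prints false
    }
}
```

Passing an explicit capacity is the usual way to keep a pool's backlog from growing without limit.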

==SynchronousQueue==

● The internal capacity is 0

● Every remove operation has to wait for an insert operation

● Every insert operation has to wait for a remove operation

● Once an inserting thread and a removing thread are both present, the element is handed directly from the inserting thread to the removing thread. The queue acts as a channel and does not store elements

● Because tasks are handed off directly rather than buffered, it is typically the fastest of these queues at passing tasks to threads.
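The hand-off behaviour can be sketched as follows; `HandOffDemo` and its methods are illustrative names. `offer` fails when no consumer is waiting, while `put` and `take` meet each other directly.

```java
import java.util.concurrent.SynchronousQueue;

class HandOffDemo {
    /** With no thread waiting in take(), offer cannot hand the element to anyone. */
    static boolean offerWithoutConsumer() {
        return new SynchronousQueue<String>().offer("lost");
    }

    /** Hands a value from a producer thread to the calling thread through the queue. */
    static String handOff(String value) throws InterruptedException {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        Thread producer = new Thread(() -> {
            try {
                queue.put(value);                // blocks until a consumer takes the value
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        String received = queue.take();          // blocks until the producer arrives
        producer.join();
        return received;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(offerWithoutConsumer()); // prints false
        System.out.println(handOff("task"));        // prints task
    }
}
```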

==PriorityBlockingQueue==

● Unbounded design; the capacity is limited in practice only by system resources.

● When the queue holds more than one element, elements are ordered by priority
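A short sketch of the ordering, using natural ordering of integers; `PriorityDemo` and `drainInOrder` are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

class PriorityDemo {
    /** Inserts the values in the given order and returns them in the order the queue releases them. */
    static List<Integer> drainInOrder(int... values) {
        PriorityBlockingQueue<Integer> queue = new PriorityBlockingQueue<>();
        for (int v : values) {
            queue.put(v);                        // never blocks, the queue grows as needed
        }
        List<Integer> out = new ArrayList<>();
        Integer next;
        while ((next = queue.poll()) != null) {  // the smallest element comes out first
            out.add(next);
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(drainInOrder(5, 1, 3)); // prints [1, 3, 5]
    }
}
```

When this queue backs a thread pool, the queued tasks themselves must be `Comparable`, or a `Comparator` must be supplied to the queue's constructor.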

==DelayQueue==

● Unbounded design

● Adding (put) never blocks; removing (take) blocks until an element has expired

● Each element has an expiration time

● Only expired elements can be taken out
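Elements of a `DelayQueue` must implement `Delayed`. The sketch below uses a hypothetical element class `Expiring` (not a JDK type) to show that `poll` returns nothing before expiry and that `take` releases elements in expiry order, not insertion order.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

class DelayDemo {
    /** Hypothetical element that expires delayMillis after it is created. */
    static final class Expiring implements Delayed {
        final String name;
        final long expiresAtNanos;

        Expiring(String name, long delayMillis) {
            this.name = name;
            this.expiresAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMillis);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(expiresAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    /** put returns at once, but poll hands out nothing until the element has expired. */
    static boolean pollBeforeExpiry() {
        DelayQueue<Expiring> queue = new DelayQueue<>();
        queue.put(new Expiring("later", 10_000));
        return queue.poll() == null;
    }

    /** Returns element names in the order take() releases them. */
    static String[] takeOrder() throws InterruptedException {
        DelayQueue<Expiring> queue = new DelayQueue<>();
        queue.put(new Expiring("slow", 300));
        queue.put(new Expiring("fast", 100));
        return new String[] { queue.take().name, queue.take().name };
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(pollBeforeExpiry());               // prints true
        System.out.println(String.join(",", takeOrder()));    // prints fast,slow
    }
}
```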

Commonly used thread pools

● newFixedThreadPool (thread pool with a fixed number of threads)

● newCachedThreadPool (thread pool that can cache threads)

● newSingleThreadExecutor (single-threaded thread pool)

● newScheduledThreadPool (thread pool for scheduled and periodic execution)

==newFixedThreadPool==


Features

1. The number of core threads is the same as the maximum number of threads

2. There is no idle timeout for non-core threads, that is, keepAliveTime is 0

3. The blocking queue is an unbounded queue LinkedBlockingQueue

Working mechanism:

● Submit the task

● If the number of threads is less than the number of core threads, create a core thread to execute the task

● If the number of threads equals the number of core threads, add the task to the LinkedBlockingQueue blocking queue

● When a thread finishes a task, it takes the next task from the blocking queue and continues executing.
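The features listed above can be read back from the pool that `Executors.newFixedThreadPool` returns, and the same configuration can be built by hand with the `ThreadPoolExecutor` constructor. This is a sketch; `FixedPoolDemo`, `describe` and `equivalent` are illustrative names, and the cast relies on the factory returning a `ThreadPoolExecutor` instance.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class FixedPoolDemo {
    /** A hand-built pool with the same settings as newFixedThreadPool(nThreads). */
    static ThreadPoolExecutor equivalent(int nThreads) {
        return new ThreadPoolExecutor(
                nThreads, nThreads,              // core size equals maximum size
                0L, TimeUnit.MILLISECONDS,       // keepAliveTime is 0
                new LinkedBlockingQueue<Runnable>()); // queue with default capacity
    }

    /** Returns "core/max/keepAliveSeconds/queueType" for newFixedThreadPool(nThreads). */
    static String describe(int nThreads) {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(nThreads);
        try {
            return pool.getCorePoolSize() + "/" + pool.getMaximumPoolSize() + "/"
                    + pool.getKeepAliveTime(TimeUnit.SECONDS) + "/"
                    + pool.getQueue().getClass().getSimpleName();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(describe(4));         // prints 4/4/0/LinkedBlockingQueue
        ThreadPoolExecutor manual = equivalent(4);
        System.out.println(manual.getMaximumPoolSize()); // prints 4
        manual.shutdown();
    }
}
```

Because the queue is effectively unbounded, a producer that outpaces the workers can grow the backlog until memory runs out.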

==newCachedThreadPool==


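Features

● The number of core threads is 0

● The maximum number of threads is Integer.MAX_VALUE

● The blocking queue is SynchronousQueue

● The idle keep-alive time for non-core threads is 60 seconds

Working mechanism:

● Submit the task

● Because there are no core threads, the task is offered directly to the SynchronousQueue.

● If an idle thread is waiting, it takes the task and executes it.

● If there is no idle thread, a new thread is created to execute the task.

● A thread that has finished its task stays alive for another 60 seconds. If it receives a task during that time it keeps living; otherwise it is destroyed.

Usage scenarios

Suited to running a large number of short-lived small tasks concurrently.

With SynchronousQueue as the work queue, the queue itself places no limit on the number of tasks waiting to be executed. The maximum pool size should therefore be limited to a reasonable finite value rather than Integer.MAX_VALUE; otherwise the number of worker threads may keep growing until system resources can no longer bear it.

If the application really needs a fairly large work queue capacity but wants to avoid the problems an unbounded work queue can cause, SynchronousQueue is worth considering. The SynchronousQueue implementation does not use any buffer space.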
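The advice to cap the maximum pool size can be sketched as a cached-style pool with a finite upper bound. The names `BoundedCachedPoolDemo`, `boundedCachedPool` and `peakThreads` are illustrative, and the choice of `CallerRunsPolicy` for overflowing tasks is an assumption made for this example, not something the text prescribes.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class BoundedCachedPoolDemo {
    /** Like newCachedThreadPool (0 core threads, SynchronousQueue, 60 s idle time) but capped at maxThreads. */
    static ThreadPoolExecutor boundedCachedPool(int maxThreads) {
        return new ThreadPoolExecutor(
                0, maxThreads,
                60L, TimeUnit.SECONDS,
                new SynchronousQueue<>(),
                new ThreadPoolExecutor.CallerRunsPolicy()); // assumed policy: overflow runs on the submitter
    }

    /** Submits taskCount short tasks and returns the largest number of pool threads that ever existed. */
    static int peakThreads(int maxThreads, int taskCount) throws InterruptedException {
        ThreadPoolExecutor pool = boundedCachedPool(maxThreads);
        for (int i = 0; i < taskCount; i++) {
            pool.execute(() -> {
                try {
                    Thread.sleep(10);            // simulated short task
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return pool.getLargestPoolSize();
    }

    public static void main(String[] args) throws InterruptedException {
        // Twenty tasks, but the pool never grows beyond three threads.
        System.out.println(peakThreads(3, 20));
    }
}
```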

==newSingleThreadExecutor==

Features

● The number of core threads is 1

● The maximum number of threads is also 1

● The blocking queue is LinkedBlockingQueue

● keepAliveTime is 0


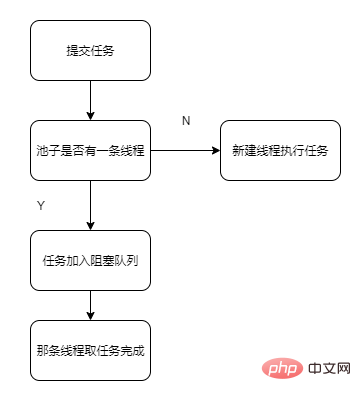

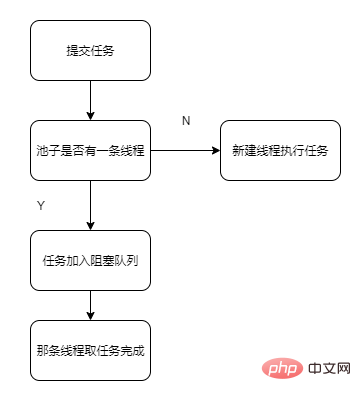

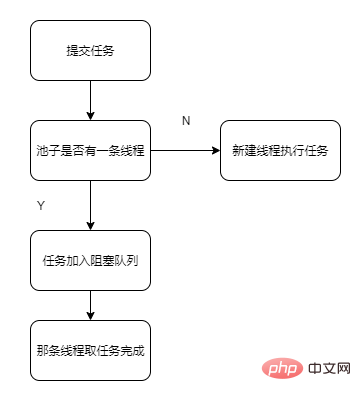

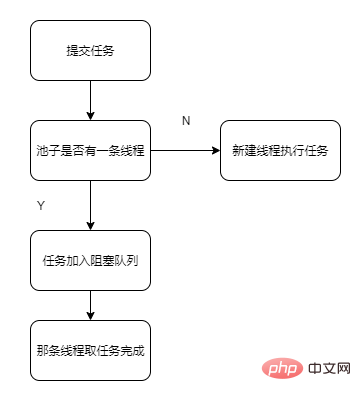

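Working mechanism

● Submit the task

● Check whether the pool already has its one thread; if not, create a thread to execute the task

● If it does, add the task to the blocking queue

● The single thread takes tasks from the queue, executes one, then takes the next: one worker (one thread) toiling day and night.

Usage scenarios

Suited to scenarios where tasks must run serially, one after another.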
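The serial behaviour can be sketched by recording the order in which tasks run; `SerialDemo` and `runInOrder` are illustrative names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class SerialDemo {
    /** Submits tasks numbered 0..taskCount-1 and returns the order in which they actually ran. */
    static List<Integer> runInOrder(int taskCount) throws InterruptedException {
        List<Integer> order = Collections.synchronizedList(new ArrayList<>());
        ExecutorService single = Executors.newSingleThreadExecutor();
        for (int i = 0; i < taskCount; i++) {
            final int id = i;
            single.execute(() -> order.add(id)); // queued behind any task still running
        }
        single.shutdown();
        single.awaitTermination(5, TimeUnit.SECONDS);
        return order;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runInOrder(5));       // prints [0, 1, 2, 3, 4]
    }
}
```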

==newScheduledThreadPool==

Features


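● The maximum number of threads is Integer.MAX_VALUE

● The blocking queue is DelayedWorkQueue

● keepAliveTime is 0

● scheduleAtFixedRate(): executes periodically at a fixed rate

● scheduleWithFixedDelay(): executes repeatedly, waiting a fixed delay after each run finishes

Working mechanism

● Add a task

● Threads in the pool take tasks from the DelayQueue

● A thread takes from the DelayQueue a task that is due, that is, one whose time is less than or equal to the current time

● After executing the task, the thread sets the task's time to the next time it should run

● The task is put back into the DelayQueue

scheduleWithFixedDelay

● Regardless of how long the task takes, the second run starts only after the first run has completed and the specified delay has elapsed

scheduleAtFixedRate

● When the task's execution time is shorter than the interval, runs are started every interval measured from the initial start time, unaffected by how long each run takes

● When the task's execution time is longer than the interval, this method does not start a new run in parallel; it waits for the current run to finish and then starts the next one immediately. In this case the configured interval is no longer kept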
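The difference between the two scheduling methods shows up in the gaps between consecutive start times. The sketch below measures them for a task that sleeps to simulate work; `ScheduleDemo` and `startGaps` are illustrative names, and the timings in the comments are approximate because they depend on the machine.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class ScheduleDemo {
    /** Returns the gaps in milliseconds between the start times of the first `runs` executions. */
    static List<Long> startGaps(boolean fixedRate, long workMillis, long periodMillis, int runs)
            throws InterruptedException {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        List<Long> starts = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch done = new CountDownLatch(runs);
        Runnable task = () -> {
            if (done.getCount() == 0) {
                return;                          // enough samples collected
            }
            starts.add(System.nanoTime());
            try {
                Thread.sleep(workMillis);        // simulated work
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            done.countDown();
        };
        if (fixedRate) {
            scheduler.scheduleAtFixedRate(task, 0, periodMillis, TimeUnit.MILLISECONDS);
        } else {
            scheduler.scheduleWithFixedDelay(task, 0, periodMillis, TimeUnit.MILLISECONDS);
        }
        done.await();
        scheduler.shutdownNow();
        List<Long> gaps = new ArrayList<>();
        for (int i = 1; i < starts.size(); i++) {
            gaps.add((starts.get(i) - starts.get(i - 1)) / 1_000_000);
        }
        return gaps;
    }

    public static void main(String[] args) throws InterruptedException {
        // Work 50 ms, interval 100 ms: fixed rate starts roughly every 100 ms,
        // fixed delay roughly every 150 ms (work time plus delay).
        System.out.println("fixed rate:  " + startGaps(true, 50, 100, 4));
        System.out.println("fixed delay: " + startGaps(false, 50, 100, 4));
    }
}
```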
